
Today I continued cleaning up leftover issues in our Kafka cluster.

Rebalancing replicas after scaling out:

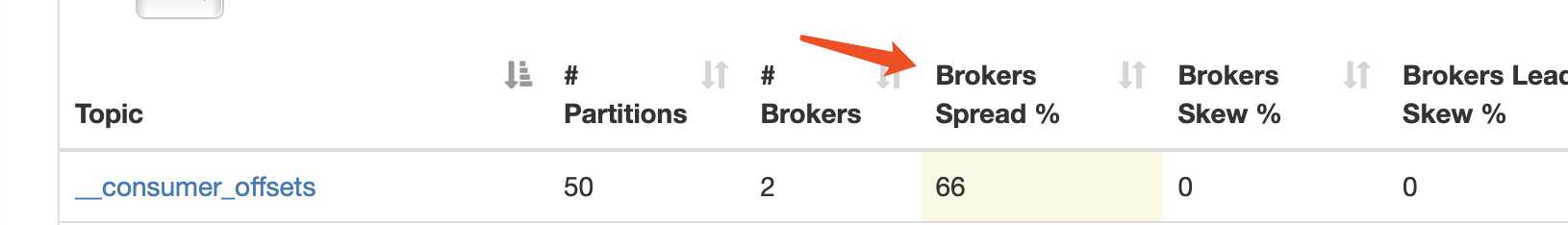

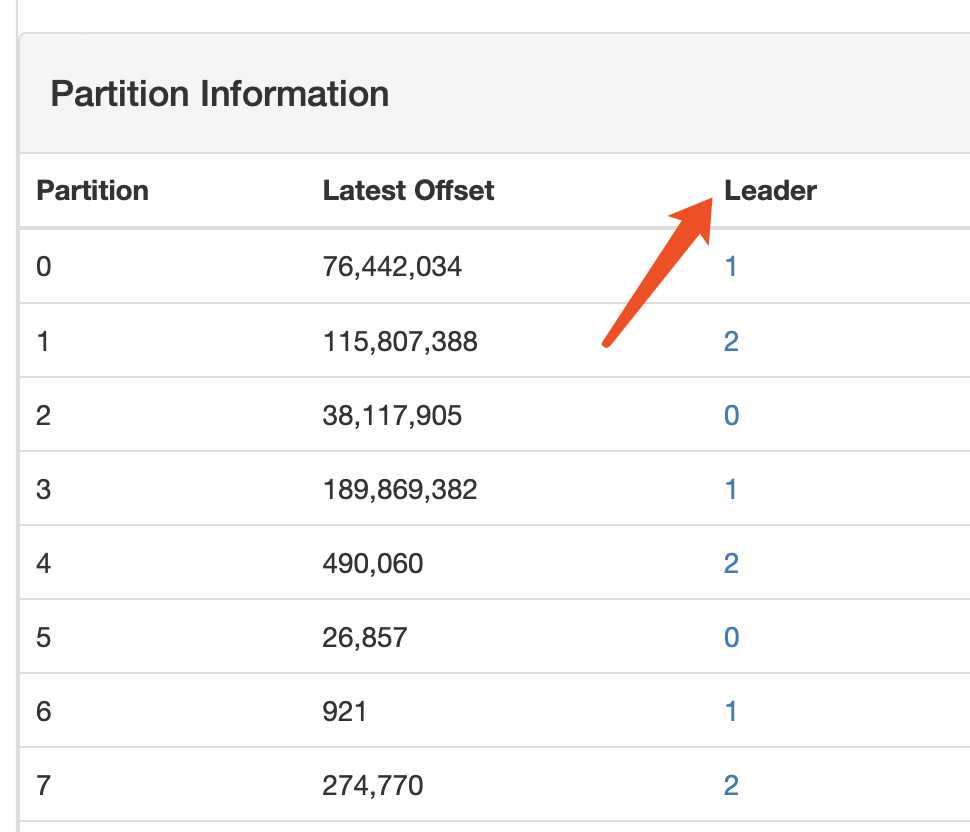

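While checking Kafka Manager today, I found that the leader replicas of the __consumer_offsets topic (the topic that stores consumer offsets) were placed on only two of our three nodes, instead of being spread evenly across all three brokers. Specifically:

If we open this topic,

we can see that although we have three nodes, only two of them hold its partitions, which is a very uneven distribution.

So we need to reassign this topic's replicas evenly across all three nodes.

Following the method in the official documentation, we can use the following command to generate a reassignment plan: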

./kafka-reassign-partitions.sh --zookeeper 10.171.97.1:2181 --topics-to-move-json-file ~/consumer_offsets_adjust.json --broker-list "0,1,2" --generate

The official documentation gives an example of the format expected for consumer_offsets_adjust.json:

> cat topics-to-move.json {"topics": [{"topic": "foo1"}, {"topic": "foo2"}], "version":1 }

Here, topics lists the topics we want to reassign.
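For this case the file only needs to name __consumer_offsets. The following is a minimal Python sketch that produces that JSON; the function name `topics_to_move_json` is made up for illustration, and the output would still have to be saved to ~/consumer_offsets_adjust.json by hand or by redirection.

```python
import json


def topics_to_move_json(topics):
    """Build the JSON text expected by --topics-to-move-json-file.

    `topics` is a list of topic names. The layout mirrors the
    example from the official documentation quoted above.
    """
    payload = {"topics": [{"topic": name} for name in topics], "version": 1}
    return json.dumps(payload)


# Hypothetical usage: print the file content for the topic from this post.
print(topics_to_move_json(["__consumer_offsets"]))
```

Redirecting the printed line into ~/consumer_offsets_adjust.json gives a file in the shape the --generate step reads.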

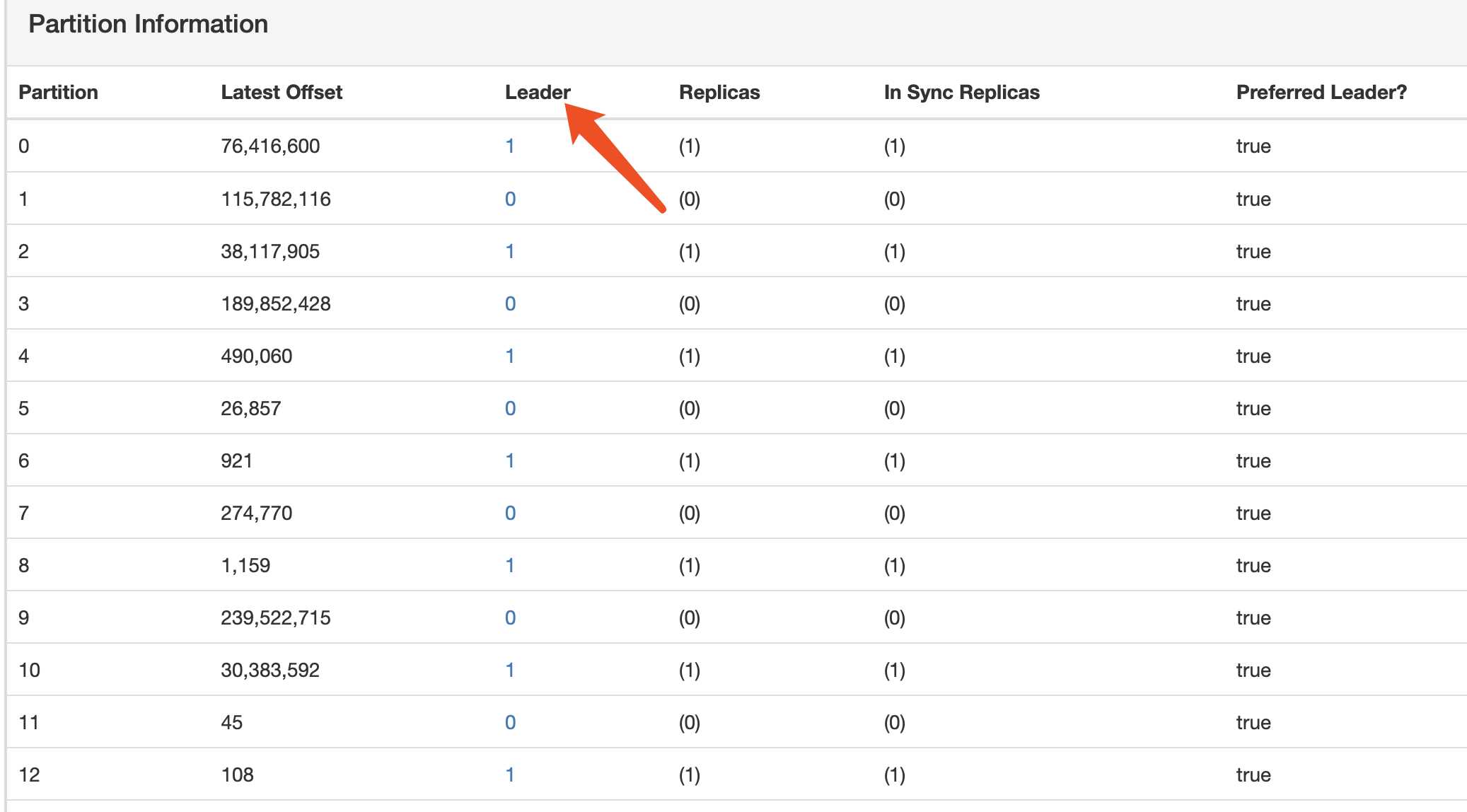

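Running this command produces the reassignment plan. Its parameters are all common ones, so I won't go through them here. After it runs, we get two things: the current partition replica assignment, and the proposed assignment after the reassignment.

Here is a formatted excerpt of the proposed partition reassignment configuration: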

{ "version": 1, "partitions": [{ "topic": "__consumer_offsets", "partition": 19, "replicas": [2], "log_dirs": ["any"] }, { "topic": "__consumer_offsets", "partition": 10, "replicas": [2], "log_dirs": ["any"] }, { "topic": "__consumer_offsets", "partition": 14, "replicas": [0], "log_dirs": ["any"] }

// several partitions omitted here

] }
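To judge how evenly a plan like this spreads the partitions, one option is to count the replicas per broker. The sketch below does that for the three entries shown in the excerpt only (the omitted partitions are not reconstructed); `replicas_per_broker` is a hypothetical helper, not part of Kafka.

```python
import json
from collections import Counter


def replicas_per_broker(plan):
    """Count how many partition replicas each broker holds in a plan."""
    counts = Counter()
    for entry in plan["partitions"]:
        counts.update(entry["replicas"])
    return dict(counts)


# Only the three entries visible in the excerpt above.
excerpt = json.loads("""
{"version": 1, "partitions": [
  {"topic": "__consumer_offsets", "partition": 19, "replicas": [2], "log_dirs": ["any"]},
  {"topic": "__consumer_offsets", "partition": 10, "replicas": [2], "log_dirs": ["any"]},
  {"topic": "__consumer_offsets", "partition": 14, "replicas": [0], "log_dirs": ["any"]}
]}
""")

print(replicas_per_broker(excerpt))  # {2: 2, 0: 1}
```

Run against the complete proposed plan, roughly equal counts for brokers 0, 1 and 2 would indicate an even spread.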

We can see that the replicas are now spread across all three brokers: 0, 1 and 2. Next we apply the plan. Copy it into ~/target.json and run:

./kafka-reassign-partitions.sh --zookeeper 10.171.97.1:2181 --reassignment-json-file ~/target.json --execute

This starts the reassignment. Kafka also prints the following message:

Current partition replica assignment

{"version":1,"partitions":[{"topic":"__consumer_offsets","partition":19,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":30,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":47,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":29,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":41,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":39,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":10,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":17,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":14,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":40,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":18,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":26,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":24,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":33,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":20,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":21,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":3,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":5,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":22,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":12,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":8,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":23,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":15,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":48,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":11,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":13,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":49,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":6,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":28,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":4,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":37,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":31,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":44,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":42,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":34,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":46,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":25,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":45,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":27,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":32,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":43,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":36,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":35,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":7,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":9,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":38,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":1,"replicas":[0],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":16,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":2,"replicas":[1],"log_dirs":["any"]}]}

Save this to use as the --reassignment-json-file option during rollback

Successfully started reassignment of partitions.

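Kafka prints the current assignment so that we have a rollback plan, and at this point the reassignment has in fact already started. The reassignment request is handed to the controller, which is responsible for carrying out these changes.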
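Since the rollback plan is only printed to the terminal, it is worth capturing it. Below is a rough Python sketch that pulls the JSON object out of the tool's output, assuming the output has the shape shown above (the marker line followed by one JSON object). The helper name and the shortened sample text are illustrative only.

```python
import json

MARKER = "Current partition replica assignment"


def extract_current_assignment(tool_output):
    """Return the JSON object that follows the marker line as a dict.

    Assumes the output format shown in this post; raises ValueError
    if the marker or the opening brace is missing.
    """
    start = tool_output.index(MARKER) + len(MARKER)
    brace = tool_output.index("{", start)
    # raw_decode parses one JSON value and ignores the trailing text.
    obj, _end = json.JSONDecoder().raw_decode(tool_output, brace)
    return obj


# Shortened sample in the same shape as the real output above.
sample = (
    'Current partition replica assignment '
    '{"version":1,"partitions":[{"topic":"__consumer_offsets","partition":19,'
    '"replicas":[0],"log_dirs":["any"]}]} '
    'Save this to use as the --reassignment-json-file option during rollback '
    'Successfully started reassignment of partitions.'
)

rollback = extract_current_assignment(sample)
print(json.dumps(rollback))
```

Writing the printed JSON to a file (for example a hypothetical ~/rollback.json) gives something that can be passed to --reassignment-json-file if the change has to be undone.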

We can check the progress of the reassignment with the command

./kafka-reassign-partitions.sh --zookeeper 10.171.97.1:2181 --reassignment-json-file ~/target.json --verify

which prints the status of each partition:

Reassignment of partition __consumer_offsets-38 completed successfully
Reassignment of partition __consumer_offsets-13 completed successfully
Reassignment of partition __consumer_offsets-8 completed successfully
Reassignment of partition __consumer_offsets-5 completed successfully
Reassignment of partition __consumer_offsets-39 completed successfully
Reassignment of partition __consumer_offsets-36 completed successfully
Reassignment of partition __consumer_offsets-40 completed successfully
Reassignment of partition __consumer_offsets-45 completed successfully
Reassignment of partition __consumer_offsets-15 completed successfully
Reassignment of partition __consumer_offsets-33 completed successfully
Reassignment of partition __consumer_offsets-37 completed successfully
Reassignment of partition __consumer_offsets-21 completed successfully
Reassignment of partition __consumer_offsets-6 completed successfully
Reassignment of partition __consumer_offsets-11 completed successfully
Reassignment of partition __consumer_offsets-20 completed successfully
Reassignment of partition __consumer_offsets-47 completed successfully
Reassignment of partition __consumer_offsets-2 completed successfully
Reassignment of partition __consumer_offsets-27 completed successfully
Reassignment of partition __consumer_offsets-34 completed successfully
Reassignment of partition __consumer_offsets-9 completed successfully
Reassignment of partition __consumer_offsets-22 completed successfully
Reassignment of partition __consumer_offsets-42 completed successfully
Reassignment of partition __consumer_offsets-14 completed successfully
Reassignment of partition __consumer_offsets-25 completed successfully
Reassignment of partition __consumer_offsets-10 completed successfully
Reassignment of partition __consumer_offsets-48 completed successfully
Reassignment of partition __consumer_offsets-31 completed successfully
Reassignment of partition __consumer_offsets-18 completed successfully
Reassignment of partition __consumer_offsets-19 completed successfully
Reassignment of partition __consumer_offsets-12 completed successfully
Reassignment of partition __consumer_offsets-46 completed successfully
Reassignment of partition __consumer_offsets-43 completed successfully
Reassignment of partition __consumer_offsets-1 completed successfully
Reassignment of partition __consumer_offsets-26 completed successfully
Reassignment of partition __consumer_offsets-30 completed successfully
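With 50 partitions it is easy to miss one that has not finished yet. As a rough sketch, the verify output can be parsed to list the partitions that have not reported completion. It only recognises the "completed successfully" wording shown above; the helper names and the two-line sample are assumptions for illustration.

```python
import re

# Matches only the success message format shown in this post.
COMPLETED = re.compile(r"Reassignment of partition (\S+) completed successfully")


def completed_partitions(verify_output):
    """Return the set of 'topic-partition' names reported as completed."""
    return set(COMPLETED.findall(verify_output))


def pending_partitions(verify_output, topic, partition_count):
    """Return the partitions of `topic` that have no completion line yet."""
    expected = {f"{topic}-{i}" for i in range(partition_count)}
    missing = expected - completed_partitions(verify_output)
    # Sort numerically by the partition number after the last dash.
    return sorted(missing, key=lambda name: int(name.rsplit("-", 1)[1]))


sample = (
    "Reassignment of partition __consumer_offsets-38 completed successfully\n"
    "Reassignment of partition __consumer_offsets-13 completed successfully\n"
)
pending = pending_partitions(sample, "__consumer_offsets", 50)
print(len(pending), pending[:3])
```

An empty list for the full output would mean that every one of the 50 partitions has reported completion.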

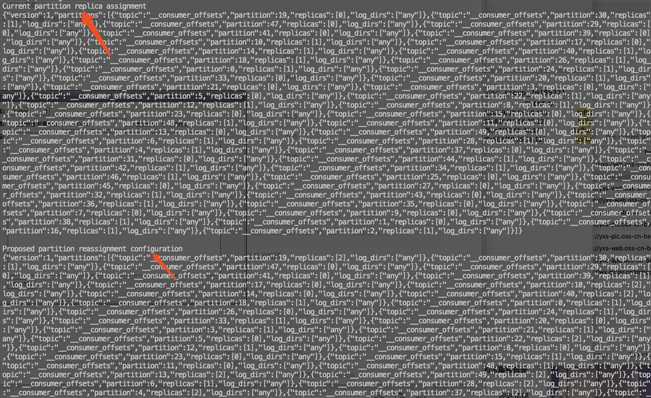

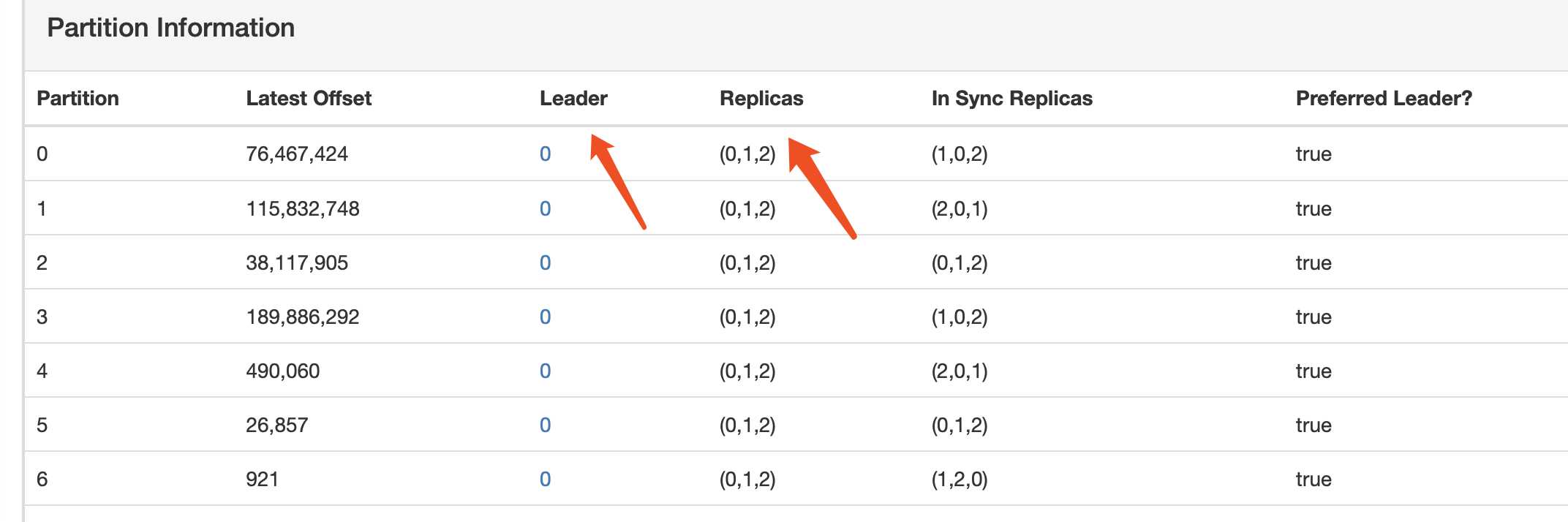

At this point we can check whether the expansion succeeded, that is, whether the leader replicas have been evenly distributed across the nodes.

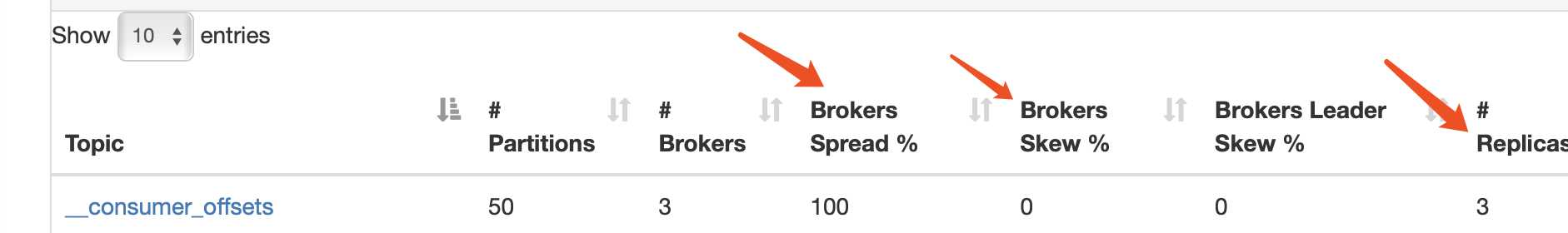

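First, the Kafka brokers spread:

Then the leaders:

As we can see, it's done. IT WORKED! One more point: if we want a broker to stop storing replicas of this topic, we can use the same reassignment mechanism and simply leave that broker out of the broker list.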

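Adding replicas to existing partitions:

From the screenshots above you may have noticed another problem: for historical reasons, the partitions of our most important topic, the one that stores committed offsets, have only one replica each, that is, only the leader. Normally we would use three replicas so that failover is easy when a broker goes down, so we need to add new replicas. Create the plan with vi ~/inc.json: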

{"version":1,

"partitions":[{"topic":"__consumer_offsets","partition":0,"replicas":[0,1,2]}]}

./kafka-reassign-partitions.sh --zookeeper 10.171.97.1:2181 --reassignment-json-file ~/inc.json --execute

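Here I only show the adjustment for a single partition; in practice all 50 partitions need to be adjusted. I won't post the script. After the adjustment was done, the topic looked like this: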

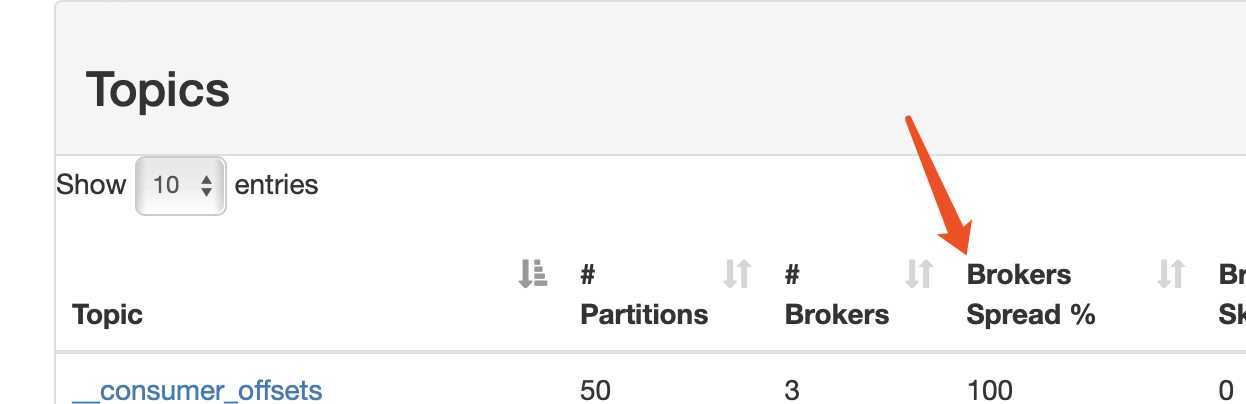

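Clearly something is wrong here. The cause is that I mindlessly wrote 0, 1, 2 as the replica list for every partition. Order matters: the first replica in the list is set as the leader, the so-called Preferred Leader, so our leaders ended up heavily skewed toward one broker.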
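One way to avoid this skew when writing the plan by hand is to rotate the replica list per partition so that each broker comes first about equally often. The sketch below is not the script used in this post (which was not published), and it is a different approach from the fix described next; the function name and the rotation scheme are assumptions for illustration.

```python
import json
from collections import Counter


def rotated_assignment(topic, partition_count, brokers):
    """Build a reassignment plan whose first replica rotates over brokers.

    Partition p gets the broker list rotated left by p % len(brokers),
    so the preferred leader (the first replica) is spread across brokers.
    """
    partitions = []
    for p in range(partition_count):
        shift = p % len(brokers)
        replicas = brokers[shift:] + brokers[:shift]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


plan = rotated_assignment("__consumer_offsets", 50, [0, 1, 2])

# Preferred leaders per broker: the first entry of each replica list.
leaders = Counter(entry["replicas"][0] for entry in plan["partitions"])
print(dict(leaders))  # {0: 17, 1: 17, 2: 16}
print(json.dumps(plan["partitions"][:3]))
```

Dumping `plan` with `json.dumps` gives content in the same format as ~/inc.json above, covering all 50 partitions with three replicas each.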

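To correct this, we just repeat the rebalancing steps from the cluster expansion above. If your cluster does not have

auto.leader.rebalance.enable = true

enabled, then once the reassignment has finished you may also need to run

./kafka-preferred-replica-election.sh --zookeeper 10.171.97.1:2181

to re-elect the leaders.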

Now let's look at the state of the cluster again:

Not Bad!

Reference:

http://kafka.apache.org/10/documentation.html#basic_ops_cluster_expansion

http://kafka.apache.org/10/documentation.html#basic_ops_increase_replication_factor

https://zhuanlan.zhihu.com/p/38721205 Data migration after Kafka cluster expansion

Rebalancing replicas after scaling out a Kafka cluster, and adding replicas to existing partitions in practice


Original post: https://www.cnblogs.com/piperck/p/12172752.html