Tags: csharp private tor source end ref text sam add

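ONNX is an open file format designed for machine learning and used to store trained models. It lets different AI frameworks (such as PyTorch and MXNet) store model data in the same format and exchange it. The ONNX specification and code are developed mainly by Microsoft, Amazon, Facebook, IBM and other companies, and are hosted as open source on GitHub. Deep learning frameworks that currently support loading ONNX models and running inference officially include Caffe2, PyTorch, MXNet, ML.NET, TensorRT and Microsoft CNTK; TensorFlow also supports ONNX unofficially. (Wikipedia)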

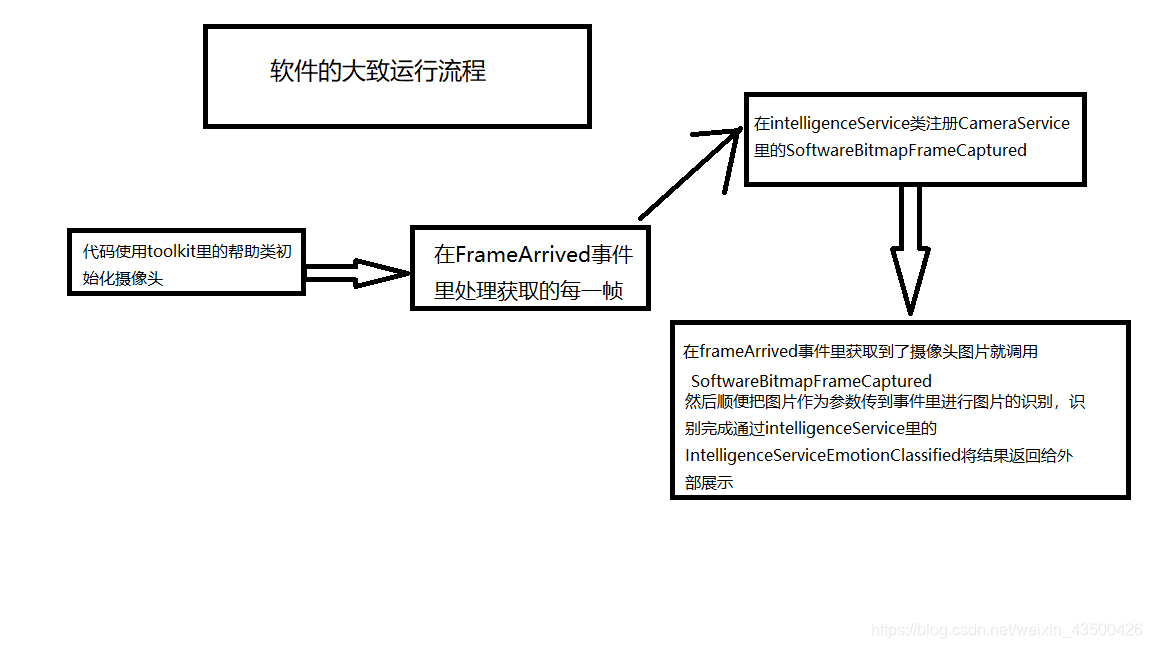

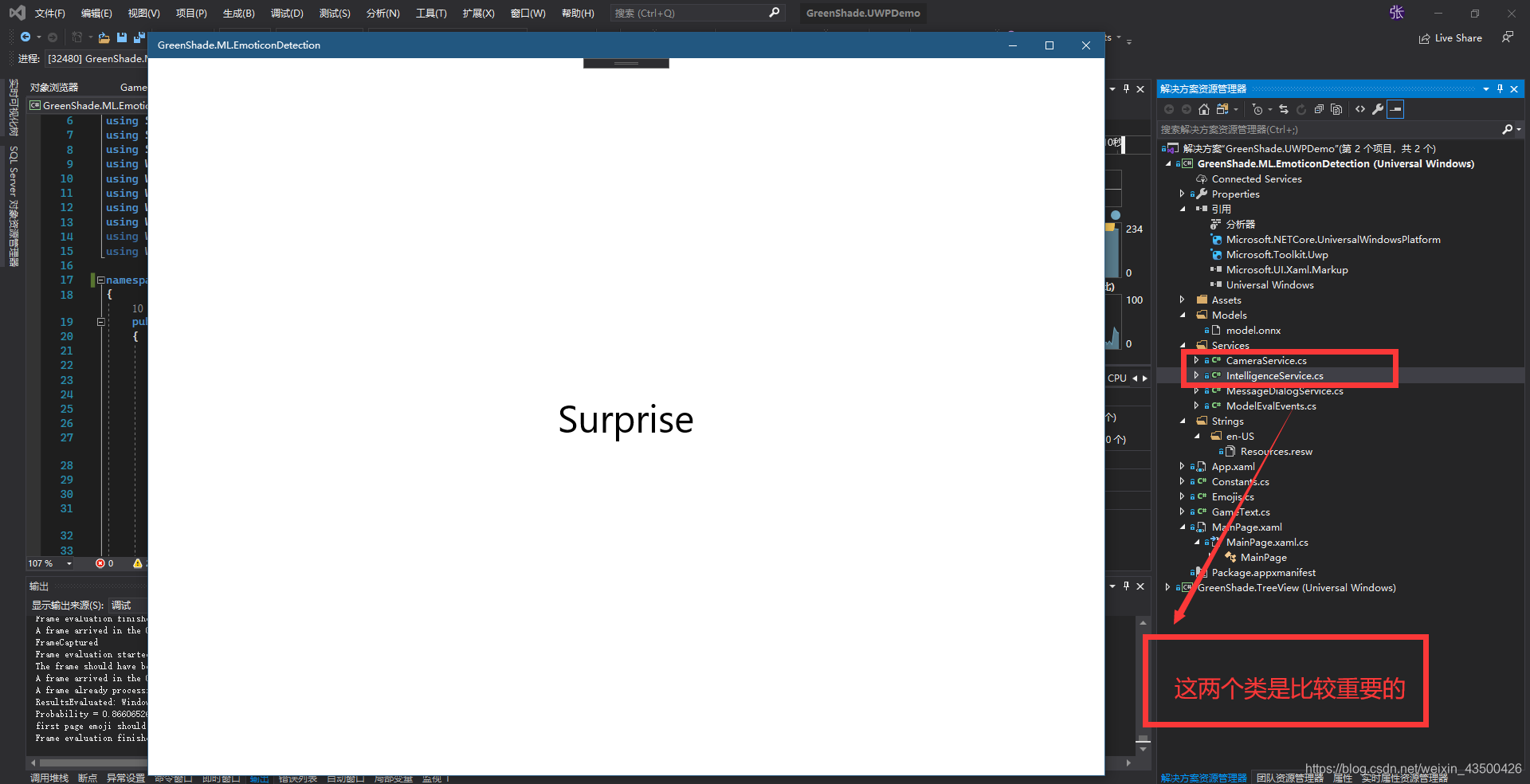

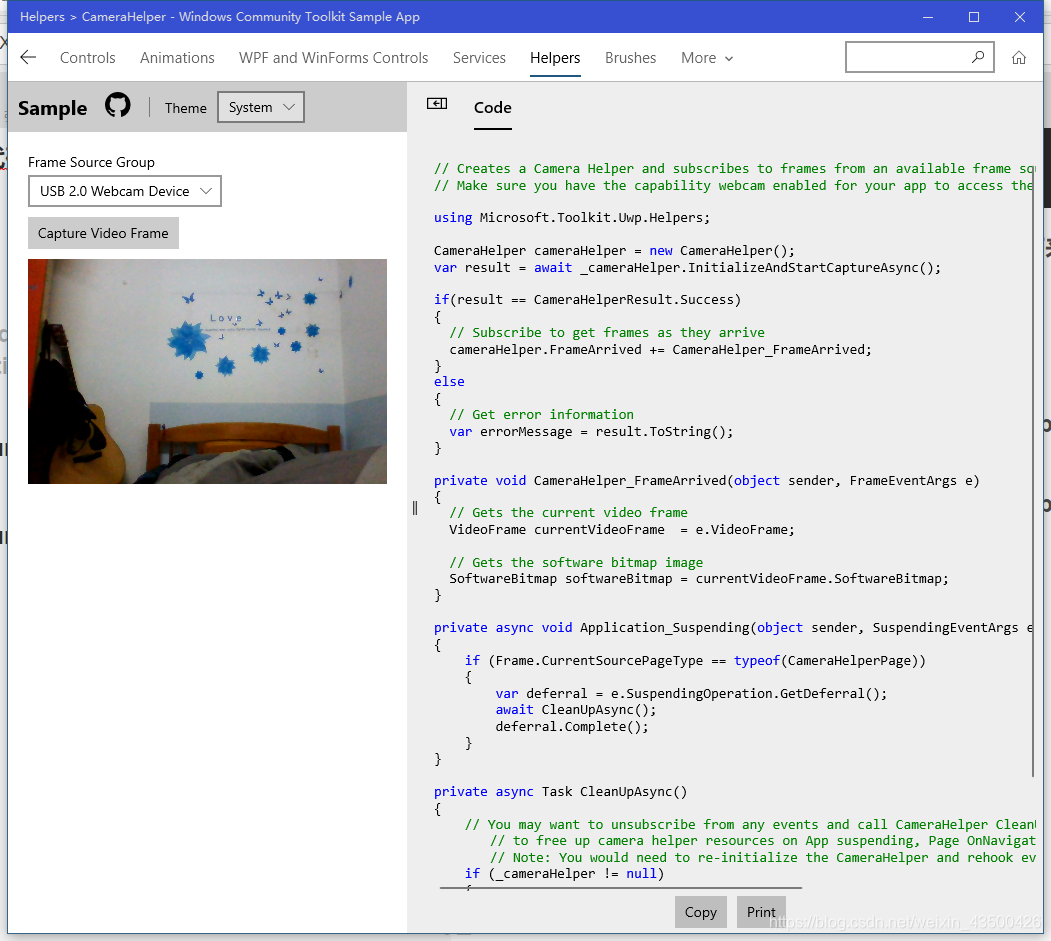

```csharp
// Namespaces used by the members in this post
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using Windows.AI.MachineLearning;
using Windows.Graphics.Imaging;
using Windows.Media;

private async void Current_SoftwareBitmapFrameCaptured(object sender, SoftwareBitmapEventArgs e)
{
    Debug.WriteLine("FrameCaptured");
    Debug.WriteLine($"Frame evaluation started {DateTime.Now}");

    if (e.SoftwareBitmap != null)
    {
        // FaceDetector supports only a few pixel formats (see FaceDetector.GetSupportedBitmapPixelFormats),
        // so run detection on an Nv12 copy and release the copy afterwards
        var uncroppedBitmap = SoftwareBitmap.Convert(e.SoftwareBitmap, BitmapPixelFormat.Nv12);
        var faces = await _faceDetector.DetectFacesAsync(uncroppedBitmap);
        uncroppedBitmap.Dispose();

        if (faces.Count > 0)
        {
            // Crop the image to focus on the first detected face
            var faceBox = faces[0].FaceBox;
            VideoFrame inputFrame = VideoFrame.CreateWithSoftwareBitmap(e.SoftwareBitmap);

            // Largest even width/height strictly smaller than the face box (odd n -> n - 1, even n -> n - 2);
            // even sizes are likely needed for YUV formats such as Nv12
            VideoFrame tmp = new VideoFrame(
                e.SoftwareBitmap.BitmapPixelFormat,
                (int)(faceBox.Width + faceBox.Width % 2) - 2,
                (int)(faceBox.Height + faceBox.Height % 2) - 2);
            await inputFrame.CopyToAsync(tmp, faceBox, null);

            // Center-crop the face image to fit the model input (Shape is NCHW: [3] = width, [2] = height)
            VideoFrame croppedInputImage = new VideoFrame(
                BitmapPixelFormat.Gray8,
                (int)_inputImageDescriptor.Shape[3],
                (int)_inputImageDescriptor.Shape[2]);
            var srcBounds = GetCropBounds(
                tmp.SoftwareBitmap.PixelWidth,
                tmp.SoftwareBitmap.PixelHeight,
                croppedInputImage.SoftwareBitmap.PixelWidth,
                croppedInputImage.SoftwareBitmap.PixelHeight);
            await tmp.CopyToAsync(croppedInputImage, srcBounds, null);

            ImageFeatureValue imageTensor = ImageFeatureValue.CreateFromVideoFrame(croppedInputImage);

            // Bind inputs + outputs
            _binding = new LearningModelBinding(_session);
            TensorFloat outputTensor = TensorFloat.Create(_outputTensorDescriptor.Shape);
            _binding.Bind(_inputImageDescriptor.Name, imageTensor);
            _binding.Bind(_outputTensorDescriptor.Name, outputTensor);

            // Evaluate; Guid.NewGuid() gives each run a unique correlation id (new Guid() is always all zeros)
            var results = await _session.EvaluateAsync(_binding, Guid.NewGuid().ToString());
            Debug.WriteLine("ResultsEvaluated: " + results.ToString());

            // Copy the raw scores out of the tensor and turn them into probabilities
            var resultsList = new List<float>(outputTensor.GetAsVectorView());
            var softMaxexOutputs = SoftMax(resultsList);

            // Comb through the evaluation results, recording the dominant emotion probability & its index
            double maxProb = 0;
            int maxIndex = 0;
            for (int i = 0; i < Constants.POTENTIAL_EMOJI_NAME_LIST.Count(); i++)
            {
                if (softMaxexOutputs[i] > maxProb)
                {
                    maxIndex = i;
                    maxProb = softMaxexOutputs[i];
                }
            }

            Debug.WriteLine($"Probability = {maxProb}, Threshold set to = {Constants.CLASSIFICATION_CERTAINTY_THRESHOLD}, Emotion = {Constants.POTENTIAL_EMOJI_NAME_LIST[maxIndex]}");

            // For evaluations run on the MainPage, update the emoji carousel
            if (maxProb >= Constants.CLASSIFICATION_CERTAINTY_THRESHOLD)
            {
                Debug.WriteLine("first page emoji should start to update");
                IntelligenceServiceEmotionClassified?.Invoke(this, new ClassifiedEmojiEventArgs(CurrentEmojis._emojis.Emojis[maxIndex]));
            }
        }

        // Dispose of the captured frame whether or not a face was found
        e.SoftwareBitmap.Dispose();
        e.SoftwareBitmap = null;
    }

    IntelligenceServiceProcessingCompleted?.Invoke(this, null);
    Debug.WriteLine($"Frame evaluation finished {DateTime.Now}");
}
```
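The "comb through the evaluation results" loop in the handler is a top-1 (argmax) search followed by a confidence check against `Constants.CLASSIFICATION_CERTAINTY_THRESHOLD`. As a sketch of how that step could be pulled out into a small, testable helper: the class `EmotionPostProcessing`, the method `PickTopClass`, and the convention of returning -1 for "no confident class" are hypothetical names and choices for illustration, not part of the original sample.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not from the original sample): argmax over class
// probabilities followed by a confidence threshold.
public static class EmotionPostProcessing
{
    // Returns the index of the most probable class, or -1 when the list is empty,
    // no probability is positive, or the best probability is below the threshold.
    // The best probability found is reported through maxProb in every case.
    public static int PickTopClass(IReadOnlyList<float> probabilities, double threshold, out double maxProb)
    {
        if (probabilities == null) throw new ArgumentNullException(nameof(probabilities));

        int maxIndex = -1;
        maxProb = 0;
        for (int i = 0; i < probabilities.Count; i++)
        {
            // Strict '>' keeps the first index on ties, like the original loop.
            if (probabilities[i] > maxProb)
            {
                maxProb = probabilities[i];
                maxIndex = i;
            }
        }

        return maxProb >= threshold ? maxIndex : -1;
    }
}
```

In the handler this would amount to something like `PickTopClass(softMaxexOutputs, Constants.CLASSIFICATION_CERTAINTY_THRESHOLD, out var maxProb)` and raising `IntelligenceServiceEmotionClassified` only when the result is not -1. One difference to keep in mind: the original loop only visits the first `Constants.POTENTIAL_EMOJI_NAME_LIST.Count()` outputs, whereas this sketch scans every probability it is given.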

```csharp
// WinML team function: softmax that turns the raw model scores into probabilities summing to 1
private List<float> SoftMax(List<float> inputs)
{
    List<float> inputsExp = new List<float>();
    float inputsExpSum = 0;
    for (int i = 0; i < inputs.Count; i++)
    {
        var input = inputs[i];
        inputsExp.Add((float)Math.Exp(input));
        inputsExpSum += inputsExp[i];
    }

    // Guard against division by zero
    inputsExpSum = inputsExpSum == 0 ? 1 : inputsExpSum;
    for (int i = 0; i < inputs.Count; i++)
    {
        inputsExp[i] /= inputsExpSum;
    }

    return inputsExp;
}
```
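`SoftMax` computes `exp(x_i) / sum_j exp(x_j)`. Because the exponentials are stored as `float`, any score above roughly 88 overflows to Infinity and the division then yields NaN. A common refinement is to subtract the largest score before exponentiating; the common factor `exp(-max)` cancels between numerator and denominator, so the probabilities are mathematically unchanged. The sketch below shows that variant under the assumption that the same list-in, list-out contract is wanted; `SoftMaxMath` and `StableSoftMax` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SoftMaxMath
{
    // Hypothetical alternative to SoftMax: shifting every score by the maximum
    // keeps each Math.Exp argument <= 0, so no term can overflow.
    public static List<float> StableSoftMax(IReadOnlyList<float> inputs)
    {
        if (inputs == null) throw new ArgumentNullException(nameof(inputs));
        var result = new List<float>(inputs.Count);
        if (inputs.Count == 0) return result;

        float max = inputs.Max();
        var exps = new double[inputs.Count];
        double sum = 0;
        for (int i = 0; i < inputs.Count; i++)
        {
            exps[i] = Math.Exp(inputs[i] - max); // always in [0, 1]
            sum += exps[i];
        }

        // sum >= 1 because the largest score contributes exp(0) = 1,
        // so no divide-by-zero guard is needed.
        foreach (double e in exps)
        {
            result.Add((float)(e / sum));
        }
        return result;
    }
}
```

Since a `List<float>` is also an `IReadOnlyList<float>` and the return type is the same, `SoftMax(resultsList)` in the handler could be swapped for `SoftMaxMath.StableSoftMax(resultsList)` without touching the rest of the code.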

```csharp
public static BitmapBounds GetCropBounds(int srcWidth, int srcHeight, int targetWidth, int targetHeight)
{
    var modelHeight = targetHeight;
    var modelWidth = targetWidth;
    BitmapBounds bounds = new BitmapBounds();

    // we need to recalculate the crop bounds in order to correctly center-crop the input image
    float flRequiredAspectRatio = (float)modelWidth / modelHeight;

    if (flRequiredAspectRatio * srcHeight > (float)srcWidth)
    {
        // clip on the y axis
        bounds.Height = (uint)Math.Min((srcWidth / flRequiredAspectRatio + 0.5f), srcHeight);
        bounds.Width = (uint)srcWidth;
        bounds.X = 0;
        bounds.Y = (uint)(srcHeight - bounds.Height) / 2;
    }
    else // clip on the x axis
    {
        bounds.Width = (uint)Math.Min((flRequiredAspectRatio * srcHeight + 0.5f), srcWidth);
        bounds.Height = (uint)srcHeight;
        bounds.X = (uint)(srcWidth - bounds.Width) / 2;
        bounds.Y = 0;
    }

    return bounds;
}
```
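`GetCropBounds` performs a center crop: it keeps the largest region of the source whose aspect ratio matches the model input, centered along the axis that is too long, and the handler then copies that region into the model-sized `croppedInputImage`. For example, with a 640x480 source and a 64x64 model input the required aspect ratio is 1, so the x axis is clipped: Width = min(480 + 0.5, 640), truncated to 480, and X = (640 - 480) / 2 = 80. Because `BitmapBounds` is a WinRT type, the sketch below restates the same arithmetic with a hypothetical stand-in struct `CropRect` so it can be checked on any .NET runtime; `CropMath.CenterCrop` is likewise a hypothetical name.

```csharp
using System;

// Hypothetical stand-in for Windows.Graphics.Imaging.BitmapBounds.
public struct CropRect
{
    public uint X, Y, Width, Height;
}

public static class CropMath
{
    // Same arithmetic as GetCropBounds: the largest centered region of the
    // source whose aspect ratio equals targetWidth / targetHeight.
    public static CropRect CenterCrop(int srcWidth, int srcHeight, int targetWidth, int targetHeight)
    {
        var bounds = new CropRect();
        float requiredAspectRatio = (float)targetWidth / targetHeight;

        if (requiredAspectRatio * srcHeight > srcWidth)
        {
            // Source is relatively taller than the target: clip on the y axis.
            bounds.Width = (uint)srcWidth;
            bounds.Height = (uint)Math.Min(srcWidth / requiredAspectRatio + 0.5f, srcHeight);
            bounds.X = 0;
            bounds.Y = (uint)(srcHeight - bounds.Height) / 2;
        }
        else
        {
            // Source is relatively wider than the target: clip on the x axis.
            bounds.Width = (uint)Math.Min(requiredAspectRatio * srcHeight + 0.5f, srcWidth);
            bounds.Height = (uint)srcHeight;
            bounds.X = (uint)(srcWidth - bounds.Width) / 2;
            bounds.Y = 0;
        }
        return bounds;
    }
}
```

The `+ 0.5f` before the `uint` cast rounds the cropped size to the nearest pixel instead of always truncating, while `Math.Min` keeps the rounded value from exceeding the source dimension.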


Original article: https://www.cnblogs.com/GreenShade/p/12275048.html