Tags: top linux nop ons load passwordless-SSH data software-repository signed

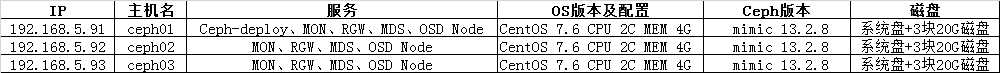

1.1 The version plan is shown in the table below

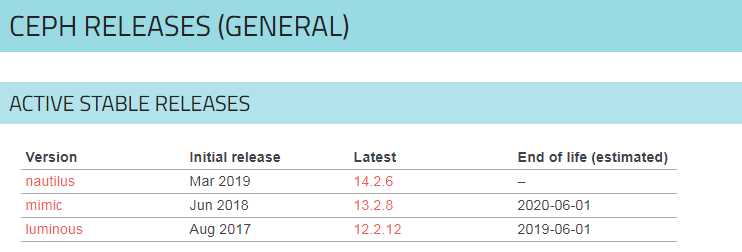

Support status of each release as of this writing: https://docs.ceph.com/docs/master/releases/general/

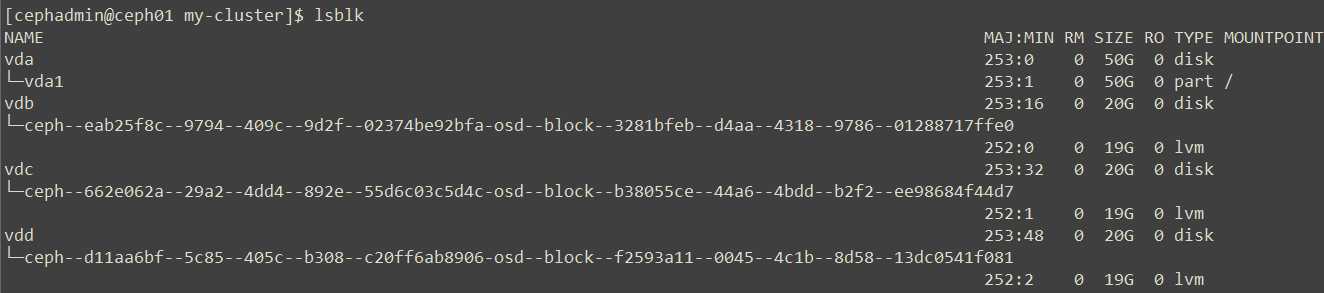

1.2 Check the disks

    # lsblk
    NAME   MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
    vda    253:0    0  50G  0 disk
    └─vda1 253:1    0  50G  0 part /
    vdb    253:16   0  20G  0 disk
    vdc    253:32   0  20G  0 disk
    vdd    253:48   0  20G  0 disk

2.1 Configure time synchronization

    # crontab -e
    */5 * * * * /usr/sbin/ntpdate time1.aliyun.com

Or use:

    yum install ntpdate ntp -y
    ntpdate cn.ntp.org.cn
    systemctl restart ntpd && systemctl enable ntpd
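
Ceph monitors report clock skew once nodes drift apart by more than `mon_clock_drift_allowed`, which defaults to 0.05 s. As a quick per-node check, the sketch below reads the output of `ntpdate -q` (query only, the clock is not changed) and compares the reported offset with that threshold. The function names `last_offset` and `offset_ok` are hypothetical, and the parser assumes ntpdate's usual `... offset -0.002341 ...` wording.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check the clock offset reported by `ntpdate -q`
# against Ceph's default mon_clock_drift_allowed (0.05 s).

MAX_DRIFT=${MAX_DRIFT:-0.05}

# Read ntpdate output on stdin and print the last "offset" value found.
last_offset() {
  awk '{
    for (i = 1; i < NF; i++)
      if ($i == "offset") { v = $(i + 1); gsub(",", "", v) }
  }
  END { if (v == "") exit 1; print v }'
}

# Succeed if |offset| <= MAX_DRIFT; fail otherwise or if no offset was found.
offset_ok() {
  local off
  off=$(last_offset) || return 1
  awk -v o="$off" -v m="$MAX_DRIFT" \
    'BEGIN { o += 0; m += 0; if (o < 0) o = -o; exit !(o <= m) }'
}
```

For example, on each node: `ntpdate -q -u cn.ntp.org.cn | offset_ok && echo "clock OK"` (the `-u` makes ntpdate use an unprivileged port, which avoids a conflict when ntpd is already running).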

2.2 Disable SELinux

    sed -i "/^SELINUX/s/enforcing/disabled/" /etc/selinux/config
    setenforce 0

2.3 Disable firewalld

    systemctl stop firewalld
    systemctl disable firewalld

Or keep firewalld running and add rules instead:

    firewall-cmd --zone=public --add-port=6789/tcp --permanent
    firewall-cmd --zone=public --add-port=6800-7100/tcp --permanent
    firewall-cmd --reload
    firewall-cmd --zone=public --list-all
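
If firewalld stays enabled, the dashboard port configured later in 4.4 (8080) has to be opened as well. A minimal sketch that applies all rules in one go, assuming the `public` zone; it uses 6800-7300, Ceph's default daemon port range (`ms_bind_port_min` to `ms_bind_port_max`), which is slightly wider than the 6800-7100 above. The names `open_ceph_ports` and `run` are hypothetical; `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: open the ports used in this guide when firewalld stays on.
# Assumptions: zone "public"; daemon range 6800-7300 (Ceph's default
# ms_bind_port_min..ms_bind_port_max); dashboard on 8080/tcp as set in 4.4.

# 6789: ceph-mon; 6800-7300: OSD/MGR daemons; 8080: mgr dashboard
CEPH_PORTS=(6789/tcp 6800-7300/tcp 8080/tcp)

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

open_ceph_ports() {
  local zone=${1:-public} port
  for port in "${CEPH_PORTS[@]}"; do
    run firewall-cmd --zone="$zone" --add-port="$port" --permanent
  done
  run firewall-cmd --reload
  run firewall-cmd --zone="$zone" --list-all
}
```

Run `DRY_RUN=1 open_ceph_ports` first to review the commands, then run it as root on every node.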

2.4 Add local hosts entries

    # vim /etc/hosts
    192.168.5.91 ceph01
    192.168.5.92 ceph02
    192.168.5.93 ceph03
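
Instead of editing the file by hand on each node, the entries can be appended idempotently, so that running the step twice does not create duplicates. A sketch with hypothetical function names; the target file is a parameter so it can be tried on a scratch copy before pointing it at /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the cluster's hostnames to a hosts file idempotently.

# add_host FILE IP NAME: append "IP NAME" unless NAME already appears as a
# hostname/alias on a non-comment line.
add_host() {
  local file=$1 ip=$2 name=$3
  if [ -f "$file" ] && awk -v n="$name" '
      /^[ \t]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

add_ceph_hosts() {
  local file=${1:-/etc/hosts}
  add_host "$file" 192.168.5.91 ceph01
  add_host "$file" 192.168.5.92 ceph02
  add_host "$file" 192.168.5.93 ceph03
}
```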

2.5 Configure sudo not to require a TTY (on CentOS 7.6 this step should not be needed)

    # The stock line reads "Defaults    requiretty"; comment it out if present
    sed -i 's/^Defaults\s\+requiretty/#&/' /etc/sudoers

2.6 Create ceph.repo to configure the package source for the Mimic (M) release

Aliyun mirrors for the other releases can be found here: http://mirrors.aliyun.com/ceph/

    # vim /etc/yum.repos.d/ceph.repo
    [ceph]
    name=ceph
    baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/x86_64/
    gpgcheck=0

    [ceph-noarch]
    name=cephnoarch
    baseurl=http://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/
    gpgcheck=0
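
Since the Aliyun mirror keeps each release under `rpm-<release>/el7/`, the same repo file can be generated for another release by changing one word. A sketch under the assumption that the other release directories follow the same layout as `rpm-mimic` (check http://mirrors.aliyun.com/ceph/ first); `write_ceph_repo` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a yum repo file for a given Ceph release from the
# Aliyun mirror. Assumes the rpm-<release>/el7 layout used by rpm-mimic.

write_ceph_repo() {
  local release=$1 out=$2
  local base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
  cat > "$out" <<EOF
[ceph]
name=ceph
baseurl=${base}/x86_64/
gpgcheck=0

[ceph-noarch]
name=cephnoarch
baseurl=${base}/noarch/
gpgcheck=0
EOF
}
```

For example: `write_ceph_repo mimic /tmp/ceph.repo && sudo cp /tmp/ceph.repo /etc/yum.repos.d/ceph.repo && sudo yum makecache`.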

2.7 Create the cephadmin deployment account

    useradd cephadmin
    echo 'cephadmin' | passwd --stdin cephadmin
    echo "cephadmin ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephadmin
    chmod 0440 /etc/sudoers.d/cephadmin

2.8 Configure passwordless SSH login (only on the deploy node, ceph01)

    [root@ceph01 ~]# su - cephadmin
    [cephadmin@ceph01 ~]$ ssh-keygen
    [cephadmin@ceph01 ~]$ ssh-copy-id cephadmin@ceph01
    [cephadmin@ceph01 ~]$ ssh-copy-id cephadmin@ceph02
    [cephadmin@ceph01 ~]$ ssh-copy-id cephadmin@ceph03
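
The Ceph preflight documentation also suggests listing the nodes in `~/.ssh/config` on the deploy node with `User cephadmin`, so that ceph-deploy logs in as cephadmin without needing `--username`. A sketch with hypothetical names: `write_ssh_config` appends one `Host` block per node (skipping nodes that already have one), and `check_ssh` uses `BatchMode=yes` so a missing key makes the check fail instead of prompting for a password.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the ceph-deploy admin node (ceph01).

# write_ssh_config FILE USER HOST...: append a Host block per host, skipping
# hosts that already have one; ssh rejects config files writable by others.
write_ssh_config() {
  local out=$1 user=$2 host
  shift 2
  touch "$out"
  for host in "$@"; do
    grep -qx "Host $host" "$out" && continue
    printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user" >> "$out"
  done
  chmod 600 "$out"
}

# check_ssh HOST...: fail fast if key-based login does not work.
check_ssh() {
  local host
  for host in "$@"; do
    if ! ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "passwordless ssh to $host failed" >&2
      return 1
    fi
  done
}
```

As cephadmin on ceph01: `write_ssh_config ~/.ssh/config cephadmin ceph01 ceph02 ceph03 && check_ssh ceph01 ceph02 ceph03`.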

3.1 Install ceph-deploy and python-pip on ceph01

    [cephadmin@ceph01 ~]$ sudo yum install -y ceph-deploy python-pip

3.2 Install the Ceph packages on all three nodes

    sudo yum install -y ceph ceph-radosgw
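
To confirm that every node actually got the Mimic packages from the repo above (and not an older release from another repo), the reported release can be compared across nodes from ceph01. A sketch assuming `ceph --version` prints `ceph version 13.2.8 (<sha1>) mimic (stable)` and that the passwordless SSH from 2.8 works; `ceph_release` and `check_release` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: verify that all nodes run the expected Ceph release.
NODES=(ceph01 ceph02 ceph03)

# Print the release codename (5th field) from `ceph --version` output on stdin.
ceph_release() {
  awk '/^ceph version/ { print $5 }'
}

# check_release [NAME]: fail if any node reports a different release.
check_release() {
  local want=${1:-mimic} node got
  for node in "${NODES[@]}"; do
    got=$(ssh "$node" ceph --version | ceph_release)
    if [ "$got" != "$want" ]; then
      echo "$node: expected $want, got ${got:-nothing}" >&2
      return 1
    fi
  done
}
```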

3.3 Create the cluster on ceph01

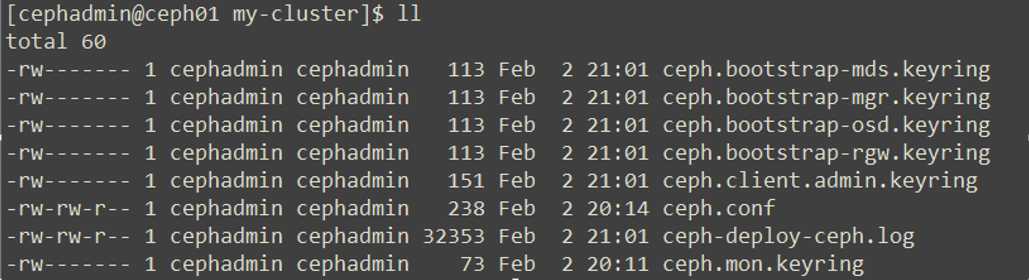

    [cephadmin@ceph01 ~]$ mkdir my-cluster
    [cephadmin@ceph01 ~]$ cd my-cluster/
    [cephadmin@ceph01 my-cluster]$ ceph-deploy new ceph01 ceph02 ceph03
    # Without a custom name the cluster is called "ceph"; when installing several clusters you can specify one:
    ceph-deploy --cluster {cluster-name} new node1 node2
    # When it finishes, the configuration files have been generated
    [cephadmin@ceph01 my-cluster]$ ls
    ceph.conf  ceph-deploy-ceph.log  ceph.mon.keyring

You can also separate the public (client-facing) network from the internal cluster network by adding two lines to ceph.conf.

    [cephadmin@ceph01 my-cluster]$ cat ceph.conf
    [global]
    fsid = 4d02981a-cd20-4cc9-8390-7013da54b161
    mon_initial_members = ceph01, ceph02, ceph03
    mon_host = 192.168.5.91,192.168.5.92,192.168.5.93
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    # With two NICs, configure a gateway on only one of them, then add the two lines below.
    # Do not add them if you are not separating the networks.
    public network = 192.168.5.0/24
    cluster network = 192.168.10.0/24
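
The two lines can also be set from a script, replacing an existing value rather than adding a duplicate. A sketch with a hypothetical `set_conf` function; it assumes `[global]` is the last section of the file, as in the ceph.conf that `ceph-deploy new` generates, so new keys are simply appended. Ceph accepts both `public network` and `public_network`, but this helper only matches the exact spelling it is given.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "public network" / "cluster network" in ceph.conf.
# Assumes [global] is the last section, as in a freshly generated ceph.conf.

# set_conf KEY VALUE FILE: replace an existing "KEY = ..." line or append one.
set_conf() {
  local key=$1 value=$2 conf=$3
  if grep -q "^${key}[[:space:]]*=" "$conf"; then
    sed -i "s|^${key}[[:space:]]*=.*|${key} = ${value}|" "$conf"
  else
    printf '%s = %s\n' "$key" "$value" >> "$conf"
  fi
}
```

Edit the file before 3.4. If the monitors already exist, push the changed file with `ceph-deploy --overwrite-conf config push ceph01 ceph02 ceph03` and restart the daemons.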

3.4 Initialize the cluster's monitors

    [cephadmin@ceph01 my-cluster]$ ceph-deploy mon create-initial

3.5 Copy the configuration file and admin keyring to every node

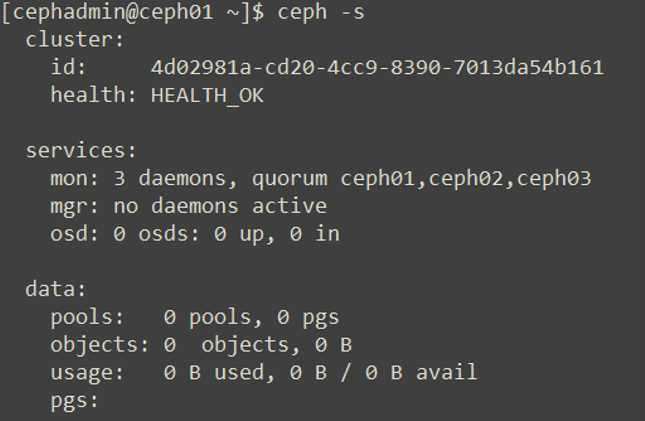

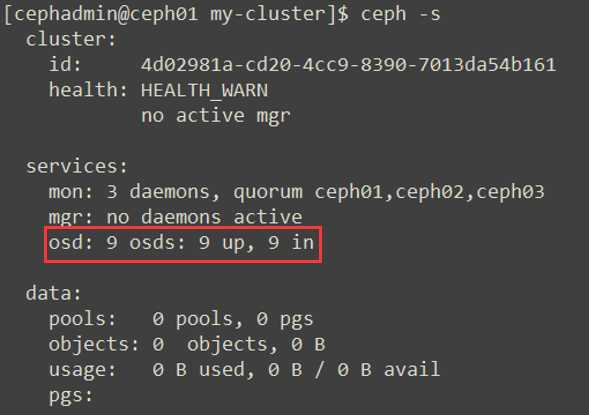

    [cephadmin@ceph01 my-cluster]$ ceph-deploy admin ceph01 ceph02 ceph03
    # Run on every node: give cephadmin ownership of /etc/ceph
    sudo chown -R cephadmin:cephadmin /etc/ceph
    [cephadmin@ceph01 my-cluster]$ ceph -s    # check the cluster status
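
Rather than logging in to each node, the ownership fix can be run from ceph01 over the passwordless SSH from 2.8; it relies on cephadmin's NOPASSWD sudo rule (2.7) and on sudo not requiring a TTY (2.5). A sketch with hypothetical names; `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fix /etc/ceph ownership on every node from ceph01.
NODES=(ceph01 ceph02 ceph03)

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

fix_etc_ceph_owner() {
  local node
  for node in "${NODES[@]}"; do
    run ssh "$node" sudo chown -R cephadmin:cephadmin /etc/ceph
  done
}
```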

3.6 Configure the OSDs, which store the data

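From the my-cluster directory on ceph01, run the following against all three nodes: wipe (zap) each disk, create an OSD on it, and add it to the cluster. If you don't want to dedicate a whole disk to data, note that a directory cannot be used either; use an LVM logical volume instead. To create an OSD on an LVM volume, the --data argument must be volume_group/lv_name, not the block-device path of the volume.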

    for dev in /dev/vdb /dev/vdc /dev/vdd
    do
        ceph-deploy disk zap ceph01 $dev
        ceph-deploy osd create ceph01 --data $dev
        ceph-deploy disk zap ceph02 $dev
        ceph-deploy osd create ceph02 --data $dev
        ceph-deploy disk zap ceph03 $dev
        ceph-deploy osd create ceph03 --data $dev
    done
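
The same loop can keep nodes and disks in two separate lists, so adding a node or a disk means editing one array, and a dry run shows exactly which disks will be wiped before anything happens. A sketch with hypothetical names, assuming every node has the same spare disks (vdb, vdc, vdd, as in 1.2).

```shell
#!/usr/bin/env bash
# Hypothetical restructuring of the OSD loop above.
# Assumes every node has the same spare disks.
NODES=(ceph01 ceph02 ceph03)
DISKS=(/dev/vdb /dev/vdc /dev/vdd)

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

create_osds() {
  local node dev
  for node in "${NODES[@]}"; do
    for dev in "${DISKS[@]}"; do
      # zap destroys all data on $dev
      run ceph-deploy disk zap "$node" "$dev"
      run ceph-deploy osd create "$node" --data "$dev"
    done
  done
}
```

Run `DRY_RUN=1 create_osds` from the my-cluster directory to review, then `create_osds`; afterwards `ceph osd tree` should list every OSD as up.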

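Each OSD daemon corresponds to one physical disk, so with three data disks on each of the three nodes the loop above creates 3 × 3 = 9 OSDs.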

3.7 Deploy MGR. Starting with Luminous (L), the mgr daemon must be deployed; among other things it runs the dashboard module.

    [cephadmin@ceph01 my-cluster]$ ceph-deploy mgr create ceph01 ceph02 ceph03

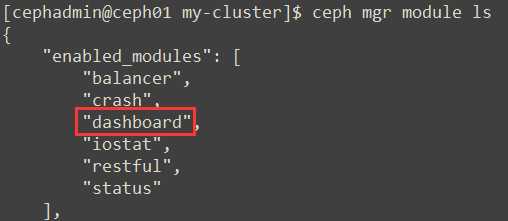
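
Once the mgr daemons are up, the cluster should settle to HEALTH_OK (as far as I know, Luminous and later report a HEALTH_WARN about no active mgr until one is running). A sketch of a polling helper; `wait_health_ok` is a hypothetical name, and the retry count and interval are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll `ceph health` until it reports HEALTH_OK.

# wait_health_ok [TRIES] [INTERVAL_SECONDS]
wait_health_ok() {
  local tries=${1:-30} interval=${2:-10} i status
  for ((i = 0; i < tries; i++)); do
    status=$(ceph health 2>/dev/null | awk 'NR == 1 { print $1 }')
    if [ "$status" = HEALTH_OK ]; then
      return 0
    fi
    sleep "$interval"
  done
  echo "cluster not healthy: ${status:-no answer}" >&2
  return 1
}
```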

4.1 Enable the dashboard module

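    [cephadmin@ceph01 my-cluster]$ ceph mgr module enable dashboard
    [cephadmin@ceph01 my-cluster]$ ceph mgr module ls    # "dashboard" should now be listed under enabled_modules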

4.2 Generate a self-signed certificate

    [cephadmin@ceph01 my-cluster]$ ceph dashboard create-self-signed-cert
    Self-signed certificate created

4.3 Generate a key pair (an alternative to 4.2 if you want to supply your own certificate)

    [cephadmin@ceph01 mgr-dashboard]$ openssl req -new -nodes -x509 -subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca
    Generating a 2048 bit RSA private key
    ...................................+++
    ...........+++
    writing new private key to 'dashboard.key'
    -----
    [cephadmin@ceph01 mgr-dashboard]$ ls
    dashboard.crt  dashboard.key
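
The generated files still have to be handed to the mgr. The Mimic dashboard documentation describes storing them with `ceph config-key set mgr/dashboard/crt` and `mgr/dashboard/key`, then reloading the module; later releases changed this, so check against the documentation for your exact version. A sketch with hypothetical names: `cert_cn` prints the certificate's CN as a quick sanity check, and `install_dashboard_cert` performs the import.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for using the certificate generated in 4.3.

# cert_cn FILE: print the CN of a PEM certificate (RFC 2253 name format).
cert_cn() {
  openssl x509 -in "$1" -noout -subject -nameopt RFC2253 |
    sed -n 's/.*CN=\([^,]*\).*/\1/p'
}

# install_dashboard_cert CRT KEY: store the pair in the config-key store,
# then disable/enable the dashboard so the mgr picks it up.
install_dashboard_cert() {
  ceph config-key set mgr/dashboard/crt -i "$1" &&
    ceph config-key set mgr/dashboard/key -i "$2" &&
    ceph mgr module disable dashboard &&
    ceph mgr module enable dashboard
}
```

For example, in the mgr-dashboard directory: `cert_cn dashboard.crt && install_dashboard_cert dashboard.crt dashboard.key`.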

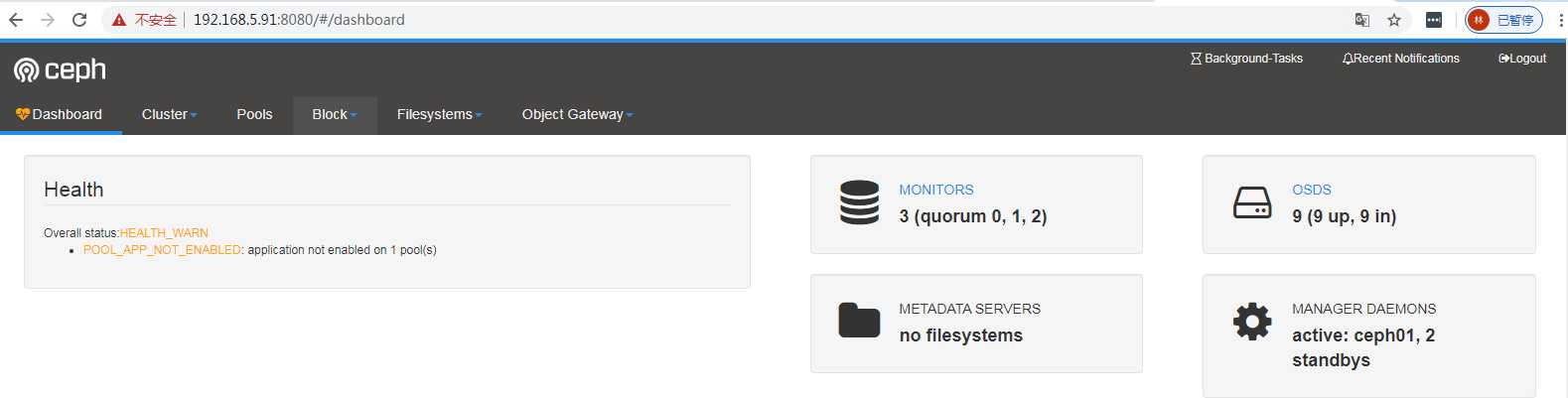

4.4 Set the IP address and port (if not set, the port defaults to 8443)

    [cephadmin@ceph01 my-cluster]$ ceph config set mgr mgr/dashboard/server_addr 192.168.5.91
    [cephadmin@ceph01 my-cluster]$ ceph config set mgr mgr/dashboard/server_port 8080
    [cephadmin@ceph01 my-cluster]$ ceph mgr services
    {
        "dashboard": "https://ceph01:8443/"
    }

4.5 Restart the dashboard module so the new address and port take effect

    [cephadmin@ceph01 my-cluster]$ ceph mgr module disable dashboard
    [cephadmin@ceph01 my-cluster]$ ceph mgr module enable dashboard
    [cephadmin@ceph01 my-cluster]$ ceph mgr services
    {
        "dashboard": "https://192.168.5.91:8080/"
    }
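
To confirm that the restart picked up the new address, the URL can be read back from `ceph mgr services` and probed with curl; `-k` is needed because the certificate is self-signed. A sketch with hypothetical names; the sed pattern assumes the one-entry-per-line JSON shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: read the dashboard URL and check that it answers.

# dashboard_url: print the "dashboard" entry of `ceph mgr services` JSON on stdin.
dashboard_url() {
  sed -n 's/.*"dashboard": *"\([^"]*\)".*/\1/p'
}

# check_dashboard: print the HTTP status code returned by the dashboard URL.
check_dashboard() {
  local url
  url=$(ceph mgr services | dashboard_url)
  if [ -z "$url" ]; then
    echo "dashboard not listed in ceph mgr services" >&2
    return 1
  fi
  curl -k -s -o /dev/null -w '%{http_code}\n' "$url"
}
```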

4.6 Set the login username and password

    [cephadmin@ceph01 my-cluster]$ ceph dashboard set-login-credentials admin admin

4.7 The dashboard can now be accessed directly at:

https://192.168.5.91:8080/

Deploying a Ceph Mimic 13.2.8 cluster with ceph-deploy on CentOS 7.6 (Part 1)


Original article: https://www.cnblogs.com/cyleon/p/12287753.html