标签:rate object lis The 十分 oid erro 不同类 list

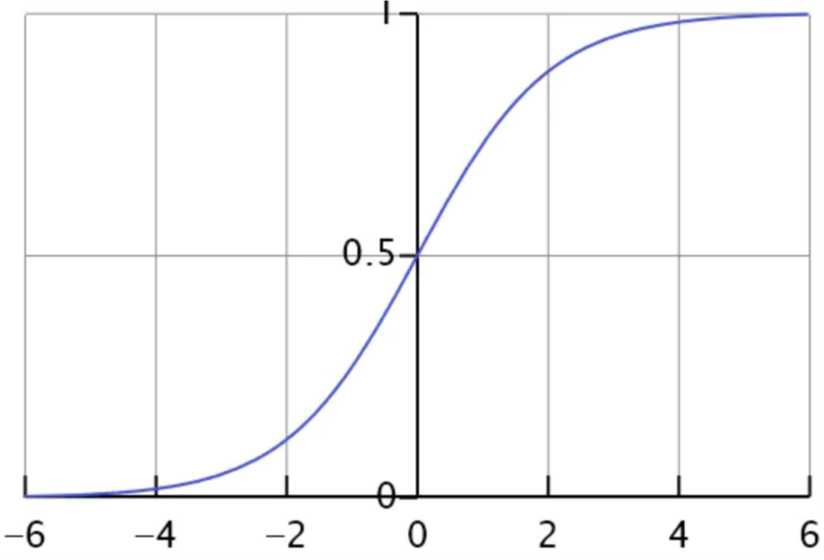

逻辑函数

\(g(z)=\frac{1}{1+e^{-z}}\)

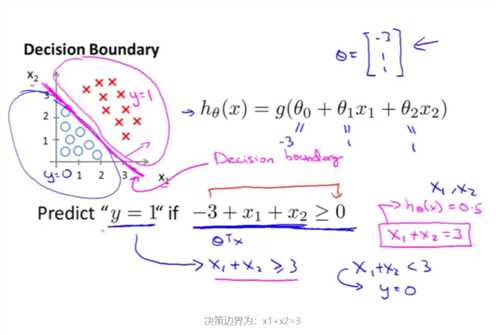

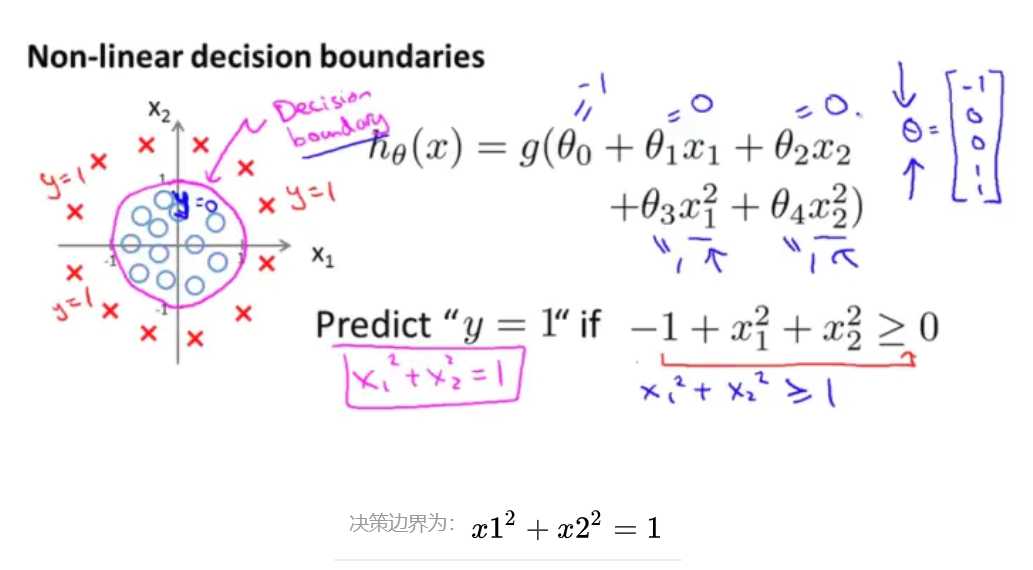

决策边界,也称为决策面,是用于在N维空间,将不同类别样本分开的直线或曲线,平面或曲面

根据以上假设函数表示概率,我们可以推得

if \(h_{\theta}(x) \geqslant 0.5 \Rightarrow y=1\)

if \(h_{\theta}(x)<0.5 \Rightarrow y=0\)

在线性回归中的代价函数为

\(J(\theta)=\frac{1}{2 m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right)^{2}\)

\(\begin{aligned} L(\theta) &=\prod_{i=1}^{m} P\left(y^{(i)} | x^{(i)} ; \theta\right) \\ &=\prod_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)\right)^{y^{(0)}}\left(1-h_{\theta}\left(x^{(i)}\right)\right)^{1-y^{(i)}} \end{aligned}\)

似然函数取对数之后

\(\begin{aligned} l(\theta) &=\log L(\theta) \\ &=\sum_{i=1}^{m}\left(y^{(i)} \log h_{\theta}\left(x^{(i)}\right)+\left(1-y^{(i)}\right) \log \left(1-h_{\theta}\left(x^{(i)}\right)\right)\right) \end{aligned}\)

损失函数中增加惩罚项:参数值越大惩罚越大–>让算法去尽量减少参数值

损失函数 \(J(β)\)的简写形式:

\(J(\beta)=\frac{1}{m} \sum_{i=1}^{m} \cos (y, \beta)+\frac{\lambda}{2 m} \sum_{j=1}^{n} \beta_{j}^{2}\)

l1正则化

\(J(\beta)=\frac{1}{m} \sum_{i=1}^{\mathrm{m}} \cos t(y, \beta)+\frac{\lambda}{2 m} \sum_{j=1}^{n}\left|\beta_{j}\right|\)

l2正则化

\(J(\beta)=\frac{1}{m} \sum_{i=1}^{\mathrm{m}} \cos t(y, \beta)+\frac{\lambda}{2 m} \sum_{j=1}^{n} \beta_{j}^{2}\)

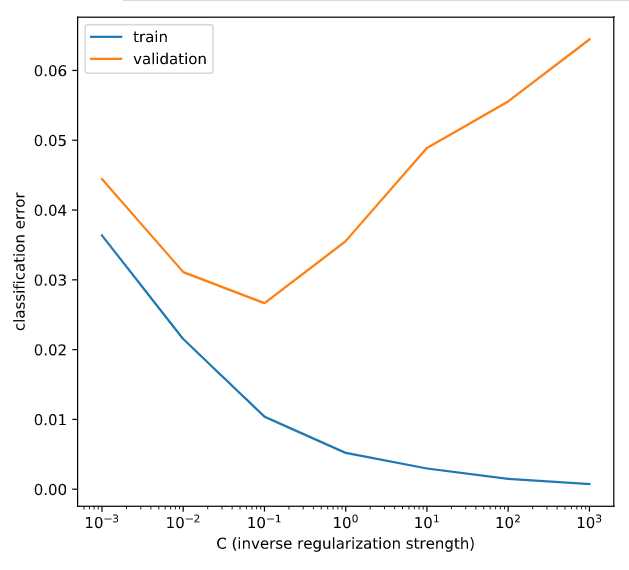

# Create LogisticRegression object and fit

lr = LogisticRegression(C=C_value)

lr.fit(X_train, y_train)

# Evaluate error rates and append to lists

train_errs.append( 1.0 - lr.score(X_train, y_train) )

valid_errs.append( 1.0 - lr.score(X_valid, y_valid) )

# Plot results

plt.semilogx(C_values, train_errs, C_values, valid_errs)

plt.legend(("train", "validation"))

plt.show()

标签:rate object lis The 十分 oid erro 不同类 list

原文地址:https://www.cnblogs.com/gaowenxingxing/p/12321461.html