
The previous post obtained the download URL of each episode; this post uses those URLs to download the videos.

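While a file downloads I wanted some feedback such as a progress bar. A quick search turned up a library that does exactly this: tqdm. For usage details, see this post: https://www.jianshu.com/p/1ed2a8b2c77b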
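As a minimal sketch of the idea (not the code used later in this post), the snippet below copies bytes from one in-memory stream to another and advances a tqdm bar by the number of bytes handled. The function name `copy_with_progress` and the 5000-byte payload are made up for illustration; only `tqdm(total=..., unit=..., unit_scale=...)` and `update()` come from the library.

```python
import io

from tqdm import tqdm


def copy_with_progress(src, dst, total_bytes, chunk_size=1024):
    """Copy src to dst in chunks, advancing a byte-based progress bar."""
    copied = 0
    with tqdm(total=total_bytes, unit="B", unit_scale=True) as bar:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)
            copied += len(chunk)
            bar.update(len(chunk))  # advance by the real number of bytes written
    return copied


payload = b"x" * 5000  # stand-in for a downloaded response body
source = io.BytesIO(payload)
target = io.BytesIO()
copied = copy_with_progress(source, target, total_bytes=len(payload))
```

Updating by `len(chunk)` keeps the bar accurate even when the last chunk is shorter than `chunk_size`.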

Add one more method to the existing class to download a file:

    @staticmethod
    def download_file(url, name):
        """Download a file and show a progress bar."""
        try:
            response = requests.get(url=url, stream=True)
            # File size in KB, read from the response header (integer division)
            content_size = int(response.headers['Content-Length']) // 1024
            if content_size:
                with open(name, "wb") as f:
                    print("total: ", content_size, 'k')
                    # Each 1024-byte chunk advances the progress bar by one unit
                    for data in tqdm(iterable=response.iter_content(1024), total=content_size, unit='k'):
                        f.write(data)
                print("\n done " + name)
        except RequestException as e:
            print("Request error: {!r}".format(e))
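One detail worth spelling out is how the progress total relates to the chunk size: a body of N bytes read in 1024-byte chunks yields N / 1024 chunks rounded up, and some servers omit `Content-Length` altogether. The helper below is a hypothetical sketch (the name `progress_total` is not part of the original code) that works on any dict-like headers object.

```python
import math


def progress_total(headers, chunk_size=1024):
    """Return how many chunks of chunk_size the body spans, or None if unknown."""
    raw = headers.get("Content-Length")
    if raw is None:
        return None  # size not reported; tqdm accepts total=None
    return math.ceil(int(raw) / chunk_size)


print(progress_total({"Content-Length": "2500"}))  # 3 chunks: 1024 + 1024 + 452
print(progress_total({}))  # None
```

With `total=None`, tqdm still counts iterations but cannot draw a percentage, which is usually preferable to skipping the download.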

Then add a main function on top of the existing code:

    def main(self):
        """Main entry point."""
        root_dir = os.path.abspath(os.path.join(os.path.dirname(__file__), os.pardir))
        print(root_dir)
        download_url = self.get_tv_url("血疫第一季")  # call get_tv_url() to get the episode URLs
        for t in download_url:
            name = t.split('/')[-1]  # text after the last "/" in the URL, used as the file name
            file_path = root_dir + "/movies/"  # directory to save into
            if not os.path.exists(file_path):
                os.makedirs(file_path)
            print("Downloading [{}]".format(name))
            self.download_file(t, file_path + name)  # call download_file() to fetch the file
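Taking the text after the last `/` works for plain links, but it keeps any query string and percent-encoding in the file name. A possible alternative, sketched here with standard-library calls only, parses the URL first and builds the path with `os.path.join`. The helper names and the `example.com` URLs are illustrative assumptions, not part of the original script.

```python
import os
import posixpath
import tempfile
from urllib.parse import unquote, urlsplit


def filename_from_url(url):
    """Return the last path segment of url, ignoring any query string or fragment."""
    path = urlsplit(url).path
    return unquote(posixpath.basename(path))


def build_save_path(root_dir, url, subdir="movies"):
    """Create root_dir/subdir if needed and return the full path for the download."""
    target_dir = os.path.join(root_dir, subdir)
    os.makedirs(target_dir, exist_ok=True)  # no separate os.path.exists check needed
    return os.path.join(target_dir, filename_from_url(url))


# example.com URLs are placeholders, not real download links
print(filename_from_url("http://example.com/videos/ep01.mp4?token=abc"))  # ep01.mp4
with tempfile.TemporaryDirectory() as root:
    print(build_save_path(root, "http://example.com/videos/ep%2001.mp4"))
```

Since the directory is the same for every episode, it could also be created once before the loop rather than checked on each iteration.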

The complete code:

    # coding: utf-8
    """
    author: hmk
    describe: crawler for the 80s movie site
    create_time: 2019/01/18
    """

    import os
    import re

    import requests
    from bs4 import BeautifulSoup
    from requests.exceptions import RequestException
    from tqdm import tqdm


    class DownloadTV:
        @staticmethod
        def get_html(url, data=None, header=None, method=None):
            """Return the HTML text of a URL, or None on failure."""
            try:
                # Send the request inside the try block so network errors are caught
                if method == "get":
                    response = requests.get(url, params=data, headers=header)
                else:
                    response = requests.post(url, data=data, headers=header)
                if response.status_code == 200:
                    response.encoding = response.apparent_encoding
                    return response.text
                return None
            except RequestException:
                print("Request failed")
                return None

        def get_tv_id(self, tv_name):
            """Return the id of the show being searched for, or None if not found."""
            headers = {
                "Content-Type": "application/x-www-form-urlencoded"
            }
            data = {
                "search_typeid": "1",
                "skey": tv_name,  # the show name to search for
                "Input": "搜索"  # value of the search button, sent as-is
            }
            url = "http://www.y80s.com/movie/search/"  # request URL

            html = self.get_html(url, data, headers, "post")
            if html is None:
                return None

            soup = BeautifulSoup(html, "html.parser")
            # All <a> tags whose title attribute equals the show name
            name_label = soup.find_all("a", title=tv_name)

            ju_id = re.compile(r'(\d+)', re.S)  # extracts the digits from the href
            if name_label:
                href_value = ju_id.search(name_label[0].get('href'))
                if href_value:
                    tv_id = href_value.group()  # a str, so it can be concatenated directly
                    print("id of the requested show: {}".format(tv_id))
                    return tv_id
            return None

        def get_tv_url(self, tv_name):
            """Return the list of download URLs for a show."""
            tv_id = self.get_tv_id(tv_name)  # call get_tv_id() to get tv_id
            if tv_id is None:
                return []
            url = "http://www.y80s.com/ju/" + tv_id  # build the show page URL from tv_id

            html = self.get_html(url, method="get")
            if html is None:
                return []
            soup = BeautifulSoup(html, "html.parser")
            # All <a> tags whose title is "本地下载" (local download)
            a_tv_url = soup.find_all("a", title="本地下载")
            tv_url = [t.get('href') for t in a_tv_url]  # href value of each tag
            print(tv_url)
            return tv_url

        @staticmethod
        def download_file(url, name):
            """Download a file and show a progress bar."""
            try:
                response = requests.get(url=url, stream=True)
                # File size in KB, read from the response header (integer division)
                content_size = int(response.headers['Content-Length']) // 1024

                if content_size:
                    with open(name, "wb") as f:
                        print("total: ", content_size, 'k')
                        for data in tqdm(iterable=response.iter_content(1024), total=content_size, unit='k'):
                            f.write(data)
                    print("\n done " + name)

            except RequestException as e:
                print("Request error: {!r}".format(e))

        def main(self):
            """Main entry point."""
            root_dir = os.path.abspath(os.path.join(os.path.dirname(__file__), os.pardir))
            print(root_dir)
            download_url = self.get_tv_url("血疫第一季")  # call get_tv_url() to get the episode URLs
            for t in download_url:
                name = t.split('/')[-1]  # text after the last "/" in the URL, used as the file name
                file_path = root_dir + "/movies/"  # directory to save into
                if not os.path.exists(file_path):
                    os.makedirs(file_path)
                print("Downloading [{}]".format(name))
                self.download_file(t, file_path + name)  # call download_file() to fetch the file


    if __name__ == '__main__':
        test = DownloadTV()
        test.main()
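The id lookup relies on a regular expression that grabs the first run of digits from a link's `href`, and the caller has to cope with the case where nothing matches. The sketch below isolates that step; `extract_id` is a hypothetical helper, and `/ju/12345` is an assumed href shape modeled on the `/ju/<id>` URL that the script builds.

```python
import re

ID_PATTERN = re.compile(r"(\d+)")


def extract_id(href):
    """Return the first run of digits in href as a string, or None if there is none."""
    if not href:
        return None
    match = ID_PATTERN.search(href)
    return match.group(1) if match else None


# "/ju/12345" is a made-up href used only for illustration
tv_id = extract_id("/ju/12345")
if tv_id is None:
    print("No id found, nothing to download")
else:
    print("http://www.y80s.com/ju/" + tv_id)
```

Because the match is returned as a string, it can be concatenated into the URL without a `str()` conversion, and returning `None` explicitly lets the caller stop before building a broken URL.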

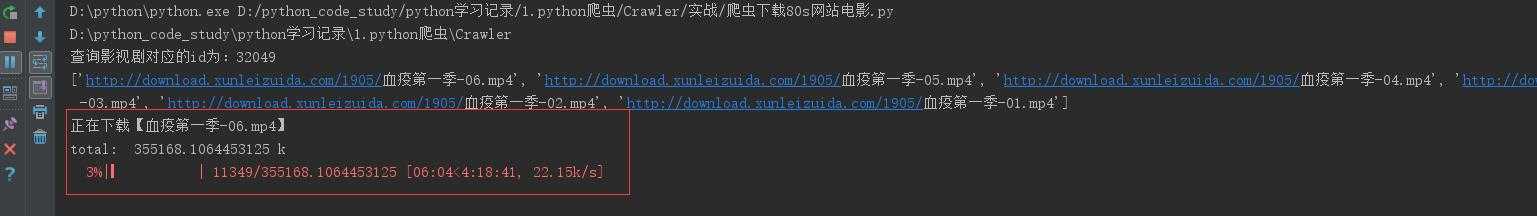

运行一下


Original post: https://www.cnblogs.com/hanmk/p/12323678.html