标签:art obat when hdp 解码器 mys sla MIXED navig

系统 centos7

远程连接工具MobaXterm

待整理

1.三台CES

2.若需要,购买公网弹性IP并绑定

3.若需要,可以购买云盘

挂载数据盘

阿里云购买的第2块云盘默认是不自动挂载的,需要手动配置挂载上。

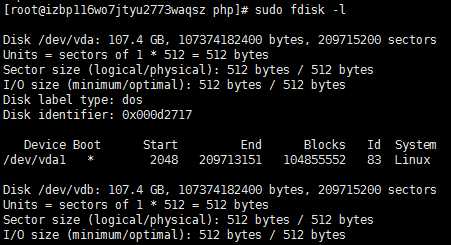

(1)查看SSD云盘

sudo fdisk -l

可以看到SSD系统已经识别为/dev/vdb

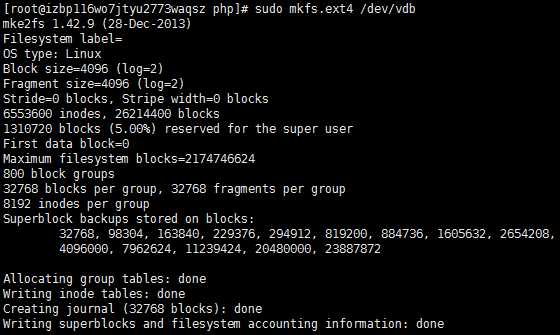

(2)格式化云盘

sudo mkfs.ext4 /dev/vdb

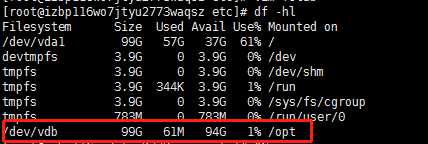

(3)挂载

sudo mount /dev/vdb /opt

将云盘挂载到/opt目录下。

(4)配置开机自动挂载

修改/etc/fstab文件,文件末尾添加:

/dev/vdb /opt ext4 defaults 0 0

然后df -hl就可以看到第二块挂载成功咯

centos 7 默认使用的是firewall,不是iptables

systemctl stop firewalld.service

systemctl mask firewalld.service

vim /etc/selinux/config

设置SELINUX=disabled

分别命名为node01、node02、node03

以node01为例

[root@node01 ~]# hostnamectl set-hostname node01 [root@node01 ~]# cat /etc/hostname node01

已经修改,重新登录即可。

生成私钥和公钥

ssh-keygen -t rsa

将公钥拷贝到要免密登录的目标机器上

ssh-copy-id node01 ssh-copy-id node02 ssh-copy-id node03

使用rsync编写xsync

#!/bin/sh # 获取输入参数个数,如果没有参数,直接退出 pcount=$# if((pcount==0)); then echo no args...; exit; fi # 获取文件名称 p1=$1 fname=`basename $p1` echo fname=$fname # 获取上级目录到绝对路径 pdir=`cd -P $(dirname $p1); pwd` echo pdir=$pdir # 获取当前用户名称 user=`whoami` # 循环 for((host=1; host<=3; host++)); do echo $pdir/$fname $user@slave$host:$pdir echo ==================slave$host================== rsync -rvl $pdir/$fname $user@slave$host:$pdir done #Note:这里的slave对应自己主机名,需要做相应修改。另外,for循环中的host的边界值由自己的主机编号决定

xcall.sh

#! /bin/bash for host in node01 node02 node03 do echo ------------ $i ------------------- ssh $i "$*" done

执行上面脚本之前将/etc/profile中的环境变量追加到~/.bashrc中,否则ssh执行命令会报错

[root@node01 bigdata]# cat /etc/profile >> ~/.bashrc [root@node02 bigdata]# cat /etc/profile >> ~/.bashrc [root@node03 bigdata]# cat /etc/profile >> ~/.bashrc

下载JDK,这里我们下载JDK8,https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html

需要Oracale账号密码,可以网络搜索

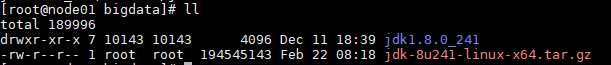

上传JDK到各个节点的/bigdata目录下

解压缩

tar -zxvf jdk-8u241-linux-x64.tar.gz

文件属主和属组如果不是root进行修改,下面是

Linux系统按文件所有者、文件所有者同组用户和其他用户来规定了不同的文件访问权限。

1、chgrp:更改文件属组

语法:

chgrp [-R] 属组名 文件名2、chown:更改文件属主,也可以同时更改文件属组

语法:

chown [–R] 属主名 文件名 chown [-R] 属主名:属组名 文件名

创建软连接

ln -s /root/bigdata/jdk1.8.0_241/ /usr/local/jdk

配置环境变量

vi /etc/profile

在最后面添加

export JAVA_HOME=/usr/local/jdk

export PATH=$PATH:${JAVA_HOME}/bin

加载配置文件

source /etc/profile

查看Java版本

[root@node03 bigdata]# java -version java version "1.8.0_241" Java(TM) SE Runtime Environment (build 1.8.0_241-b07) Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

安装成功

http://maven.apache.org/download.cgi

下载,解压

tar -zxvf apache-maven-3.6.1-bin.tar.gz

建立软连接

ln -s /bigdata/apache-maven-3.6.3 /usr/local/maven

加入/etc/profile中

export M2_HOME=/usr/local/maven3

export PATH=$PATH:$M2_HOME/bin

yum install git

JAVA_HOME 一定要是 /usr/java/java-version

yum install bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt

版本 6.3.1

| RHEL 7 Compatible | https://archive.cloudera.com/cm6/6.3.1/redhat7/yum/ | cloudera-manager.repo |

下载cloudera-manager.repo 文件,放到Cloudera Manager Server节点的 /etc/yum.repos.d/ 目录 中

[root@node01 ~]# cat /etc/yum.repos.d/cloudera-manager.repo [cloudera-manager] name=Cloudera Manager 6.3.1 baseurl=https://archive.cloudera.com/cm6/6.3.1/redhat7/yum/ gpgkey=https://archive.cloudera.com/cm6/6.3.1/redhat7/yum/RPM-GPG-KEY-cloudera gpgcheck=1 enabled=1 autorefresh=0

yum install cloudera-manager-daemons cloudera-manager-agent cloudera-manager-server

如果速度太慢,可以去 https://archive.cloudera.com/cm6/6.3.1/redhat7/yum/RPMS/x86_64/ 下载rpm包,上传到服务器进行安装

rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm

安装完后

[root@node01 cm]# ll /opt/cloudera/ total 16 drwxr-xr-x 27 cloudera-scm cloudera-scm 4096 Mar 3 19:36 cm drwxr-xr-x 8 root root 4096 Mar 3 19:36 cm-agent drwxr-xr-x 2 cloudera-scm cloudera-scm 4096 Sep 25 16:34 csd drwxr-xr-x 2 cloudera-scm cloudera-scm 4096 Sep 25 16:34 parcel-repo

所有节点

server_host=node01

安装mysql

修改密码,配置权限

移动引擎日志文件

将旧的InnoDB log files /var/lib/mysql/ib_logfile0 和 /var/lib/mysql/ib_logfile1 从 /var/lib/mysql/ 中移动到其他你指定的地方做备份

[root@node01 ~]# mv /var/lib/mysql/ib_logfile0 /bigdata [root@node01 ~]# mv /var/lib/mysql/ib_logfile1 /bigdata

更新my.cnf文件

默认在/etc/my.cnf目录中

[root@node01 etc]# mv my.cnf my.cnf.bak [root@node01 etc]# vi my.cnf

官方推荐配置

[mysqld] datadir=/var/lib/mysql socket=/var/lib/mysql/mysql.sock transaction-isolation = READ-COMMITTED # Disabling symbolic-links is recommended to prevent assorted security risks; # to do so, uncomment this line: symbolic-links = 0 key_buffer_size = 32M max_allowed_packet = 32M thread_stack = 256K thread_cache_size = 64 query_cache_limit = 8M query_cache_size = 64M query_cache_type = 1 max_connections = 550 #expire_logs_days = 10 #max_binlog_size = 100M #log_bin should be on a disk with enough free space. #Replace ‘/var/lib/mysql/mysql_binary_log‘ with an appropriate path for your #system and chown the specified folder to the mysql user. log_bin=/var/lib/mysql/mysql_binary_log #In later versions of MySQL, if you enable the binary log and do not set #a server_id, MySQL will not start. The server_id must be unique within #the replicating group. server_id=1 binlog_format = mixed read_buffer_size = 2M read_rnd_buffer_size = 16M sort_buffer_size = 8M join_buffer_size = 8M # InnoDB settings innodb_file_per_table = 1 innodb_flush_log_at_trx_commit = 2 innodb_log_buffer_size = 64M innodb_buffer_pool_size = 4G innodb_thread_concurrency = 8 innodb_flush_method = O_DIRECT innodb_log_file_size = 512M [mysqld_safe] log-error=/var/log/mysqld.log pid-file=/var/run/mysqld/mysqld.pid sql_mode=STRICT_ALL_TABLES

确保开机启动

systemctl enable mysqld

启动MySql

systemctl start mysqld

下载

wget https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.46.tar.gz

解压缩

tar zxvf mysql-connector-java-5.1.46.tar.gz

拷贝驱动到 /usr/share/java/ 目录中并重命名,如果没有创建该目录

[root@node01 etc]# mkdir -p /usr/share/java/ [root@node01 etc]# cd mysql-connector-java-5.1.46 [root@node01 mysql-connector-java-5.1.46]# cp mysql-connector-java-5.1.46-bin.jar /usr/share/java/mysql-connector-java.jar

Cloudera Manager Server, Oozie Server, Sqoop Server, Activity Monitor, Reports Manager, Hive Metastore Server, Hue Server, Sentry Server, Cloudera Navigator Audit Server, and Cloudera Navigator Metadata Server这些组件都需要建立数据库

| Service | Database | User |

|---|---|---|

| Cloudera Manager Server | scm | scm |

| Activity Monitor | amon | amon |

| Reports Manager | rman | rman |

| Hue | hue | hue |

| Hive Metastore Server | metastore | hive |

| Sentry Server | sentry | sentry |

| Cloudera Navigator Audit Server | nav | nav |

| Cloudera Navigator Metadata Server | navms | navms |

| Oozie | oozie | oozie |

登录mysql,输入密码

mysql -u root -p

Create databases for each service deployed in the cluster using the following commands. You can use any value you want for the <database>, <user>, and <password> parameters. The Databases for Cloudera Software table, below lists the default names provided in the Cloudera Manager configuration settings, but you are not required to use them.

Configure all databases to use the utf8 character set.

Include the character set for each database when you run the CREATE DATABASE statements described below.

为每个部属在集里的服务创建数据库,所有数据库都使用 utf8 character set

CREATE DATABASE <database> DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

赋权限

GRANT ALL ON <database>.* TO ‘<user>‘@‘%‘ IDENTIFIED BY ‘<password>‘;

实例

mysql> CREATE DATABASE amon DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec) mysql> CREATE DATABASE scm DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec) mysql> CREATE DATABASE hive DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec) mysql> CREATE DATABASE oozie DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec) mysql> CREATE DATABASE hue DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.01 sec) mysql> CREATE DATABASE rman DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec) mysql> CREATE DATABASE sentry DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.01 sec) mysql> mysql> CREATE DATABASE nav DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec) mysql> CREATE DATABASE metastore DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci; Query OK, 1 row affected (0.00 sec)

mysql> GRANT ALL ON scm.* TO ‘scm‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.01 sec) mysql> GRANT ALL ON amon.* TO ‘amon‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON hive.* TO ‘hive‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON oozie.* TO ‘oozie‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON hue.* TO ‘hue‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON rman.* TO ‘rman‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.01 sec) mysql> GRANT ALL ON metastore.* TO ‘metastore‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON nav.* TO ‘nav‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON navms.* TO ‘navms‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.00 sec) mysql> GRANT ALL ON sentry.* TO ‘sentry‘@‘%‘ IDENTIFIED BY ‘@Zhaojie123‘; Query OK, 0 rows affected, 1 warning (0.01 sec)

flush privileges;Record the values you enter for database names, usernames, and passwords. The Cloudera Manager installation wizard requires this information to correctly connect to these databases.

使用CM自带脚本创建

/opt/cloudera/cm/schema/scm_prepare_database.sh <databaseType> <databaseName> <databaseUser>

实例

[root@node01 cm]# /opt/cloudera/cm/schema/scm_prepare_database.sh mysql scm scm Enter SCM password: JAVA_HOME=/usr/local/jdk Verifying that we can write to /etc/cloudera-scm-server Creating SCM configuration file in /etc/cloudera-scm-server Executing: /usr/local/jdk/bin/java -cp /usr/share/java/mysql-connector-java.jar:/usr/share/java/oracle-connector-java.jar:/usr/share/java/postgresql-connector-java.jar:/opt/cloudera/cm/schema/../lib/* com.cloudera.enterprise.dbutil.DbCommandExecutor /etc/cloudera-scm-server/db.properties com.cloudera.cmf.db. Tue Mar 03 19:46:36 CST 2020 WARN: Establishing SSL connection without server‘s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn‘t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to ‘false‘. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification. 2020-03-03 19:46:36,866 [main] INFO com.cloudera.enterprise.dbutil.DbCommandExecutor - Successfully connected to database. All done, your SCM database is configured correctly!

主节点

vim /etc/cloudera-scm-server/db.properties

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=node01

com.cloudera.cmf.db.name=scm

com.cloudera.cmf.db.user=scm

com.cloudera.cmf.db.setupType=EXTERNAL

com.cloudera.cmf.db.password=@Z

准备parcels,将CDH相关文件拷贝到主节点

[root@node01 parcel-repo]# pwd /opt/cloudera/parcel-repo [root@node01 parcel-repo]# ll total 2035084 -rw-r--r-- 1 root root 2083878000 Mar 3 21:27 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel -rw-r--r-- 1 root root 40 Mar 3 21:15 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1 -rw-r--r-- 1 root root 33887 Mar 3 21:15 manifest.json [root@node01 parcel-repo]# mv CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha [root@node01 parcel-repo]# ll total 2035084 -rw-r--r-- 1 root root 2083878000 Mar 3 21:27 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel -rw-r--r-- 1 root root 40 Mar 3 21:15 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha -rw-r--r-- 1 root root 33887 Mar 3 21:15 manifest.json

启动

主节点

systemctl start cloudera-scm-server

systemctl start cloudera-scm-agent

从节点

systemctl start cloudera-scm-agent

浏览器输入地址 ip:7180,登录,用户名和密码均为admin

继续

接受协议,继续

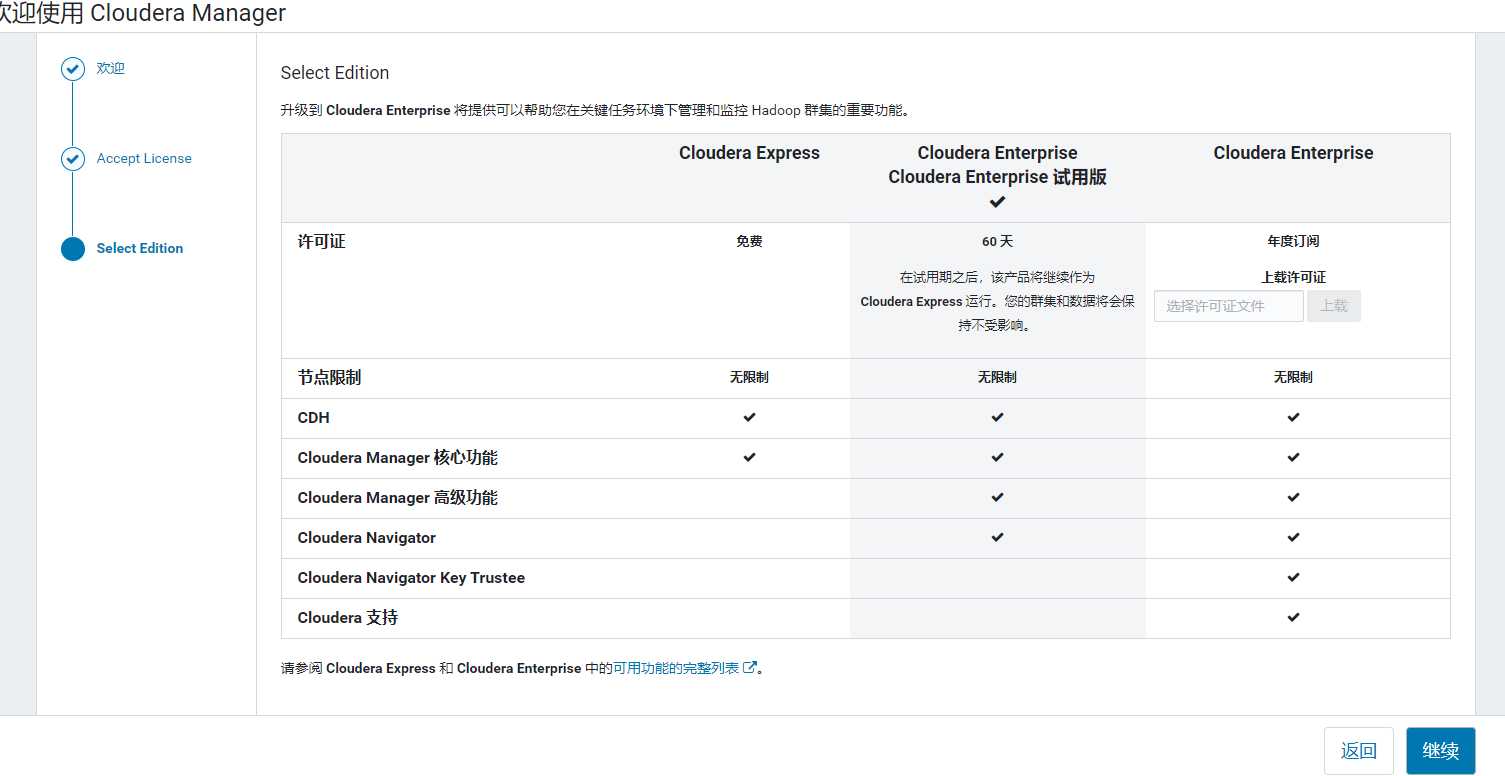

选择版本,继续

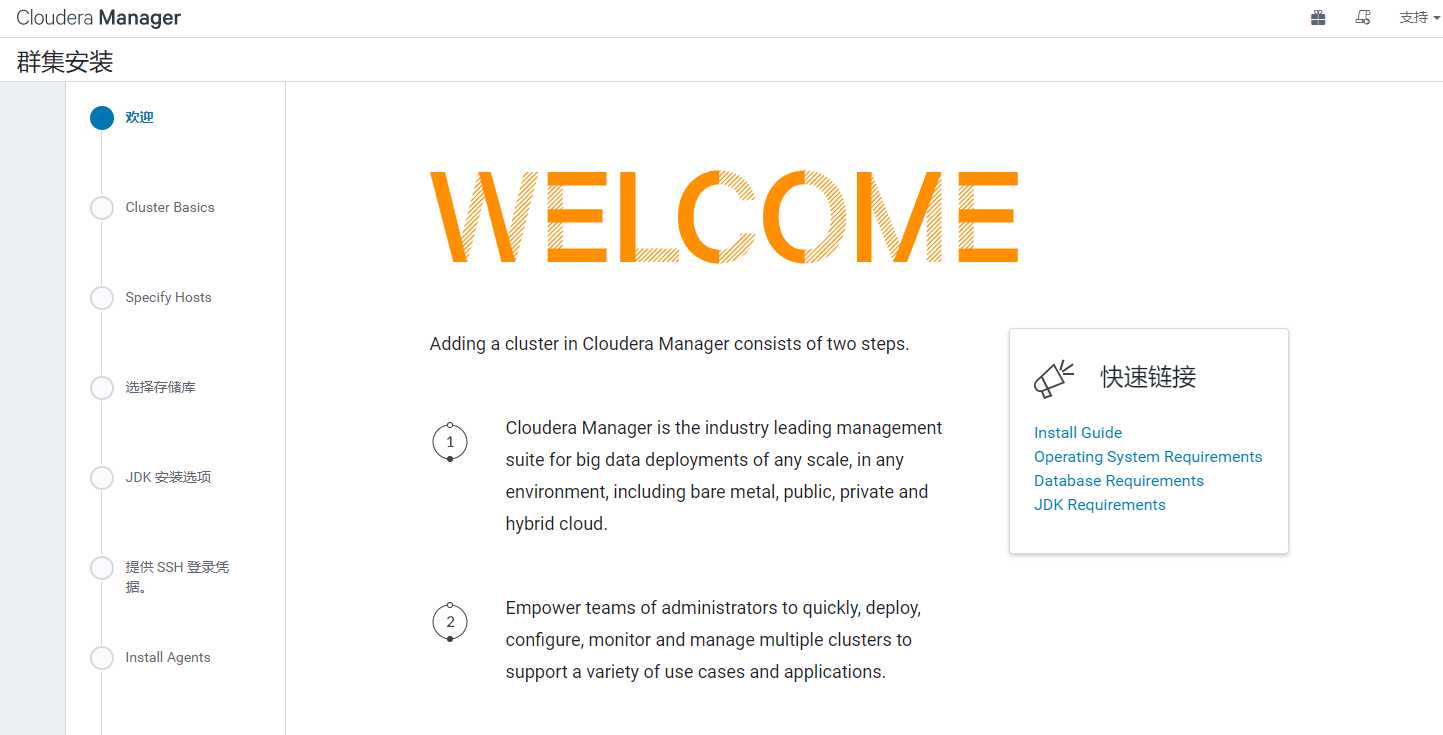

进入集群安装欢迎页

继续, 为集群命名,

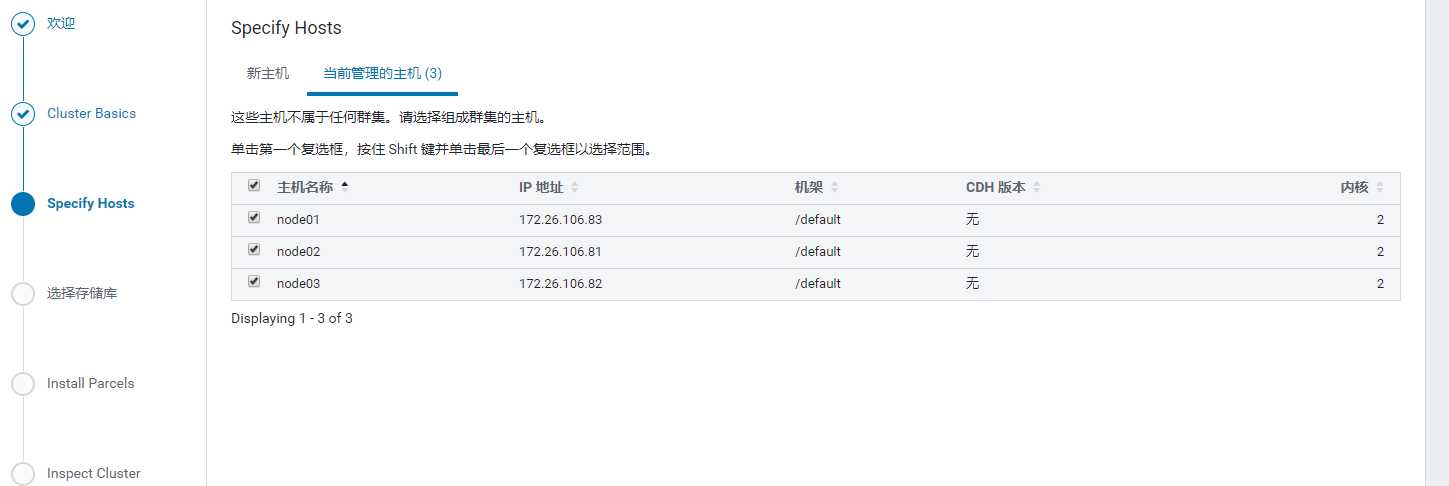

继续, 选择管理的主机

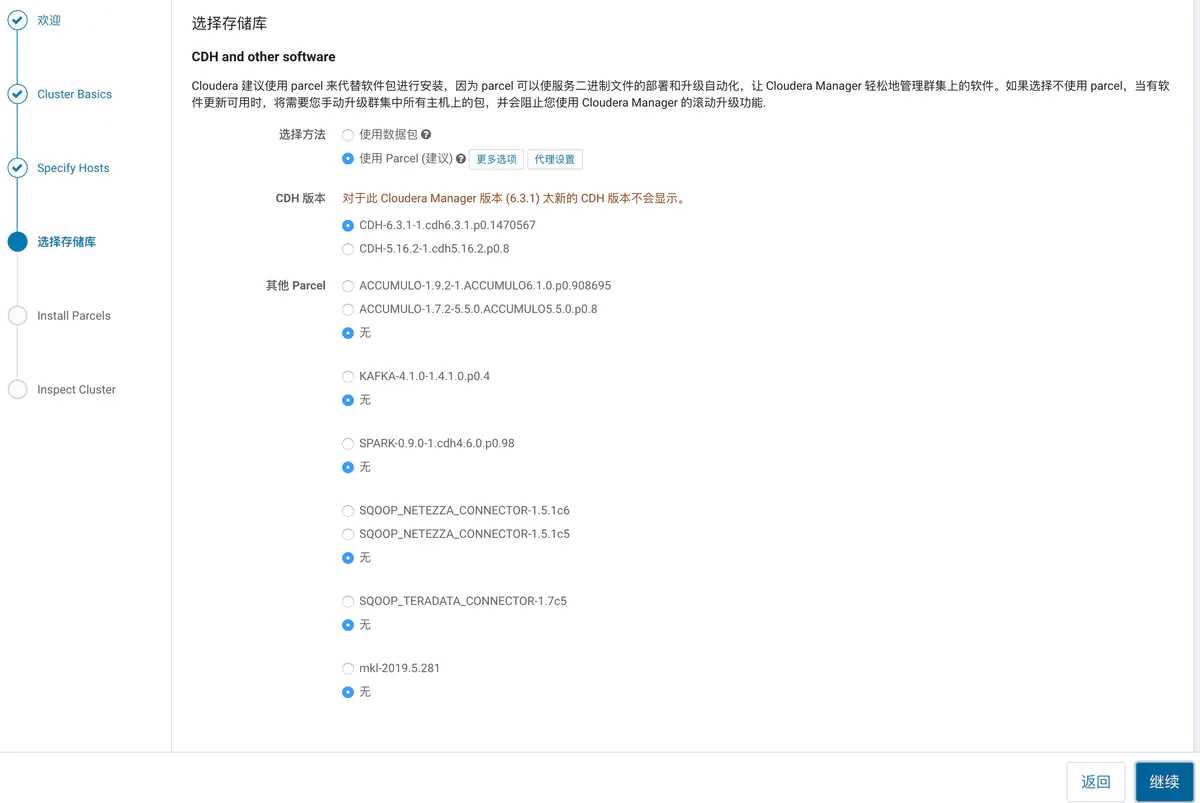

选择CDH版本

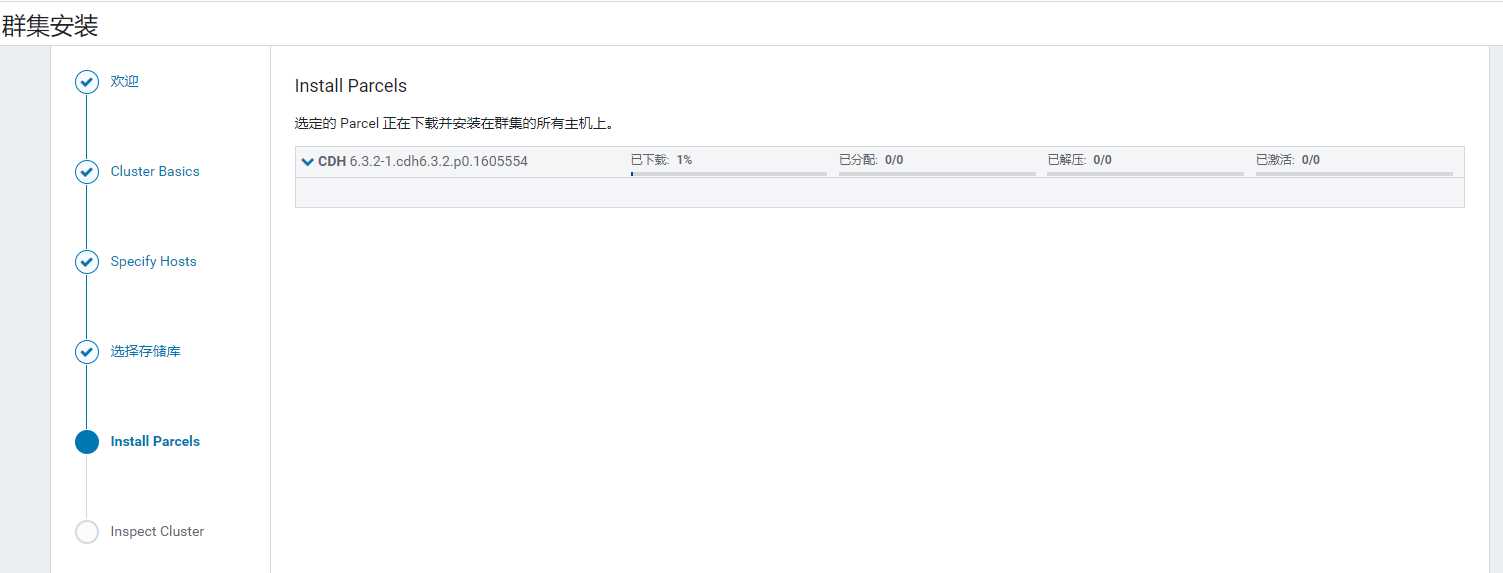

集群安装

速度慢,可去https://archive.cloudera.com/cdh6/6.3.2/parcels/下载

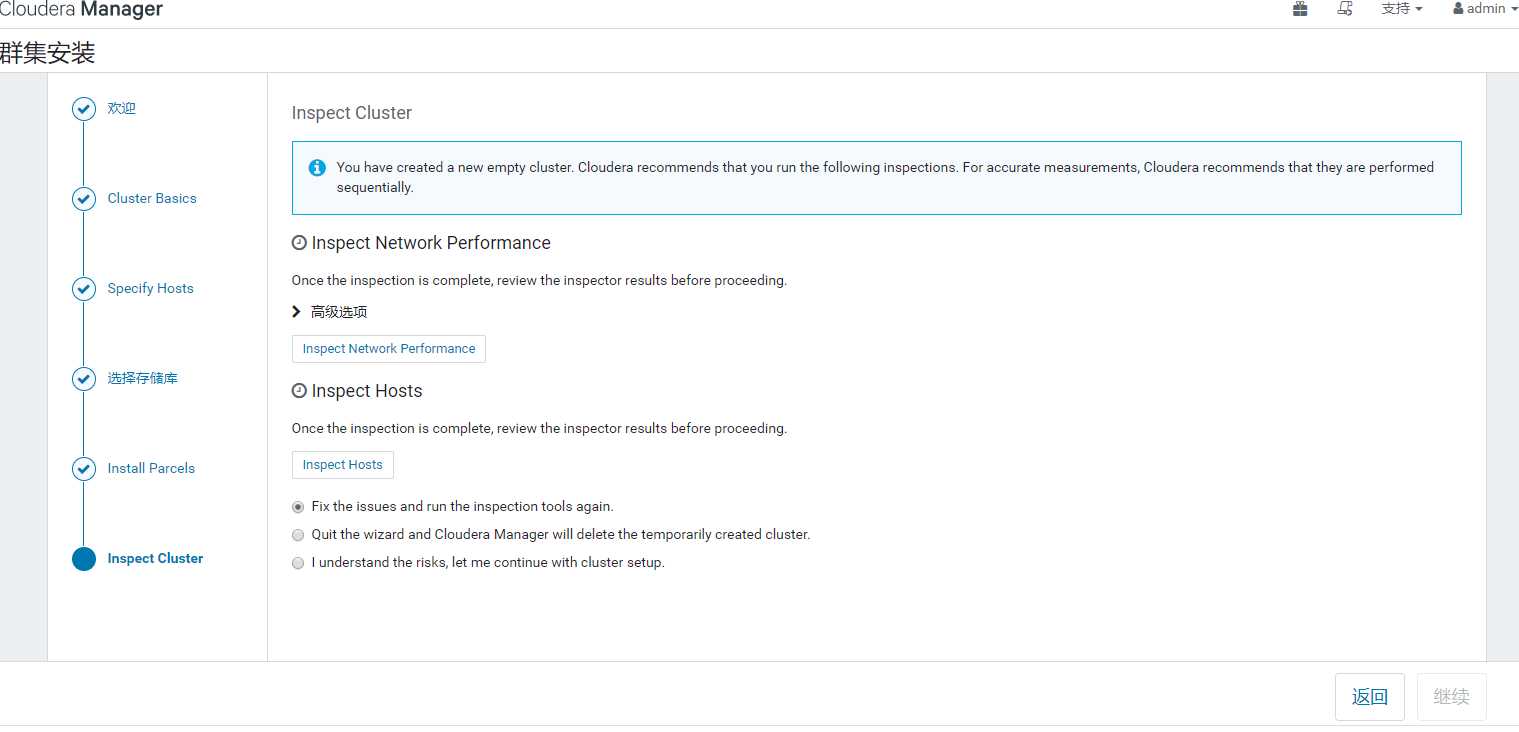

检测网络和主机

不断继续

服务暂时选HDFS、YARN、Zookeeper

分配角色

继续直到完成

LzoCodec和LzopCodec区别

两种压缩编码LzoCodec和LzopCodec区别:

1. LzoCodec比LzopCodec更快, LzopCodec为了兼容LZOP程序添加了如 bytes signature, header等信息。

2. LzoCodec作为Reduce输出,结果文件扩展名为 ”.lzo_deflate” ,无法被lzop读取;使用LzopCodec作为Reduce输出,生成扩展名为 ”.lzo” 的文件,可被lzop读取。

3. LzoCodec结果(.lzo_deflate文件) 不能由 lzo index job 的 "DistributedLzoIndexer" 创建index。

4. “.lzo_deflate” 文件不能作为MapReduce输入。而这些 “.LZO” 文件都支持。

综上所述,map输出的中间结果使用LzoCodec,reduce输出使用 LzopCodec。另外:org.apache.hadoop.io.compress.LzoCodec和com.hadoop.compression.lzo.LzoCodec功能一样,都是源码包中带的,生成的都是 lzo_deflate 文件。

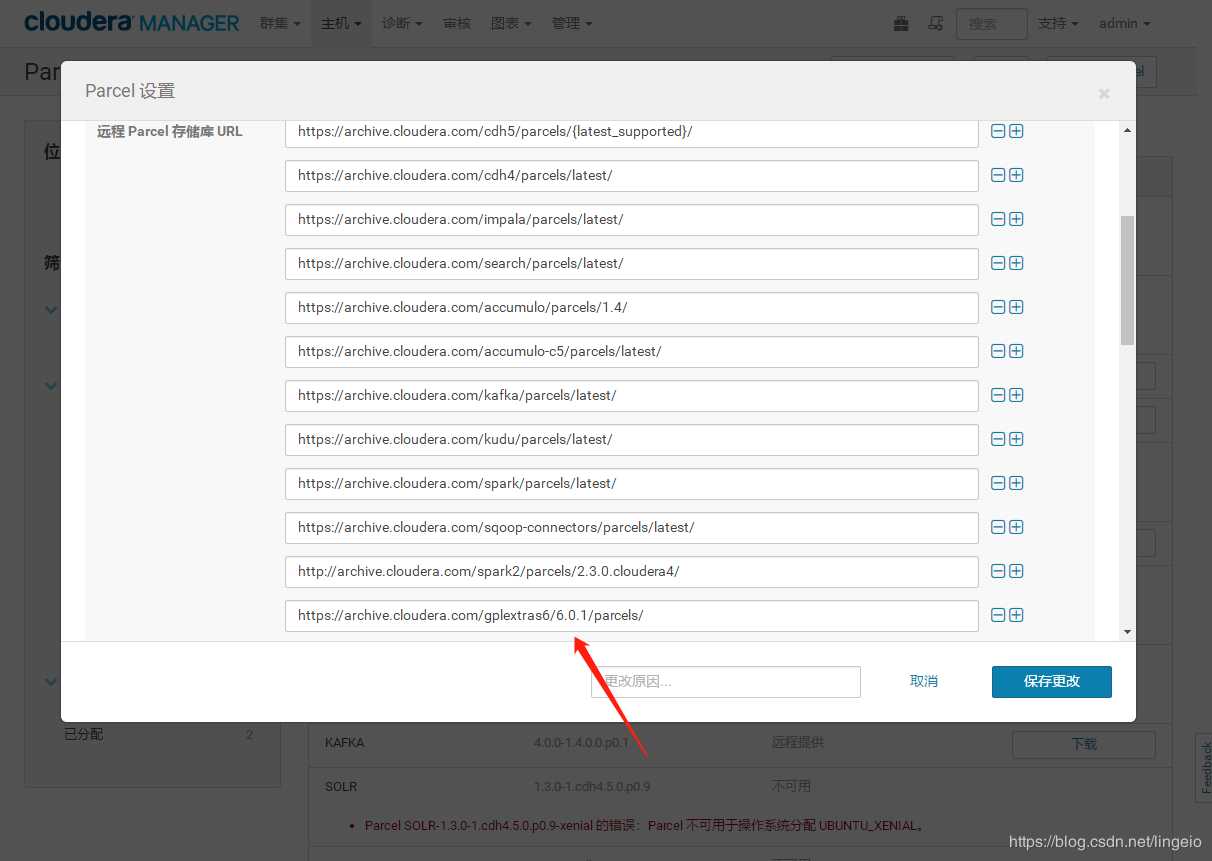

在线Parcel安装Lzo

下载地址:修改6.x.y为对应版本

CDH6:https://archive.cloudera.com/gplextras6/6.x.y/parcels/ CDH5:https://archive.cloudera.com/gplextras5/parcels/5.x.y/

1. 在CDH的 Parcel 配置中,“远程Parcel存储库URL”,点击 “+” 号,添加地址栏:

CDH6:https://archive.cloudera.com/gplextras6/6.0.1/parcels/ CDH5:http://archive.cloudera.com/gplextras/parcels/latest/

其他离线方式:

下载parcel放到 /opt/cloudera/parcel-repo 目录下

或者

搭建httpd,更改parcel URL地址,再在按远程安装

2. 返回Parcel列表,延迟几秒后会看到多出了 GPLEXTRAS(CDH6) 或者 HADOOP_LZO (CDH5),

下载 -- 分配 -- 激活。

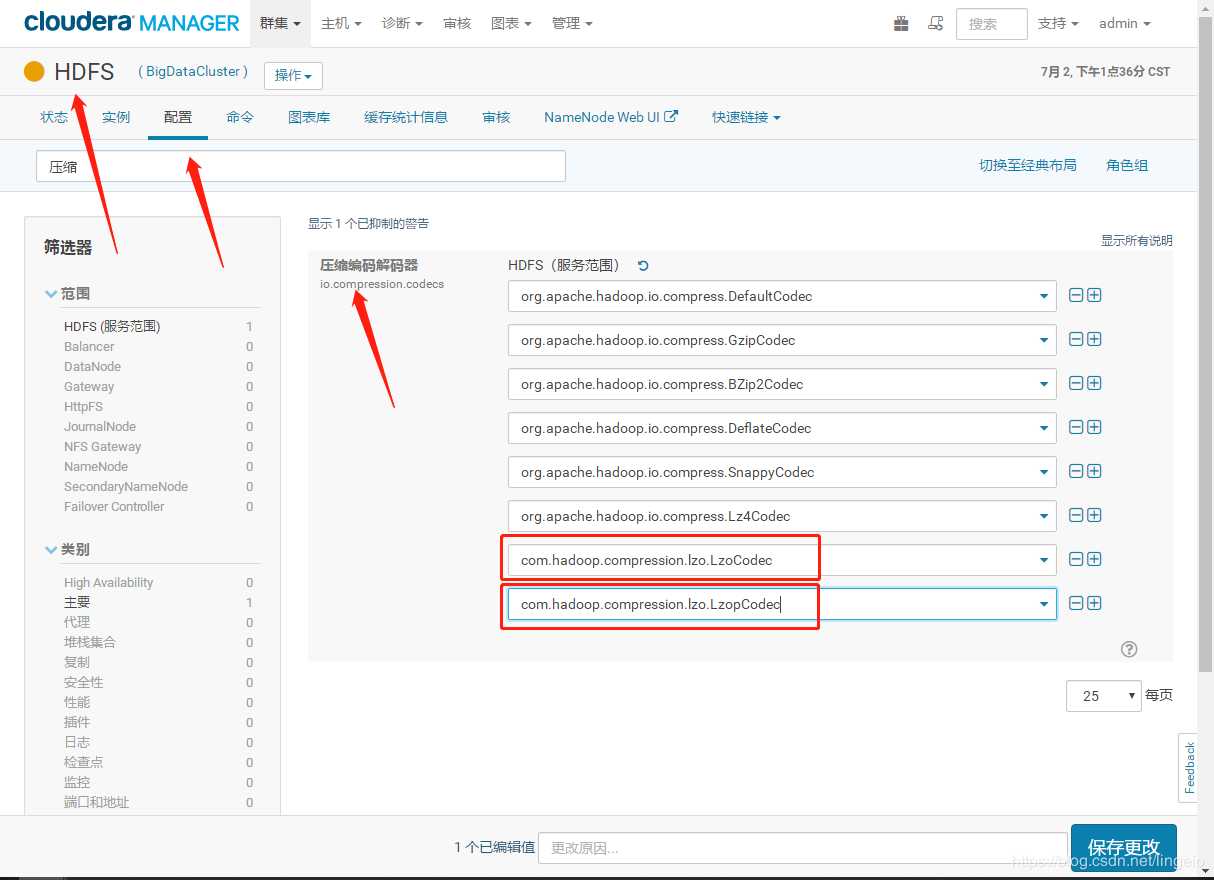

3. 安装完LZO后,打开HDFS配置,找到“压缩编码解码器”,点击 “+” 号,

添加:

com.hadoop.compression.lzo.LzoCodec

com.hadoop.compression.lzo.LzopCodec

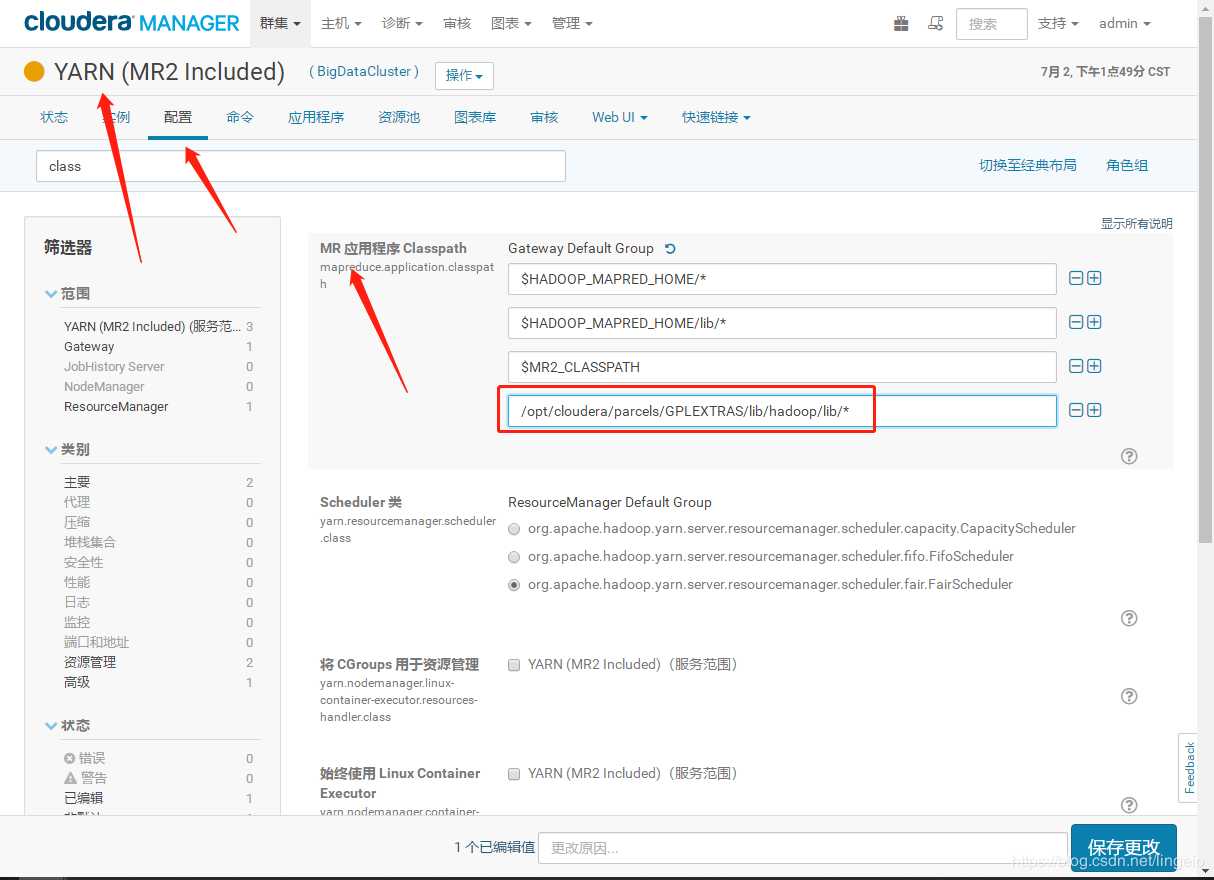

4. YARN配置,找到 “MR 应用程序 Classpath”(mapreduce.application.classpath)

添加:

/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/*

5. 重启更新过期配置

继续

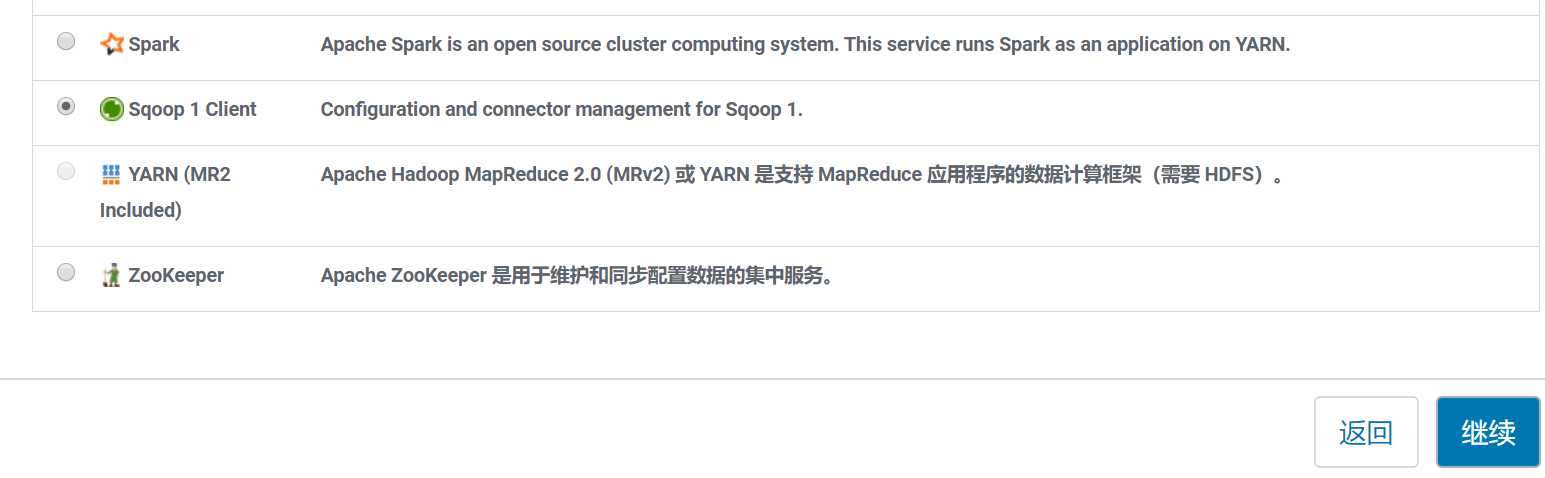

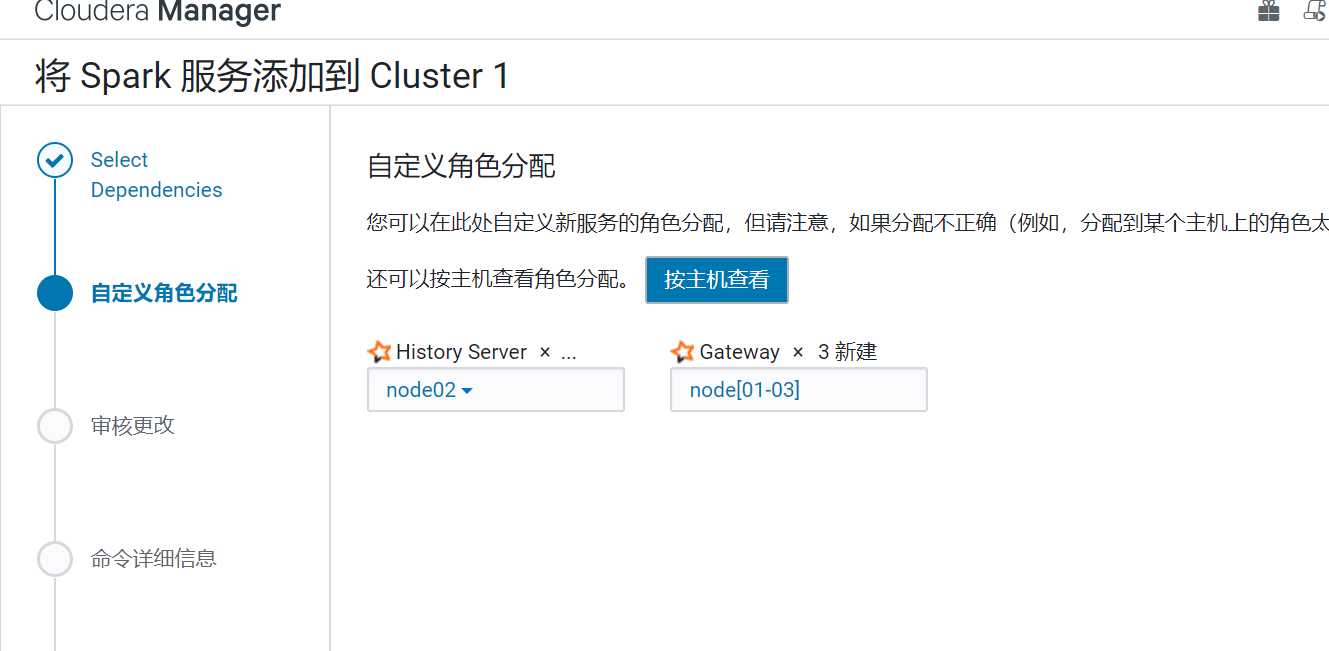

添加服务,添加spark

服务添加完成后,去节点进行配置

三台节点都要配置

进入目录

cd /opt/cloudera/parcels/CDH/lib/spark/conf

添加JAVA路径

vi spark-env.sh

末尾添加

export JAVA_HOME=/usr/local/jdk

创建slaves文件

添加work节点

node02

node03

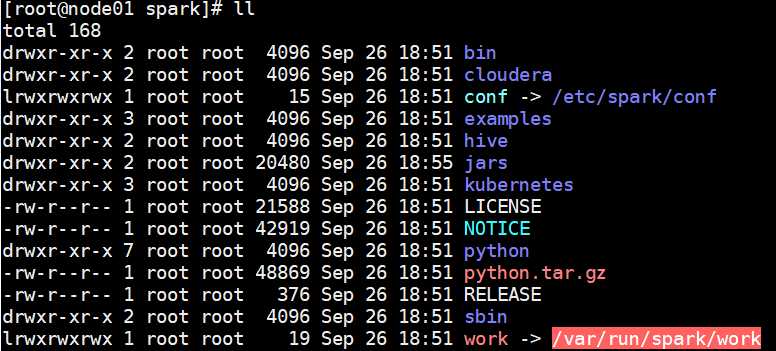

删除软连接work

rm -r work

修改端口,防止与yarn冲突

vi spark-defaults.conf

spark.shuffle.service.port=7337 可改为7338

启动时发现

[root@node01 sbin]# ./start-all.sh WARNING: User-defined SPARK_HOME (/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/spark) overrides detected (/opt/cloudera/parcels/CDH/lib/spark). WARNING: Running start-master.sh from user-defined location. /opt/cloudera/parcels/CDH/lib/spark/bin/load-spark-env.sh: line 77: /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/spark/bin/start-master.sh: No such file or directory WARNING: User-defined SPARK_HOME (/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/spark) overrides detected (/opt/cloudera/parcels/CDH/lib/spark). WARNING: Running start-slaves.sh from user-defined location. /opt/cloudera/parcels/CDH/lib/spark/bin/load-spark-env.sh: line 77: /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/spark/bin/start-slaves.sh: No such file or directory

将sbin目录下的文件拷贝到bin目录下

[root@node01 bin]# xsync start-slave.sh [root@node01 bin]# xsync start-master.sh

启动成功

jps命令查看,node1又master,node2和node3有worker

进入shell

[root@node01 bin]# spark-shell Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 20/03/04 13:22:07 WARN cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered! Spark context Web UI available at http://node01:4040 Spark context available as ‘sc‘ (master = yarn, app id = application_1583295431127_0001). Spark session available as ‘spark‘. Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ ‘_/ /___/ .__/\_,_/_/ /_/\_\ version 2.4.0-cdh6.3.1 /_/ Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_241) Type in expressions to have them evaluated. Type :help for more information. scala> var h =1 h: Int = 1 scala> h + 3 res1: Int = 4 scala> :quit

本人编译号的Flink

链接:https://pan.baidu.com/s/1lIqeBtNpj0wR-Q8KAEAIsg

提取码:89wi

1、环境

Jdk 1.8、centos7.6、Maven 3.2.5、Scala-2.122、源码和CDH 版本

Flink 1.10.0 、 CDH 6.3.1(Hadoop 3.0.0)

flink重新编译

修改maven的配置文件

vi settings.xml

配置maven源

<mirrors> <mirror> <id>alimaven</id> <mirrorOf>central</mirrorOf> <name>aliyun maven</name> <url>http://maven.aliyun.com/nexus/content/repositories/central/</url> </mirror> <mirror> <id>alimaven</id> <name>aliyun maven</name> <url>http://maven.aliyun.com/nexus/content/groups/public/</url> <mirrorOf>central</mirrorOf> </mirror> <mirror> <id>central</id> <name>Maven Repository Switchboard</name> <url>http://repo1.maven.org/maven2/</url> <mirrorOf>central</mirrorOf> </mirror> <mirror> <id>repo2</id> <mirrorOf>central</mirrorOf> <name>Human Readable Name for this Mirror.</name> <url>http://repo2.maven.org/maven2/</url> </mirror> <mirror> <id>ibiblio</id> <mirrorOf>central</mirrorOf> <name>Human Readable Name for this Mirror.</name> <url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url> </mirror> <mirror> <id>jboss-public-repository-group</id> <mirrorOf>central</mirrorOf> <name>JBoss Public Repository Group</name> <url>http://repository.jboss.org/nexus/content/groups/public</url> </mirror> <mirror> <id>google-maven-central</id> <name>Google Maven Central</name> <url>https://maven-central.storage.googleapis.com </url> <mirrorOf>central</mirrorOf> </mirror> <mirror> <id>maven.net.cn</id> <name>oneof the central mirrors in china</name> <url>http://maven.net.cn/content/groups/public/</url> <mirrorOf>central</mirrorOf> </mirror> </mirrors>

下载依赖的 flink-shaded 源码

不同的 Flink 版本使用的 Flink-shaded不同,1.10 版本使用 10.0

https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-shaded-10.0/flink-shaded-10.0-src.tgz

解压后,在 pom.xml 中,添加如下,加入到标签中

<profile>

<id>vendor-repos</id>

<activation>

<property>

<name>vendor-repos</name>

</property>

</activation>

<!-- Add vendor maven repositories -->

<repositories>

<!-- Cloudera -->

<repository>

<id>cloudera-releases</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<!-- Hortonworks -->

<repository>

<id>HDPReleases</id>

<name>HDP Releases</name>

<url>https://repo.hortonworks.com/content/repositories/releases/</url>

<snapshots><enabled>false</enabled></snapshots>

<releases><enabled>true</enabled></releases>

</repository>

<repository>

<id>HortonworksJettyHadoop</id>

<name>HDP Jetty</name>

<url>https://repo.hortonworks.com/content/repositories/jetty-hadoop</url>

<snapshots><enabled>false</enabled></snapshots>

<releases><enabled>true</enabled></releases>

</repository>

<!-- MapR -->

<repository>

<id>mapr-releases</id>

<url>https://repository.mapr.com/maven/</url>

<snapshots><enabled>false</enabled></snapshots>

<releases><enabled>true</enabled></releases>

</repository>

</repositories>

</profile>

在flink-shade目录下运行下面的命令,进行编译

mvn -T2C clean install -DskipTests -Pvendor-repos -Dhadoop.version=3.0.0-cdh6.3.1 -Dscala-2.12 -Drat.skip=true

下载flink源码 https://mirrors.aliyun.com/apache/flink/flink-1.10.0/

解压,进入目录,修改文件

[root@node02 ~]# cd /bigdata/ [root@node02 bigdata]# cd flink [root@node02 flink]# cd flink-1.10.0 [root@node02 flink-1.10.0]# cd flink-runtime-web/ [root@node02 flink-runtime-web]# ll total 24 -rw-r--r-- 1 501 games 8726 Mar 7 23:31 pom.xml -rw-r--r-- 1 501 games 3505 Feb 8 02:18 README.md drwxr-xr-x 4 501 games 4096 Feb 8 02:18 src drwxr-xr-x 3 501 games 4096 Mar 7 23:19 web-dashboard [root@node02 flink-runtime-web]# vi pom.xml

加入国内的下载地址,否则很可能报错

<execution> <id>install node and npm</id> <goals> <goal>install-node-and-npm</goal> </goals> <configuration>

<nodeDownloadRoot>http://npm.taobao.org/mirrors/node/</nodeDownloadRoot>

<npmDownloadRoot>http://npm.taobao.org/mirrors/npm/</npmDownloadRoot>

<nodeVersion>v10.9.0</nodeVersion> </configuration> </execution>

在flink源码解压目录下运行下列命令,编译 Flink 源码

mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhaoop.version=3.0.0-cdh6.3.1 -Pvendor-repos -Dinclude-hadoop -Dscala-2.12 -T2C

提取出 flink-1.10.0 二进制包即可

目录地址:

flink-1.10.0/flink-dist/target/flink-1.10.0-bin

flink on yarn模式

三个节点配置环境变量

export HADOOP_HOME=/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567

export HADOOP_CONF_DIR=/etc/hadoop/conf

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source下配置文件

如果机器上安装了spark,其worker端口8081会和flink的web端口冲突进行修改

进入一个节点flink目录下conf目录中的的配置文件

vi flink-conf.yaml

设置

rest.port: 8082

并继续在该文件中添加或修改

high-availability: zookeeper high-availability.storageDir: hdfs://node01:8020/flink_yarn_ha high-availability.zookeeper.path.root: /flink-yarn high-availability.zookeeper.quorum: node01:2181,node02:2181,node03:2181 yarn.application-attempts: 10

将flink分发到各个节点

xsync flink-1.10.0

hdfs上面创建文件夹

node01执行以下命令创建hdfs文件夹

hdfs dfs -mkdir -p /flink_yarn_ha

建立测试文件

vim wordcount.txt

内容如下

hello world

flink hadoop

hive spark

hdfs上面创建文件夹并上传文件

hdfs dfs -mkdir -p /flink_input hdfs dfs -put wordcount.txt /flink_input

测试

[root@node01 flink-1.10.0]# bin/flink run -m yarn-cluster ./examples/batch/WordCount.jar -input hdfs://node01:8020/flink_input -output hdfs://node01:8020/out_result1/out_count.txt -yn 2 -yjm 1024 -ytm 1024

查看输出结果

hdfs dfs -cat hdfs://node01:8020/out_result/out_count.txt

标签:art obat when hdp 解码器 mys sla MIXED navig

原文地址:https://www.cnblogs.com/aidata/p/12343715.html