标签:blog files const 配置环境 ring 情况下 config 没有 参考

HA+Zookeeper搭建:

hadoop完全分布式搭建: https://www.cnblogs.com/Hephaestus/p/12213719.html

hadoop高可用搭建: https://www.cnblogs.com/Hephaestus/p/12420370.html

Zookeeper集群搭建: https://www.cnblogs.com/Hephaestus/p/12421265.html

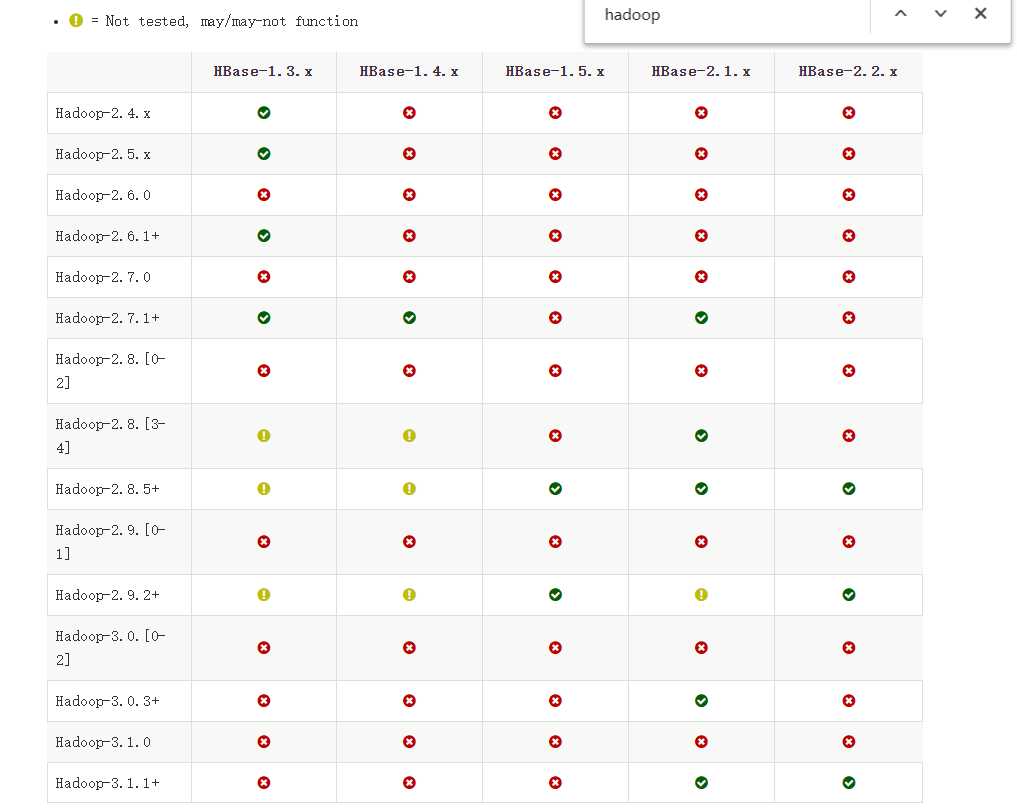

hadoop2.9.2 + Zookeeper3.5.7 + HBase2.2.3

版本兼容官方文档: http://hbase.apache.org/book.html#configuration

下载地址: https://hbase.apache.org/downloads.html

| namenode | datanode | journalnode | Zookeeper | HBase | |

|---|---|---|---|---|---|

| hadoop100 | 是(nn2) | HMaster | |||

| hadoop101 | 是(nn1) | 是 | 是 | 是 | 备份HMaster HRegionServer |

| hadoop102 | 是 | 是 | 是 | HRegionServer | |

| hadoop103 | 是 | 是 | 是 | HRegionServer |

tar -zxvf hbase-2.2.3-bin.tar.gz -C /soft/modulemv hbase-2.2.3 hbasesudo vim /etc/profile# HBase 环境变量配置

export HBASE_HOME=/hadoop/hbase

export PATH=$PATH:$HBASE_HOME/binsudo source /etc/profilevim conf/hbase-env.shexport JAVA_HOME=/soft/module/jdk1.8.0_161

export HBASE_MANAGES_ZK=false # line126vim conf/hbase-site.xml <!-- 每个regionServer的共享目录,用来持久化Hbase,默认情况下在/tmp/hbase下面 -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://mycluster/HBase</value>

<description>

一定要把hadoop中的core-site.xml和hdf-site.xml复制到hbase的conf目录下,才能成功解析该集群名称;如果是hadoop单namenode集群,配置写成hdfs://master:9000/hbase (master是namenode主机名)

</description>

</property>

<!-- hbase集群模式,false表示hbase的单机,true表示是分布式模式 -->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- 0.98 后的新变动,之前版本没有.port,默认端口为 60000 -->

<!-- hbase master节点的端口 -->

<property>

<name>hbase.master.port</name>

<value>16000</value>

</property>

<!-- hbase依赖的zk地址 -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop101,hadoop102,hadoop103</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/soft/module/zookeeper-3.5.7/zkData</value>

</property>

<!--出错后加的这玩意,不懂是啥 | 在分布式情况下, 一定设置为false-->

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>3) 修改 regionservers: vim regionservers

RegionServer是HBase集群运行在每个工作节点上的服务。它是整个HBase系统的关键所在,一方面它维护了Region的状态,提供了对于Region的管理和服务;另一方面,它与Master交互,上传Region的负载信息上传,参与Master的分布式协调管理

hadoop101

hadoop102

hadoop1034) 软连接 hadoop 配置文件到 HBase:

ln -s /soft/module/hadoop-2.9.2/etc/hadoop/core-site.xml /soft/module/hbase/conf/core-site.xml

ln -s /soft/module/hadoop-2.9.2/etc/hadoop/hdfs-site.xml /soft/module/hbase/conf/hdfs-site.xml

5) 分发hbase文件 xsync.sh hbase

bin/zkServer.sh startstart-dfs.sh /soft/module/hadoop-2.9.2/sbin/start-yarn.shbin/hbase-daemon.sh start masterbin/hbase-daemon.sh start regionserverbin/start-hbase.shbin/stop-hbase.sh1)

java.lang.IllegalStateException: The procedure WAL relies on the ability to hsync for proper operation during component failures, but the underlying filesystem does not support doing so. Please check the config value of ‘hbase.procedure.store.wal.use.hsync‘ to set the desired level of robustness and ensure the config value of ‘hbase.wal.dir‘ points to a FileSystem mount that can provide it.

hbase-site.xml增加配置

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>2)启动时报错

java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

java.lang.RuntimeException: Failed construction of Master:

class org.apache.hadoop.hbase.master.HMasterCommandLine$LocalHMasterorg.apache.htrace.SamplerBuilder

原因:jar包缺失

解决方法:把lib\client-facing-thirdparty包中的htrace-core-3.1.0-incubating.jar复制一个到lib包下即可

参考链接: https://blog.csdn.net/qq_35488412/article/details/78623518

标签:blog files const 配置环境 ring 情况下 config 没有 参考

原文地址:https://www.cnblogs.com/Hephaestus/p/12442553.html