
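Monitoring backend nodes with lvscare

# Environment: CentOS 7

# lvscare health-checks the backend masters: when a check fails it removes that backend IP from the load balancer, and when the backend recovers it adds the IP back

# This guide uses LVScare, an open-source project from sealyun

# Project: https://github.com/sealyun/LVScare

# Under heavy traffic with many nodes, haproxy uses a lot of CPU and its overall performance is limited

1. Set up the Go environment

# Download the Go binary package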

cd /usr/local/src/

wget https://dl.google.com/go/go1.14.linux-amd64.tar.gz

# Extract the Go binary package

tar -xvf go1.14.linux-amd64.tar.gz

# Move the extracted go directory to /usr/local/

mv go ../

# Configure environment variables: append the following lines to /etc/profile

vi /etc/profile

export GOPATH=/root/go

export GOBIN=/root/go/bin

PATH=$PATH:/usr/local/go/bin:$HOME/bin:$GOBIN

export PATH

# Apply the environment variables

source /etc/profile
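The manual vi edit above can also be scripted. The following is a minimal sketch that appends the same four lines only if they are not already present, so it is safe to run more than once. `append_once` and `configure_go_env` are helper names invented for this example; they are not part of Go or LVScare.

```shell
# append_once FILE LINE: append LINE to FILE unless an identical line exists.
append_once() {
    local file=$1 line=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# configure_go_env FILE: add the Go settings used in this article to FILE.
configure_go_env() {
    local file=$1
    append_once "$file" 'export GOPATH=/root/go'
    append_once "$file" 'export GOBIN=/root/go/bin'
    # Single quotes keep $PATH, $HOME and $GOBIN unexpanded in the file.
    append_once "$file" 'PATH=$PATH:/usr/local/go/bin:$HOME/bin:$GOBIN'
    append_once "$file" 'export PATH'
}
```

Usage (as root): `configure_go_env /etc/profile`, followed by `source /etc/profile`.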

# Verify that Go is installed correctly

go version

[root@localhost ]# go version

go version go1.14 linux/amd64

# Install git

yum install git -y

# Build LVScare

go get github.com/sealyun/lvscare

# After the build finishes, locate the resulting binary

which lvscare

[root@localhost src]# which lvscare

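/root/go/bin/lvscare

# Create the directories for the binary and the configuration file

mkdir -p /apps/lvscare/{bin,conf}

# Copy the binary to the path that ExecStart in the unit file below points to

cp /root/go/bin/lvscare /apps/lvscare/bin/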

cat << EOF | tee /apps/lvscare/conf/lvscare
LVSCARE_OPTS="care --vs 10.10.10.10:9443 --rs 192.168.2.10:6443 --rs 192.168.2.11:6443 --rs 192.168.2.12:6443 --health-path / --health-schem https"
EOF

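# Option notes:

# --vs the virtual IP (VIP) and port; preferably an IP that is not used anywhere else, with a new port

# --rs a backend server IP and the port to load-balance to

# --health-path the path requested by the health check

# --health-schem http or https

# For plain TCP load balancing, health-path and health-schem can be omitted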
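When the backend list changes often, the LVSCARE_OPTS line can be generated rather than typed. The sketch below assembles it from a VIP and any number of backends, using only the options described above. The function name `build_lvscare_opts` and the environment variables `HEALTH_SCHEM` and `HEALTH_PATH` are assumptions of this example, not LVScare features; leaving `HEALTH_SCHEM` unset yields the plain TCP form without health-check options.

```shell
# build_lvscare_opts VIP:PORT RS:PORT [RS:PORT ...]
# Prints an LVSCARE_OPTS="..." line for /apps/lvscare/conf/lvscare.
build_lvscare_opts() {
    local vs=$1 opts rs
    shift
    opts="care --vs $vs"
    for rs in "$@"; do
        opts="$opts --rs $rs"
    done
    # HEALTH_SCHEM / HEALTH_PATH are hypothetical variables read by this sketch.
    if [ -n "${HEALTH_SCHEM:-}" ]; then
        opts="$opts --health-path ${HEALTH_PATH:-/} --health-schem $HEALTH_SCHEM"
    fi
    printf 'LVSCARE_OPTS="%s"\n' "$opts"
}
```

Usage: `HEALTH_SCHEM=https build_lvscare_opts 10.10.10.10:9443 192.168.2.10:6443 192.168.2.11:6443 192.168.2.12:6443 > /apps/lvscare/conf/lvscare`.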

# Create the systemd unit file

cat << EOF | tee /usr/lib/systemd/system/lvscare.service

[Unit]

Description=lvscare

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

LimitNOFILE=1024000

LimitNPROC=1024000

LimitCORE=infinity

LimitMEMLOCK=infinity

EnvironmentFile=-/apps/lvscare/conf/lvscare

ExecStart=/apps/lvscare/bin/lvscare \$LVSCARE_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

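EOF

# In ipvs mode kube-proxy removes IPVS rules globally, so the VIP has to be added to its exclusion list

# Parameter to set: ipvs-exclude-cidrs=10.10.10.10/32

# For kubeadm deployments

# Note: after the kube-proxy ConfigMap was edited, kube-proxy kept failing to start, so the startup arguments were changed instead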

kubectl -n kube-system edit configmaps kube-proxy

# Content to change

ipvs:
  excludeCIDRs:
  - "10.10.10.10/32"   # the VIP
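To confirm that the exclusion is really in the configuration, the YAML can be checked from the command line. The sketch below reads a kube-proxy configuration on stdin and succeeds only if the given CIDR appears as a list item under `excludeCIDRs`. `has_exclude_cidr` is a name made up for this example, and the parser is deliberately simple: it assumes the block-list layout shown above and does not handle inline lists such as `excludeCIDRs: [...]`.

```shell
# has_exclude_cidr CIDR: exit 0 if CIDR is listed under excludeCIDRs in the
# YAML read from stdin, exit 1 otherwise.
has_exclude_cidr() {
    awk -v want="$1" '
        /excludeCIDRs:/ { in_list = 1; next }
        in_list && /^[ \t]*-[ \t]/ {
            item = $0
            sub(/^[ \t]*-[ \t]*/, "", item)   # drop the list marker
            sub(/[ \t]*#.*$/, "", item)       # drop a trailing comment
            gsub(/[" \t]/, "", item)          # drop quotes and spaces
            if (item == want) found = 1
            next
        }
        { in_list = 0 }                       # any other line ends the list
        END { exit (found ? 0 : 1) }
    '
}
```

Usage: `kubectl -n kube-system get configmap kube-proxy -o yaml | has_exclude_cidr 10.10.10.10/32 && echo "VIP is excluded"`.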

# For binary deployments, change the kube-proxy arguments as follows

- --logtostderr=true

- --v=4

- --feature-gates=SupportIPVSProxyMode=true

- --masquerade-all=true

- --proxy-mode=ipvs

- --ipvs-min-sync-period=5s

- --ipvs-sync-period=5s

- --ipvs-scheduler=rr

- --cluster-cidr=10.244.0.0/16

- --metrics-bind-address=0.0.0.0

- --ipvs-exclude-cidrs=10.10.10.10/32   # the VIP

# Enable start on boot

systemctl enable lvscare.service

# Start lvscare

systemctl start lvscare.service

# Check the service status

systemctl status lvscare.service

[root@localhost apps]# systemctl status lvscare.service

● lvscare.service - lvscare Kubelet

Loaded: loaded (/usr/lib/systemd/system/lvscare.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-03-13 15:57:35 CST; 5min ago

Main PID: 118865 (lvscare)

Tasks: 12 (limit: 204628)

Memory: 24.4M

CGroup: /system.slice/lvscare.service

└─118865 /apps/lvscare/bin/lvscare care --vs 10.10.10.10:9443 --rs 192.168.2.10:6443 --rs 192.168.2.11:6443 --rs 192.168.2.12:6443 --health-path / --health-schem https

Mar 13 16:02:40 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.11, port %!s(uint16=6443)

Mar 13 16:02:40 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.12, port %!s(uint16=6443)

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check svc ip: 10.96.0.10, port 53

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check svc ip: 10.10.10.10, port 9443

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.12, port %!s(uint16=6443)

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.11, port %!s(uint16=6443)

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.10, port %!s(uint16=6443)

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.12, port %!s(uint16=6443)

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.11, port %!s(uint16=6443)

Mar 13 16:02:45 localhost.localdomain lvscare[118865]: check realserver ip: 192.168.2.12, port %!s(uint16=6443)

[root@localhost apps]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.10.10.10:9443 rr

-> 192.168.2.10:6443 Masq 1 0 0

-> 192.168.2.11:6443 Masq 1 0 0

-> 192.168.2.12:6443 Masq 1 0 0

curl -k https://10.10.10.10:9443

[root@localhost apps]# curl -k https://10.10.10.10:9443

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {

},

"code": 403

}

The API server answers through the VIP (403 Forbidden for the anonymous user), so LVS is working
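The same check can be automated. Any HTTP status, including the 403 shown above, means the request reached an API server through the VIP; curl reports `000` for `%{http_code}` when it receives no HTTP response at all. The sketch below wraps that logic. `classify_http_code` and `probe_vip` are names invented for this example, and the 5 second timeout is an arbitrary choice.

```shell
# classify_http_code CODE: interpret the value printed by curl -w '%{http_code}'.
classify_http_code() {
    case $1 in
        000|'') echo unreachable ;;   # no HTTP response was received
        *)      echo reachable ;;     # any status (200, 401, 403, ...) counts
    esac
}

# probe_vip URL: print "reachable" or "unreachable" for URL.
probe_vip() {
    local code
    # -k skips certificate verification, as in the manual curl test above.
    code=$(curl -k -s -o /dev/null --max-time 5 -w '%{http_code}' "$1")
    classify_http_code "$code"
}
```

Usage: `probe_vip https://10.10.10.10:9443/`.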

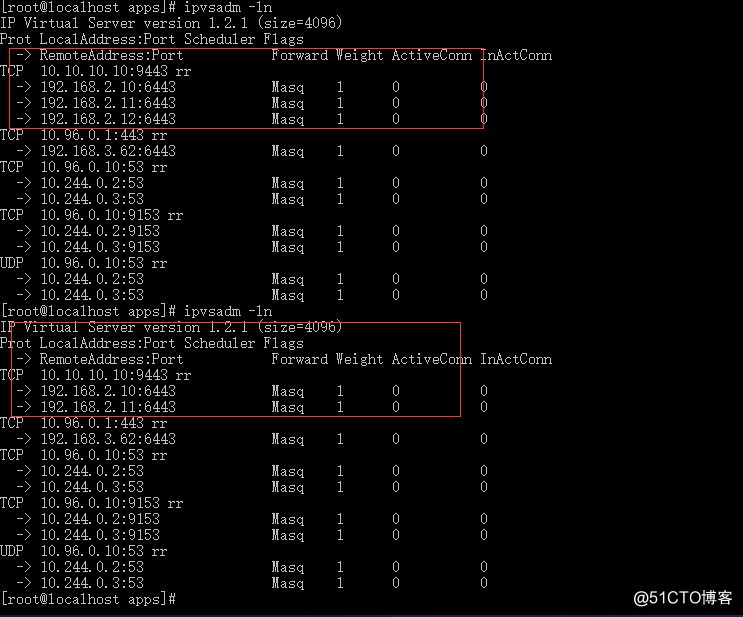

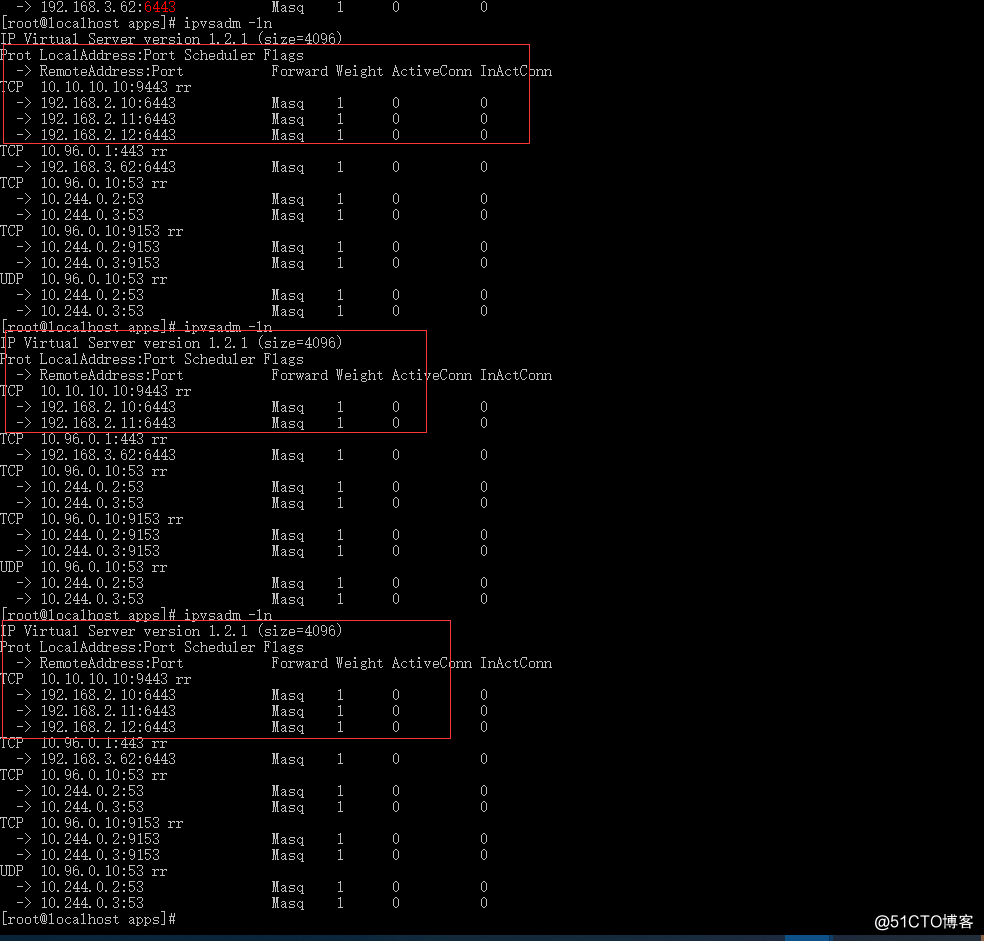

# Verify that LVScare promptly removes a failed node and automatically re-adds it after it recovers

[root@localhost apps]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.10.10.10:9443 rr

-> 192.168.2.10:6443 Masq 1 0 0

-> 192.168.2.11:6443 Masq 1 0 0

-> 192.168.2.12:6443 Masq 1 0 0

All backends on port 6443 are healthy

# Stop kube-apiserver on 192.168.2.12

service kube-apiserver stop

[root@k8s-master3 ~]# ps -ef | grep kube-apiserver

root 3770208 3769875 0 16:07 pts/0 00:00:00 grep --color=auto kube-apiserver

# 192.168.2.12:6443 has been removed from the IPVS table
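Rather than reading the table by eye, the removal and the later re-addition can be checked with a small filter over `ipvsadm -ln` output. The sketch below reports whether a given real server is currently listed under a given virtual service. `backend_state` is a name made up for this example, and it assumes the table layout shown earlier in this article.

```shell
# backend_state VIP:PORT RS:PORT: read `ipvsadm -ln` output on stdin and
# print "present" if RS is listed under the virtual service, else "absent".
backend_state() {
    awk -v vs="$1" -v rs="$2" '
        # A TCP/UDP line starts a virtual service section.
        $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }
        # Lines starting with "->" are real servers of the current section.
        in_vs && $1 == "->" && $2 == rs { found = 1 }
        END { print (found ? "present" : "absent") }
    '
}
```

Usage: `ipvsadm -ln | backend_state 10.10.10.10:9443 192.168.2.12:6443`. Running it before and after stopping kube-apiserver on a master should show the state change once the health check notices.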

# Start kube-apiserver on 192.168.2.12

[root@k8s-master3 ~]# service kube-apiserver start

Redirecting to /bin/systemctl start kube-apiserver.service

[root@k8s-master3 ~]# ps -ef | grep kube-apiserver

root 3771436 1 99 16:09 ? 00:00:02 /apps/k8s/bin/kube-apiserver -

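# The node has been added back

# Remember to deploy lvscare on every node

# The VIP is a /32 address, so it can only be reached from the local machine

Dropping haproxy+Keepalived: Kubernetes master high availability with kernel-level LVS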


Original article: https://blog.51cto.com/juestnow/2478068