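LVM (Logical Volume Manager) is a tool for managing disk partitions dynamically. It adds a logical layer between disk partitions and the file system, which makes resizing storage simple and efficient. With LVM, the capacity of a logical volume can be increased or reduced without destroying the data on it.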

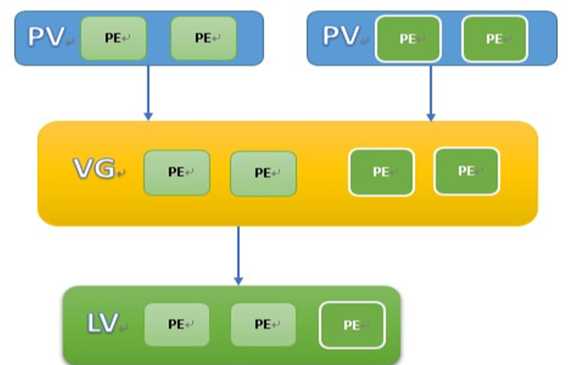

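Physical Volume (PV): a whole disk, a disk partition, or a RAID array.

Volume Group (VG): a pool made up of one or more PVs. A VG can be treated as a single logical disk.

Logical Volume (LV): a logical partition carved out of a VG.

PE (Physical Extent): each physical volume is divided into basic units called PEs. Every PE has a unique number and is the smallest unit that LVM can address. The PE size is configurable and defaults to 4 MiB.

LE (Logical Extent): each logical volume is divided into addressable basic units called LEs. Within one volume group, an LE is the same size as a PE, and the two map one to one.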
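Because space is handed out in whole extents, a requested size is rounded up to a multiple of the PE size. The sketch below only illustrates that arithmetic, assuming the default 4 MiB PE size; extents_for_mib is a made-up helper name, not an LVM command.

```shell
# Number of extents needed to hold a given size in MiB, rounded up.
# extents_for_mib is a hypothetical helper for illustration only.
extents_for_mib() {
    size_mib=$1
    pe_mib=${2:-4}   # assumed default PE size of 4 MiB
    echo $(( (size_mib + pe_mib - 1) / pe_mib ))
}

extents_for_mib 100   # 25 extents
extents_for_mib 300   # 75 extents
extents_for_mib 10    # 3 extents, so 12 MiB would actually be allocated
```

The first two results match the extent counts that appear later in this walkthrough for the 100 MiB and 300 MiB sizes of lv0.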

Creating a logical volume

1. Prepare two new disks (sdb and sdc in the lsblk output below)

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─rhel-root 253:0 0 17G 0 lvm /

└─rhel-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 20G 0 disk

sdc 8:32 0 20G 0 disk

sr0 11:0 1 4.2G 0 rom /run/media/root/RHEL-7.6 Server.x86_64

2. Declare the physical disks as PVs (this works at the physical layer) so that the two newly added disks can be used by LVM

[root@promote ~]# pvcreate /dev/sdb /dev/sdc

Physical volume "/dev/sdb" successfully created.

Physical volume "/dev/sdc" successfully created.

3. Combine the physical volumes into a new logical disk, the volume group

Add both disks to the volume group myVG

[root@promote ~]# vgcreate myVG /dev/sdb /dev/sdc

Volume group "myVG" successfully created

Check the volume group status

[root@promote ~]# vgdisplay

--- Volume group ---

VG Name rhel

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size <19.00 GiB

PE Size 4.00 MiB

Total PE 4863

Alloc PE / Size 4863 / <19.00 GiB

Free PE / Size 0 / 0

VG UUID FxGwXE-2slI-Mk1r-3Dfe-EqUq-MkMK-MyPerA

--- Volume group ---

VG Name myVG

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 2

Act PV 2

VG Size 39.99 GiB

PE Size 4.00 MiB

Total PE 10238

Alloc PE / Size 0 / 0

Free PE / Size 10238 / 39.99 GiB

VG UUID AeQ4hc-OBVg-yPBO-Sw1b-o1sr-vehG-lntDPV
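The totals in the vgdisplay output above can be checked by hand. The sketch below assumes that each disk is exactly 20 GiB and that about 1 MiB at the start of each physical volume is set aside for the LVM label and metadata (a common default, not something the output above shows); usable_pes is a hypothetical helper name.

```shell
# Whole extents that fit on one PV after the reserved area is subtracted.
# usable_pes is a hypothetical helper; the 1 MiB reservation is an assumption.
usable_pes() {
    disk_mib=$1; reserved_mib=$2; pe_mib=$3
    echo $(( (disk_mib - reserved_mib) / pe_mib ))
}

per_disk=$(usable_pes 20480 1 4)   # one 20 GiB disk, 4 MiB extents
total_pe=$(( per_disk * 2 ))       # two PVs in myVG
echo "PEs per disk: $per_disk"
echo "Total PE:     $total_pe"
echo "VG size MiB:  $(( total_pe * 4 ))"
```

Under those assumptions the script reports 10238 extents and 40952 MiB, which is about 39.99 GiB, in line with the Total PE and VG Size fields reported for myVG.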

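4. Carve logical volumes out of the volume group (this is done inside LVM)

Create a 100 MB logical volume. The size of a logical volume can be given in two ways: as a capacity, with the -L option, or as a number of extents, with the -l option.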

[root@promote ~]# lvcreate -n lv0 -L 100M myVG

Logical volume "lv0" created.

[root@promote ~]# lvdisplay

--- Logical volume ---

LV Path /dev/rhel/root

LV Name root

VG Name rhel

LV UUID WYj3d9-IIEq-f1HK-K2Y6-t4R6-5bwn-JJShp4

LV Write Access read/write

LV Creation host, time localhost, 2020-03-24 19:55:51 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

--- Logical volume ---

LV Path /dev/rhel/swap

LV Name swap

VG Name rhel

LV UUID 0mj0nq-qLDj-r8CM-Ftr8-NQTJ-uX6O-yW3QI9

LV Write Access read/write

LV Creation host, time localhost, 2020-03-24 19:55:51 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/myVG/lv0

LV Name lv0

VG Name myVG

LV UUID h0Ko7Q-t20v-iZfq-zObG-rc21-uMCK-3R2tHI

LV Write Access read/write

LV Creation host, time promote.cache-dns.local, 2020-04-18 11:39:13 +0800

LV Status available

# open 0

LV Size 100.00 MiB

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2
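Step 4 notes that lvcreate accepts either -L (a capacity) or -l (a number of extents). As a sketch, with the 4 MiB PE size reported by vgdisplay, the two commands built below would request the same 100 MiB volume. The helper only prints the commands, so nothing on disk is changed; its name and the print-only approach are illustrative choices, not part of the original walkthrough.

```shell
# Print, without running, two equivalent ways of requesting the same LV.
# show_lvcreate_pair is a hypothetical helper; it assumes the size is an
# exact multiple of the PE size.
show_lvcreate_pair() {
    name=$1; vg=$2; size_mib=$3; pe_mib=$4
    echo "lvcreate -n $name -L ${size_mib}M $vg"
    echo "lvcreate -n $name -l $(( size_mib / pe_mib )) $vg"
}

show_lvcreate_pair lv0 myVG 100 4
```

The second printed command uses -l 25, the same 25 extents that lvdisplay reports as Current LE for lv0.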

5. Format the logical volume and mount it on a directory

[root@promote ~]# mkfs.ext4 /dev/myVG/lv0

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=1024 (log=0)

Fragment size=1024 (log=0)

Stride=0 blocks, Stripe width=0 blocks

25688 inodes, 102400 blocks

5120 blocks (5.00%) reserved for the super user

First data block=1

Maximum filesystem blocks=33685504

13 block groups

8192 blocks per group, 8192 fragments per group

1976 inodes per group

Superblock backups stored on blocks:

8193, 24577, 40961, 57345, 73729

Allocating group tables: done

Writing inode tables: done

Creating journal (4096 blocks): done

Writing superblocks and filesystem accounting information: done

[root@promote ~]# mkdir /lv0

[root@promote ~]# echo "/dev/myVG/lv0 /lv0 ext4 defaults 0 0" >> /etc/fstab

[root@promote ~]# mount -a

[root@promote ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 17G 8.6G 8.4G 51% /

devtmpfs 894M 0 894M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 11M 900M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/sda1 1014M 178M 837M 18% /boot

tmpfs 182M 4.0K 182M 1% /run/user/42

tmpfs 182M 28K 182M 1% /run/user/0

/dev/sr0 4.2G 4.2G 0 100% /run/media/root/RHEL-7.6 Server.x86_64

/dev/mapper/myVG-lv0 93M 1.6M 85M 2% /lv0
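The echo ... >> /etc/fstab line in step 5 appends a new entry every time it is run, so repeating the step would leave duplicate lines. A minimal sketch of a more careful variant follows; add_fstab_entry is a hypothetical helper, and it takes the file path as a parameter so that it can be tried on a scratch file rather than the real /etc/fstab.

```shell
# Append an fstab entry only if the device is not already listed.
# add_fstab_entry is a hypothetical helper. The device name is used as a
# grep pattern, so it is assumed to contain no regex metacharacters.
add_fstab_entry() {
    fstab=$1; device=$2; mountpoint=$3; fstype=$4
    if grep -q "^$device[[:space:]]" "$fstab" 2>/dev/null; then
        echo "entry for $device already present"
    else
        echo "$device $mountpoint $fstype defaults 0 0" >> "$fstab"
        echo "entry for $device added"
    fi
}

scratch=$(mktemp)
add_fstab_entry "$scratch" /dev/myVG/lv0 /lv0 ext4
add_fstab_entry "$scratch" /dev/myVG/lv0 /lv0 ext4   # second call changes nothing
rm -f "$scratch"
```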

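Extending a logical volume (unmount the file system from its mount point first; the e2fsck check below needs it unmounted)

1. Unmount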

[root@promote ~]# umount /lv0

2. Extend the logical volume to 300M

[root@promote ~]# lvextend -L 300M /dev/myVG/lv0

Size of logical volume myVG/lv0 changed from 100.00 MiB (25 extents) to 300.00 MiB (75 extents).

Logical volume myVG/lv0 successfully resized.

3. Check the file system's integrity and grow the file system to the new volume size

[root@promote ~]# e2fsck -f /dev/myVG/lv0

e2fsck 1.42.9 (28-Dec-2013)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/myVG/lv0: 11/25688 files (9.1% non-contiguous), 8896/102400 blocks

[root@promote ~]# resize2fs /dev/myVG/lv0

resize2fs 1.42.9 (28-Dec-2013)

Resizing the filesystem on /dev/myVG/lv0 to 307200 (1k) blocks.

The filesystem on /dev/myVG/lv0 is now 307200 blocks long.

4. Remount the device and check the mount status

[root@promote ~]# mount -a

[root@promote ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 17G 8.6G 8.4G 51% /

devtmpfs 894M 0 894M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 11M 900M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/sda1 1014M 178M 837M 18% /boot

tmpfs 182M 4.0K 182M 1% /run/user/42

tmpfs 182M 28K 182M 1% /run/user/0

/dev/sr0 4.2G 4.2G 0 100% /run/media/root/RHEL-7.6 Server.x86_64

/dev/mapper/myVG-lv0 287M 2.0M 266M 1% /lv0
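lvextend also has a -r (--resizefs) option that resizes the file system together with the logical volume, which would fold steps 2 and 3 into one command. This is an alternative, not what the session above ran. The sketch below only prints the command unless DRY_RUN=0 is set; run and extend_lv are hypothetical wrapper names.

```shell
# Print a command by default; execute it only when DRY_RUN=0 is set.
# run and extend_lv are hypothetical helpers for illustration.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Grow an LV and its file system in one step using lvextend -r.
extend_lv() {
    lv=$1; new_size=$2
    run lvextend -r -L "$new_size" "$lv"
}

extend_lv /dev/myVG/lv0 300M
```

Keeping the print-only mode as the default makes it possible to review the exact command before anything is changed on a real system.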

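Shrinking a logical volume

Shrinking carries a higher risk of data loss than extending, so back up the data beforehand. The file system's integrity must also be checked before an LVM volume is shrunk.

1. Unmount the file system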

[root@promote ~]# umount /lv0

2. Check the file system's integrity

[root@promote ~]# e2fsck -f /dev/myVG/lv0

e2fsck 1.42.9 (28-Dec-2013)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/myVG/lv0: 11/75088 files (9.1% non-contiguous), 15637/307200 blocks

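3. Shrink the logical volume. The file system (the logical boundary) must be shrunk first, and only then the logical volume itself (the physical boundary)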

[root@promote ~]# resize2fs /dev/myVG/lv0 100M

resize2fs 1.42.9 (28-Dec-2013)

Resizing the filesystem on /dev/myVG/lv0 to 102400 (1k) blocks.

The filesystem on /dev/myVG/lv0 is now 102400 blocks long.

[root@promote ~]# lvreduce -L 100M /dev/myVG/lv0

WARNING: Reducing active logical volume to 100.00 MiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce myVG/lv0? [y/n]: y

Size of logical volume myVG/lv0 changed from 300.00 MiB (75 extents) to 100.00 MiB (25 extents).

Logical volume myVG/lv0 successfully resized.

4. Remount and check the mount status

[root@promote ~]# mount -a

[root@promote ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 17G 8.6G 8.4G 51% /

devtmpfs 894M 0 894M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 11M 900M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/sda1 1014M 178M 837M 18% /boot

tmpfs 182M 4.0K 182M 1% /run/user/42

tmpfs 182M 28K 182M 1% /run/user/0

/dev/sr0 4.2G 4.2G 0 100% /run/media/root/RHEL-7.6 Server.x86_64

/dev/mapper/myVG-lv0 93M 1.6M 85M 2% /lv0
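The order in step 3 matters because the logical volume must never become smaller than the file system it holds. The sketch below checks that condition using the block counts printed by resize2fs in this section; fs_fits_in_lv is a hypothetical helper, not an LVM or e2fsprogs tool.

```shell
# Succeeds if a file system of <blocks> blocks of <block_bytes> bytes
# fits inside a logical volume of <lv_mib> MiB.
# fs_fits_in_lv is a hypothetical helper for illustration only.
fs_fits_in_lv() {
    blocks=$1; block_bytes=$2; lv_mib=$3
    fs_bytes=$(( blocks * block_bytes ))
    lv_bytes=$(( lv_mib * 1024 * 1024 ))
    [ "$fs_bytes" -le "$lv_bytes" ]
}

# After "resize2fs ... 100M": 102400 blocks of 1 KiB
if fs_fits_in_lv 102400 1024 100; then
    echo "safe to lvreduce to 100M"
fi

# Before resize2fs: 307200 blocks of 1 KiB
if ! fs_fits_in_lv 307200 1024 100; then
    echo "shrink the file system first"
fi
```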

Logical volume snapshots

Two characteristics of LVM snapshot volumes

1. Write a file into the directory where lv0 is mounted

[root@promote ~]# echo "Hello, World" > /lv0/readme.txt

[root@promote ~]# ls /lv0

lost+found readme.txt

2. Create a snapshot volume with the -s option, set its size with -L, and name the logical volume to snapshot at the end of the command

[root@promote ~]# lvcreate -L 100M -s -n SNAP /dev/myVG/lv0

Logical volume "SNAP" created.

[root@promote ~]# lvdisplay

--- Logical volume ---

LV Path /dev/rhel/root

LV Name root

VG Name rhel

LV UUID WYj3d9-IIEq-f1HK-K2Y6-t4R6-5bwn-JJShp4

LV Write Access read/write

LV Creation host, time localhost, 2020-03-24 19:55:51 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

--- Logical volume ---

LV Path /dev/rhel/swap

LV Name swap

VG Name rhel

LV UUID 0mj0nq-qLDj-r8CM-Ftr8-NQTJ-uX6O-yW3QI9

LV Write Access read/write

LV Creation host, time localhost, 2020-03-24 19:55:51 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/myVG/lv0

LV Name lv0

VG Name myVG

LV UUID h0Ko7Q-t20v-iZfq-zObG-rc21-uMCK-3R2tHI

LV Write Access read/write

LV Creation host, time promote.cache-dns.local, 2020-04-18 11:39:13 +0800

LV snapshot status source of

SNAP [active]

LV Status available

# open 1

LV Size 100.00 MiB

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/myVG/SNAP

LV Name SNAP

VG Name myVG

LV UUID vs61AA-nHnS-QxxE-Aclj-nB8x-32lY-0dlFM7

LV Write Access read/write

LV Creation host, time promote.cache-dns.local, 2020-04-18 12:01:00 +0800

LV snapshot status active destination for lv0

LV Status available

# open 0

LV Size 100.00 MiB

Current LE 25

COW-table size 100.00 MiB

COW-table LE 25

Allocated to snapshot 0.01%

Snapshot chunk size 4.00 KiB

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5

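3. Create a 100 MB junk file in the directory where lv0 is mounted (dd stops at about 94 MB because the file system is full), then look at the snapshot volume again: its space usage has gone up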

[root@promote ~]# dd if=/dev/zero of=/lv0/files count=1 bs=100M

dd: error writing ‘/lv0/files’: No space left on device

1+0 records in

0+0 records out

93646848 bytes (94 MB) copied, 3.25314 s, 28.8 MB/s

[root@promote ~]# lvdisplay

--- Logical volume ---

LV Path /dev/rhel/root

LV Name root

VG Name rhel

LV UUID WYj3d9-IIEq-f1HK-K2Y6-t4R6-5bwn-JJShp4

LV Write Access read/write

LV Creation host, time localhost, 2020-03-24 19:55:51 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

--- Logical volume ---

LV Path /dev/rhel/swap

LV Name swap

VG Name rhel

LV UUID 0mj0nq-qLDj-r8CM-Ftr8-NQTJ-uX6O-yW3QI9

LV Write Access read/write

LV Creation host, time localhost, 2020-03-24 19:55:51 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/myVG/lv0

LV Name lv0

VG Name myVG

LV UUID h0Ko7Q-t20v-iZfq-zObG-rc21-uMCK-3R2tHI

LV Write Access read/write

LV Creation host, time promote.cache-dns.local, 2020-04-18 11:39:13 +0800

LV snapshot status source of

SNAP [active]

LV Status available

# open 1

LV Size 100.00 MiB

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/myVG/SNAP

LV Name SNAP

VG Name myVG

LV UUID vs61AA-nHnS-QxxE-Aclj-nB8x-32lY-0dlFM7

LV Write Access read/write

LV Creation host, time promote.cache-dns.local, 2020-04-18 12:01:00 +0800

LV snapshot status active destination for lv0

LV Status available

# open 0

LV Size 100.00 MiB

Current LE 25

COW-table size 100.00 MiB

COW-table LE 25

Allocated to snapshot 89.79%

Snapshot chunk size 4.00 KiB

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5
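The "Allocated to snapshot" field is worth watching, since a snapshot of this kind stops being usable once its copy-on-write space is completely full. The sketch below pulls that percentage out of lvdisplay-style text and compares it with a threshold; snapshot_pct, snapshot_over, and the 80% threshold are assumptions made for this example.

```shell
# Extract the "Allocated to snapshot" percentage from lvdisplay-style
# text on stdin. snapshot_pct is a hypothetical helper.
snapshot_pct() {
    awk '/Allocated to snapshot/ { sub(/%/, "", $4); print $4 }'
}

# Succeeds when the usage (e.g. 89.79) has reached an integer threshold.
# Only the integer part of the percentage is compared.
snapshot_over() {
    pct=$1; threshold=$2
    [ "${pct%.*}" -ge "$threshold" ]
}

# Sample input copied from the lvdisplay output above.
usage=$(printf '  Allocated to snapshot  89.79%%\n' | snapshot_pct)
if snapshot_over "$usage" 80; then
    echo "snapshot is ${usage}% full"
fi
```

On a live system the sample line could be replaced by piping the output of lvdisplay /dev/myVG/SNAP into snapshot_pct.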

4. Restore the logical volume from the snapshot (unmount the logical volume from its directory first)

[root@promote ~]# umount /lv0

[root@promote ~]# lvconvert --merge /dev/myVG/SNAP

Merging of volume myVG/SNAP started.

myVG/lv0: Merged: 92.96%

5. Remount and check: the junk file is gone and readme.txt is still there

[root@promote ~]# mount -a

[root@promote ~]# ls /lv0

lost+found readme.txt

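Removing a logical volume

1. Unmount the logical volume from its directory and delete the persistent entry for the device from the configuration file (/etc/fstab)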

[root@promote ~]# umount /lv0

2. Remove the logical volume

[root@promote ~]# lvremove /dev/myVG/lv0

Do you really want to remove active logical volume myVG/lv0? [y/n]: y

Logical volume "lv0" successfully removed

3. Remove the volume group

[root@promote ~]# vgremove myVG

Volume group "myVG" successfully removed

4. Remove the physical volumes

[root@promote ~]# pvremove /dev/sdb /dev/sdc

Labels on physical volume "/dev/sdb" successfully wiped.

Labels on physical volume "/dev/sdc" successfully wiped.
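Step 1 of the removal mentions deleting the persistent entry from the configuration file, but the session only shows the umount. A minimal sketch of that clean-up follows, run against a scratch file with made-up sample lines; remove_fstab_entry is a hypothetical helper, and on a real system it would be wise to back up /etc/fstab before pointing it there.

```shell
# Remove every line whose first field is the given device from an
# fstab-style file. remove_fstab_entry is a hypothetical helper.
remove_fstab_entry() {
    fstab=$1; device=$2
    filtered=$(mktemp)
    # Keep all lines whose first field is not the device.
    awk -v dev="$device" '$1 != dev' "$fstab" > "$filtered"
    # Overwrite the original in place so its permissions are preserved.
    cat "$filtered" > "$fstab"
    rm -f "$filtered"
}

# Scratch copy with sample entries (the first line is invented for the demo).
copy=$(mktemp)
printf '%s\n' '/dev/sda1 /boot xfs defaults 0 0' \
              '/dev/myVG/lv0 /lv0 ext4 defaults 0 0' > "$copy"
remove_fstab_entry "$copy" /dev/myVG/lv0
cat "$copy"
rm -f "$copy"
```

Without this clean-up, the mount -a used throughout this walkthrough would report an error for the missing /dev/myVG/lv0 device.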

Notes:

Original article: https://www.cnblogs.com/wanao/p/12725041.html