Tags: direct nsf eth error atomic cto hang metadata apr

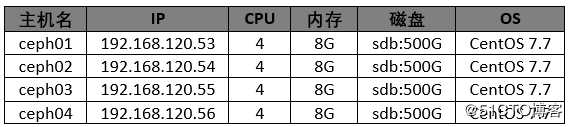

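Because the packages from the official Red Hat site could not be obtained, the CentOS software repository is used instead. The environment is as follows: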

[root@ceph01 ~]# vi /etc/yum.repos.d/CentOS-Base.repo

[Storage]

name=CentOS-$releasever - Storage

baseurl=https://mirrors.huaweicloud.com/centos/7.7.1908/storage/x86_64/ceph-nautilus/

gpgcheck=0

enabled=1

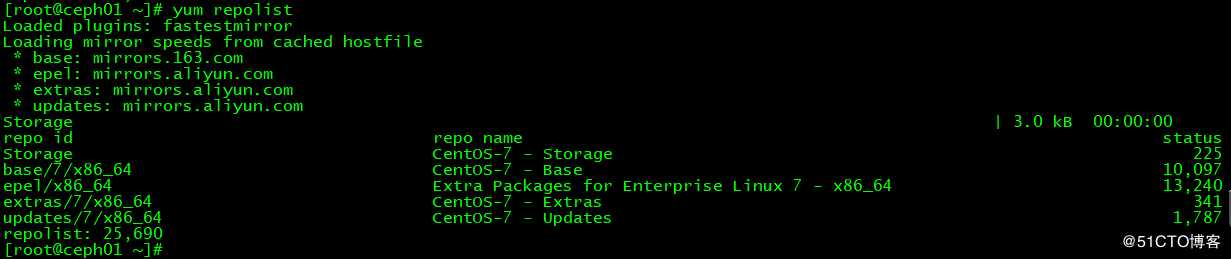

[root@ceph01 ~]# yum repolist
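The repository stanza above can also be written without opening an editor, which is convenient when the same file has to be placed on all four nodes. The following is a minimal sketch, not the author's method: the function name `write_storage_repo` is hypothetical, and it writes a standalone file passed as an argument rather than editing CentOS-Base.repo.

```shell
#!/usr/bin/env bash
# Sketch: write the [Storage] repo stanza non-interactively.
# write_storage_repo is a hypothetical helper name; the target path is
# supplied by the caller (the article edits CentOS-Base.repo by hand instead).
write_storage_repo() {
    local repo_file="$1"
    # The quoted 'EOF' keeps $releasever literal, so yum expands it later
    # instead of the shell expanding it (to an empty string) now.
    cat > "$repo_file" <<'EOF'
[Storage]
name=CentOS-$releasever - Storage
baseurl=https://mirrors.huaweicloud.com/centos/7.7.1908/storage/x86_64/ceph-nautilus/
gpgcheck=0
enabled=1
EOF
}
```

For example, `write_storage_repo /etc/yum.repos.d/ceph-storage.repo` (the file name is an assumption) followed by `yum repolist` should list the Storage repository.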

This step sets up passwordless SSH trust between the nodes; refer to other articles for the details.
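For readers who want a starting point, the SSH trust setup can be sketched as follows. This is an assumption about a typical approach, not the procedure the author used: it generates an RSA key pair without a passphrase for root on the management node and copies the public key to each host named in this article. The function name `setup_ssh_trust` and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: distribute the local root public key to every cluster node.
NODES="ceph01 ceph02 ceph03 ceph04"   # host names used in this article

setup_ssh_trust() {
    # With DRY_RUN=1 the commands are printed instead of executed.
    local run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi

    # Create a key pair only if none exists yet. The empty passphrase is an
    # assumption made for unattended use.
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        $run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
    fi

    local node
    for node in $NODES; do
        # ssh-copy-id asks for the remote root password once per node.
        $run ssh-copy-id "root@${node}"
    done
}
```

Running `DRY_RUN=1 setup_ssh_trust` first shows what would be executed; calling `setup_ssh_trust` without it performs the key distribution.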

Here ceph01 serves as the Ansible management node.

[root@ceph01 ~]# yum install ceph-ansible

Change to the /usr/share/ceph-ansible directory and create all.yml, osds.yml, and site.yml in turn.

[root@ceph01 ceph-ansible]# cd group_vars/

[root@ceph01 group_vars]# cp all.yml.sample all.yml

[root@ceph01 group_vars]# vi all.yml

dummy:

fetch_directory: ~/ceph-ansible-keys

ceph_origin: distro

ceph_repository: dummy

cephx: true

monitor_interface: eth0

journal_size: 5120

public_network: 192.168.120.0/24

dashboard_admin_user: admin

dashboard_admin_password: redhat

grafana_admin_user: admin

grafana_admin_password: redhat
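Because all.yml is copied from a sample file, the handful of active settings can be hard to spot among comments. A small sketch for printing only the active lines is shown below; the function name `show_effective_settings` is hypothetical, and it assumes the sample consists mostly of commented-out lines.

```shell
#!/usr/bin/env bash
# Sketch: print only the active (non-comment, non-blank) lines of a file,
# to double-check which variables are actually set.
show_effective_settings() {
    # Drop lines that are empty or whose first non-space character is '#'.
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

For example, `show_effective_settings group_vars/all.yml` should print only settings such as the ones listed above.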

[root@ceph01 group_vars]# cp osds.yml.sample osds.yml

[root@ceph01 group_vars]# vi osds.yml

dummy:

devices:

- /dev/sdb

osd_auto_discovery: true

[root@ceph01 group_vars]# cd ../

[root@ceph01 ceph-ansible]# cp site.yml.sample site.yml

The inventory file here is /etc/ansible/hosts; add the following content:

[root@ceph01 ceph-ansible]# vi /etc/ansible/hosts

[mons]

ceph01

ceph02

ceph03

[osds]

ceph01

ceph02

ceph03

ceph04

[mgrs]

ceph01

ceph02

[grafana-server]

ceph03

[root@ceph01 ceph-ansible]# ansible all -m ping

[WARNING]: log file at /root/ansible/ansible.log is not writeable and we cannot create it, aborting

[DEPRECATION WARNING]: The TRANSFORM_INVALID_GROUP_CHARS settings is set to allow bad characters in group names by

default, this will change, but still be user configurable on deprecation. This feature will be removed in version 2.10.

Deprecation warnings can be disabled by setting deprecation_warnings=False in ansible.cfg.

[WARNING]: Invalid characters were found in group names but not replaced, use -vvvv to see details

ceph03 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

ceph04 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

ceph02 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

ceph01 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

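}

Before running the playbook, be sure to change to the ceph-ansible directory that holds site.yml and group_vars/all.yml; otherwise the run fails with an error.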
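A simple guard can catch a wrong working directory before the playbook starts. The sketch below is an illustration only: `preflight_check` is a hypothetical helper, and the list of required files is taken from the files created earlier in this article.

```shell
#!/usr/bin/env bash
# Sketch: refuse to continue unless the files created earlier are present
# under the given ceph-ansible directory.
preflight_check() {
    local dir="$1" f
    for f in site.yml group_vars/all.yml group_vars/osds.yml; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            return 1
        fi
    done
}
```

It could be combined with the run itself, for example `preflight_check /usr/share/ceph-ansible && cd /usr/share/ceph-ansible && ansible-playbook site.yml`.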

[root@ceph01 ~]# cd /usr/share/ceph-ansible

[root@ceph01 ceph-ansible]# ansible-playbook site.yml

......

PLAY RECAP ***************************************************************************************************************

ceph01 : ok=353 changed=46 unreachable=0 failed=0 skipped=509 rescued=0 ignored=0

ceph02 : ok=299 changed=42 unreachable=0 failed=0 skipped=451 rescued=0 ignored=0

ceph03 : ok=260 changed=53 unreachable=0 failed=0 skipped=385 rescued=0 ignored=0

ceph04 : ok=141 changed=23 unreachable=0 failed=0 skipped=264 rescued=0 ignored=0

INSTALLER STATUS *********************************************************************************************************

Install Ceph Monitor : Complete (0:01:21)

Install Ceph Manager : Complete (0:02:19)

Install Ceph OSD : Complete (0:01:36)

Install Ceph Dashboard : Complete (0:01:11)

Install Ceph Grafana : Complete (0:03:12)

Install Ceph Node Exporter : Complete (0:01:54)

Friday 24 April 2020 12:20:47 +0800 (0:00:00.123) 0:38:03.733 **********

===============================================================================

ceph-common : install redhat ceph packages --------------------------------------------------------------------- 1267.52s

ceph-infra : install firewalld python binding ------------------------------------------------------------------- 131.81s

ceph-grafana : wait for grafana to start ------------------------------------------------------------------------ 106.77s

ceph-mgr : install ceph-mgr packages on RedHat or SUSE ----------------------------------------------------------- 62.62s

ceph-container-engine : install container packages --------------------------------------------------------------- 60.26s

check for python ------------------------------------------------------------------------------------------------- 21.11s

ceph-common : install centos dependencies ------------------------------------------------------------------------ 19.93s

ceph-grafana : install ceph-grafana-dashboards package on RedHat or SUSE ----------------------------------------- 19.47s

gather and delegate facts ---------------------------------------------------------------------------------------- 12.02s

ceph-osd : use ceph-volume lvm batch to create bluestore osds ---------------------------------------------------- 10.15s

ceph-facts : generate cluster fsid -------------------------------------------------------------------------------- 9.09s

ceph-mgr : wait for all mgr to be up ------------------------------------------------------------------------------ 8.02s

ceph-mon : fetch ceph initial keys -------------------------------------------------------------------------------- 7.40s

ceph-dashboard : set or update dashboard admin username and password ---------------------------------------------- 6.92s

ceph-facts : check if it is atomic host --------------------------------------------------------------------------- 6.21s

ceph-container-engine : start container service ------------------------------------------------------------------- 5.94s

ceph-mgr : create ceph mgr keyring(s) on a mon node --------------------------------------------------------------- 4.98s

ceph-mgr : add modules to ceph-mgr -------------------------------------------------------------------------------- 4.95s

ceph-facts : check for a ceph mon socket -------------------------------------------------------------------------- 4.39s

ceph-mgr : disable ceph mgr enabled modules ----------------------------------------------------------------------- 4.34s

After the deployment completes, verify the cluster status with the following command:

[root@ceph01 ceph-ansible]# ceph -s

cluster:

id: ba2c9862-473f-4ca9-ae28-e907c1cbda45

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph01,ceph02,ceph03 (age 14m)

mgr: ceph01(active, since 4m), standbys: ceph02

osd: 4 osds: 4 up (since 10m), 4 in (since 10m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 4.0 GiB used, 1.9 TiB / 1.9 TiB avail

pgs:

To add the MDS service, first append an [mdss] group to the inventory file:

[root@ceph01 ceph-ansible]# vi /etc/ansible/hosts

[mdss]

ceph01

ceph02

ceph03

ceph04
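Since the later playbook run is restricted with `--limit mdss`, it can help to confirm which hosts the group contains before starting. The following sketch reads an INI-style inventory with awk; `list_group_hosts` is a hypothetical helper and handles only the simple one-host-per-line layout used in this article.

```shell
#!/usr/bin/env bash
# Sketch: print the hosts listed under one group of an INI-style inventory.
list_group_hosts() {
    local inventory="$1" group="$2"
    awk -v group="[$group]" '
        # A section header switches the state on or off.
        /^\[/ { in_group = ($1 == group); next }
        # Inside the wanted group, print non-empty, non-comment host lines.
        in_group && NF && $1 !~ /^[#;]/ { print $1 }
    ' "$inventory"
}
```

For example, `list_group_hosts /etc/ansible/hosts mdss` should print ceph01 through ceph04. Ansible itself offers a similar check with `ansible mdss --list-hosts`.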

[root@ceph01 ceph-ansible]# cp group_vars/mdss.yml.sample group_vars/mdss.yml

[root@ceph01 ceph-ansible]# ansible-playbook site.yml --limit mdss

......

PLAY RECAP ***************************************************************************************************************

ceph01 : ok=419 changed=20 unreachable=0 failed=0 skipped=561 rescued=0 ignored=0

ceph02 : ok=344 changed=16 unreachable=0 failed=0 skipped=498 rescued=0 ignored=0

ceph03 : ok=301 changed=16 unreachable=0 failed=0 skipped=435 rescued=0 ignored=0

ceph04 : ok=186 changed=11 unreachable=0 failed=0 skipped=325 rescued=0 ignored=0

INSTALLER STATUS *********************************************************************************************************

Install Ceph Monitor : Complete (0:01:09)

Install Ceph Manager : Complete (0:00:59)

Install Ceph OSD : Complete (0:01:34)

Install Ceph MDS : Complete (0:02:01)

Install Ceph Dashboard : Complete (0:01:24)

Install Ceph Grafana : Complete (0:01:10)

Install Ceph Node Exporter : Complete (0:00:56)

Friday 24 April 2020 15:02:58 +0800 (0:00:00.121) 0:11:36.111 **********

===============================================================================

ceph-grafana : wait for grafana to start ------------------------------------------------------------------------- 35.44s

ceph-mds : install ceph-mds package on redhat or suse ------------------------------------------------------------ 16.13s

ceph-infra : install firewalld python binding -------------------------------------------------------------------- 15.14s

ceph-dashboard : set or update dashboard admin username and password ---------------------------------------------- 7.62s

gather and delegate facts ----------------------------------------------------------------------------------------- 6.37s

ceph-mds : create filesystem pools -------------------------------------------------------------------------------- 6.11s

ceph-mds : assign application to cephfs pools --------------------------------------------------------------------- 5.12s

ceph-mds : set pg_autoscale_mode value on pool(s) ----------------------------------------------------------------- 4.83s

ceph-mds : customize pool size ------------------------------------------------------------------------------------ 4.17s

ceph-mds : get keys from monitors --------------------------------------------------------------------------------- 3.40s

ceph-config : look up for ceph-volume rejected devices ------------------------------------------------------------ 3.34s

ceph-osd : get keys from monitors --------------------------------------------------------------------------------- 3.08s

ceph-mgr : create ceph mgr keyring(s) on a mon node --------------------------------------------------------------- 3.01s

ceph-config : look up for ceph-volume rejected devices ------------------------------------------------------------ 2.80s

ceph-config : look up for ceph-volume rejected devices ------------------------------------------------------------ 2.71s

check for python -------------------------------------------------------------------------------------------------- 2.70s

ceph-osd : apply operating system tuning -------------------------------------------------------------------------- 2.67s

ceph-osd : copy ceph key(s) if needed ----------------------------------------------------------------------------- 2.56s

ceph-mds : copy ceph key(s) if needed ----------------------------------------------------------------------------- 2.44s

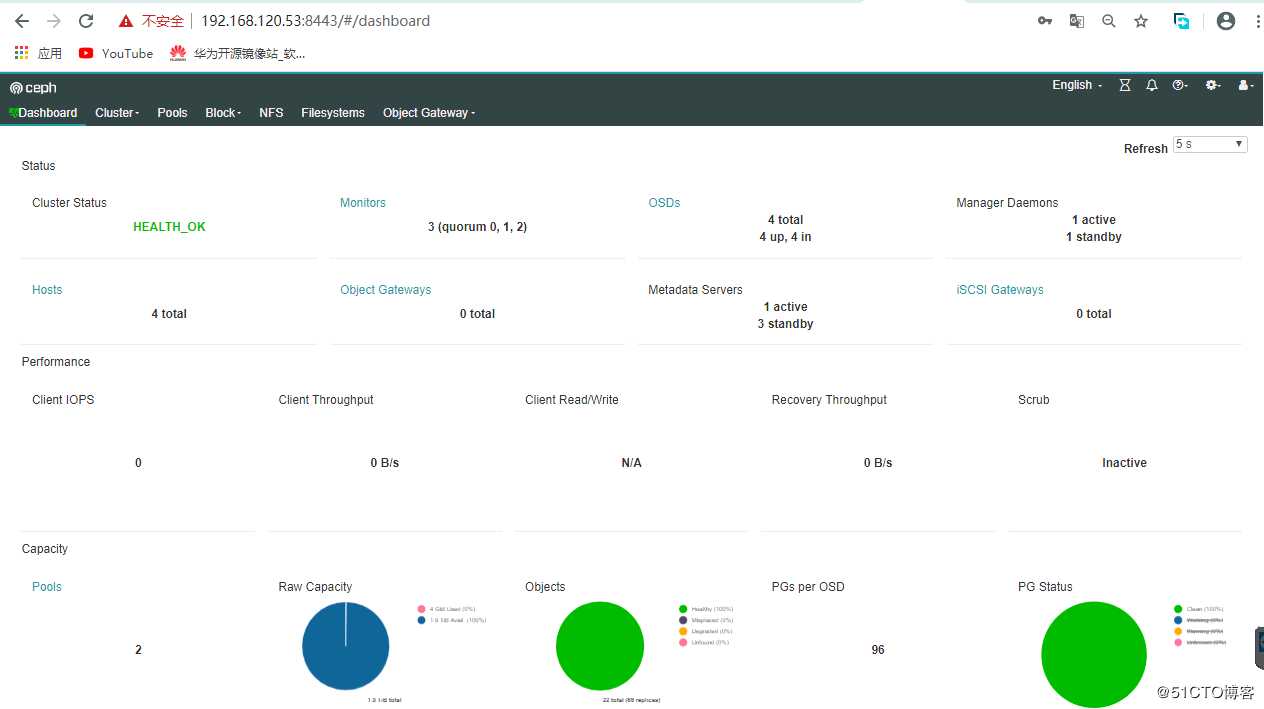

ceph-osd : set noup flag ------------------------------------------------------------------------------------------ 2.40s如果安装了mgr服务,则使用web界面进行访问并操作,如下:


Original article: https://blog.51cto.com/candon123/2490054