
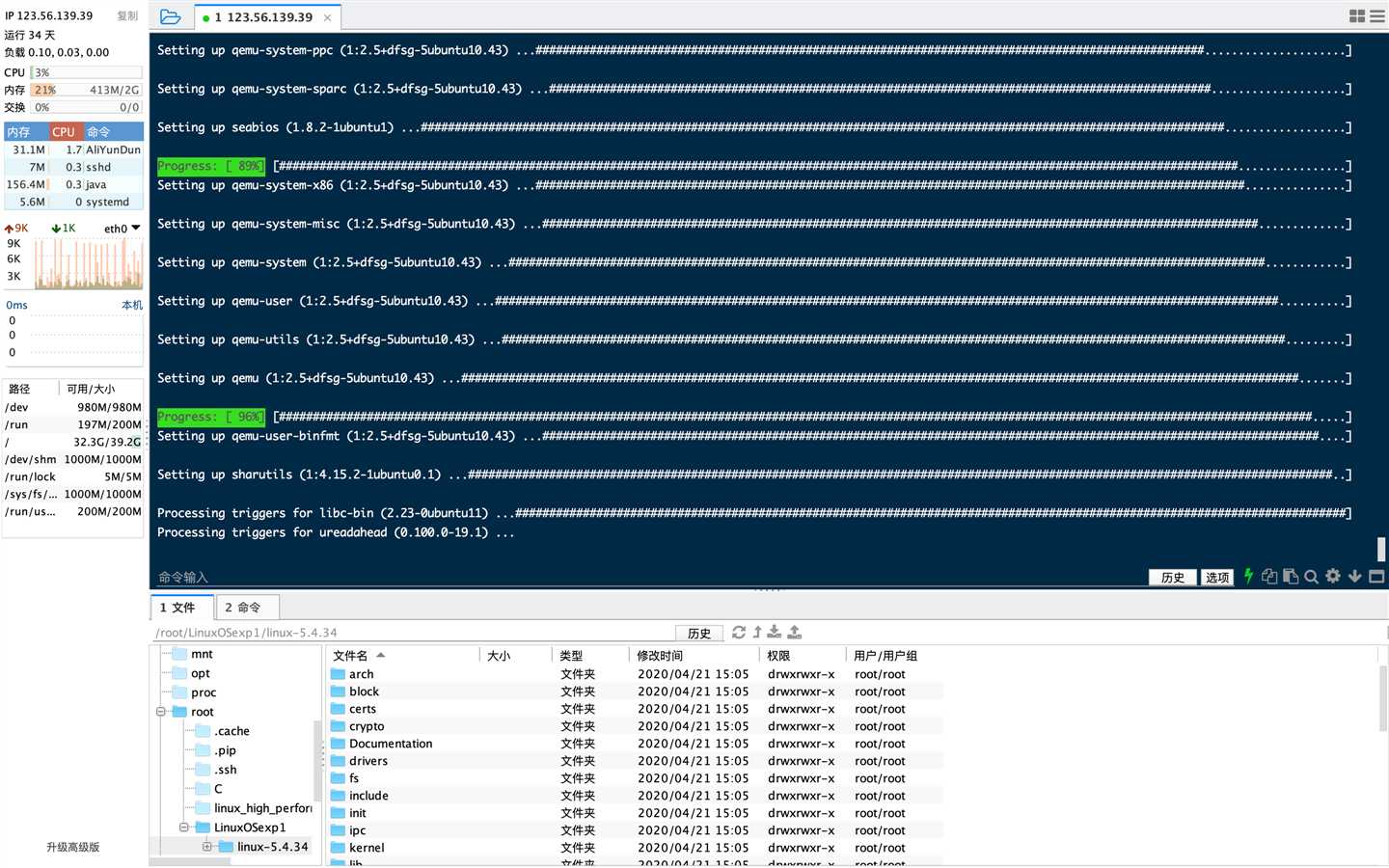

1. Experimental environment

Alibaba Cloud (student plan), Ubuntu 16.04

2. Writing an operating system kernel based on mykernel 2.0, following the sample code provided at https://github.com/mengning/mykernel

wget https://raw.github.com/mengning/mykernel/master/mykernel-2.0_for_linux-5.4.34.patch

sudo apt install axel

axel -n 20 https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x/linux-5.4.34.tar.xz

xz -d linux-5.4.34.tar.xz

tar -xvf linux-5.4.34.tar

cd linux-5.4.34

patch -p1 < ../mykernel-2.0_for_linux-5.4.34.patch

sudo apt install build-essential libncurses-dev bison flex libssl-dev libelf-dev

make defconfig # Default configuration is based on 'x86_64_defconfig'

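# A kernel built with allnoconfig could not be loaded and booted by QEMU, and I don't know why. If you understand the cause, please let me know: the full build is slow and uses a lot of resources.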

make -j$(nproc) # the build takes quite a while

sudo apt install qemu # install QEMU

qemu-system-x86_64 -kernel arch/x86/boot/bzImage

3. A brief analysis of the kernel's core functions and how it runs, with reference to the code

mypcb.h

#define MAX_TASK_NUM        4
#define KERNEL_STACK_SIZE   (1024*2)  /* counted in unsigned long elements, not bytes */

/* CPU-specific state of this task */
struct Thread {
    unsigned long ip;
    unsigned long sp;
};

typedef struct PCB{
    int pid;
    volatile long state; /* -1 unrunnable, 0 runnable, >0 stopped */
    unsigned long stack[KERNEL_STACK_SIZE];
    /* CPU-specific state of this task */
    struct Thread thread;
    unsigned long task_entry;
    struct PCB *next;
}tPCB;

void my_schedule(void);

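mypcb.h models the process control block (PCB) of a real kernel. Reading the rest of the code with that in mind makes it easier to follow.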
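To make the PCB layout concrete, here is a small user-space sketch (not part of mykernel) that copies the structure and computes where a task's initial stack pointer lands. The helper names `stack_bytes`, `initial_sp` and `initial_sp_offset` are my own, and the sketch parenthesizes the `KERNEL_STACK_SIZE` macro as a defensive habit.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_TASK_NUM 4
#define KERNEL_STACK_SIZE (1024 * 2) /* number of elements, not bytes */

struct Thread {
    unsigned long ip;
    unsigned long sp;
};

typedef struct PCB {
    int pid;
    volatile long state;
    unsigned long stack[KERNEL_STACK_SIZE];
    struct Thread thread;
    unsigned long task_entry;
    struct PCB *next;
} tPCB;

/* Size of one task's kernel stack in bytes (hypothetical helper). */
static size_t stack_bytes(void)
{
    return sizeof(((tPCB *)0)->stack);
}

/* Initial stack pointer, computed the same way as in my_start_kernel():
 * the address of the last element of the stack array. */
static unsigned long initial_sp(tPCB *p)
{
    assert(p != NULL);
    return (unsigned long)&p->stack[KERNEL_STACK_SIZE - 1];
}

/* Distance in bytes from the bottom of the array to the initial sp. */
static size_t initial_sp_offset(tPCB *p)
{
    return (size_t)(initial_sp(p) - (unsigned long)&p->stack[0]);
}
```

Because the array element type is `unsigned long`, each stack holds 2048 words rather than 2048 bytes, which is 16 KiB on x86_64 Linux. The initial stack pointer sits at the highest element, and the stack grows downward from there.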

mymain.c

#include <linux/init.h>    /* __init */
#include <linux/kernel.h>  /* printk */
#include <linux/string.h>  /* memcpy */
#include "mypcb.h"

tPCB task[MAX_TASK_NUM];
tPCB * my_current_task = NULL;
volatile int my_need_sched = 0;

void my_process(void);

void __init my_start_kernel(void)
{
    int pid = 0;
    int i;
    /* Initialize process 0 */
    task[pid].pid = pid;
    task[pid].state = 0; /* -1 unrunnable, 0 runnable, >0 stopped */
    task[pid].task_entry = task[pid].thread.ip = (unsigned long)my_process;
    task[pid].thread.sp = (unsigned long)&task[pid].stack[KERNEL_STACK_SIZE-1];
    task[pid].next = &task[pid];
    /* fork more processes: copy task[0] and link each copy into the ring */
    for(i=1;i<MAX_TASK_NUM;i++)
    {
        memcpy(&task[i],&task[0],sizeof(tPCB));
        task[i].pid = i;
        task[i].thread.sp = (unsigned long)(&task[i].stack[KERNEL_STACK_SIZE-1]);
        task[i].next = task[i-1].next;
        task[i-1].next = &task[i];
    }
    /* start process 0 by task[0] */
    pid = 0;
    my_current_task = &task[pid];
    asm volatile(
        "movq %1,%%rsp\n\t"  /* set task[pid].thread.sp to rsp */
        "pushq %1\n\t"       /* push task[pid].thread.sp, standing in for a saved rbp */
        "pushq %0\n\t"       /* push task[pid].thread.ip */
        "ret\n\t"            /* pop task[pid].thread.ip to rip */
        :
        : "c" (task[pid].thread.ip),"d" (task[pid].thread.sp) /* "c" and "d" place the inputs in %rcx and %rdx */
    );
}

int i = 0;

void my_process(void)
{
    while(1)
    {
        i++;
        if(i%10000000 == 0)
        {
            printk(KERN_NOTICE "this is process %d -\n",my_current_task->pid);
            /* yield only when the timer handler has requested it */
            if(my_need_sched == 1)
            {
                my_need_sched = 0;
                my_schedule();
            }
            printk(KERN_NOTICE "this is process %d +\n",my_current_task->pid);
        }
    }
}

my_start_kernel()
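The loop in my_start_kernel() links the PCBs into a circular singly linked list. As a minimal sketch, the same insertion pattern can be replayed in user space on a trimmed-down node type. `Node`, `build_ring` and `pid_after` are illustrative names of my own, not part of mykernel.

```c
#include <assert.h>
#include <string.h>

#define MAX_TASK_NUM 4

/* Trimmed-down stand-in for tPCB: only the fields the ring needs. */
typedef struct Node {
    int pid;
    struct Node *next;
} Node;

/* Same insertion pattern as the loop in my_start_kernel(). */
static void build_ring(Node task[], int n)
{
    int i;

    assert(n >= 1);
    task[0].pid = 0;
    task[0].next = &task[0];              /* a ring of one */
    for (i = 1; i < n; i++) {
        memcpy(&task[i], &task[0], sizeof(Node));
        task[i].pid = i;
        task[i].next = task[i - 1].next;  /* new node points back to the head */
        task[i - 1].next = &task[i];      /* previous tail points to the new node */
    }
}

/* Follow `steps` next-pointers from `start` and return the pid reached. */
static int pid_after(const Node *start, int steps)
{
    while (steps-- > 0)
        start = start->next;
    return start->pid;
}
```

With four tasks, walking from task 0 visits pids 1, 2, 3 and then returns to 0. That order is the one my_schedule() follows when it takes `my_current_task->next`.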

my_process()
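my_process() never gets preempted in the middle of its loop. It only calls my_schedule() when it reaches its own check and finds `my_need_sched` set by my_timer_handler() in myinterrupt.c. The following user-space sketch models that handshake. The pacing parameter `ticks_per_poll` and the function names are assumptions made for illustration, and the printk calls are left out.

```c
#include <assert.h>

static volatile int need_sched;  /* mirrors my_need_sched */
static volatile int time_count;  /* mirrors time_count */

/* Same condition as my_timer_handler(), minus the printk. */
static void timer_tick(void)
{
    if (time_count % 1000 == 0 && need_sched != 1)
        need_sched = 1;
    time_count++;
}

/* Same check my_process() makes; returns 1 where my_schedule() would run. */
static int poll_point(void)
{
    if (need_sched == 1) {
        need_sched = 0;
        return 1;
    }
    return 0;
}

/* Deliver `ticks` timer interrupts while the task reaches its poll point
 * once every `ticks_per_poll` ticks (hypothetical pacing).
 * Returns how many times my_schedule() would have been called. */
static int simulate(int ticks, int ticks_per_poll)
{
    int t, calls = 0;

    assert(ticks_per_poll > 0);
    need_sched = 0;
    time_count = 0;
    for (t = 1; t <= ticks; t++) {
        timer_tick();
        if (t % ticks_per_poll == 0)
            calls += poll_point();
    }
    return calls;
}
```

Under these assumptions, a task that polls often sees one switch per 1000 ticks, while a task that polls rarely sees fewer: the flag holds only a single pending request, so requests raised while it is already set are merged.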

Analysis of the key assembly code
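The four instructions at the end of my_start_kernel() can be traced by hand with a toy model of the stack. The sketch below is a user-space approximation, not real register manipulation: `Cpu`, `pushq`, `popq`, `ret` and `start_task` are names I made up to mirror the instructions, and the stack is just an array of words.

```c
#include <assert.h>

/* Toy CPU state: only what the boot sequence touches. */
typedef struct Cpu {
    unsigned long *rsp;  /* modelled stack pointer */
    unsigned long rip;   /* modelled instruction pointer */
} Cpu;

/* pushq: move rsp down one word, then store the value there. */
static void pushq(Cpu *c, unsigned long v)
{
    c->rsp--;
    *c->rsp = v;
}

/* popq: load the word at rsp, then move rsp up one word. */
static unsigned long popq(Cpu *c)
{
    unsigned long v = *c->rsp;
    c->rsp++;
    return v;
}

/* ret: pop the return address into rip. */
static void ret(Cpu *c)
{
    c->rip = popq(c);
}

/* Replay the four instructions from my_start_kernel(). */
static void start_task(Cpu *c, unsigned long ip, unsigned long *sp)
{
    assert(c != 0 && sp != 0);
    c->rsp = sp;                  /* movq %1,%%rsp */
    pushq(c, (unsigned long)sp);  /* pushq %1 */
    pushq(c, ip);                 /* pushq %0 */
    ret(c);                       /* ret */
}
```

After the sequence, rip holds the entry address of my_process and rsp sits one word below thread.sp, pointing at the pushed copy of thread.sp. The `pushq` / `ret` pair is simply a way to jump to an address held in a register or memory, since rip cannot be written with a plain `movq`.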

myinterrupt.c

#include <linux/kernel.h>  /* printk */
#include "mypcb.h"

extern tPCB task[MAX_TASK_NUM];
extern tPCB * my_current_task;
extern volatile int my_need_sched;
volatile int time_count = 0;

/*
 * Called by the timer interrupt.
 * It runs in the context of the currently running process,
 * so it uses the kernel stack of that process.
 */
void my_timer_handler(void)
{
    if(time_count%1000 == 0 && my_need_sched != 1)
    {
        printk(KERN_NOTICE ">>>my_timer_handler here<<<\n");
        my_need_sched = 1;
    }
    time_count ++ ;
    return;
}

void my_schedule(void)
{
    tPCB * next;
    tPCB * prev;

    if(my_current_task == NULL
        || my_current_task->next == NULL)
    {
        return;
    }
    printk(KERN_NOTICE ">>>my_schedule<<<\n");
    /* schedule */
    next = my_current_task->next;
    prev = my_current_task;
    if(next->state == 0) /* -1 unrunnable, 0 runnable, >0 stopped */
    {
        my_current_task = next;
        printk(KERN_NOTICE ">>>switch %d to %d<<<\n",prev->pid,next->pid);
        /* switch to next process */
        asm volatile(
            "pushq %%rbp\n\t"       /* save rbp of prev */
            "movq %%rsp,%0\n\t"     /* save rsp of prev */
            "movq %2,%%rsp\n\t"     /* restore rsp of next */
            "movq $1f,%1\n\t"       /* save rip of prev */
            "pushq %3\n\t"          /* push rip of next */
            "ret\n\t"               /* restore rip of next */
            "1:\t"                  /* next process start here */
            "popq %%rbp\n\t"        /* restore rbp of next */
            : "=m" (prev->thread.sp),"=m" (prev->thread.ip)
            : "m" (next->thread.sp),"m" (next->thread.ip)
        );
    }
    return;
}

my_schedule()
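Before the assembly runs, my_schedule() decides whether to switch at all: it looks only at the immediate successor in the ring and switches only if that task's state is 0. Here is a minimal user-space sketch of that decision, with a cut-down `Task` type and a helper `pick_next` that are my own names rather than anything from mykernel.

```c
#include <assert.h>
#include <stddef.h>

/* Cut-down stand-in for tPCB: just what the decision needs. */
typedef struct Task {
    int pid;
    long state;          /* -1 unrunnable, 0 runnable, >0 stopped */
    struct Task *next;
} Task;

/* Return the task that would be running after my_schedule():
 * the successor if it is runnable, otherwise the current task. */
static Task *pick_next(Task *current)
{
    if (current == NULL || current->next == NULL)
        return current;               /* my_schedule() returns early here */
    if (current->next->state == 0)
        return current->next;         /* switch to the successor */
    return current;                   /* successor not runnable: no switch */
}
```

One consequence worth noting: the code does not skip over a non-runnable successor to try the task after it. If the next task in the ring is not runnable, the current task simply keeps running until the next scheduling request.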

Analysis of the key assembly code
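The context switch in my_schedule() is easier to follow when traced on a toy model. The sketch below replays each instruction on two small array-backed stacks. It is a user-space approximation under my own assumptions: `Cpu`, `Thread`, `context_switch` and the constant `RESUME_LABEL` (standing in for the address of label `1:`) are illustrative names, not mykernel code.

```c
#include <assert.h>
#include <stddef.h>

#define RESUME_LABEL 0x1f1fUL  /* hypothetical address of label "1:" */

typedef struct Thread {
    unsigned long ip;
    unsigned long *sp;
} Thread;

typedef struct Cpu {
    unsigned long *rsp;
    unsigned long rbp;
    unsigned long rip;
} Cpu;

static void pushq(Cpu *c, unsigned long v) { c->rsp--; *c->rsp = v; }
static unsigned long popq(Cpu *c) { unsigned long v = *c->rsp; c->rsp++; return v; }
static void ret(Cpu *c) { c->rip = popq(c); }

/* Replay the inline assembly of my_schedule(), one statement per instruction. */
static void context_switch(Cpu *c, Thread *prev, Thread *next)
{
    assert(c != NULL && prev != NULL && next != NULL);
    pushq(c, c->rbp);         /* pushq %%rbp   : save rbp of prev on its stack */
    prev->sp = c->rsp;        /* movq %%rsp,%0 : save rsp of prev */
    c->rsp = next->sp;        /* movq %2,%%rsp : switch to the stack of next */
    prev->ip = RESUME_LABEL;  /* movq $1f,%1   : prev will resume at label 1 */
    pushq(c, next->ip);       /* pushq %3 */
    ret(c);                   /* ret           : jump to next->ip */
    if (c->rip == RESUME_LABEL)
        c->rbp = popq(c);     /* 1: popq %%rbp : only reached by a resumed task */
}
```

Tracing two switches shows the asymmetry between a fresh task and a resumed one. A task that has never run starts at the entry of my_process and does not execute the `popq`. A task that was switched out earlier resumes at label `1:`, pops the rbp it pushed on the way out, and ends up with exactly the rsp and rbp it had before the switch.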


Original article: https://www.cnblogs.com/yxzh-ustc/p/12878170.html