
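A fully distributed Hadoop cluster can be deployed either as a regular cluster or as an HA (high availability) cluster.

An HA cluster has an active/standby failover mechanism: it runs two NameNodes, one active and one standby, and their metadata is kept in sync.

When the active NameNode goes down, the standby NameNode is quickly promoted to active, so the cluster keeps running.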

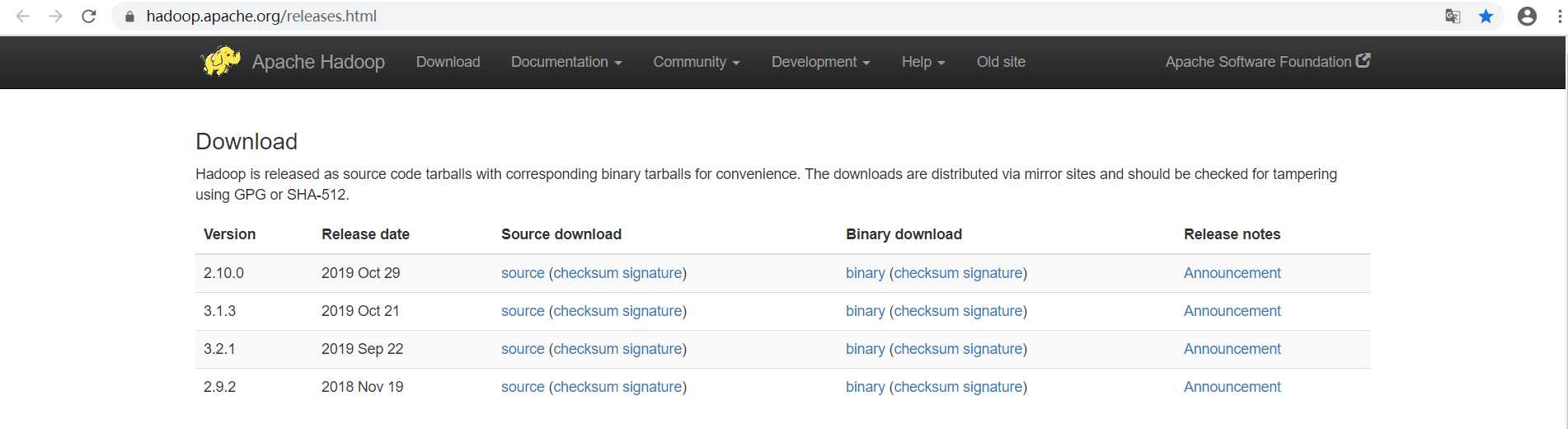

1. Download the release

Download page: https://hadoop.apache.org/releases.html

2. Extract the archive

tar -zxvf hadoop-3.2.1.tar.gz -C ~/soft/app/

3. Edit the configuration files

3.1. Configure hadoop-env.sh

export JAVA_HOME=/root/opt/jdk1.8.0_221

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

For the HA cluster, also add:

export HDFS_ZKFC_USER=root

export HDFS_JOURNALNODE_USER=root
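The exports above can also be appended by a script so that repeated runs do not duplicate lines. This is a minimal sketch, assuming a POSIX shell; `set_env_export` is a hypothetical helper, not part of Hadoop, and the demo writes to a scratch file rather than the real `etc/hadoop/hadoop-env.sh`.

```shell
# Hypothetical helper (not part of Hadoop): append "export NAME=VALUE" to a
# file unless a line exporting NAME is already present.
set_env_export() {
    file=$1
    name=$2
    value=$3
    if ! grep -q "^export ${name}=" "$file" 2>/dev/null; then
        printf 'export %s=%s\n' "$name" "$value" >> "$file"
    fi
}

# Demo against a scratch file; point env_file at etc/hadoop/hadoop-env.sh
# for real use.
env_file=$(mktemp)
for user_var in HDFS_NAMENODE_USER HDFS_DATANODE_USER \
                HDFS_ZKFC_USER HDFS_JOURNALNODE_USER; do
    set_env_export "$env_file" "$user_var" root
done
set_env_export "$env_file" HDFS_NAMENODE_USER root   # second call adds nothing
cat "$env_file"
```

Because the helper checks for an existing `export NAME=` line first, it is safe to rerun when a node is reprovisioned.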

3.2. Configure core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://centos1:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/root/opt/hadoop/data/tmp</value>

</property>

</configuration>

HA cluster configuration:

<configuration>

<!-- Set the HDFS nameservice to ns -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/root/opt/hadoop/data/tmp</value>

</property>

<!-- ZooKeeper ensemble address -->

<property>

<name>ha.zookeeper.quorum</name>

<value>centos1:2181,centos2:2181,centos3:2181</value>

</property>

<!-- Session timeout for Hadoop's connection to ZooKeeper -->

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>1000</value>

<description>ms</description>

</property>

</configuration>
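The `ha.zookeeper.quorum` value is a comma-separated list of `host:port` pairs. When scripting against the ensemble (for example, to loop over its members), it helps to split that string first. A minimal sketch, assuming a POSIX shell; `split_quorum` is a hypothetical helper name:

```shell
# Hypothetical helper: print one "host port" pair per line from a
# comma-separated quorum string such as the ha.zookeeper.quorum value.
split_quorum() {
    printf '%s\n' "$1" | tr ',' '\n' | while IFS=: read -r host port; do
        printf '%s %s\n' "$host" "$port"
    done
}

# Same value as in the core-site.xml above.
split_quorum "centos1:2181,centos2:2181,centos3:2181"
```

Each output line can then be fed to whatever per-node check is needed.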

3.3. Configure hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/root/opt/hadoop/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/root/opt/hadoop/data/datanode</value>

</property>

</configuration>

HA cluster configuration:

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/root/opt/hadoop/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/root/opt/hadoop/data/datanode</value>

</property>

<!-- Set the HDFS nameservice to ns; it must match the value used in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ns</value>

</property>

<!-- ns has two NameNodes: nn1 and nn2 -->

<property>

<name>dfs.ha.namenodes.ns</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn1</name>

<value>centos1:9000</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.ns.nn1</name>

<value>centos1:50070</value>

</property>

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn2</name>

<value>centos2:9000</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.ns.nn2</name>

<value>centos2:50070</value>

</property>

<!-- Location on the JournalNodes where the NameNodes' shared edit log is stored -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://centos1:8485;centos2:8485;centos3:8485/ns</value>

</property>

<!-- Local disk directory where each JournalNode stores its data -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/root/opt/hadoop/data/journal</value>

</property>

<!-- Enable automatic failover when a NameNode fails -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Failover proxy provider that clients use to find the active NameNode -->

<property>

<name>dfs.client.failover.proxy.provider.ns</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing methods, one per line, tried in order -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>
sshfence
shell(true)
</value>

</property>

<!-- sshfence requires passwordless SSH; this is the private key it uses -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- Connection timeout for sshfence, in milliseconds -->

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>
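The nameservice ID has to agree between `fs.defaultFS` in core-site.xml and `dfs.nameservices` in hdfs-site.xml, and a mismatch is easy to miss. The following is a minimal sketch of a consistency check, assuming a POSIX shell with `awk` and assuming every `<name>` and `<value>` sits on a single line (it does not handle multi-line values). `get_prop` and `check_nameservice` are hypothetical helpers, and the demo uses scratch files instead of the real configuration directory.

```shell
# Hypothetical helper: print the <value> of property NAME from a Hadoop XML
# file. Assumes <name> and <value> each sit on a single line.
get_prop() {
    awk -v want="$2" '
        /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
        /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$1"
}

# Succeeds when fs.defaultFS is hdfs://<nameservice> and <nameservice>
# is listed in dfs.nameservices.
check_nameservice() {
    default_fs=$(get_prop "$1" fs.defaultFS)
    nameservices=$(get_prop "$2" dfs.nameservices)
    [ -n "$default_fs" ] || return 1
    case ",$nameservices," in
        *",${default_fs#hdfs://},"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Demo with scratch copies of the two relevant properties.
conf_dir=$(mktemp -d)
cat > "$conf_dir/core-site.xml" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://ns</value>
</property>
</configuration>
EOF
cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<configuration>
<property>
<name>dfs.nameservices</name>
<value>ns</value>
</property>
</configuration>
EOF
if check_nameservice "$conf_dir/core-site.xml" "$conf_dir/hdfs-site.xml"; then
    echo "nameservice matches"
else
    echo "nameservice mismatch"
fi
```

On a real node, pass the two files under `$HADOOP_HOME/etc/hadoop/` instead of the scratch copies.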

3.4. Configure mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.admin.user.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_COMMON_HOME</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_COMMON_HOME</value>

</property>

</configuration>

3.5. Configure yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>centos1</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>centos1:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>centos1:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>centos1:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>centos1:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>centos1:8088</value>

</property>

</configuration>

HA cluster configuration:

<configuration>

<!-- Enable ResourceManager HA -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Cluster ID of the ResourceManagers -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yrc</value>

</property>

<!-- Logical IDs of the ResourceManagers -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- Hostname of each ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>centos1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>centos2</value>

</property>

<!-- ZooKeeper ensemble address -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>centos1:2181,centos2:2181,centos3:2181</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

3.6. Configure workers

centos1

centos2

centos3

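4. Distribute the configured Hadoop directory to the other nodes

The paths below must match the directory Hadoop was extracted to in step 2.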

scp -r /root/app/hadoop centos2:/root/app/

scp -r /root/app/hadoop centos3:/root/app/
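With more than a couple of nodes, the copy commands can be generated from the workers file instead of typed by hand. This is a minimal sketch, assuming a POSIX shell; `print_scp_commands` is a hypothetical helper that only prints the commands (a dry run), so nothing is copied until the output is reviewed and executed.

```shell
# Hypothetical helper: print the scp command for every host listed in a
# workers file, skipping blank lines and the local host.
print_scp_commands() {
    workers_file=$1
    src_dir=$2
    dest_dir=$3
    local_host=$4
    while IFS= read -r host || [ -n "$host" ]; do
        if [ -z "$host" ] || [ "$host" = "$local_host" ]; then
            continue
        fi
        printf 'scp -r %s %s:%s\n' "$src_dir" "$host" "$dest_dir"
    done < "$workers_file"
}

# Demo with a scratch workers file holding the three hosts from section 3.6.
workers=$(mktemp)
printf '%s\n' centos1 centos2 centos3 > "$workers"
print_scp_commands "$workers" /root/app/hadoop /root/app/ centos1
```

To run the copies, pipe the output to `sh` once it looks right.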

5. Set the environment variables

vim /etc/profile

export HADOOP_HOME=/root/soft/app/hadoop/hadoop-3.2.1

export PATH=$JAVA_HOME/bin:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile
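After sourcing the profile, it is worth confirming that both Hadoop directories really ended up on `PATH`. A minimal sketch, assuming a POSIX shell; `path_contains` is a hypothetical helper, and the demo sets the variables itself so that it runs without editing `/etc/profile`.

```shell
# Hypothetical helper: succeed when the given directory is an entry of PATH.
path_contains() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Demo: same values as the exports above, applied to the current shell only.
HADOOP_HOME=/root/soft/app/hadoop/hadoop-3.2.1
PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    if path_contains "$dir"; then
        echo "on PATH: $dir"
    else
        echo "missing from PATH: $dir"
    fi
done
```

On a configured node, running `hadoop version` is a further check that the binaries resolve.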

6. Initialize and start the cluster

6.1. Non-HA cluster:

[root@centos1 hadoop-3.2.1]# bin/hdfs namenode -format

[root@centos1 hadoop-3.2.1]# sbin/start-dfs.sh

[root@centos1 hadoop-3.2.1]# sbin/start-yarn.sh
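Once the start scripts return, `jps` on each node lists the Java daemons that are running. The check can be automated by comparing that list with the daemons expected on the node. This is a minimal sketch, assuming a POSIX shell with `awk`; `missing_daemons` is a hypothetical helper, and the sample input is illustrative, not output captured from a real cluster.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
    jps_output=$(cat)
    for daemon in "$@"; do
        if ! printf '%s\n' "$jps_output" |
                awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            printf '%s\n' "$daemon"
        fi
    done
}

# Illustrative input in the "<pid> <name>" format that jps prints.
sample='101 NameNode
102 SecondaryNameNode
103 ResourceManager
104 Jps'
printf '%s\n' "$sample" | missing_daemons NameNode DataNode ResourceManager NodeManager
```

On a live node the call would be `jps | missing_daemons NameNode DataNode`, with the daemon list adjusted to the roles that node carries. Matching on the whole second column keeps `NameNode` from being satisfied by `SecondaryNameNode`.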

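6.2. HA cluster:

Start ZooKeeper on each ZooKeeper node (centos1, centos2, centos3):

zkServer.sh start

Initialize the HA state in ZooKeeper from one of the NameNode hosts:

bin/hdfs zkfc -formatZK

Start a JournalNode on each JournalNode host (centos1, centos2, centos3):

sbin/hadoop-daemon.sh start journalnode

On nn1 (centos1), format the NameNode and start it:

bin/hdfs namenode -format

sbin/hadoop-daemon.sh start namenode

On nn2 (centos2), copy the metadata from nn1 and start the second NameNode:

bin/hdfs namenode -bootstrapStandby

sbin/hadoop-daemon.sh start namenode

Start the remaining HDFS daemons and YARN:

sbin/start-dfs.sh

sbin/start-yarn.sh

Check the state of each NameNode. One should report active and the other standby:

bin/hdfs haadmin -getServiceState nn1

standby

bin/hdfs haadmin -getServiceState nn2

active

Check the DataNode report:

bin/hdfs dfsadmin -report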
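A healthy HA pair has exactly one active NameNode, so the two `haadmin -getServiceState` results can be turned into a pass/fail check. A minimal sketch, assuming a POSIX shell; `exactly_one_active` is a hypothetical helper, and the demo passes the two states as literals rather than querying a cluster.

```shell
# Hypothetical helper: given the state reported for each NameNode,
# succeed only when exactly one of them is "active".
exactly_one_active() {
    active_count=0
    for state in "$@"; do
        if [ "$state" = "active" ]; then
            active_count=$((active_count + 1))
        fi
    done
    [ "$active_count" -eq 1 ]
}

# Demo with the states nn1=standby, nn2=active.
if exactly_one_active standby active; then
    echo "HA state OK"
else
    echo "HA state unexpected"
fi
```

On a live cluster the arguments would come from the commands themselves, for example `exactly_one_active "$(bin/hdfs haadmin -getServiceState nn1)" "$(bin/hdfs haadmin -getServiceState nn2)"`.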

If start-all.sh fails to start the NameNode, add the following property to the HA core-site.xml from section 3.2. It raises the number of IPC connection retries (the default is 10), which should give the JournalNodes more time to come up before the NameNode stops trying to reach them.

<!-- Works around start-all.sh failing to start the NameNode -->

<property>

<name>ipc.client.connect.max.retries</name>

<value>30</value>

</property>


Original article: https://www.cnblogs.com/kylinyang/p/12943959.html