Tags: key, vocabulary, select, label, cat, accuracy and recall, span, representation, mode

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=0, stratify=target)

def split_dataset(data, label):
    x_train, x_test, y_train, y_test = train_test_split(data, label, test_size=0.2, random_state=0, stratify=label)
    return x_train, x_test, y_train, y_test
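To illustrate what `stratify` does in the split above, here is a minimal sketch on made-up data. The names `toy_data` and `toy_label` and the 40/10 class ratio are assumptions for the example, not the post's dataset.

```python
from collections import Counter

from sklearn.model_selection import train_test_split

# Hypothetical toy data: 40 "ham" and 10 "spam" messages (20% spam).
toy_data = [f"message {i}" for i in range(50)]
toy_label = ["ham"] * 40 + ["spam"] * 10

x_train, x_test, y_train, y_test = train_test_split(
    toy_data, toy_label, test_size=0.2, random_state=0, stratify=toy_label
)

# With stratify=toy_label, both subsets keep the original class ratio.
train_counts = Counter(y_train)
test_counts = Counter(y_test)
print("train:", train_counts)
print("test:", test_counts)
```

Without `stratify`, a random split of an imbalanced dataset could leave the test set with very few spam messages, which would make the later evaluation less reliable.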

sklearn.feature_extraction.text.CountVectorizer

sklearn.feature_extraction.text.TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf2 = TfidfVectorizer()

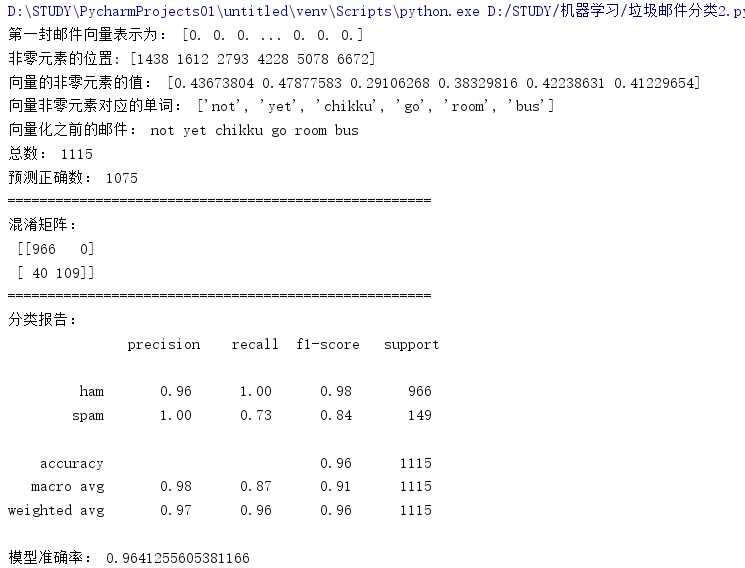

Observe the relationship between the emails and their vectors

Reverting a vector back to an email

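import numpy as np

# Convert the text into a TF-IDF feature matrix
def tfidf_dataset(x_train, x_test):
    tfidf2 = TfidfVectorizer()
    X_train = tfidf2.fit_transform(x_train)  # learn the vocabulary from the training set only
    X_test = tfidf2.transform(x_test)  # reuse the training vocabulary for the test set
    return X_train, X_test, tfidf2

# Revert a vector back to the email it came from
def revert_mail(x_train, X_train, model):
    s = X_train[0].toarray()[0]  # densify only the first row
    print("Vector representation of the first email:", s)
    a = np.flatnonzero(s)
    print("Positions of the non-zero elements:", a)
    print("Values of the non-zero elements:", s[a])
    b = model.vocabulary_  # vocabulary: word -> column index
    key_list = []
    for key, value in b.items():
        if value in a:
            key_list.append(key)  # word that corresponds to a non-zero element
    print("Words corresponding to the non-zero elements:", key_list)
    print("Email before vectorization:", x_train[0])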
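As a possible shortcut for the vocabulary loop above, scikit-learn vectorizers also provide `inverse_transform`, which returns the terms that have non-zero entries in each row. A minimal sketch, assuming a made-up two-email corpus (`toy_mails` is hypothetical):

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical toy emails, used only for illustration.
toy_mails = ["win a free prize now", "meeting at noon tomorrow"]

vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(toy_mails)

# inverse_transform returns, per document, the terms with non-zero weight.
# Word order and repeated words from the original text are not recovered,
# and tokens dropped by the tokenizer (such as the one-letter "a") are lost.
recovered = sorted(str(word) for word in vectorizer.inverse_transform(X[0])[0])
print(recovered)
```

Either way, the vector only records which vocabulary words occur and with what weight, so the "reverted" email is a bag of words rather than the original sentence.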

from sklearn.naive_bayes import GaussianNB

from sklearn.naive_bayes import MultinomialNB

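Why choose this model?

The email features are word-frequency weights rather than continuous values that follow a normal distribution, so the multinomial model (MultinomialNB) is a better fit than the Gaussian one (GaussianNB).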

def mnb_model(x_train, x_test, y_train, y_test):
    mnb = MultinomialNB()
    mnb.fit(x_train, y_train)
    predict = mnb.predict(x_test)
    print("Total:", len(y_test))
    print("Correct predictions:", (predict == y_test).sum())
    print("Prediction accuracy:", sum(predict == y_test) / len(y_test))
    return predict
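The training step can be tried end to end on a tiny corpus. The following is a sketch under stated assumptions: the four training emails, their labels, and the two test emails are invented for illustration and are far smaller than a real dataset.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy corpus, far smaller than a real email dataset.
train_mails = [
    "free prize win money",
    "win free cash now",
    "meeting schedule tomorrow",
    "project meeting notes",
]
train_labels = ["spam", "spam", "ham", "ham"]
test_mails = ["free cash prize", "project schedule"]

# Fit the vocabulary on the training emails only, then reuse it for the test emails.
vectorizer = TfidfVectorizer()
X_train = vectorizer.fit_transform(train_mails)
X_test = vectorizer.transform(test_mails)

# MultinomialNB accepts the sparse, non-negative TF-IDF matrix directly.
mnb = MultinomialNB()
mnb.fit(X_train, train_labels)
predicted = [str(label) for label in mnb.predict(X_test)]
print(predicted)
```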

from sklearn.metrics import confusion_matrix

# Use a separate variable name so the imported confusion_matrix function is not overwritten
conf_matrix = confusion_matrix(y_test, y_predict)

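Explain the meaning of the confusion matrix

A confusion matrix is a table used in machine learning to summarize the predictions of a classification model. It tallies the records of a dataset in matrix form according to two criteria: their true class and the class predicted by the model.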
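A small worked example may make the layout concrete. In scikit-learn's `confusion_matrix`, rows correspond to the true class and columns to the predicted class. The labels below are made up (1 = spam, 0 = ham).

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels: 1 = spam, 0 = ham.
y_true = [0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 1, 1, 1, 1, 0]

cm = confusion_matrix(y_true, y_pred)

# For a binary problem with labels [0, 1], ravel() yields TN, FP, FN, TP.
tn, fp, fn, tp = cm.ravel()
print(cm)
print("TN, FP, FN, TP =", tn, fp, fn, tp)
```

Here two ham emails are correctly kept (TN), one ham email is wrongly flagged as spam (FP), one spam email is missed (FN), and three spam emails are caught (TP).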

from sklearn.metrics import classification_report

Explain what accuracy, precision, recall, and the F-score each represent

def class_report(ypre_mnb, y_test):
    conf_matrix = confusion_matrix(y_test, ypre_mnb)
    print("=====================================================")
    print("Confusion matrix:\n", conf_matrix)
    c = classification_report(y_test, ypre_mnb)
    print("=====================================================")
    print("Classification report:\n", c)
    # Accuracy = correctly classified samples (the diagonal) / all samples
    print("Model accuracy:", conf_matrix.trace() / conf_matrix.sum())
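The four metrics asked about above have standard definitions in terms of the confusion-matrix counts: accuracy is the share of all predictions that are correct, precision is the share of predicted positives that are truly positive, recall is the share of true positives that the model finds, and the F1 score is the harmonic mean of precision and recall. The sketch below computes them by hand on made-up labels (1 = spam is assumed to be the positive class) and checks them against scikit-learn's helpers.

```python
import math

from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Hypothetical labels: 1 = spam (positive class), 0 = ham.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

pairs = list(zip(y_true, y_pred))
tp = sum(t == 1 and p == 1 for t, p in pairs)  # spam predicted as spam
fp = sum(t == 0 and p == 1 for t, p in pairs)  # ham predicted as spam
fn = sum(t == 1 and p == 0 for t, p in pairs)  # spam predicted as ham
tn = sum(t == 0 and p == 0 for t, p in pairs)  # ham predicted as ham

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)

# The hand-computed values should agree with scikit-learn's helpers.
assert math.isclose(accuracy, accuracy_score(y_true, y_pred))
assert math.isclose(precision, precision_score(y_true, y_pred))
assert math.isclose(recall, recall_score(y_true, y_pred))
assert math.isclose(f1, f1_score(y_true, y_pred))

print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

For spam filtering, precision and recall are usually read together: low precision means legitimate mail is being flagged, while low recall means spam is getting through.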

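If CountVectorizer is used to generate the text features, how do the results compare with TfidfVectorizer?

CountVectorizer considers only how often each word occurs in a given text, whereas TfidfVectorizer, besides the frequency of a word in the current text, also takes into account how many other training texts contain that word.

So the more training texts there are, the greater TfidfVectorizer's advantage; it also down-weights high-frequency words that carry little meaning.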
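The difference can be seen on a tiny example. This is a sketch on a made-up corpus (`corpus` is hypothetical) in which "the" appears in every document and "prize" in only one. Both words get the same raw count in the first document, but TF-IDF gives the rarer word the larger weight.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Hypothetical toy corpus: "the" occurs in every document, "prize" in only one.
corpus = [
    "the prize the prize",
    "the meeting",
    "the report",
]

counts = CountVectorizer().fit(corpus)
tfidf = TfidfVectorizer().fit(corpus)

# Feature vectors of the first document under each scheme.
count_row = counts.transform(corpus[:1]).toarray()[0]
tfidf_row = tfidf.transform(corpus[:1]).toarray()[0]

# Both vectorizers tokenize identically here, so they share one vocabulary.
the_idx = counts.vocabulary_["the"]
prize_idx = counts.vocabulary_["prize"]

print("counts:", count_row[the_idx], count_row[prize_idx])
print("tf-idf:", tfidf_row[the_idx], tfidf_row[prize_idx])
```

Whether this translates into better accuracy on the email data is an empirical question; one way to check is to run the same train/test split through both vectorizers and compare the classification reports.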


Original article: https://www.cnblogs.com/ccw1124486193/p/12970082.html