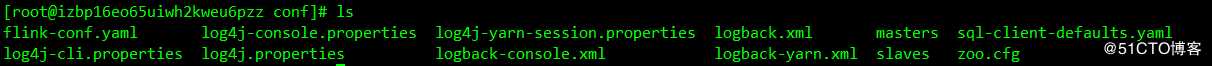

https://flink.apache.org/ (Flink official website)

First copy all of Flink's configuration files to a local directory, then mount that local copy into the Pod.
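The copy step can be scripted. The following is a minimal sketch, not part of the original setup: the function name `stage_conf` is hypothetical, and it assumes the NFS export is reachable as a local directory whose layout matches the `/flink/<role>/conf` paths used by the PersistentVolumes later in this post.

```python
import shutil
from pathlib import Path


def stage_conf(flink_conf: Path, nfs_root: Path,
               roles=("jobmanager", "taskmanager")) -> list:
    """Copy Flink's conf directory into one directory per role under nfs_root.

    Returns the list of target directories that were written.
    """
    staged = []
    for role in roles:
        target = nfs_root / role / "conf"
        # dirs_exist_ok (Python 3.8+) lets the copy be repeated after the
        # files have been edited; missing parent directories are created.
        shutil.copytree(flink_conf, target, dirs_exist_ok=True)
        staged.append(target)
    return staged
```

A call such as `stage_conf(Path("/usr/local/flink-1.8.1/conf"), Path("/flink"))` would then populate `/flink/jobmanager/conf` and `/flink/taskmanager/conf`; both paths are assumptions and should be adjusted to the actual environment. Each copy is edited afterwards so that it matches the role-specific settings shown below.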

1.1 JobManager configuration file

jobmanager.rpc.address: flink-jobmanager-svc
jobmanager.rpc.port: 6123
jobmanager.heap.size: 1024m
taskmanager.heap.size: 1024m
taskmanager.numberOfTaskSlots: 1
parallelism.default: 1
high-availability: zookeeper
high-availability.zookeeper.path.root: /flink
high-availability.cluster-id: 1
high-availability.storageDir: file:///data/ha
high-availability.zookeeper.quorum: localhost:2181
zookeeper.sasl.disable: true
high-availability.jobmanager.port: 6123
state.backend: filesystem
state.backend.fs.checkpointdir: file:///data/state
web.upload.dir: /data/upload
blob.server.port: 6124
metrics.internal.query-service.port: 6125
classloader.resolve-order: parent-first

ZooKeeper configuration (zoo.cfg, in the same conf directory):

# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial synchronization phase can take
initLimit=10
# The number of ticks that can pass between sending a request and getting an acknowledgement
syncLimit=5
# The directory where the snapshot is stored.
# dataDir=/tmp/zookeeper
# The port at which the clients will connect
clientPort=2181
# ZooKeeper quorum peers
server.1=localhost:2888:3888
dataDir=/usr/local/flink-1.8.1/zookeeper/data
dataLogDir=/usr/local/flink-1.8.1/zookeeper/log

1.2 TaskManager configuration file

jobmanager.rpc.address: flink-jobmanager-svc
jobmanager.rpc.port: 6123
jobmanager.heap.size: 1024m
taskmanager.heap.size: 1024m
taskmanager.numberOfTaskSlots: 4
parallelism.default: 1
high-availability: zookeeper
high-availability.cluster-id: 1
high-availability.storageDir: file:///tmp
high-availability.zookeeper.quorum: flink-jobmanager-svc:2181
zookeeper.sasl.disable: true
classloader.resolve-order: parent-first

JobManager deployment
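Before applying the manifests, it may be worth confirming that the two configuration files agree on the settings both roles need to share. The sketch below is an illustration rather than part of the original setup: `parse_flink_conf`, `mismatches` and the `SHARED_KEYS` selection are hypothetical names and choices, and the two strings are short excerpts of the files from 1.1 and 1.2.

```python
def parse_flink_conf(text: str) -> dict:
    """Parse flat `key: value` lines, skipping blank lines and # comments."""
    conf = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        # Split on the first colon only, so values such as
        # localhost:2181 or file:///data/ha stay intact.
        key, _, value = line.partition(":")
        conf[key.strip()] = value.strip()
    return conf


def mismatches(jm: dict, tm: dict, keys) -> dict:
    """Return {key: (jobmanager_value, taskmanager_value)} for differing keys."""
    return {k: (jm.get(k), tm.get(k)) for k in keys if jm.get(k) != tm.get(k)}


# Keys assumed to need identical values on both sides (an illustrative choice).
SHARED_KEYS = [
    "jobmanager.rpc.address",
    "jobmanager.rpc.port",
    "high-availability",
    "high-availability.cluster-id",
]

JM_CONF = """\
jobmanager.rpc.address: flink-jobmanager-svc
jobmanager.rpc.port: 6123
high-availability: zookeeper
high-availability.cluster-id: 1
high-availability.zookeeper.quorum: localhost:2181
"""

TM_CONF = """\
jobmanager.rpc.address: flink-jobmanager-svc
jobmanager.rpc.port: 6123
high-availability: zookeeper
high-availability.cluster-id: 1
high-availability.zookeeper.quorum: flink-jobmanager-svc:2181
"""

# Prints {} for the excerpts above: the shared keys agree.
print(mismatches(parse_flink_conf(JM_CONF), parse_flink_conf(TM_CONF), SHARED_KEYS))
```

`high-availability.zookeeper.quorum` is left out of the comparison on purpose: ZooKeeper is started inside the JobManager container, so the JobManager addresses it as `localhost:2181` while the TaskManagers go through `flink-jobmanager-svc:2181`.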

apiVersion: v1
kind: PersistentVolume
metadata:
  name: flink-jobmanager-conf-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 10Gi
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nas
  nfs:
    path: /flink/jobmanager/conf
    server: <address of your self-hosted NFS server>
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: flink-jobmanager-conf-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nas
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: flink-jobmanager-lib-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 20Gi
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nas
  nfs:
    path: /flink/jobmanager/lib
    server: <address of your self-hosted NFS server>
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: flink-jobmanager-lib-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nas
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: flink-jobmanager-zookeeper-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 20Gi
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nas
  nfs:
    path: /flink/jobmanager/zookeeper
    server: <address of your self-hosted NFS server>
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: flink-jobmanager-zookeeper-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nas
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: flink-jobmanager-data-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 20Gi
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nas
  nfs:
    path: /flink/jobmanager/data
    server: <address of your self-hosted NFS server>
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: flink-jobmanager-data-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nas
  resources:
    requests:
      storage: 20Gi

---
apiVersion: v1
kind: Service
metadata:
  name: flink-jobmanager-svc
spec:
  ports:
    - name: flink-jobmanager-web
      nodePort: 30044
      port: 8081
      targetPort: 8081
    - name: flink-jobmanager-rpc
      nodePort: 32000
      port: 6123
      targetPort: 6123
    - name: blob
      nodePort: 32001
      targetPort: 6124
      port: 6124
    - name: query
      nodePort: 32002
      targetPort: 6125
      port: 6125
  type: NodePort
  selector:
    app: flink-jobmanager

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flink-jobmanager
spec:
  selector:
    matchLabels:
      app: flink-jobmanager
  template:
    metadata:
      labels:
        app: flink-jobmanager
    spec:
      containers:
        - image: flink:1.8.1
          name: flink-jobmanager
          ports:
            - containerPort: 8081
              name: flink-job
          command: ["/bin/sh", "-c"]
          args: ["./zookeeper.sh start 1 && ./jobmanager.sh start-foreground"]
          volumeMounts:
            - name: flink-jobmanager-conf-pv
              mountPath: /usr/local/flink-1.8.1/conf
            - name: flink-jobmanager-lib-pv
              mountPath: /usr/local/flink-1.8.1/lib
            - name: flink-jobmanager-zookeeper-pv
              mountPath: /usr/local/flink-1.8.1/zookeeper
            - name: flink-jobmanager-data-pv
              mountPath: /data/
      volumes:
        - name: flink-jobmanager-conf-pv
          persistentVolumeClaim:
            claimName: flink-jobmanager-conf-pvc
        - name: flink-jobmanager-lib-pv
          persistentVolumeClaim:
            claimName: flink-jobmanager-lib-pvc
        - name: flink-jobmanager-zookeeper-pv
          persistentVolumeClaim:
            claimName: flink-jobmanager-zookeeper-pvc
        - name: flink-jobmanager-data-pv
          persistentVolumeClaim:
            claimName: flink-jobmanager-data-pvc

TaskManager deployment
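Before deploying the TaskManagers, a quick sanity check on ports can save some debugging. A Kubernetes Service only forwards the ports it declares, and the TaskManager configuration addresses everything through `flink-jobmanager-svc`. The sketch below compares the ports declared on that Service with the ports the configuration refers to. It is an illustration under the assumption that the TaskManagers reach ZooKeeper only through this Service; the names `SERVICE_PORTS`, `port_of` and `unexposed` are hypothetical, and the values are copied by hand from the listings in this post.

```python
# Ports declared on flink-jobmanager-svc in the manifest above.
SERVICE_PORTS = {8081, 6123, 6124, 6125}


def port_of(endpoint: str) -> int:
    """Extract the port from a host:port string."""
    return int(endpoint.rsplit(":", 1)[1])


def unexposed(service_ports: set, required: dict) -> dict:
    """Return the settings whose port is not declared on the Service."""
    return {name: port for name, port in required.items()
            if port not in service_ports}


# Ports referenced by the configuration files in 1.1 and 1.2.
REQUIRED = {
    "jobmanager.rpc.port": 6123,
    "blob.server.port": 6124,
    "metrics.internal.query-service.port": 6125,
    "high-availability.zookeeper.quorum": port_of("flink-jobmanager-svc:2181"),
}

# Prints {'high-availability.zookeeper.quorum': 2181}
print(unexposed(SERVICE_PORTS, REQUIRED))
```

If a port is reported, one option is to add a matching entry to the `ports` list of the Service so that `flink-jobmanager-svc:2181` becomes reachable from the TaskManager Pods.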

apiVersion: v1
kind: PersistentVolume
metadata:
  name: flink-taskmanager-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 10Gi
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nas
  nfs:
    path: /flink/taskmanager/conf
    server: <address of your self-hosted NFS server>
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: flink-taskmanager-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nas
  resources:
    requests:
      storage: 10Gi

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flink-taskmanager
spec:
  replicas: 2
  selector:
    matchLabels:
      app: flink-taskmanager
  template:
    metadata:
      labels:
        app: flink-taskmanager
    spec:
      containers:
        - image: flink:1.8.1
          name: flink-taskmanager
          ports:
            - containerPort: 8081
              name: flink-task
          args:
            - ./taskmanager.sh
            - start-foreground
          volumeMounts:
            - name: flink-taskmanager-pv
              mountPath: /usr/local/flink-1.8.1/conf
            - name: flink-jobmanager-lib-pv
              mountPath: /usr/local/flink-1.8.1/lib
      volumes:
        - name: flink-taskmanager-pv
          persistentVolumeClaim:
            claimName: flink-taskmanager-pvc
        - name: flink-jobmanager-lib-pv
          persistentVolumeClaim:
            claimName: flink-jobmanager-lib-pvc

Note: the JARs in the lib directories of the JobManager and the TaskManager must be kept identical.
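In the manifests above both Deployments mount `flink-jobmanager-lib-pvc`, which is one way to guarantee identical JARs. If the two lib directories are ever kept separately, a comparison along the following lines could catch drift. This is a minimal sketch; the function names `jar_digests` and `lib_diff` are hypothetical and not part of Flink or Kubernetes.

```python
import hashlib
from pathlib import Path


def jar_digests(lib_dir: Path) -> dict:
    """Map each *.jar file name in lib_dir to the SHA-256 of its content."""
    return {
        jar.name: hashlib.sha256(jar.read_bytes()).hexdigest()
        for jar in sorted(lib_dir.glob("*.jar"))
    }


def lib_diff(first: Path, second: Path) -> dict:
    """Report JARs that are missing on one side or differ in content."""
    a, b = jar_digests(first), jar_digests(second)
    return {
        "only_in_first": sorted(a.keys() - b.keys()),
        "only_in_second": sorted(b.keys() - a.keys()),
        "content_differs": sorted(n for n in a.keys() & b.keys() if a[n] != b[n]),
    }
```

Calling `lib_diff` with the JobManager and TaskManager lib directories returns three empty lists when the directories match.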

Original article: https://blog.51cto.com/xiaocuik/2498727