
Measuring time differences with millisecond precision is a pretty common requirement...

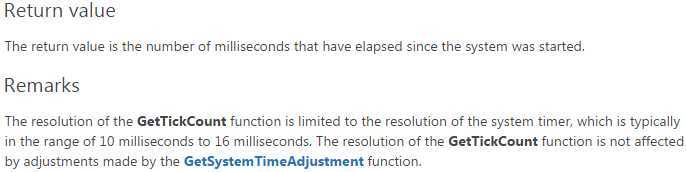

手头上是windows编程的项目,所以首先就想到的是GetTickCount(),但MSDN上这么说:

Let's write a small program to try it out:

    #include <stdio.h>
    #include <windows.h>

    int main(void)
    {
        DWORD dwLastTime = GetTickCount();
        for (int i = 0; i != 10; ++i)
        {
            DWORD dwCurrentTime = GetTickCount();
            /* DWORD is an unsigned long, so it is printed with %lu */
            printf("GetTickCount = %lums TimeDiff = %lums\n", dwCurrentTime, dwCurrentTime - dwLastTime);
            dwLastTime = dwCurrentTime;
            Sleep(500);
        }
        return 0;
    }

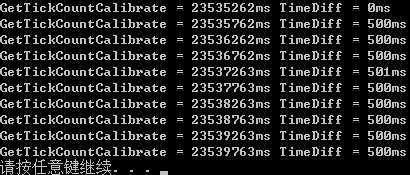

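As the output shows, across the 10 samples the measured interval is usually off from the 500 ms sleep by about 1 ms, and in the worst cases by as much as 15 ms, which matches the actual resolution described by MSDN.

So GetTickCount() is not reliable for measuring millisecond-level time differences!

How, then, can we meet our requirements?

Requirement 1: measure time differences at millisecond granularity.

Requirement 2: the return value should preferably be an unsigned long, to stay compatible with existing code.

Solution 1: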

clock_t clock(void);

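This function returns the number of clock ticks, in units of 1/CLOCKS_PER_SEC seconds, elapsed since the program started. Note that the Microsoft C runtime reports elapsed wall-clock time here, whereas standard C defines the result as processor time used by the program. Wrapping the function is all it takes: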

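    #include <ctime>
    #include <windows.h>   // ULONG, LONGLONG

    ULONG GetTickCountClock()
    {
        // Widen to 64 bits before multiplying so clock() * 1000 cannot overflow
        return (ULONG)((LONGLONG)clock() * 1000 / CLOCKS_PER_SEC);
    }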

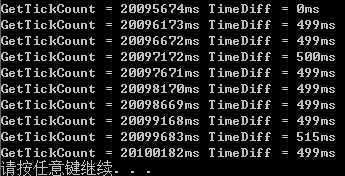

测试结果:
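The 64-bit cast in the wrapper matters more than it might look. Below is a minimal sketch of the same conversion as a pure function, so the arithmetic can be checked without calling clock(). The name `TicksToMs` and its `ticks_per_sec` parameter (standing in for CLOCKS_PER_SEC) are assumptions made for illustration, not part of the original code.

```cpp
#include <cassert>
#include <cstdint>

// Convert a tick count to milliseconds.
// ticks_per_sec plays the role of CLOCKS_PER_SEC (hypothetical parameter).
std::uint32_t TicksToMs(std::int64_t ticks, std::int64_t ticks_per_sec)
{
    assert(ticks_per_sec > 0);
    // Multiply in 64 bits first: in signed 32-bit arithmetic, ticks * 1000
    // would overflow once ticks exceeds roughly 2.1 million.
    return static_cast<std::uint32_t>(ticks * 1000 / ticks_per_sec);
}
```

With 1000 ticks per second the function returns the tick count unchanged; with 1,000,000 ticks per second, 3,000,000 ticks become 3000 ms.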

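Solution 2:

Using SYSTEMTIME and FILETIME, we can obtain the time elapsed since midnight on January 1, 1601, in units of 100 nanoseconds.

That is certainly fine-grained enough, but the value is a LONGLONG. No problem: we can use this time to calibrate the native GetTickCount().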

    #include <windows.h>

    ULONG GetTickCountCalibrate()
    {
        static ULONG s_ulFirstCallTick = 0;
        static LONGLONG s_ullFirstCallTickMS = 0;

        // Current time as a FILETIME (100 ns units since 1601-01-01).
        // UTC (GetSystemTime) is used instead of local time so that
        // daylight-saving or time-zone changes do not shift the result.
        SYSTEMTIME systemtime;
        FILETIME filetime;
        GetSystemTime(&systemtime);
        SystemTimeToFileTime(&systemtime, &filetime);
        LARGE_INTEGER liCurrentTime;
        liCurrentTime.HighPart = filetime.dwHighDateTime;
        liCurrentTime.LowPart = filetime.dwLowDateTime;
        LONGLONG llCurrentTimeMS = liCurrentTime.QuadPart / 10000;  // 100 ns -> ms

        // On the first call, record the GetTickCount() baseline
        // and the wall-clock time that goes with it
        if (s_ulFirstCallTick == 0)
        {
            s_ulFirstCallTick = GetTickCount();
        }
        if (s_ullFirstCallTickMS == 0)
        {
            s_ullFirstCallTickMS = llCurrentTimeMS;
        }

        // Baseline tick plus the milliseconds elapsed since the first call
        return s_ulFirstCallTick + (ULONG)(llCurrentTimeMS - s_ullFirstCallTickMS);
    }

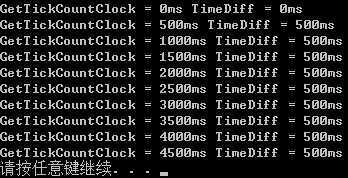

测试结果:
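The two arithmetic steps in the calibrated version, joining the FILETIME halves into milliseconds and adding the elapsed time to the first tick, can be sketched without any Windows headers. This is only an illustration: `FileTimeParts`, `FileTimeToMs` and `CalibratedTick` are hypothetical names that mirror the logic above, not Windows APIs.

```cpp
#include <cstdint>

// Hypothetical stand-in for the two 32-bit halves of a Windows FILETIME.
struct FileTimeParts
{
    std::uint32_t low;
    std::uint32_t high;
};

// Join the halves into one 64-bit count of 100 ns intervals, then convert to ms.
std::int64_t FileTimeToMs(FileTimeParts ft)
{
    const std::uint64_t ticks100ns =
        (static_cast<std::uint64_t>(ft.high) << 32) | ft.low;
    return static_cast<std::int64_t>(ticks100ns / 10000);  // 10,000 x 100 ns = 1 ms
}

// Calibrated tick: the tick value captured on the first call plus the
// wall-clock milliseconds elapsed since that first call.
std::uint32_t CalibratedTick(std::uint32_t firstTick,
                             std::int64_t firstMs,
                             std::int64_t nowMs)
{
    return firstTick + static_cast<std::uint32_t>(nowMs - firstMs);
}
```

For example, a first tick of 1000 captured at wall-clock time 5000 ms gives a calibrated tick of 1050 when the wall clock reads 5050 ms. The result is still a 32-bit value, so it wraps around just as GetTickCount() does.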

Precision comparison

Read the current time every 50 ms, compare each TimeDiff against 50, and collect statistics over 1000 iterations:

    #include <stdio.h>
    #include <stdlib.h>    // abs
    #include <windows.h>

    // Assumes GetTickCountCalibrate() from above is defined in the same file;
    // swap in GetTickCount or GetTickCountClock to measure the other timers.
    int main(void)
    {
        int nMaxDeviation = 0;
        int nMinDeviation = 99;
        int nSumDeviation = 0;

        DWORD dwLastTime = GetTickCountCalibrate();
        Sleep(50);

        for (int i = 0; i != 1000; ++i)
        {
            DWORD dwCurrentTime = GetTickCountCalibrate();
            // Convert the unsigned difference to int before subtracting 50, so an
            // interval shorter than 50 ms goes negative instead of wrapping around
            int nDeviation = abs((int)(dwCurrentTime - dwLastTime) - 50);
            nMaxDeviation = nDeviation > nMaxDeviation ? nDeviation : nMaxDeviation;
            nMinDeviation = nDeviation < nMinDeviation ? nDeviation : nMinDeviation;
            nSumDeviation += nDeviation;
            dwLastTime = dwCurrentTime;
            Sleep(50);
        }
        printf("nMaxDiff = %2dms, nMinDiff = %dms, nSumDiff = %4dms, AverDiff = %.3fms\n",
            nMaxDeviation, nMinDeviation, nSumDeviation, nSumDeviation / 1000.0f);

        return 0;
    }

Comparing the precision of GetTickCount, GetTickCountClock and GetTickCountCalibrate gives:

    GetTickCount           nMaxDiff = 13ms, nMinDiff = 3ms, nSumDiff = 5079ms, AverDiff = 5.079ms
    GetTickCountClock      nMaxDiff =  2ms, nMinDiff = 0ms, nSumDiff =    4ms, AverDiff = 0.004ms
    GetTickCountCalibrate  nMaxDiff =  1ms, nMinDiff = 0ms, nSumDiff =    3ms, AverDiff = 0.003ms

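As the numbers show, the native GetTickCount has far too large an error: a maximum deviation of 13 ms and an average of about 5 ms, which clearly cannot satisfy millisecond-level timing.

GetTickCountClock and GetTickCountCalibrate are nearly identical in precision, and both can satisfy millisecond-level timing.

The difference is that GetTickCountClock counts from the moment the current program starts, while GetTickCountCalibrate counts from system boot.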
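The statistics reported in the comparison (maximum, minimum, sum and average deviation) can also be computed from a recorded list of tick readings, which makes the bookkeeping easy to check on known data. The following is a rough sketch under that assumption; `DeviationStats` and `SummarizeDeviation` are illustrative names and not part of the original test program.

```cpp
#include <cstdint>
#include <cstdlib>
#include <vector>

struct DeviationStats
{
    int maxDeviation;
    int minDeviation;
    int sumDeviation;
    double average;
};

// Summarize how far each interval between consecutive readings
// strays from the expected interval (50 ms in the test above).
DeviationStats SummarizeDeviation(const std::vector<std::uint32_t>& ticks, int expectedMs)
{
    DeviationStats stats = {0, 0, 0, 0.0};
    if (ticks.size() < 2)
    {
        return stats;  // at least two readings are needed for one interval
    }
    const int intervals = static_cast<int>(ticks.size()) - 1;
    for (int i = 1; i <= intervals; ++i)
    {
        // Unsigned subtraction yields the elapsed ms; convert to int before
        // comparing so a short interval goes negative instead of wrapping.
        const int elapsed = static_cast<int>(ticks[i] - ticks[i - 1]);
        const int deviation = std::abs(elapsed - expectedMs);
        if (i == 1 || deviation > stats.maxDeviation) stats.maxDeviation = deviation;
        if (i == 1 || deviation < stats.minDeviation) stats.minDeviation = deviation;
        stats.sumDeviation += deviation;
    }
    stats.average = static_cast<double>(stats.sumDeviation) / intervals;
    return stats;
}
```

For the readings 0, 50, 101, 149, 212 with an expected interval of 50 ms, the intervals are 50, 51, 48 and 63 ms, so the deviations are 0, 1, 2 and 13 ms: maximum 13, minimum 0, sum 16, average 4.0.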

About overflow

A 4-byte ULONG can hold at most 4294967295, so a millisecond counter wraps around after 4294967296 ms, which is about 49.7 days.
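A wrapped counter does not necessarily break time-difference calculations. As a small sketch (the helper name `ElapsedMs` is made up for illustration), subtracting two unsigned 32-bit readings is done modulo 2^32, so the difference stays correct across a single wraparound as long as the real interval is shorter than about 49.7 days:

```cpp
#include <cstdint>

// A 32-bit millisecond counter wraps after 2^32 ms.
constexpr std::uint64_t kWrapMs = 1ULL << 32;                 // 4294967296
constexpr std::uint64_t kMsPerDay = 24ULL * 60 * 60 * 1000;   // 86400000
static_assert(kWrapMs / kMsPerDay == 49, "wraps after 49 full days (about 49.7)");

// Elapsed time between two readings of a wrapping 32-bit tick counter.
std::uint32_t ElapsedMs(std::uint32_t earlier, std::uint32_t later)
{
    return later - earlier;  // unsigned arithmetic is modulo 2^32
}
```

For instance, a reading of 4294967290 taken just before the wrap and a reading of 10 taken just after it are 16 ms apart, and `ElapsedMs` returns 16.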


Original article: http://www.cnblogs.com/goagent/p/4083812.html