Tags: sum style xpl import classes pytorch png technology class

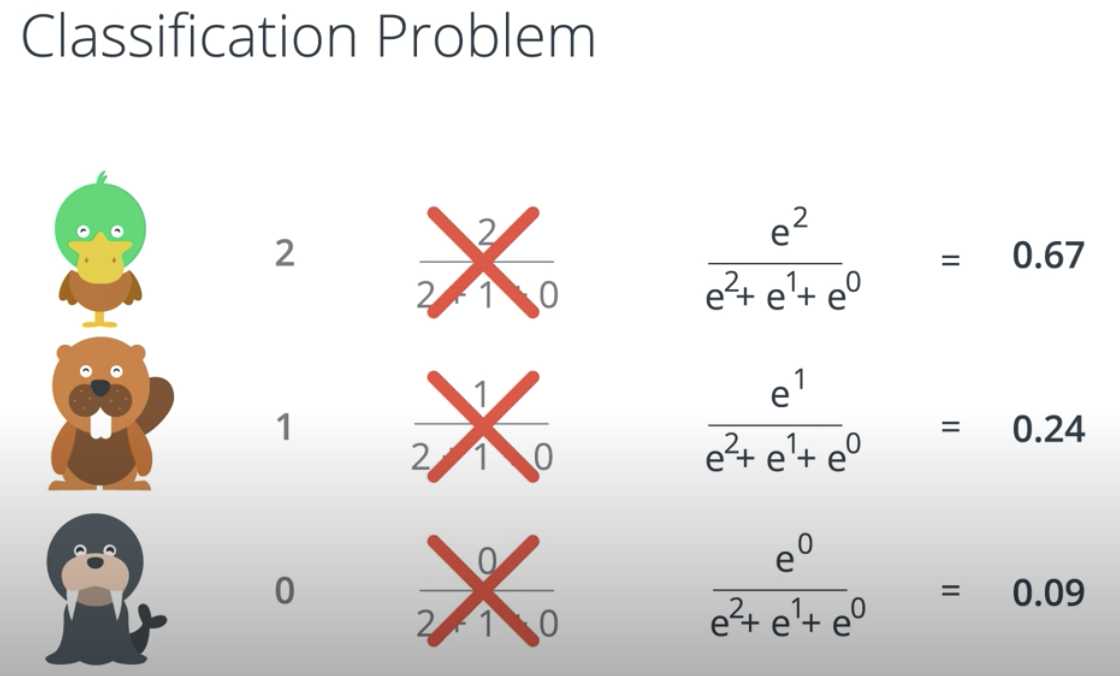

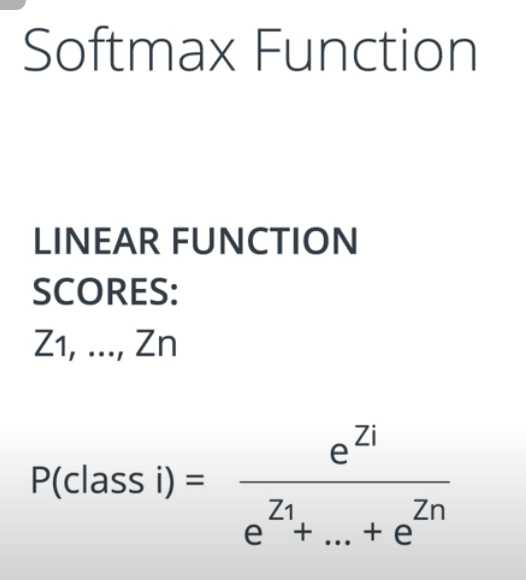

In the next video, we'll learn about the softmax function, which plays the same role as the sigmoid activation function when the problem has 3 or more classes.
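For reference, the usual textbook definition can be written as follows. The symbols $z_1, \dots, z_n$ for the class scores and $\sigma$ for the sigmoid are notation assumed here, not taken from the course. The second line shows why softmax is described as the multi-class counterpart of the sigmoid: with only two classes it reduces to a sigmoid of the score difference.

```latex
\[
\operatorname{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}}, \qquad i = 1, \dots, n
\]
\[
n = 2:\quad \frac{e^{z_1}}{e^{z_1} + e^{z_2}} = \frac{1}{1 + e^{-(z_1 - z_2)}} = \sigma(z_1 - z_2)
\]
```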

```python
import numpy as np

def softmax(L):
    # Exponentiate every score, then divide by the total so the outputs sum to 1.
    expL = np.exp(L)
    sumExpL = sum(expL)
    result = []
    for i in expL:
        result.append(i * 1.0 / sumExpL)
    return result
```
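The `softmax` function above works for moderate scores, but `np.exp` overflows to `inf` for very large inputs, which turns the result into `nan`. A common remedy, sketched below, is to subtract the largest score before exponentiating; this leaves the result unchanged because softmax only depends on differences between scores. The name `stable_softmax` and the sample inputs are illustrative assumptions, not part of the course material.

```python
import numpy as np

def stable_softmax(scores):
    """Softmax that shifts scores by their maximum before exponentiating."""
    scores = np.asarray(scores, dtype=float)
    # After the shift the largest entry is 0, so every exp() value is at most 1.
    shifted = scores - np.max(scores)
    exp_scores = np.exp(shifted)
    return exp_scores / np.sum(exp_scores)

# Hypothetical sample scores for three classes.
probs = stable_softmax([2.0, 1.0, 0.0])
# Scores this large would overflow without the shift.
big = stable_softmax([1000.0, 1001.0, 1002.0])
```

Both calls return probabilities that sum to 1. The second input has the same score gaps as the first in reverse order, so its output is the first output reversed.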

[Intro to Deep Learning with PyTorch -- L2 -- N15] Softmax function


Original article: https://www.cnblogs.com/Answer1215/p/13090754.html