root@master1:/k8s-data/yaml/namespaces# pwd

/k8s-data/yaml/namespaces

root@master1:/k8s-data/yaml/namespaces# cat danran-ns.yml

apiVersion: v1 # API version
kind: Namespace # resource type: Namespace
metadata: # metadata definition
  name: danran

root@master1:/k8s-data/yaml/namespaces# kubectl apply -f danran-ns.yml

namespace/danran created

root@master1:/k8s-data/yaml/namespaces# cat jevon-ns.yml

apiVersion: v1 # API version
kind: Namespace # resource type: Namespace
metadata: # metadata definition
  name: jevon

root@master1:/k8s-data/yaml/namespaces# kubectl apply -f jevon-ns.yml

namespace/jevon created

The two namespaces, danran and jevon, have now been created:

root@master1:/k8s-data/yaml/namespaces# kubectl get ns

NAME STATUS AGE

danran Active 97s

default Active 26h

jevon Active 3s

kube-node-lease Active 26h

kube-public Active 26h

kube-system Active 26h

kubernetes-dashboard Active 23h
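The two Namespace manifests differ only in `metadata.name`, so they can also be generated. The sketch below is an illustration rather than part of the original walkthrough: it builds the same object as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts. The helper name `namespace_manifest` is hypothetical.

```python
import json


def namespace_manifest(name: str) -> dict:
    """Build a Namespace manifest equivalent to the YAML files above."""
    return {
        "apiVersion": "v1",          # API version
        "kind": "Namespace",         # resource type
        "metadata": {"name": name},  # metadata; name is nested under metadata
    }


if __name__ == "__main__":
    # Print one JSON document per namespace used in this walkthrough.
    for ns in ("danran", "jevon"):
        print(json.dumps(namespace_manifest(ns), indent=2))
```

Writing the nesting out explicitly also makes it clear that `name` belongs under `metadata`, which matters because YAML expresses that nesting through indentation only.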

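ReplicationController: the replica controller. Its selector supports only equality-based matching (=, !=); in the manifest it is written as a plain key/value map.

ReplicaSet: the replica set. It differs from the ReplicationController in selector support: its selector additionally supports set-based matching (In, NotIn).

Deployment: a higher-level controller than the ReplicaSet. Besides everything a ReplicaSet does, it adds many advanced features, most importantly rolling upgrades and rollbacks.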
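The difference between the two selector styles can be sketched in a few lines of Python. This is a simplified model of the matching rules, not the Kubernetes implementation; the function names are hypothetical, and the labels are the ones used in this article's manifests.

```python
def matches_equality(labels: dict, selector: dict) -> bool:
    """Equality-based selector: every key must exist with exactly that value."""
    return all(labels.get(key) == value for key, value in selector.items())


def matches_expression(labels: dict, expr: dict) -> bool:
    """Evaluate one set-based requirement (one matchExpressions entry)."""
    key, operator = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # A missing key also satisfies NotIn.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unknown operator: {operator}")


def matches_set_based(labels, match_labels=None, match_expressions=None) -> bool:
    """ReplicaSet/Deployment selector: matchLabels AND all matchExpressions."""
    return matches_equality(labels, match_labels or {}) and all(
        matches_expression(labels, expr) for expr in (match_expressions or [])
    )


if __name__ == "__main__":
    pod_labels = {"app": "ng-deploy-80"}
    expr = {"key": "app", "operator": "In", "values": ["ng-deploy-80", "ng-rs-81"]}
    print(matches_set_based(pod_labels, match_expressions=[expr]))
```

A pod labelled `app: ng-deploy-80` satisfies the `In` expression because its value is one of the listed alternatives, something a single equality-based selector cannot express.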

https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/

root@master1:/k8s-data/yaml/danran/case1# cat deployment.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: danran
spec:
  replicas: 2
  selector:
    #app: ng-deploy-80 #rc
    #matchLabels: #rs or deployment
    #  app: ng-deploy-80
    matchExpressions:
      - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80

root@master1:/k8s-data/yaml/danran/case1# kubectl apply -f deployment.yml

deployment.apps/nginx-deployment configured

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

nginx-deployment-6fd69d55d4-6dmlc 1/1 Running 0 7s

nginx-deployment-6fd69d55d4-rjwq2 1/1 Running 0 4s

Check which controller manages a given pod

root@master1:/kubernetes# kubectl describe pod nginx-deployment-6fd69d55d4-rjwq2 -n danran
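The authoritative answer is the `Controlled By` field in the `kubectl describe pod` output (backed by the pod's `metadata.ownerReferences`). As a quick sanity check, the owner can usually be guessed from the pod name, because controllers generate pod names as the owner's name plus a random suffix. The sketch below relies on that naming convention only; `controller_name_from_pod` is a hypothetical helper.

```python
def controller_name_from_pod(pod_name: str) -> str:
    """Strip the random suffix to guess the direct owner of a generated pod name.

    This is a naming-convention heuristic, not an API lookup.
    """
    owner, separator, suffix = pod_name.rpartition("-")
    if not separator or not owner or not suffix:
        raise ValueError(f"not a generated pod name: {pod_name!r}")
    return owner


if __name__ == "__main__":
    # A Deployment's pods are owned by a ReplicaSet named <deployment>-<hash>.
    print(controller_name_from_pod("nginx-deployment-6fd69d55d4-rjwq2"))
```

For a Deployment the direct owner is the ReplicaSet it created, which is why the guessed name still carries the hash segment.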

root@master1:/k8s-data/yaml/danran/case1# kubectl delete -f deployment.yml

deployment.apps "nginx-deployment" deleted
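The rolling upgrade that distinguishes a Deployment from a bare ReplicaSet is bounded by two settings, `maxSurge` and `maxUnavailable`. The article does not set them, so the sketch below assumes the documented defaults of 25% each, with `maxSurge` rounded up and `maxUnavailable` rounded down. The function names are hypothetical.

```python
def resolve_int_or_percent(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = int(value[:-1]) * replicas  # integer math avoids float error
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> dict:
    """Compute how many pods may exist or be missing during a rollout."""
    surge = resolve_int_or_percent(max_surge, replicas, round_up=True)
    unavailable = resolve_int_or_percent(max_unavailable, replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


if __name__ == "__main__":
    # replicas: 2, as in deployment.yml above
    print(rolling_update_bounds(2))
```

With two replicas and the defaults, one extra pod may be started and no existing pod may be taken down before its replacement is ready.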

root@master1:/k8s-data/yaml/danran/case1# cat rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: ng-rc
  namespace: danran
spec:
  replicas: 2
  selector:
    app: ng-rc-80
    #app1: ng-rc-81
  template:
    metadata:
      labels:
        app: ng-rc-80
        #app1: ng-rc-81
    spec:
      containers:
      - name: ng-rc-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80

root@master1:/k8s-data/yaml/danran/case1# kubectl apply -f rc.yml

replicationcontroller/ng-rc created

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

ng-rc-28pml 1/1 Running 0 24s

ng-rc-ltfk9 1/1 Running 0 24s

root@master1:/k8s-data/yaml/danran/case1# kubectl delete -f rc.yml

replicationcontroller "ng-rc" deleted

root@master1:/k8s-data/yaml/danran/case1# cat rs.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
  namespace: danran
spec:
  replicas: 3
  selector:
    #matchLabels:
    #  app: ng-rs-80
    matchExpressions:
      - {key: app, operator: In, values: [ng-rs-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-rs-80
    spec:
      containers:
      - name: ng-rs-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80

root@master1:/k8s-data/yaml/danran/case1# kubectl apply -f rs.yml

replicaset.apps/frontend created

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

frontend-4zd77 1/1 Running 0 9m1s

frontend-drqts 1/1 Running 0 9m1s

frontend-wfqcq 1/1 Running 0 9m1s

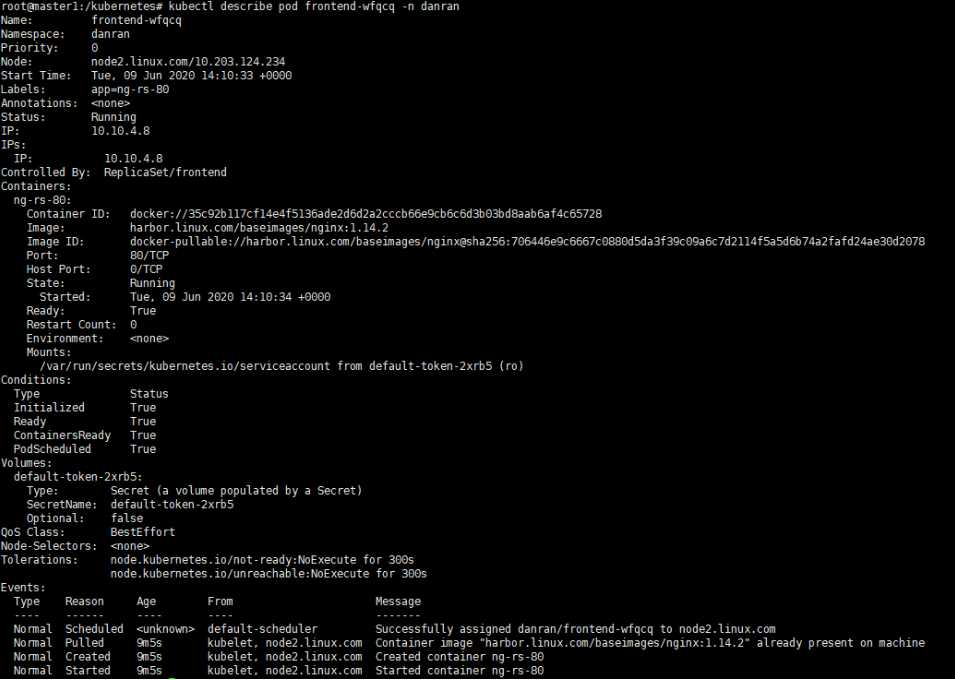

Check which controller manages a given pod

root@master1:/kubernetes# kubectl describe pod frontend-wfqcq -n danran

root@master1:/k8s-data/yaml/danran/case2# cat 1-deploy_node.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: danran
spec:
  replicas: 1
  selector:
    #matchLabels: #rs or deployment
    #  app: ng-deploy3-80
    matchExpressions:
      - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
      #nodeSelector:
      #  env: group1

root@master1:/k8s-data/yaml/danran/case2# kubectl apply -f 1-deploy_node.yml

deployment.apps/nginx-deployment created

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

nginx-deployment-6f48df4946-vp5ph 1/1 Running 0 19s

root@master1:/k8s-data/yaml/danran/case2# cat 2-svc_service.yml

apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
  namespace: danran
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
  type: ClusterIP
  selector:
    app: ng-deploy-80

root@master1:/k8s-data/yaml/danran/case2# kubectl apply -f 2-svc_service.yml

service/ng-deploy-80 created

root@master1:/kubernetes# kubectl get service -n danran

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ng-deploy-80 ClusterIP 172.16.224.0 <none> 80/TCP 100s

Create a busybox test pod

root@master1:/k8s-data/yaml/danran/case2# cat busybox.yaml

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: danran # original comment: DNS of the default namespace
spec:
  containers:
  - image: harbor.linux.com/baseimages/busybox
    command:
      - sleep
      - "3600"
    imagePullPolicy: Always
    name: busybox
  restartPolicy: Always

root@master1:/k8s-data/yaml/danran/case2# kubectl apply -f busybox.yaml

pod/busybox created

From the busybox container, test whether the network can reach the ng-deploy-80 service created above

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 0 35s

nginx-deployment-6f48df4946-vp5ph 1/1 Running 0 14m

Inside the container, the service name ng-deploy-80 resolves correctly

root@master1:/kubernetes# kubectl exec -it busybox sh -n danran

/ # ping ng-deploy-80

PING ng-deploy-80 (172.16.224.0): 56 data bytes
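The short name resolves here because busybox runs in the same namespace as the service. From another namespace a longer form is needed. The sketch below lists the DNS names under which a Service is normally reachable; it assumes the cluster domain is the common default `cluster.local`, which this article does not state, and `service_dns_names` is a hypothetical helper.

```python
def service_dns_names(service: str, namespace: str, cluster_domain: str = "cluster.local") -> list:
    """Return the DNS names for a Service, from shortest to fully qualified."""
    return [
        service,                                           # same namespace only
        f"{service}.{namespace}",                          # from any namespace
        f"{service}.{namespace}.svc",
        f"{service}.{namespace}.svc.{cluster_domain}",     # fully qualified
    ]


if __name__ == "__main__":
    for name in service_dns_names("ng-deploy-80", "danran"):
        print(name)
```

Note that a ClusterIP is a virtual address, so a successful name lookup is a more reliable signal than ICMP replies; an HTTP request to port 80 tests the service itself.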

root@master1:/k8s-data/yaml/danran/case2# cat 3-svc_NodePort.yml

apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
  namespace: danran
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30012
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80

root@master1:/k8s-data/yaml/danran/case2# kubectl apply -f 3-svc_NodePort.yml

service/ng-deploy-80 created

root@master1:/kubernetes# kubectl get service -n danran

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ng-deploy-80 NodePort 172.16.206.246 <none> 81:30012/TCP 21s

Open port 30012 on any node or master in a browser to reach the nginx Pods selected by the ng-deploy-80 Service.
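Three port numbers are involved in a NodePort service, which is easy to mix up. The sketch below traces the path of a request using the values from 3-svc_NodePort.yml. It assumes the default NodePort range of 30000-32767 (configurable on the API server), and the node IP `192.0.2.10` is a placeholder, not an address from this cluster.

```python
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)  # assumed default: 30000-32767


def describe_node_port(port_spec: dict, node_ip: str) -> str:
    """Describe how traffic flows through one NodePort service port entry."""
    node_port = port_spec["nodePort"]
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range")
    return (
        f"{node_ip}:{node_port} -> "
        f"ClusterIP:{port_spec['port']} -> "
        f"Pod:{port_spec['targetPort']}"
    )


if __name__ == "__main__":
    spec = {"name": "http", "port": 81, "targetPort": 80, "nodePort": 30012}
    print(describe_node_port(spec, "192.0.2.10"))
```

`nodePort` is what the browser uses, `port` is what other pods use against the ClusterIP, and `targetPort` is where nginx listens inside the container.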

https://kubernetes.io/zh/docs/concepts/storage/

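An emptyDir volume is first created when a Pod is assigned to a node, and it exists for as long as that Pod runs on that node. As the name says, the volume is initially empty. All containers in the Pod can read and write the same files in the emptyDir volume, although the volume can be mounted at the same or different paths in each container. When a Pod is removed from the node for any reason, the data in the emptyDir is deleted permanently.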

root@master1:/k8s-data/yaml/danran/case3# cat deploy_empty.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: danran
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        emptyDir: {}

root@master1:/k8s-data/yaml/danran/case3# kubectl apply -f deploy_empty.yml

deployment.apps/nginx-deployment created

Generate some data inside the container

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

nginx-deployment-764876fc9f-dkjfq 1/1 Running 0 3m22s

root@master1:/kubernetes# kubectl exec -it nginx-deployment-764876fc9f-dkjfq bash -n danran

root@nginx-deployment-764876fc9f-dkjfq:/# cd /cache/

root@nginx-deployment-764876fc9f-dkjfq:/cache# ls

root@nginx-deployment-764876fc9f-dkjfq:/cache# echo 123 > danran.txt

root@nginx-deployment-764876fc9f-dkjfq:/cache# ls

danran.txt

Check whether the data appears in a directory on the node hosting the container

First find the node the pod is running on

root@master1:/k8s-data/yaml/danran/case3# kubectl get pod -n danran -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-764876fc9f-dkjfq 1/1 Running 0 6m6s 10.10.4.11 node2.linux.com <none> <none>

root@node2:~# find / -name danran.txt

/var/lib/kubelet/pods/eeacb4cb-f694-4f19-a31d-73628c26f2d6/volumes/kubernetes.io~empty-dir/cache-volume/danran.txt

root@node2:~# cat /var/lib/kubelet/pods/eeacb4cb-f694-4f19-a31d-73628c26f2d6/volumes/kubernetes.io~empty-dir/cache-volume/danran.txt

123
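Instead of searching the whole filesystem with `find`, the directory can be derived from the pod UID and the volume name, following the layout visible in the output above. The sketch below assumes the kubelet root directory is `/var/lib/kubelet`, as on this node; `empty_dir_host_path` is a hypothetical helper. The UID can be read with `kubectl get pod <pod> -n danran -o jsonpath='{.metadata.uid}'`.

```python
from pathlib import PurePosixPath


def empty_dir_host_path(pod_uid: str, volume_name: str, kubelet_root: str = "/var/lib/kubelet") -> str:
    """Build the node-side directory that backs an emptyDir volume."""
    return str(
        PurePosixPath(kubelet_root)
        / "pods"
        / pod_uid
        / "volumes"
        / "kubernetes.io~empty-dir"
        / volume_name
    )


if __name__ == "__main__":
    # UID and volume name taken from the transcript above
    print(empty_dir_host_path("eeacb4cb-f694-4f19-a31d-73628c26f2d6", "cache-volume"))
```

Because the path contains the pod UID, the directory disappears together with the pod, which is what makes emptyDir data non-persistent.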

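A hostPath volume mounts a file or directory from the host node's filesystem into the Pod. When the Pod is deleted, the data on the host is not removed, but it stays on that one node, so a Pod rescheduled to a different node will not see it.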

root@master1:/k8s-data/yaml/danran/case4# cat deploy_hostPath.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: danran
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/mysql
          name: data-volume
      volumes:
      - name: data-volume
        hostPath:
          path: /data/mysql

root@master1:/k8s-data/yaml/danran/case4# kubectl apply -f deploy_hostPath.yml

deployment.apps/nginx-deployment created

root@master1:/kubernetes# kubectl get pod -n danran

NAME READY STATUS RESTARTS AGE

nginx-deployment-68b7cf75b8-jlbpd 1/1 Running 0 31s

Generate test data inside the container

Only data written under the mountPath is shared with the host

root@master1:/kubernetes# kubectl exec -it nginx-deployment-68b7cf75b8-jlbpd bash -n danran

root@nginx-deployment-68b7cf75b8-jlbpd:/#

root@nginx-deployment-68b7cf75b8-jlbpd:/# cd /data/mysql/

root@nginx-deployment-68b7cf75b8-jlbpd:/data/mysql# mkdir logs

root@nginx-deployment-68b7cf75b8-jlbpd:/data/mysql# cd logs/

root@nginx-deployment-68b7cf75b8-jlbpd:/data/mysql/logs# echo 12345qaz > a.txt

root@nginx-deployment-68b7cf75b8-jlbpd:/data/mysql/logs# pwd

/data/mysql/logs

Check on the host running the container whether the data is there

First find the host the pod is running on

root@master1:/k8s-data/yaml/danran/case4# kubectl get pod -n danran -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-68b7cf75b8-jlbpd 1/1 Running 0 3m4s 10.10.4.12 node2.linux.com <none> <none>

root@node2:~# ls /data/mysql/

logs

root@node2:~# cat /data/mysql/logs/a.txt

12345qaz
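The rule that only files under the mountPath reach the host can be expressed as a small path translation. This is an illustrative model, not something Kubernetes exposes; `host_path_for` is a hypothetical helper. In case4 the mountPath and the hostPath happen to be identical, so the second call uses the differing pair from the ConfigMap example (/data/nginx/html in the container, /data/nginx/danran on the host).

```python
from pathlib import PurePosixPath


def host_path_for(container_path: str, mount_path: str, host_path: str):
    """Map a path inside the container to the host, or None if not on the volume."""
    try:
        relative = PurePosixPath(container_path).relative_to(mount_path)
    except ValueError:
        # The file lives in the container's own filesystem layer.
        return None
    return str(PurePosixPath(host_path) / relative)


if __name__ == "__main__":
    print(host_path_for("/data/mysql/logs/a.txt", "/data/mysql", "/data/mysql"))
    print(host_path_for("/data/nginx/html/index.html", "/data/nginx/html", "/data/nginx/danran"))
    print(host_path_for("/tmp/a.txt", "/data/mysql", "/data/mysql"))
```

A `None` result corresponds to data that is lost when the container is recreated.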

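An nfs volume allows an existing NFS (Network File System) share to be mounted into a Pod. Unlike emptyDir, the contents of an nfs volume are preserved when the Pod is deleted; the volume is merely unmounted. This means an NFS volume can be pre-populated with data, and that data can be handed off between Pods. NFS can be mounted by multiple writers simultaneously.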

root@ha1:~# apt install nfs-server

Export the /data/k8sdata and /data/danran directories to other hosts

root@ha1:~# cat /etc/exports

/data/k8sdata *(rw,no_root_squash)

/data/danran *(rw,no_root_squash)

root@ha1:~# mkdir /data/k8sdata -p

root@ha1:~# mkdir /data/danran -p

root@ha1:~# systemctl restart nfs-server

root@ha1:~# systemctl enable nfs-server

root@ha1:/data/k8sdata# cat index.html

web server
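Each line in /etc/exports has the form `path client(options)`. The sketch below parses that simple form to make the two export lines above easier to read; it is a simplified parser written for this article's entries, not a full implementation of the exports(5) syntax, and `parse_exports_line` is a hypothetical helper.

```python
def parse_exports_line(line: str) -> list:
    """Split one /etc/exports line into path, client and option list."""
    path, *clients = line.split()
    entries = []
    for client in clients:
        host, _, raw_options = client.partition("(")
        options = raw_options.rstrip(")").split(",") if raw_options else []
        entries.append({"path": path, "client": host, "options": options})
    return entries


if __name__ == "__main__":
    for entry in parse_exports_line("/data/k8sdata *(rw,no_root_squash)"):
        print(entry)
        if entry["client"] == "*" and "no_root_squash" in entry["options"]:
            # Any host may mount the share, and remote root keeps root rights.
            print("note: very permissive export, acceptable only in a lab")
```

The combination of `*` and `no_root_squash` is convenient for a test cluster; restricting the client to the node subnet would be a safer choice elsewhere.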

root@master1:/k8s-data/yaml/danran/case5# cat deploy_nfs2.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-site2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-81
  template:
    metadata:
      labels:
        app: ng-deploy-81
    spec:
      containers:
      - name: ng-deploy-81
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 10.203.124.237
          path: /data/k8sdata
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-81
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30017
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-81

root@master1:/k8s-data/yaml/danran/case5# kubectl apply -f deploy_nfs2.yml

deployment.apps/nginx-deployment-site2 created

service/ng-deploy-81 created

root@master1:/kubernetes# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-7b466bcdb8-sbkqt 1/1 Running 0 44h

nginx-deployment-site2-685f87bf5c-vxztk 1/1 Running 0 81s

tomcat-deployment-567999ddc-wrv5v 1/1 Running 0 43h

Enter the container and check the NFS mount

root@master1:/kubernetes# kubectl exec -it nginx-deployment-site2-685f87bf5c-vxztk bash

root@nginx-deployment-site2-685f87bf5c-vxztk:/# df

Filesystem 1K-blocks Used Available Use% Mounted on

overlay 20961280 3208024 17753256 16% /

tmpfs 65536 0 65536 0% /dev

tmpfs 4084052 0 4084052 0% /sys/fs/cgroup

/dev/mapper/vg00-rootvol 20961280 3208024 17753256 16% /etc/hosts

shm 65536 0 65536 0% /dev/shm

10.203.124.237:/data/k8sdata 20961280 2511872 18449408 12% /usr/share/nginx/html/mysite

tmpfs 4084052 12 4084040 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 4084052 0 4084052 0% /proc/acpi

tmpfs 4084052 0 4084052 0% /proc/scsi

tmpfs 4084052 0 4084052 0% /sys/firmware

root@nginx-deployment-site2-685f87bf5c-vxztk:/# cat /usr/share/nginx/html/mysite/index.html

web server

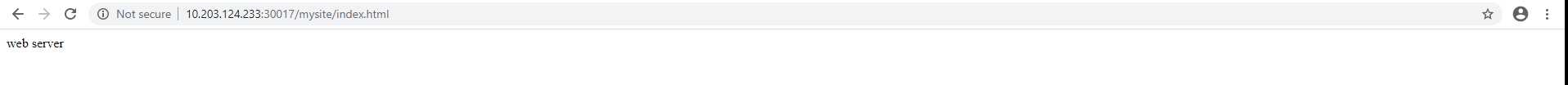

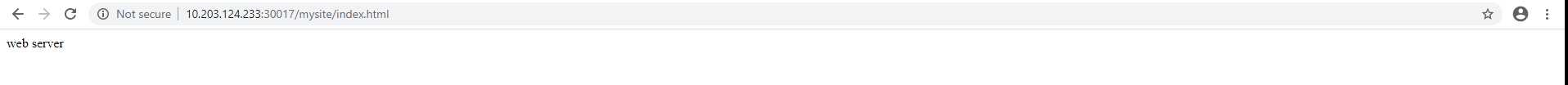

Access nginx from a browser and verify that it serves the data from the NFS directory

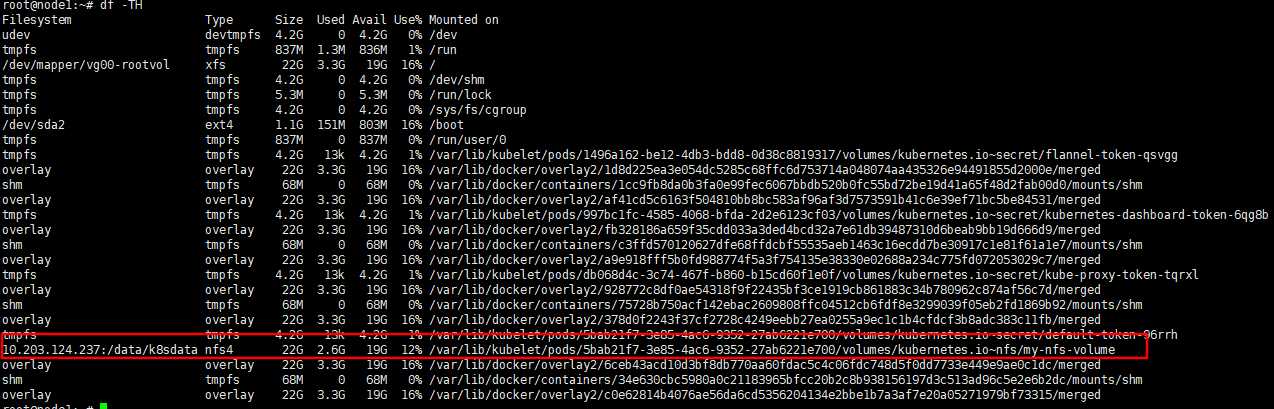

On the node where the pod runs, confirm that the NFS directory is mounted

root@master1:/usr/local/src/kubeadm/nginx# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-7b466bcdb8-sbkqt 1/1 Running 0 44h 10.10.4.2 node2.linux.com <none> <none>

nginx-deployment-site2-685f87bf5c-t8wsb 1/1 Running 0 46s 10.10.3.12 node1.linux.com <none> <none>

tomcat-deployment-567999ddc-wrv5v 1/1 Running 0 43h 10.10.4.3 node2.linux.com <none> <none>

root@master1:/k8s-data/yaml/danran/case5# cat deploy_nfs.yml

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-site
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
        - mountPath: /data/danran
          name: danran-nfs-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 10.203.124.237
          path: /data/k8sdata
      - name: danran-nfs-volume
        nfs:
          server: 10.203.124.237
          path: /data/danran
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30016
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80

root@master1:/k8s-data/yaml/danran/case5# kubectl apply -f deploy_nfs.yml

deployment.apps/nginx-deployment-site created

service/ng-deploy-80 unchanged

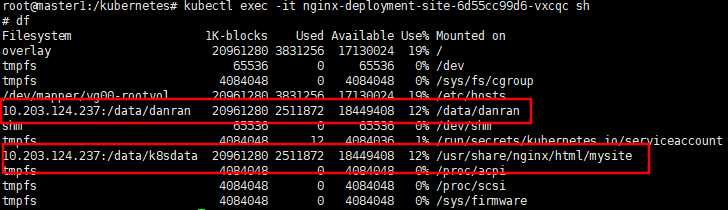

In the pod, and on the node where the pod runs, confirm that both NFS directories are mounted

root@master1:/kubernetes# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-7b466bcdb8-sbkqt 1/1 Running 0 44h 10.10.4.2 node2.linux.com <none> <none>

nginx-deployment-site-6d55cc99d6-vxcqc 1/1 Running 0 105s 10.10.4.14 node2.linux.com <none> <none>

tomcat-deployment-567999ddc-wrv5v 1/1 Running 0 43h 10.10.4.3 node2.linux.com <none> <none>

root@master1:/kubernetes# kubectl exec -it nginx-deployment-site-6d55cc99d6-vxcqc sh

YAML file defining the ConfigMap

root@master1:/k8s-data/yaml/danran/case6# cat deploy_configmap.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default: |
    server {
       listen       80;
       server_name  www.mysite.com;
       index        index.html;

       location / {
           root /data/nginx/html;
           if (!-e $request_filename) {
               rewrite ^/(.*) /index.html last;
           }
       }
    }
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/nginx/html
          name: nginx-static-dir
        - name: nginx-config
          mountPath: /etc/nginx/conf.d
      volumes:
      - name: nginx-static-dir
        hostPath:
          path: /data/nginx/danran
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
             - key: default
               path: mysite.conf
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30019
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80

Create the pod

root@master1:/k8s-data/yaml/danran/case6# kubectl apply -f deploy_configmap.yml

configmap/nginx-config created

deployment.apps/nginx-deployment created

service/ng-deploy-80 created

root@master1:/kubernetes# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-7b466bcdb8-sbkqt 1/1 Running 0 46h 10.10.4.2 node2.linux.com <none> <none>

nginx-deployment-64b664464f-k4j5p 1/1 Running 0 64s 10.10.3.13 node1.linux.com <none> <none>

tomcat-deployment-567999ddc-wrv5v 1/1 Running 0 45h 10.10.4.3 node2.linux.com <none> <none>

root@master1:/kubernetes# kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

danran-nginx-service NodePort 172.16.175.123 <none> 80:30004/TCP 46h app=nginx

danran-tomcat-service NodePort 172.16.218.15 <none> 80:31794/TCP 45h app=tomcat

kubernetes ClusterIP 172.16.0.1 <none> 443/TCP 2d <none>

ng-deploy-80 NodePort 172.16.135.46 <none> 81:30019/TCP 83s app=ng-deploy-80

Confirm that the pod contains the /etc/nginx/conf.d/mysite.conf configuration file

root@nginx-deployment-64b664464f-k4j5p:/# cat /etc/nginx/conf.d/mysite.conf

server {

listen 80;

server_name www.mysite.com;

index index.html;

location / {

root /data/nginx/html;

if (!-e $request_filename) {

rewrite ^/(.*) /index.html last;

}

}

}
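The file is called mysite.conf rather than default because of the `items` list in the volume definition, which maps a ConfigMap key to a file name. The sketch below models that mapping; it is a simplified illustration and `projected_files` is a hypothetical helper. Without `items`, every key becomes a file named after the key.

```python
from pathlib import PurePosixPath


def projected_files(data: dict, mount_path: str, items=None) -> dict:
    """Return {file path in container: content} for a ConfigMap volume."""
    if items is None:
        key_to_path = {key: key for key in data}          # one file per key
    else:
        key_to_path = {item["key"]: item["path"] for item in items}
    return {
        str(PurePosixPath(mount_path) / path): data[key]
        for key, path in key_to_path.items()
    }


if __name__ == "__main__":
    config = {"default": "server { listen 80; }"}
    items = [{"key": "default", "path": "mysite.conf"}]
    print(list(projected_files(config, "/etc/nginx/conf.d", items)))
```

Mounting a volume at /etc/nginx/conf.d also hides whatever files the image shipped in that directory, so mysite.conf is the only server block nginx loads from there.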

root@master1:/k8s-data/yaml/danran/case6# cat deploy_configmapenv.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  username: user1
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: harbor.linux.com/baseimages/nginx:1.14.2
        env:
        - name: MY_USERNAME
          valueFrom:
            configMapKeyRef:
              name: nginx-config
              key: username
        ports:
        - containerPort: 80
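In this second form the ConfigMap is not mounted as a file; a single key is injected as an environment variable. The sketch below shows how the `env` entry is resolved against the ConfigMap data. It is a simplified model that handles only literal `value` entries and `configMapKeyRef`; `resolve_env` is a hypothetical helper.

```python
def resolve_env(env_spec: list, configmaps: dict) -> dict:
    """Resolve a container's env list against ConfigMap data by name and key."""
    resolved = {}
    for entry in env_spec:
        if "value" in entry:
            resolved[entry["name"]] = entry["value"]       # literal value
            continue
        ref = entry["valueFrom"]["configMapKeyRef"]
        resolved[entry["name"]] = configmaps[ref["name"]][ref["key"]]
    return resolved


if __name__ == "__main__":
    configmaps = {"nginx-config": {"username": "user1"}}
    env_spec = [
        {
            "name": "MY_USERNAME",
            "valueFrom": {"configMapKeyRef": {"name": "nginx-config", "key": "username"}},
        }
    ]
    print(resolve_env(env_spec, configmaps))
```

Environment variables are read when the container starts, so a later change to the ConfigMap only takes effect after the pod is recreated. Inside the pod, `echo $MY_USERNAME` is a quick way to check the result.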

https://kubernetes.io/zh/docs/concepts/workloads/controllers/daemonset/

root@master1:/k8s-data/yaml/danran# vim daemonset.yml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers

root@master1:/k8s-data/yaml/danran# kubectl apply -f daemonset.yml
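Whether the DaemonSet pod also lands on the master depends on the toleration matching the node's taint. The sketch below is a simplified model of that matching rule (operator defaults to `Equal`, an empty effect matches every effect); `tolerates` is a hypothetical helper. The second taint in the example, `node-role.kubernetes.io/control-plane`, is the key newer kubeadm clusters typically use; it is included as an assumption about other environments, not something shown in this cluster.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single node taint."""
    operator = toleration.get("operator", "Equal")
    key = toleration.get("key", "")
    if key and key != taint["key"]:
        return False
    if not key and operator != "Exists":
        return False  # an empty key is only valid together with Exists
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False
    if operator == "Exists":
        return True   # Exists ignores the taint value
    return toleration.get("value", "") == taint.get("value", "")


if __name__ == "__main__":
    toleration = {"key": "node-role.kubernetes.io/master", "effect": "NoSchedule"}
    master_taint = {"key": "node-role.kubernetes.io/master", "effect": "NoSchedule"}
    control_plane_taint = {"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}
    print(tolerates(toleration, master_taint))
    print(tolerates(toleration, control_plane_taint))
```

If the taint key on the master differs from the one in the manifest, the toleration has no effect and the DaemonSet simply skips that node. `kubectl get pod -n kube-system -o wide` shows which nodes received a fluentd pod.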

https://kubernetes.io/zh/docs/concepts/workloads/controllers/statefulset/

Original article: https://www.cnblogs.com/JevonWei/p/13184399.html