
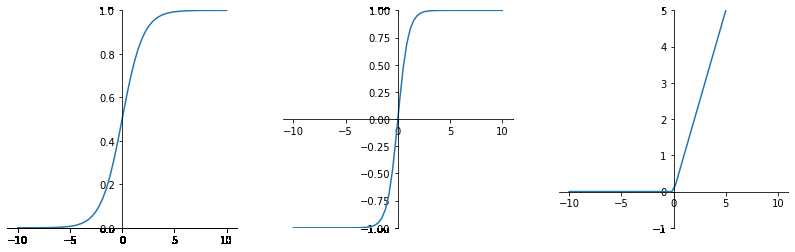

```python
import torch
import torch.nn.functional as F
import matplotlib.pyplot as plt

x = torch.linspace(-10, 10, 60)
fig = plt.figure(figsize=(14, 4))

def setup_axes(ax):
    """Hide the top/right spines and move the x/y axes so they cross at the origin."""
    ax.spines['top'].set_color('none')
    ax.spines['right'].set_color('none')
    ax.xaxis.set_ticks_position('bottom')
    ax.spines['bottom'].set_position(('data', 0))
    ax.yaxis.set_ticks_position('left')
    ax.spines['left'].set_position(('data', 0))

# Sigmoid activation function
ax = fig.add_subplot(131)
setup_axes(ax)
y = torch.sigmoid(x)
ax.plot(x.numpy(), y.numpy())
ax.set_ylim((0, 1))

# tanh activation function
ax = fig.add_subplot(132)
setup_axes(ax)
y1 = torch.tanh(x)
ax.plot(x.numpy(), y1.numpy())
ax.set_ylim((-1, 1))

# ReLU activation function
ax = fig.add_subplot(133)
setup_axes(ax)
y2 = F.relu(x)
ax.plot(x.numpy(), y2.numpy())
ax.set_ylim((-1, 5))

plt.show()
```

Output:

Sigmoid formula:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

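Sigmoid tends to cause vanishing gradients: its derivative $\sigma'(x) = \sigma(x)\,(1 - \sigma(x))$ is at most 0.25 (reached at $x = 0$) and approaches 0 as $|x|$ grows, so gradients shrink as they are propagated back through many sigmoid layers.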
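A minimal sketch of why this happens, using only Python's standard `math` module (the helper names `sigmoid` and `sigmoid_grad` are illustrative, not from the original post). It evaluates the derivative $\sigma(x)(1 - \sigma(x))$ at a few points and shows how the best-case factor of 0.25 compounds across stacked layers, ignoring the weights.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

# The derivative peaks at x = 0 and decays toward 0 for large |x|.
for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid={sigmoid(x):.6f}  grad={sigmoid_grad(x):.6f}")

# Backpropagating through n stacked sigmoid layers multiplies n such factors
# (weights ignored), so even in the best case the gradient shrinks like 0.25**n.
for n in (1, 5, 10):
    print(f"{n:2d} layers: best-case gradient factor = {0.25 ** n:.2e}")
```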

tanh formula:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

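tanh is centered at 0 and its outputs lie in $(-1, 1)$. Using tanh as an activation function therefore has a normalizing effect: for inputs symmetric around 0, its outputs have roughly zero mean, unlike sigmoid, whose outputs are all positive and centered around 0.5.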
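As a rough check of the zero-centering claim, the sketch below (standard library only; the helper names are hypothetical) averages sigmoid and tanh over the same 60 evenly spaced points on $[-10, 10]$ that `torch.linspace(-10, 10, 60)` produces in the plotting script. It also checks the identity $\tanh(x) = 2\sigma(2x) - 1$, which shows that tanh is a shifted and rescaled sigmoid.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)**2."""
    t = math.tanh(x)
    return 1.0 - t * t

# 60 evenly spaced points on [-10, 10], same grid as torch.linspace(-10, 10, 60).
xs = [-10 + 20 * i / 59 for i in range(60)]

mean_sigmoid = sum(sigmoid(x) for x in xs) / len(xs)
mean_tanh = sum(math.tanh(x) for x in xs) / len(xs)
print(f"mean sigmoid output: {mean_sigmoid:.4f}")
print(f"mean tanh output:    {mean_tanh:.4f}")

# tanh is a rescaled sigmoid: tanh(x) = 2 * sigmoid(2x) - 1
max_identity_error = max(abs(math.tanh(x) - (2 * sigmoid(2 * x) - 1)) for x in xs)
print(f"max |tanh(x) - (2*sigmoid(2x) - 1)|: {max_identity_error:.2e}")

# tanh's derivative peaks at 1 (at x = 0), versus 0.25 for sigmoid.
print(f"tanh'(0) = {tanh_grad(0.0)}")
```

Because the grid is symmetric around 0 and tanh is an odd function, the tanh outputs cancel out, while sigmoid outputs pair up as $\sigma(x) + \sigma(-x) = 1$ and average to 0.5.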

ReLU (Rectified Linear Unit):

$$\mathrm{ReLU}(x) = \max(0, x)$$

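Its derivative is 1 for $x > 0$ and 0 for $x < 0$ (it is undefined at exactly $x = 0$, where a fixed value such as 0 is used in practice).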
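A minimal sketch of ReLU and its derivative in plain Python; `relu` and `relu_grad` are illustrative names, and returning 0 at exactly $x = 0$ is an assumed convention rather than something the original post specifies.

```python
def relu(x: float) -> float:
    """ReLU: max(0, x)."""
    return max(0.0, x)

def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, 0 for x < 0.

    The derivative is undefined at x == 0; this sketch returns 0 there by convention.
    """
    return 1.0 if x > 0 else 0.0

for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(f"x={x:4.1f}  relu={relu(x):.1f}  grad={relu_grad(x):.1f}")
```

Because the derivative is exactly 1 for every positive input, stacking ReLU layers does not shrink the gradient the way repeated sigmoid factors of at most 0.25 do (weights aside).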


Original article: https://www.cnblogs.com/peixu/p/13212178.html