标签:mapjoin $2 mon cal safe apache final ado tis

● 系统环境说明

Linux环境:centos7.4

EMR:3.0.0

Java:1.8.0_112

● 集群配置

机器数量:50

内存:128G

硬盘:100T

CPU核心数:32C

SQL中使用了LEFT JOIN,在执行过程中遇到以下报错:

java.lang.RuntimeException: Map operator initialization failed: java.lang.ClassCastException: org.apache.hadoop.hive.ql.exec.persistence.HashMapWrapper cannot be cast to org.apache.hadoop.hive.ql.exec.persistence.MapJoinTableContainerDirectAccess

at org.apache.hadoop.hive.ql.exec.spark.SparkMapRecordHandler.init(SparkMapRecordHandler.java:118)

at org.apache.hadoop.hive.ql.exec.spark.HiveMapFunction.call(HiveMapFunction.java:55)

at org.apache.hadoop.hive.ql.exec.spark.HiveMapFunction.call(HiveMapFunction.java:30)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$7$1.apply(JavaRDDLike.scala:186)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$7$1.apply(JavaRDDLike.scala:186)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:801)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:801)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassCastException: org.apache.hadoop.hive.ql.exec.persistence.HashMapWrapper cannot be cast to org.apache.hadoop.hive.ql.exec.persistence.MapJoinTableContainerDirectAccess

at org.apache.hadoop.hive.ql.exec.vector.mapjoin.optimized.VectorMapJoinOptimizedHashTable.(VectorMapJoinOptimizedHashTable.java:92)

at org.apache.hadoop.hive.ql.exec.vector.mapjoin.optimized.VectorMapJoinOptimizedHashMultiSet.(VectorMapJoinOptimizedHashMultiSet.java:101)

at org.apache.hadoop.hive.ql.exec.vector.mapjoin.optimized.VectorMapJoinOptimizedStringHashMultiSet.(VectorMapJoinOptimizedStringHashMultiSet.java:61)

at org.apache.hadoop.hive.ql.exec.vector.mapjoin.optimized.VectorMapJoinOptimizedCreateHashTable.createHashTable(VectorMapJoinOptimizedCreateHashTable.java:85)

at org.apache.hadoop.hive.ql.exec.vector.mapjoin.VectorMapJoinCommonOperator.setUpHashTable(VectorMapJoinCommonOperator.java:483)

at org.apache.hadoop.hive.ql.exec.vector.mapjoin.VectorMapJoinCommonOperator.completeInitializationOp(VectorMapJoinCommonOperator.java:461)

at org.apache.hadoop.hive.ql.exec.Operator.completeInitialization(Operator.java:470)

at org.apache.hadoop.hive.ql.exec.Operator.initialize(Operator.java:400)

at org.apache.hadoop.hive.ql.exec.Operator.initialize(Operator.java:573)

at org.apache.hadoop.hive.ql.exec.Operator.initializeChildren(Operator.java:525)

at org.apache.hadoop.hive.ql.exec.Operator.initialize(Operator.java:386)

at org.apache.hadoop.hive.ql.exec.spark.SparkMapRecordHandler.init(SparkMapRecordHandler.java:109)

... 18 more

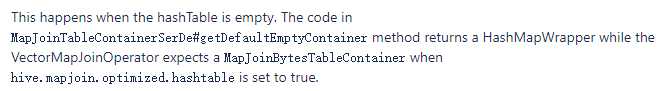

当hashTable为空时,会发生这种情况。

官方说法:

所以将hashtable关闭即可:

set hive.mapjoin.optimized.hashtable=false;

问题解决。

Hve on Spark left join的hashTable问题

标签:mapjoin $2 mon cal safe apache final ado tis

原文地址:https://www.cnblogs.com/daemonyue/p/13326516.html