
Operating system: Win10

Python version: 3.6

IDE: PyCharm

TensorFlow version: 1.*

MNIST dataset: MNIST dataset download link

The MNIST dataset comes from the US National Institute of Standards and Technology (NIST). The training set consists of digits handwritten by 250 different people: 50% were high school students and 50% were employees of the Census Bureau. The test set contains handwritten digits in the same proportions.

The images are stored as raw bytes, so we need to read them into NumPy arrays before we can train and test the algorithm.
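As a minimal sketch of what reading the bytes into a NumPy array involves, the functions below parse the IDX format used by the raw (decompressed) MNIST files: a header of big-endian 32-bit integers (magic number 2051 for images, 2049 for labels, then the counts and sizes) followed by one unsigned byte per pixel or label. The function names are illustrative; in practice `input_data.read_data_sets` does this step, plus gzip decompression, for you. The last step flattens each image to 784 values and scales them to [0, 1], the float form the `[None, 784]` input placeholder expects.

```python
import struct

import numpy as np


def parse_idx_images(data):
    """Parse IDX3 image bytes: magic 2051, count, rows, cols, then count*rows*cols uint8 pixels."""
    magic, count, rows, cols = struct.unpack(">IIII", data[:16])
    if magic != 2051:
        raise ValueError("not an IDX image file, magic=%d" % magic)
    pixels = np.frombuffer(data, dtype=np.uint8, count=count * rows * cols, offset=16)
    return pixels.reshape(count, rows, cols)


def parse_idx_labels(data):
    """Parse IDX1 label bytes: magic 2049, count, then one uint8 label per image."""
    magic, count = struct.unpack(">II", data[:8])
    if magic != 2049:
        raise ValueError("not an IDX label file, magic=%d" % magic)
    return np.frombuffer(data, dtype=np.uint8, count=count, offset=8)


# Tiny synthetic example: two 2x3 "images" with pixel values 0..11, and their labels
fake_images = struct.pack(">IIII", 2051, 2, 2, 3) + bytes(range(12))
fake_labels = struct.pack(">II", 2049, 2) + bytes([7, 3])

images = parse_idx_images(fake_images)
labels = parse_idx_labels(fake_labels)

# Flatten each image to one row and scale pixels from 0..255 to 0..1
flat = images.reshape(len(images), -1).astype(np.float32) / 255.0

print(images.shape, labels.tolist())  # (2, 2, 3) [7, 3]
```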

Reading the MNIST dataset

from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("mnist_data", one_hot=True)
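With `one_hot=True`, `read_data_sets` returns each label as a length-10 vector holding a 1 at the index of the digit, rather than as a plain integer. This is the form the `[None, 10]` label placeholder and `softmax_cross_entropy_with_logits` expect, and taking `argmax` of such a vector recovers the digit, which is how the accuracy is computed. A small NumPy sketch of the encoding; the helper name `to_one_hot` is illustrative:

```python
import numpy as np


def to_one_hot(labels, num_classes=10):
    """Turn integer labels of shape [n] into one-hot rows of shape [n, num_classes]."""
    labels = np.asarray(labels)
    one_hot = np.zeros((labels.size, num_classes), dtype=np.float32)
    # Set a single 1.0 per row, in the column given by that row's label
    one_hot[np.arange(labels.size), labels] = 1.0
    return one_hot


y = to_one_hot([3, 0, 9])
print(y[0].tolist())                  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(np.argmax(y, axis=1).tolist())  # [3, 0, 9]
```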

def getMnistModel(savemodel,is_train):

"""

:param savemodel: 模型保存路径

:param is_train: true为训练,false为测试模型

:return:None

"""

mnist = input_data.read_data_sets("mnist_data", one_hot=True)

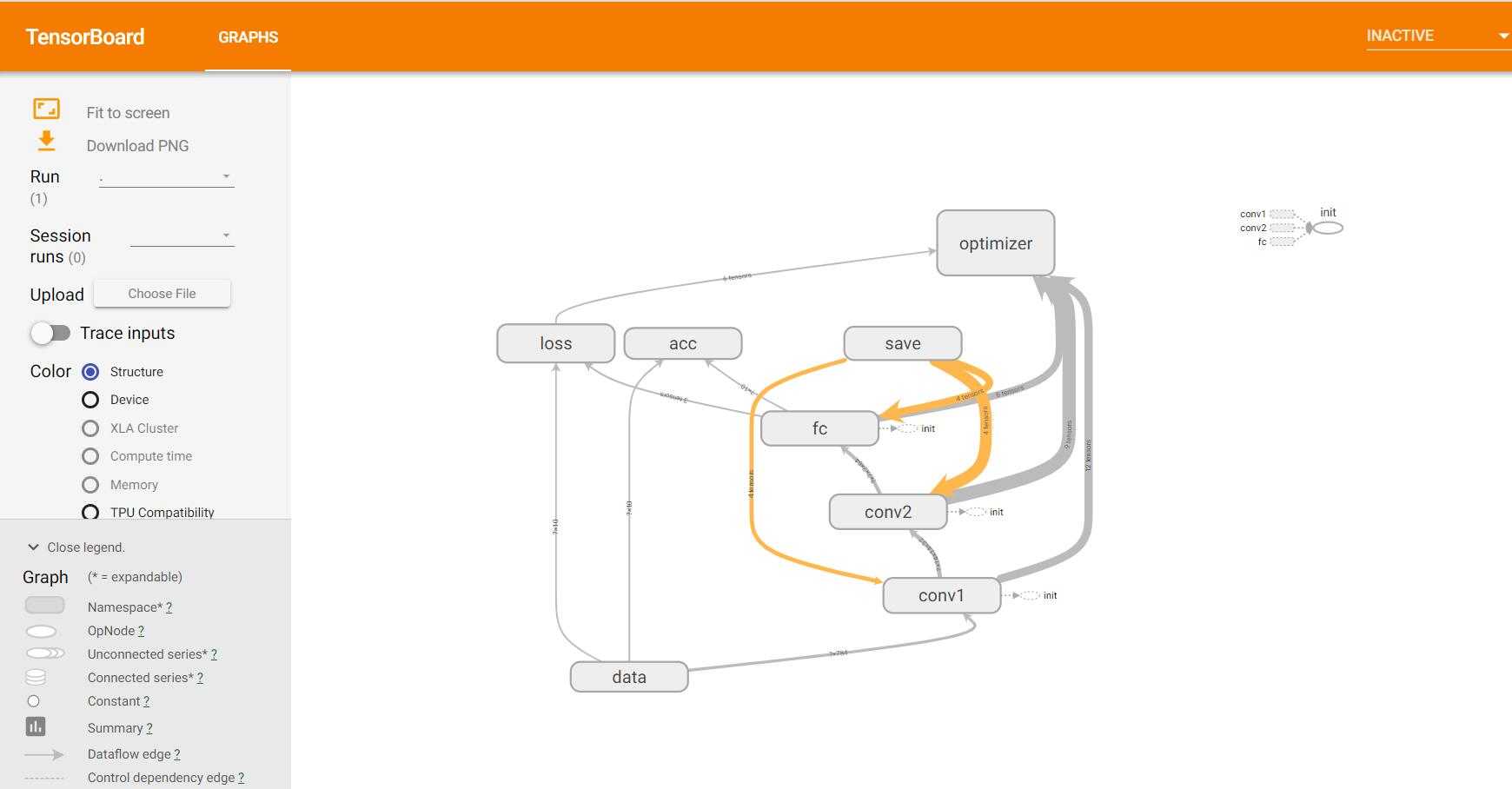

with tf.variable_scope("data"):

x = tf.placeholder(tf.float32,shape=[None,784]) # 784=28*28*1 宽长为28,单通道图片

y_true = tf.placeholder(tf.int32,shape=[None,10]) # 10个类别

with tf.variable_scope("conv1"):

w_conv1 = tf.Variable(tf.random_normal([10,10,1,32])) # 10*10的卷积核 1个通道的输入图像 32个不同的卷积核,得到32个特征图

b_conv1 = tf.Variable(tf.constant(0.0,shape=[32]))

x_reshape = tf.reshape(x,[-1,28,28,1]) # n张 28*28 的单通道图片

conv1 = tf.nn.relu(tf.nn.conv2d(x_reshape,w_conv1,strides=[1,1,1,1],padding="SAME")+b_conv1) #[1, 1, 1, 1] 中间2个1,卷积每次滑动的步长 padding=‘SAME‘ 边缘自动补充

pool1 = tf.nn.max_pool(conv1,ksize=[1,2,2,1],strides=[1,2,2,1],padding="SAME") # 池化窗口为[1,2,2,1] 中间2个2,池化窗口每次滑动的步长 padding="SAME" 考虑边界,如果不够用 用0填充

with tf.variable_scope("conv2"):

w_conv2 = tf.Variable(tf.random_normal([10,10,32,64]))

b_conv2 = tf.Variable(tf.constant(0.0,shape=[64]))

conv2 = tf.nn.relu(tf.nn.conv2d(pool1,w_conv2,strides=[1,1,1,1],padding="SAME")+b_conv2)

pool2 = tf.nn.max_pool(conv2,ksize=[1,2,2,1],strides=[1,2,2,1],padding="SAME")

with tf.variable_scope("fc"):

w_fc = tf.Variable(tf.random_normal([7*7*64,10])) # 经过两次卷积和池化 28 * 28/(2+2) = 7 * 7

b_fc = tf.Variable(tf.constant(0.0,shape=[10]))

xfc_reshape = tf.reshape(pool2,[-1,7*7*64])

y_predict = tf.matmul(xfc_reshape,w_fc)+b_fc

with tf.variable_scope("loss"):

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_true,logits=y_predict))

with tf.variable_scope("optimizer"):

train_op = tf.train.GradientDescentOptimizer(0.001).minimize(loss)

with tf.variable_scope("acc"):

equal_list = tf.equal(tf.arg_max(y_true,1),tf.arg_max(y_predict,1))

accuracy = tf.reduce_mean(tf.cast(equal_list,tf.float32))

# tensorboard

# tf.summary.histogram用来显示直方图信息

# tf.summary.scalar用来显示标量信息

# Summary:所有需要在TensorBoard上展示的统计结果

tf.summary.histogram("weight",w_fc)

tf.summary.histogram("bias",b_fc)

tf.summary.scalar("loss",loss)

tf.summary.scalar("acc",accuracy)

merged = tf.summary.merge_all()

init = tf.global_variables_initializer()

saver = tf.train.Saver()

with tf.Session() as sess:

sess.run(init)

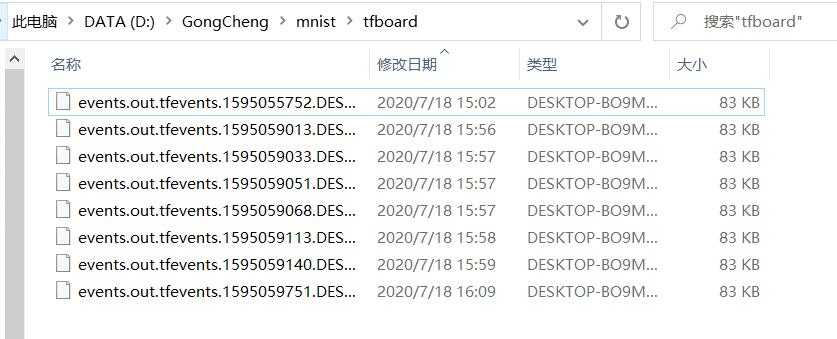

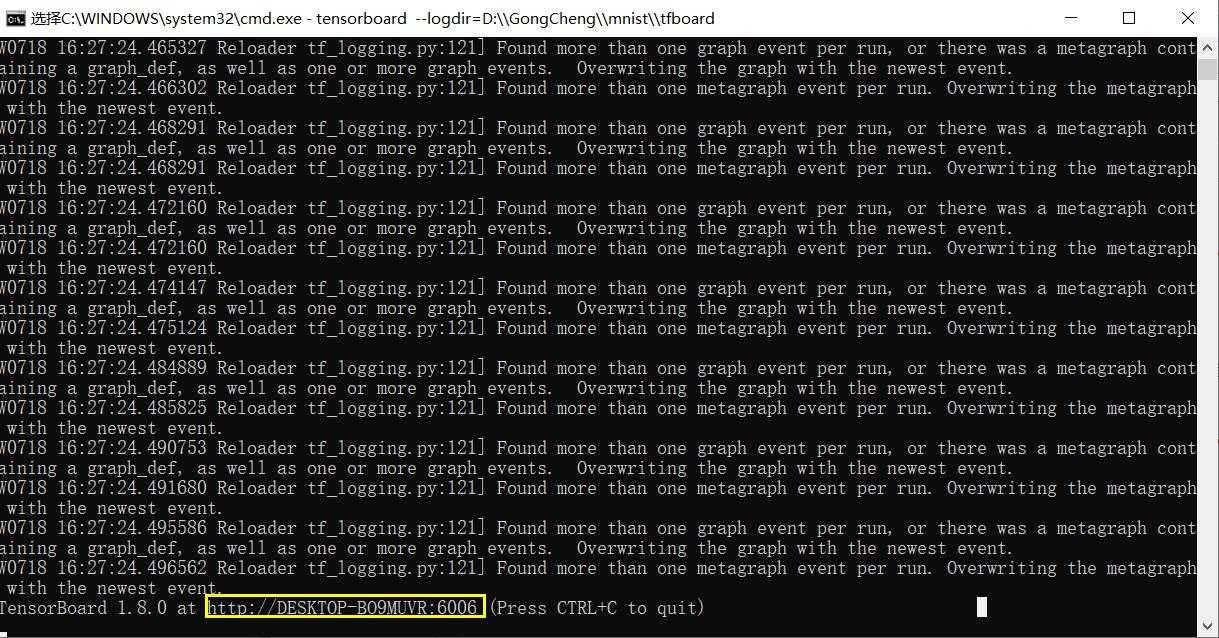

filewriter = tf.summary.FileWriter("tfboard",graph=sess.graph)

if is_train:

for i in range(500):

x_train,y_train = mnist.train.next_batch(100)

sess.run(train_op,feed_dict={x:x_train,y_true:y_train})

summary = sess.run(merged,feed_dict={x:x_train,y_true:y_train})

filewriter.add_summary(summary,i)

print("第%d训练,准确率为%f"%(i+1,sess.run(accuracy,feed_dict={x:x_train,y_true:y_train})))

saver.save(sess,savemodel)

else:

count = 0.0

epochs = 100

saver.restore(sess, savemodel)

for i in range(epochs):

x_test, y_test = mnist.train.next_batch(1)

print("第%d张图片,真实值为:%d预测值为:%d" % (i + 1,

tf.argmax(sess.run(y_true, feed_dict={x: x_test, y_true: y_test}),

1).eval(),

tf.argmax(

sess.run(y_predict, feed_dict={x: x_test, y_true: y_test}),

1).eval()

))

if (tf.argmax(sess.run(y_true, feed_dict={x: x_test, y_true: y_test}), 1).eval() == tf.argmax(

sess.run(y_predict, feed_dict={x: x_test, y_true: y_test}), 1).eval()):

count = count + 1

print("正确率为 %.2f " % float(count * 100 / epochs) + "%")

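TensorBoard is TensorFlow's visualization tool. It helps make sense of the complex, hard-to-follow computations involved in training large neural networks, and it can display the charts recorded during training (here the loss, the accuracy, and histograms of the fully connected layer's weights and biases), the network structure, and more. The code below writes its summaries to the `tfboard` directory; after training, run `tensorboard --logdir=tfboard` and open the address it prints (http://localhost:6006 by default) in a browser.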

import os

import numpy as np
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data


def getMnistModel(savemodel, is_train):
    """
    :param savemodel: path the model is saved to / restored from
    :param is_train: True to train the model, False to test it
    :return: None
    """
    mnist = input_data.read_data_sets("mnist_data", one_hot=True)

    with tf.variable_scope("data"):
        x = tf.placeholder(tf.float32, shape=[None, 784])  # 784 = 28*28*1: 28x28 single-channel images
        y_true = tf.placeholder(tf.float32, shape=[None, 10])  # one-hot labels, 10 classes

    with tf.variable_scope("conv1"):
        # 10x10 kernels over a 1-channel input; 32 different kernels give 32 feature maps
        w_conv1 = tf.Variable(tf.random_normal([10, 10, 1, 32]))
        b_conv1 = tf.Variable(tf.constant(0.0, shape=[32]))
        x_reshape = tf.reshape(x, [-1, 28, 28, 1])  # n single-channel 28x28 images
        # strides=[1, 1, 1, 1]: the middle two values are the step along height and width;
        # padding="SAME" pads the borders so the output stays 28x28
        conv1 = tf.nn.relu(tf.nn.conv2d(x_reshape, w_conv1, strides=[1, 1, 1, 1], padding="SAME") + b_conv1)
        # 2x2 pooling window (ksize) moved 2 pixels at a time (middle two values of strides);
        # padding="SAME" also covers a partial window at the border -> [n, 14, 14, 32]
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding="SAME")

    with tf.variable_scope("conv2"):
        w_conv2 = tf.Variable(tf.random_normal([10, 10, 32, 64]))
        b_conv2 = tf.Variable(tf.constant(0.0, shape=[64]))
        conv2 = tf.nn.relu(tf.nn.conv2d(pool1, w_conv2, strides=[1, 1, 1, 1], padding="SAME") + b_conv2)
        pool2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding="SAME")  # -> [n, 7, 7, 64]

    with tf.variable_scope("fc"):
        # two 2x2 poolings with stride 2: 28 / 2 / 2 = 7, so each image yields 7*7*64 features
        w_fc = tf.Variable(tf.random_normal([7 * 7 * 64, 10]))
        b_fc = tf.Variable(tf.constant(0.0, shape=[10]))
        xfc_reshape = tf.reshape(pool2, [-1, 7 * 7 * 64])
        y_predict = tf.matmul(xfc_reshape, w_fc) + b_fc  # logits; softmax is applied inside the loss

    with tf.variable_scope("loss"):
        loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_true, logits=y_predict))

    with tf.variable_scope("optimizer"):
        train_op = tf.train.GradientDescentOptimizer(0.001).minimize(loss)

    with tf.variable_scope("acc"):
        equal_list = tf.equal(tf.argmax(y_true, 1), tf.argmax(y_predict, 1))
        accuracy = tf.reduce_mean(tf.cast(equal_list, tf.float32))

    # TensorBoard
    # tf.summary.histogram records histogram data
    # tf.summary.scalar records scalar values
    # merge_all collects every summary to be displayed in TensorBoard
    tf.summary.histogram("weight", w_fc)
    tf.summary.histogram("bias", b_fc)
    tf.summary.scalar("loss", loss)
    tf.summary.scalar("acc", accuracy)
    merged = tf.summary.merge_all()

    init = tf.global_variables_initializer()
    saver = tf.train.Saver()

    with tf.Session() as sess:
        sess.run(init)
        filewriter = tf.summary.FileWriter("tfboard", graph=sess.graph)

        if is_train:
            for i in range(500):
                x_train, y_train = mnist.train.next_batch(100)
                feed = {x: x_train, y_true: y_train}
                sess.run(train_op, feed_dict=feed)
                # summaries and accuracy after this step's update, fetched in a single run
                summary, acc = sess.run([merged, accuracy], feed_dict=feed)
                filewriter.add_summary(summary, i)
                print("Step %d, training accuracy: %f" % (i + 1, acc))
            saver.save(sess, savemodel)
        else:
            count = 0
            num_tests = 100
            saver.restore(sess, savemodel)
            for i in range(num_tests):
                # evaluate on the test split, one image at a time
                x_test, y_test = mnist.test.next_batch(1)
                pred = sess.run(y_predict, feed_dict={x: x_test})
                true_label = int(np.argmax(y_test, 1)[0])
                pred_label = int(np.argmax(pred, 1)[0])
                print("Image %d: true label %d, predicted %d" % (i + 1, true_label, pred_label))
                if true_label == pred_label:
                    count += 1
            print("Accuracy: %.2f%%" % (count * 100.0 / num_tests))

        filewriter.close()


if __name__ == '__main__':
    modelPath = "model/mnist_model"
    # tf.train.Saver.save() fails if the parent directory does not exist
    os.makedirs(os.path.dirname(modelPath), exist_ok=True)
    # True trains and saves the model; run again with False to test the saved model
    getMnistModel(modelPath, True)
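The input size of the fully connected layer, `7*7*64`, follows from how TensorFlow sizes outputs with `padding="SAME"`: along each spatial axis a convolution or pooling produces ceil(input / stride) positions, whatever the kernel size. The plain-Python sketch below (no TensorFlow needed) traces the shapes through the layers of `getMnistModel`; the helper `same_out` is an illustrative name, not a TensorFlow API.

```python
import math


def same_out(size, stride):
    """Output length along one spatial axis for TensorFlow "SAME" padding."""
    return math.ceil(size / stride)


# (layer name, stride, output channels) as configured in getMnistModel
layers = [
    ("conv1", 1, 32),
    ("pool1", 2, 32),
    ("conv2", 1, 64),
    ("pool2", 2, 64),
]

size = 28  # input images are 28x28
for name, stride, channels in layers:
    size = same_out(size, stride)
    print("%s: %dx%dx%d" % (name, size, size, channels))

flat_features = size * size * 64
print("flattened:", flat_features)  # flattened: 3136
```

With `padding="VALID"` the rule becomes ceil((input - kernel + 1) / stride) instead, so the 10x10 kernels would shrink the feature maps and the `7*7*64` size would no longer hold.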


Original article: https://www.cnblogs.com/lyhLive/p/13336232.html