
```python
# Method 1: load a small dataset (bundled with scikit-learn, no download needed)
import sklearn.datasets as datasets

iris = datasets.load_iris()

# Extract the sample data
feature = iris['data']    # feature matrix
target = iris['target']   # class labels

feature.shape   # (150, 4)
target.shape    # (150,)
target          # array([0, 0, ..., 2, 2]): 50 samples each of classes 0, 1 and 2

# Split the samples into a training set and a test set (20% held out for testing)
from sklearn.model_selection import train_test_split
x_train, x_test, y_train, y_test = train_test_split(feature, target, test_size=0.2, random_state=2020)

x_train, y_train  # training set
```

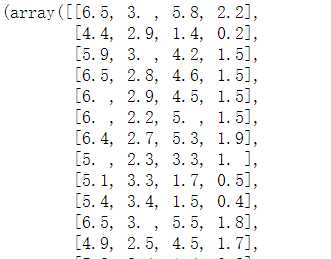

```python
x_test, y_test  # test set
```
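As a quick sanity check on the split above, the sketch below reloads the same data with `load_iris(return_X_y=True)`. It confirms that `test_size=0.2` leaves 120 training and 30 test samples, and that a fixed `random_state` reproduces the same split on every run. It is an illustration and not part of the original post. The `stratify` argument is an optional extra that keeps the equal class ratio in both subsets.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# return_X_y=True returns (data, target) directly instead of the Bunch object
feature, target = load_iris(return_X_y=True)

x_train, x_test, y_train, y_test = train_test_split(
    feature, target, test_size=0.2, random_state=2020
)
# 20% of the 150 samples go to the test set
print(x_train.shape, x_test.shape)  # (120, 4) (30, 4)

# The same random_state yields an identical split every time
x_train2, x_test2, _, _ = train_test_split(
    feature, target, test_size=0.2, random_state=2020
)
print(np.array_equal(x_test, x_test2))  # True

# Optional: stratify=target keeps the 50/50/50 class balance in both subsets
_, _, y_tr_s, y_te_s = train_test_split(
    feature, target, test_size=0.2, random_state=2020, stratify=target
)
print(np.bincount(y_tr_s), np.bincount(y_te_s))  # [40 40 40] [10 10 10]
```

Stratifying matters here because `target` is sorted by class. A plain random split can leave the classes slightly unbalanced in a 30-sample test set.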

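```python
# Method 2: fetch a larger dataset (downloaded from the internet on first use)
import sklearn.datasets as datasets

# data_home: folder to save the dataset in (placeholder path, replace with your own)
# subset='all': return both the training and the test portions
datasets.fetch_20newsgroups(data_home='dataset_save_path', subset='all')
```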
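The `data_home` argument only chooses the folder where the downloaded data is cached. The sketch below uses `sklearn.datasets.get_data_home` to show that behavior; it is a hedged illustration, not from the original post. Given an explicit path, the function expands it, creates the folder if it is missing, and returns it. When no path is given, scikit-learn falls back to `~/scikit_learn_data` or the folder named by the `SCIKIT_LEARN_DATA` environment variable. The folder name `my_sklearn_data` is hypothetical.

```python
import os
import tempfile
from sklearn.datasets import get_data_home

# Hypothetical cache folder under the system temp directory
custom_dir = os.path.join(tempfile.gettempdir(), "my_sklearn_data")

# get_data_home creates the folder if needed and returns its path
custom_home = get_data_home(data_home=custom_dir)
print("cache folder:", custom_home)
print("exists:", os.path.isdir(custom_home))  # exists: True
```

Passing the same folder, e.g. `datasets.fetch_20newsgroups(data_home=custom_home, subset='all')`, downloads the corpus there on the first call, which needs network access. Later calls reuse the cached copy.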


Original article: https://www.cnblogs.com/linranran/p/13347843.html