
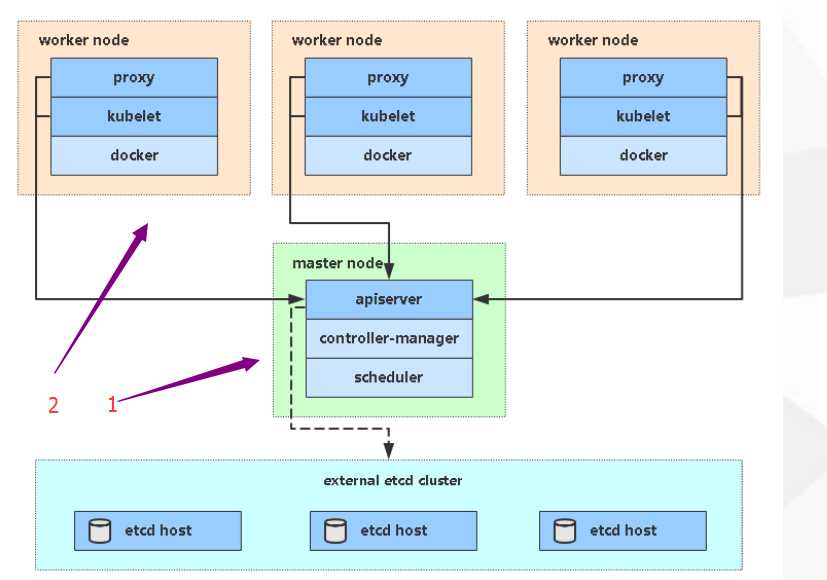

A k8s cluster consists of three parts, as shown in the figure above:

1. master node

2. node (worker) nodes

3. etcd storage

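Deploying the master node

As the figure shows, the master node runs three components: apiserver, controller-manager, and scheduler.

apiserver: the single entry point to the k8s cluster. It communicates over HTTPS, so certificates must be deployed.

Step 1: prepare the k8s certificates: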

vim ca-config.json

    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }

vim ca-csr.json

    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

vim kube-proxy-csr.json

    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

vim server-csr.json (remember to change the IPs in "hosts" to your own)

    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local",
        "192.168.1.46",
        "192.168.1.47",
        "192.168.1.46"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

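If the three tools cfssl, cfssljson, and cfssl-certinfo are already installed, skip this step. Otherwise, download them with the commands below and give each file 755 permissions.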

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
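The curl commands only download the binaries; the 755 permission still has to be applied before they can be run. A minimal sketch, assuming the tools were saved to /usr/local/bin as in the commands above (`make_cfssl_executable` is a hypothetical helper name, not part of cfssl):

```shell
# Hypothetical helper: give the three cfssl tools mode 755 in the given directory.
make_cfssl_executable() {
    bin_dir="${1:-/usr/local/bin}"
    for tool in cfssl cfssljson cfssl-certinfo; do
        if [ -f "$bin_dir/$tool" ]; then
            chmod 755 "$bin_dir/$tool"
            echo "ok: $bin_dir/$tool"
        else
            # Report tools that were not downloaded instead of failing silently.
            echo "missing: $bin_dir/$tool" >&2
        fi
    done
}

# Usage (as root): make_cfssl_executable /usr/local/bin
```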

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

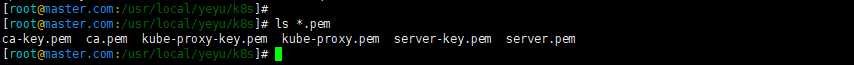

The certificates are now generated and ready for later use.
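Each `cfssljson -bare NAME` call writes NAME.pem and NAME-key.pem, so the three gencert commands should leave six files behind. A small sketch for confirming that before moving on (`check_certs` is a hypothetical helper; the file names follow from the commands above):

```shell
# Hypothetical helper: list any expected certificate or key file that is missing.
check_certs() {
    cert_dir="${1:-.}"
    missing=0
    for name in ca server kube-proxy; do
        for file in "$name.pem" "$name-key.pem"; do
            if [ ! -f "$cert_dir/$file" ]; then
                echo "missing: $cert_dir/$file"
                missing=1
            fi
        done
    done
    # Exit status 0 only when all six files are present.
    return "$missing"
}

# Usage: check_certs . && echo "all certificates present"
```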

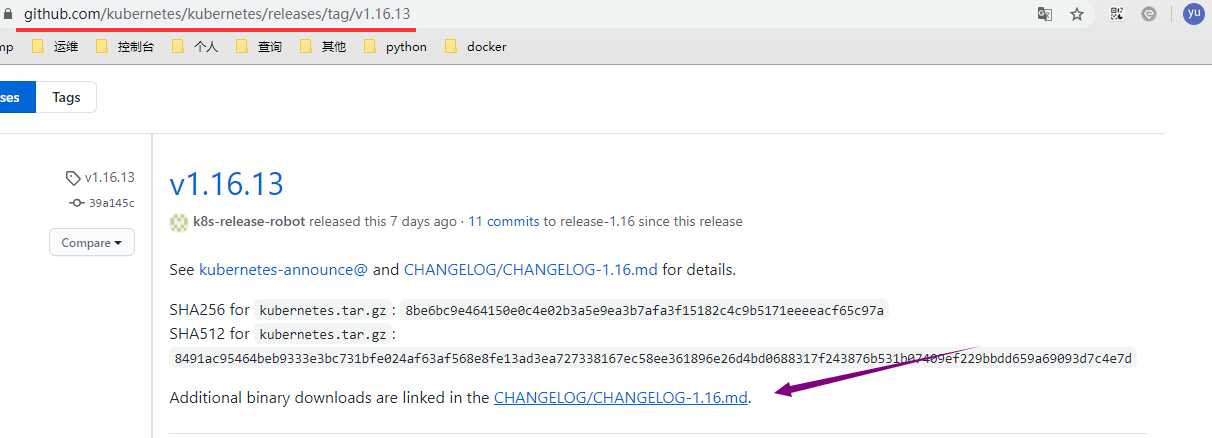

Step 2: download the binaries.

Download from the official site:

wget https://dl.k8s.io/v1.16.13/kubernetes-server-linux-amd64.tar.gz

tar -zxvf kubernetes-server-linux-amd64.tar.gz

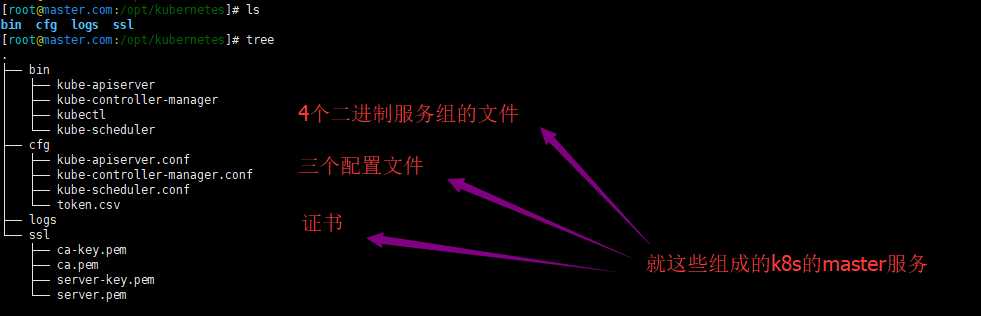

Next, I again lay the service out under /opt:

bin: binaries

cfg: configuration files

logs: logs

ssl: certificates

mkdir -p /opt/kubernetes/{bin,cfg,logs,ssl}

cp ./kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kubectl,kube-scheduler} /opt/kubernetes/bin/

Copy over the certificates generated earlier

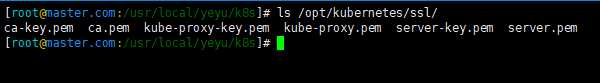

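cp *.pem /opt/kubernetes/ssl/ (the kube-proxy certificate is only used on the nodes, so it does not have to be copied)

Step 3: write the configuration files for the three services

kube-apiserver uses a token, so we also need to generate a token file.

head -c 16 /dev/urandom | od -An -t x | tr -d ' ' # generates a random token

vim /opt/kubernetes/cfg/token.csv

The file should contain only the CSV line below (token, user name, UID, group). To change the token, generate a new one with the command above and replace the first field.

c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"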
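The token generation and the token.csv line can be combined into one step. A minimal sketch, assuming the same user name, UID, and group as the line above (`new_token` and `write_token_csv` are hypothetical helper names):

```shell
# Generate a 32-character hex token from 16 random bytes.
new_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Write a single-line token.csv: token,user,uid,"group".
# $1 = output file, $2 = token
write_token_csv() {
    printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$2" > "$1"
}

token=$(new_token)
echo "generated token: $token"
# Usage: write_token_csv /opt/kubernetes/cfg/token.csv "$token"
```

The same token value is needed again later in bootstrap.kubeconfig on each node, so keep it at hand.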

Now write the configuration files for the three components.

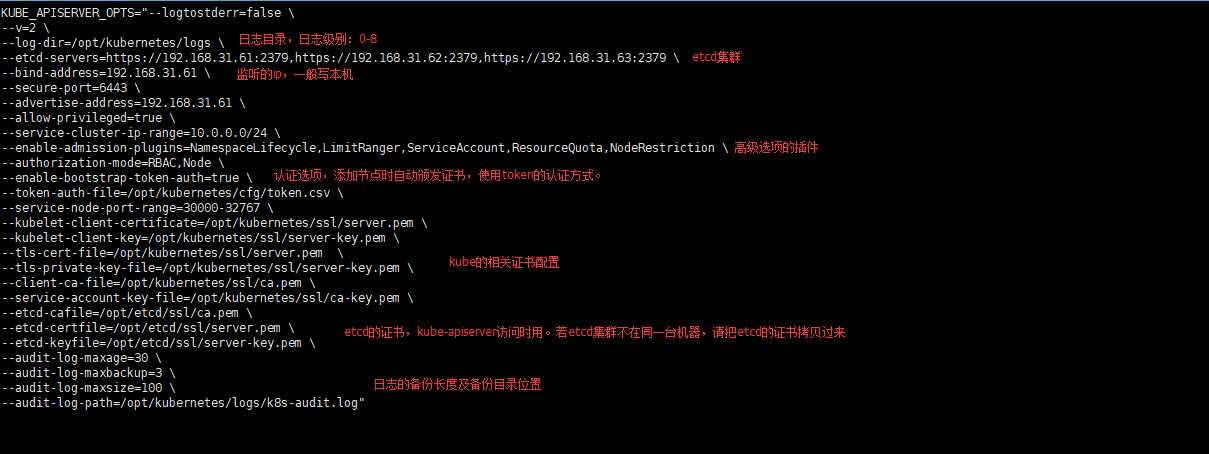

vim /opt/kubernetes/cfg/kube-apiserver.conf (remember to change the etcd cluster IPs and the listen address to your own)

    KUBE_APISERVER_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --etcd-servers=https://192.168.31.61:2379,https://192.168.31.62:2379,https://192.168.31.63:2379 \
    --bind-address=192.168.31.61 \
    --secure-port=6443 \
    --advertise-address=192.168.31.61 \
    --allow-privileged=true \
    --service-cluster-ip-range=10.0.0.0/24 \
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
    --authorization-mode=RBAC,Node \
    --enable-bootstrap-token-auth=true \
    --token-auth-file=/opt/kubernetes/cfg/token.csv \
    --service-node-port-range=30000-32767 \
    --kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \
    --kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --etcd-cafile=/opt/etcd/ssl/ca.pem \
    --etcd-certfile=/opt/etcd/ssl/server.pem \
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem \
    --audit-log-maxage=30 \
    --audit-log-maxbackup=3 \
    --audit-log-maxsize=100 \
    --audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

For more options, see the official documentation.
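The apiserver options reference many certificate, key, and token files, and a wrong path will keep the service from starting. A rough sketch for catching that early, assuming every such path in the conf file ends in .pem or .csv (`check_conf_paths` is a hypothetical helper, not a Kubernetes tool):

```shell
# Hypothetical helper: report .pem/.csv paths referenced in a conf file
# that do not exist on this machine.
check_conf_paths() {
    conf="$1"
    missing=0
    # Pull out every "=/absolute/path.pem" or "=/absolute/path.csv" value.
    for file_path in $(grep -oE '=/[^ ",\\]+\.(pem|csv)' "$conf" | cut -c2- | sort -u); do
        if [ ! -f "$file_path" ]; then
            echo "missing: $file_path"
            missing=1
        fi
    done
    return "$missing"
}

# Usage: check_conf_paths /opt/kubernetes/cfg/kube-apiserver.conf
```

Paths containing spaces are not handled; for the layout used in this article that should not matter.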

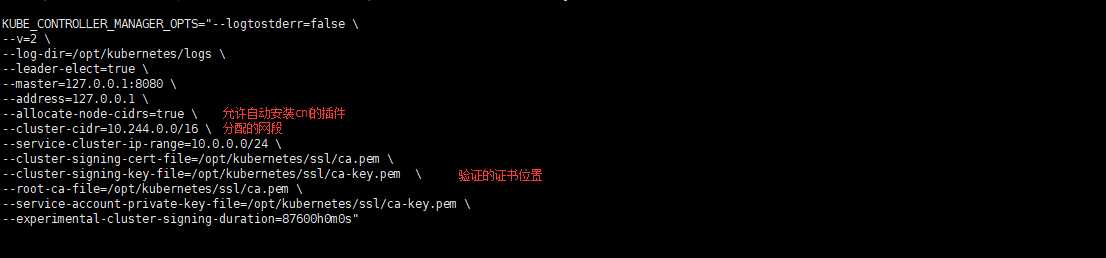

vim /opt/kubernetes/cfg/kube-controller-manager.conf

    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --leader-elect=true \
    --master=127.0.0.1:8080 \
    --address=127.0.0.1 \
    --allocate-node-cidrs=true \
    --cluster-cidr=10.244.0.0/16 \
    --service-cluster-ip-range=10.0.0.0/24 \
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --experimental-cluster-signing-duration=87600h0m0s"

vim /opt/kubernetes/cfg/kube-scheduler.conf

    KUBE_SCHEDULER_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --leader-elect \
    --master=127.0.0.1:8080 \
    --address=127.0.0.1"

The master configuration is now complete; that is really all there is to the master (as shown in the figure).

The three component services can now be started. For convenience, let's also write systemd unit files for them.

Step 4: write the unit files for the components, then start the services

vim /usr/lib/systemd/system/kube-apiserver.service

    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
    ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target

vim /usr/lib/systemd/system/kube-controller-manager.service

    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
    ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target

vim /usr/lib/systemd/system/kube-scheduler.service

    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
    ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target

Start the services and enable them at boot

systemctl daemon-reload

systemctl start kube-apiserver kube-controller-manager kube-scheduler

systemctl enable kube-apiserver kube-controller-manager kube-scheduler

Final step: nodes join the cluster by using the token to have their certificates issued automatically, so the kubelet-bootstrap user must be authorized:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

Deploying the node

Preparation before deploying: as on the master, disable the firewall and swap, and configure the hosts file.
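The article does not list the commands for this preparation. The sketch below shows one common way to do it on CentOS; the firewalld and swapoff commands in the comments are assumptions about the environment, and `comment_out_swap` is a hypothetical helper that keeps swap disabled after a reboot by commenting out swap entries in fstab:

```shell
# Typical commands, run as root (assumed CentOS 7 with firewalld):
#   systemctl stop firewalld && systemctl disable firewalld
#   swapoff -a

# Hypothetical helper: comment out every active swap entry in an fstab file.
# A backup copy is kept next to it with a .bak suffix.
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root): comment_out_swap /etc/fstab
```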

A node also consists of three components: docker, kube-proxy, and kubelet.

Install docker-ce.

The details of installing docker are omitted here; see the documentation: https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.3e221b11P6sarI

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo yum makecache fast

sudo yum -y install docker-ce

sudo systemctl start docker

kubelet must be started before kube-proxy.

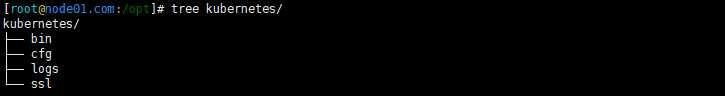

Plan the directories

mkdir /opt/kubernetes/{bin,cfg,logs,ssl} -p

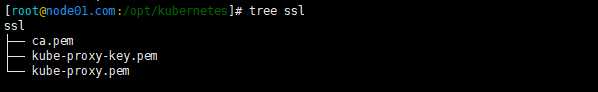

Step 1: copy the certificates (ca.pem, kube-proxy-key.pem, kube-proxy.pem) into the ssl directory.

The ca.pem used here must be the one that pairs with ca-key.pem on the master.

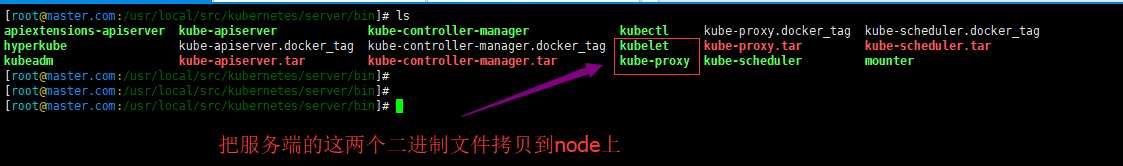

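Step 2: copy the binaries into the bin directory

Download them from the official site. The server package already contains kubelet and kube-proxy, so they can be copied straight from it.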
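No command is given for this step. A minimal sketch, assuming the server tarball was unpacked on the node the same way as on the master so that the binaries sit in ./kubernetes/server/bin (`install_node_bins` is a hypothetical helper name):

```shell
# Hypothetical helper: copy kubelet and kube-proxy into the node's bin directory.
# $1 = directory holding the unpacked binaries, $2 = target directory
install_node_bins() {
    src_dir="$1"
    dst_dir="${2:-/opt/kubernetes/bin}"
    for bin in kubelet kube-proxy; do
        cp "$src_dir/$bin" "$dst_dir/" || return 1
        chmod 755 "$dst_dir/$bin"
    done
}

# Usage: install_node_bins ./kubernetes/server/bin /opt/kubernetes/bin
```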

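Step 3: write the kubelet and kube-proxy configuration files.

kubelet and kube-proxy are each configured through three files, so the two services have six configuration files in total.

The three kubelet configuration files:

1. bootstrap.kubeconfig: the kubeconfig used to request a certificate automatically

2. kubelet.conf: the main configuration file (startup options)

3. kubelet-config.yml: the kubelet's own configuration (KubeletConfiguration)

The three kube-proxy configuration files:

1. conf file: basic startup options

2. kubeconfig: connection settings for the apiserver

3. yml file: the main kube-proxy configuration. This file was split out of the conf file used by earlier versions.

We start with the three kubelet files.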

vim /opt/kubernetes/cfg/bootstrap.kubeconfig

    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority: /opt/kubernetes/ssl/ca.pem
        server: https://192.168.31.61:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kubelet-bootstrap
      name: default
    current-context: default
    kind: Config
    preferences: {}
    users:
    - name: kubelet-bootstrap
      user:
        token: c47ffb939f5ca36231d9e3121a252940

Tip: remember to change the IP, and make sure the token matches the one in token.csv on the master.
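Because the token here has to match the first field of token.csv on the master, it may be less error-prone to generate the file than to edit it by hand. A sketch under that assumption (`token_from_csv` and `write_bootstrap_kubeconfig` are hypothetical helpers; port 6443 and the CA path are the values used in this article):

```shell
# Read the token (first CSV field) from a token.csv file.
token_from_csv() {
    head -n 1 "$1" | cut -d, -f1
}

# Write bootstrap.kubeconfig for a given apiserver IP and token.
# $1 = output file, $2 = apiserver IP, $3 = token
write_bootstrap_kubeconfig() {
    out="$1"
    server="$2"
    token="$3"
    cat > "$out" <<EOF
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://${server}:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: ${token}
EOF
}

# Usage, with token.csv copied over from the master:
#   write_bootstrap_kubeconfig /opt/kubernetes/cfg/bootstrap.kubeconfig 192.168.31.61 "$(token_from_csv token.csv)"
```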

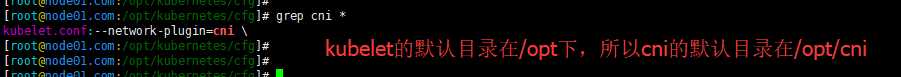

vim /opt/kubernetes/cfg/kubelet.conf (the node name set by --hostname-override must be different on every node)

    KUBELET_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --hostname-override=k8s-node1 \
    --network-plugin=cni \
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
    --config=/opt/kubernetes/cfg/kubelet-config.yml \
    --cert-dir=/opt/kubernetes/ssl \
    --pod-infra-container-image=lizhenliang/pause-amd64:3.0"

Tip: for the pause-amd64 image, see this Alibaba Cloud article: https://developer.aliyun.com/article/680942?spm=a2c6h.14164896.0.0.72ac5d8d3HQauK

vim /opt/kubernetes/cfg/kubelet-config.yml

    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS:
    - 10.0.0.2
    clusterDomain: cluster.local
    failSwapOn: false
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 2m0s
        enabled: true
      x509:
        clientCAFile: /opt/kubernetes/ssl/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    maxOpenFiles: 1000000
    maxPods: 110

Write the kubelet.service unit file and start the service

vim /usr/lib/systemd/system/kubelet.service

    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
    ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target

The kubelet service is now deployed.

Write the three kube-proxy configuration files

vim /opt/kubernetes/cfg/kube-proxy.conf

    KUBE_PROXY_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --config=/opt/kubernetes/cfg/kube-proxy-config.yml"

vim /opt/kubernetes/cfg/kube-proxy.kubeconfig (remember to change the kube-apiserver IP to your own)

    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority: /opt/kubernetes/ssl/ca.pem
        server: https://192.168.1.47:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kube-proxy
      name: default
    current-context: default
    kind: Config
    preferences: {}
    users:
    - name: kube-proxy
      user:
        client-certificate: /opt/kubernetes/ssl/kube-proxy.pem
        client-key: /opt/kubernetes/ssl/kube-proxy-key.pem

vim /opt/kubernetes/cfg/kube-proxy-config.yml (hostnameOverride must differ from every other node's name, so change it on each node)

    kind: KubeProxyConfiguration
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    address: 0.0.0.0
    metricsBindAddress: 0.0.0.0:10249
    clientConnection:
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
    hostnameOverride: k8s-node1
    clusterCIDR: 10.0.0.0/24
    mode: ipvs
    ipvs:
      scheduler: "rr"
    iptables:
      masqueradeAll: true
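Both kubelet.conf and kube-proxy-config.yml carry a node name (k8s-node1 in this article), and both have to be changed on every additional node. A small sketch for checking that the two agree, assuming they are meant to match as they do here (`check_node_name` is a hypothetical helper):

```shell
# Hypothetical helper: compare --hostname-override in kubelet.conf with
# hostnameOverride in kube-proxy-config.yml.
check_node_name() {
    kubelet_conf="$1"
    proxy_yml="$2"
    kubelet_name=$(grep -oE 'hostname-override=[^ "\\]+' "$kubelet_conf" | head -n 1 | cut -d= -f2)
    proxy_name=$(awk '$1 == "hostnameOverride:" { print $2; exit }' "$proxy_yml")
    if [ -n "$kubelet_name" ] && [ "$kubelet_name" = "$proxy_name" ]; then
        echo "ok: $kubelet_name"
    else
        echo "mismatch: kubelet='$kubelet_name' kube-proxy='$proxy_name'"
        return 1
    fi
}

# Usage:
#   check_node_name /opt/kubernetes/cfg/kubelet.conf /opt/kubernetes/cfg/kube-proxy-config.yml
```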

Write the kube-proxy.service unit file

vim /usr/lib/systemd/system/kube-proxy.service

    [Unit]
    Description=Kubernetes Proxy
    After=network.target
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
    ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target

kubelet and kube-proxy are now fully configured.

Start the docker, kubelet, and kube-proxy services (docker was already started during installation).

systemctl daemon-reload

systemctl start kubelet && systemctl enable kubelet

systemctl start kube-proxy && systemctl enable kube-proxy

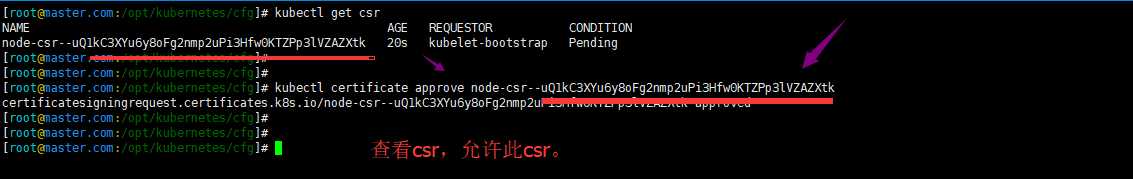

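One last, critical step: approve the node's certificate signing request on the master. Use the CSR name printed by the first command in the second one.

kubectl get csr

kubectl certificate approve node-csr-MYUxbmf_nmPQjmH3LkbZRL2uTO-_FCzDQUoUfTy7YjI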
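With several nodes, copying each CSR name by hand gets tedious. The sketch below filters the pending requests out of the `kubectl get csr` table; it assumes the default table output with a header row and the condition in the last column (`pending_csr_names` is a hypothetical helper):

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print
# the names of requests whose last column is exactly "Pending".
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master (requires kubectl; -r is a GNU xargs option):
#   kubectl get csr | pending_csr_names | xargs -r -n 1 kubectl certificate approve
```

Approving everything that is pending is only reasonable when you know which nodes are joining; otherwise review the list first.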

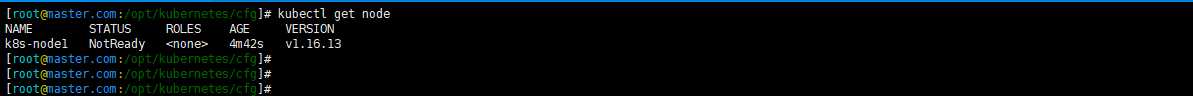

Verify that the node has successfully joined the master:

The node has been added successfully.
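The check itself is `kubectl get node` on the master. A sketch for picking out nodes that are not yet Ready, assuming the default table output with the status in the second column (`not_ready_nodes` is a hypothetical helper):

```shell
# Hypothetical helper: read `kubectl get node` output on stdin and print
# the names of nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# Usage on the master (requires kubectl):
#   kubectl get node | not_ready_nodes
```

Since kubelet is started with --network-plugin=cni, a node that has joined may still report NotReady until a CNI plugin is installed.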

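[master and node deployment complete]

With the cluster deployed, we still need a plugin to provide the cluster network.

Installing the CNI network: flannel

Step 1: on every node, download the CNI plugins and extract them into the bin directory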

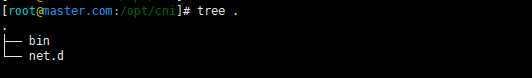

Create the CNI directories: mkdir /opt/cni/{bin,net.d} -p

Tip: kubelet calls the CNI plugins, so every node must have them installed.

CNI download page: https://github.com/containernetworking/plugins/releases

wget https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

tar -zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin/

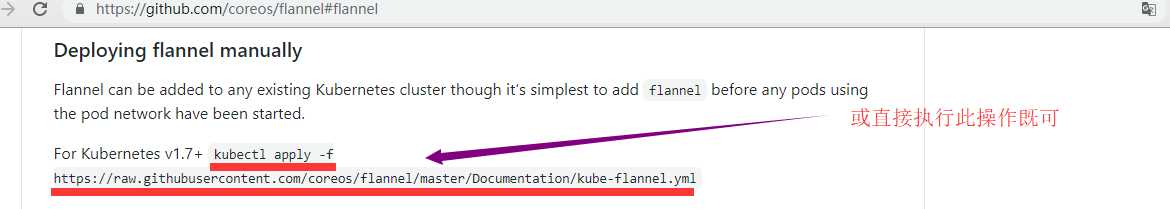

Step 2: install flannel from the master. Project page: https://github.com/coreos/flannel#flannel

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

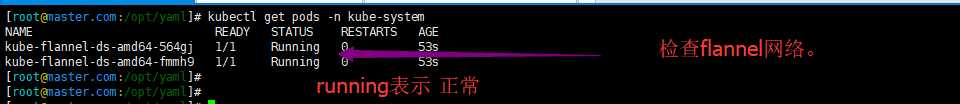

Step 3: check the result. flannel itself runs as pods, installed in the kube-system namespace by default.

[Single-master k8s cluster deployment complete]


Original article: https://www.cnblogs.com/yeyu1314/p/13360286.html