Tags: limits, software, lock, docke, sha, K8S cluster, source, harbor, spec

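CNI network plugin: if the cluster is small enough, you don't actually need a network plugin at all. Adjusting a few iptables rules and adding a few routes on each host is enough.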
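A minimal sketch of the "just add routes" approach, assuming each node's Docker bridge owns one pod subnet and every node can reach the others directly at layer 2. The node IPs (10.4.7.21, 10.4.7.22), the pod subnets (172.7.21.0/24, 172.7.22.0/24) and the `gen_routes` function are hypothetical placeholders. The function only prints the commands (a dry run), so you can review them before running them as root on each node.

```shell
#!/usr/bin/env bash
# Hypothetical topology: one "node_ip pod_subnet" pair per line.
# Replace with the real host IPs and Docker bridge subnets.
NODES="10.4.7.21 172.7.21.0/24
10.4.7.22 172.7.22.0/24"

# gen_routes SELF_IP
# Prints, for the node SELF_IP, one static route per *other* node:
# traffic for that node's pod subnet is sent via that node's host IP.
gen_routes() {
  local self="$1" ip subnet
  while read -r ip subnet; do
    [ -z "$ip" ] && continue          # skip blank lines
    [ "$ip" = "$self" ] && continue   # our own subnet is local, no route needed
    echo "ip route add $subnet via $ip"
  done <<< "$NODES"
}

# Example: the commands to run on 10.4.7.21
gen_routes 10.4.7.21
```

Routing alone is not quite enough: the hosts must have IP forwarding enabled (`sysctl net.ipv4.ip_forward=1`), and since Docker 1.13 sets the default iptables FORWARD policy to DROP, the FORWARD chain must accept the pod traffic. That is where the iptables changes come in.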

Use the right technology, at the right time, to solve the right problem.

CNI network plugin

Service discovery in K8S

Service exposure in K8S

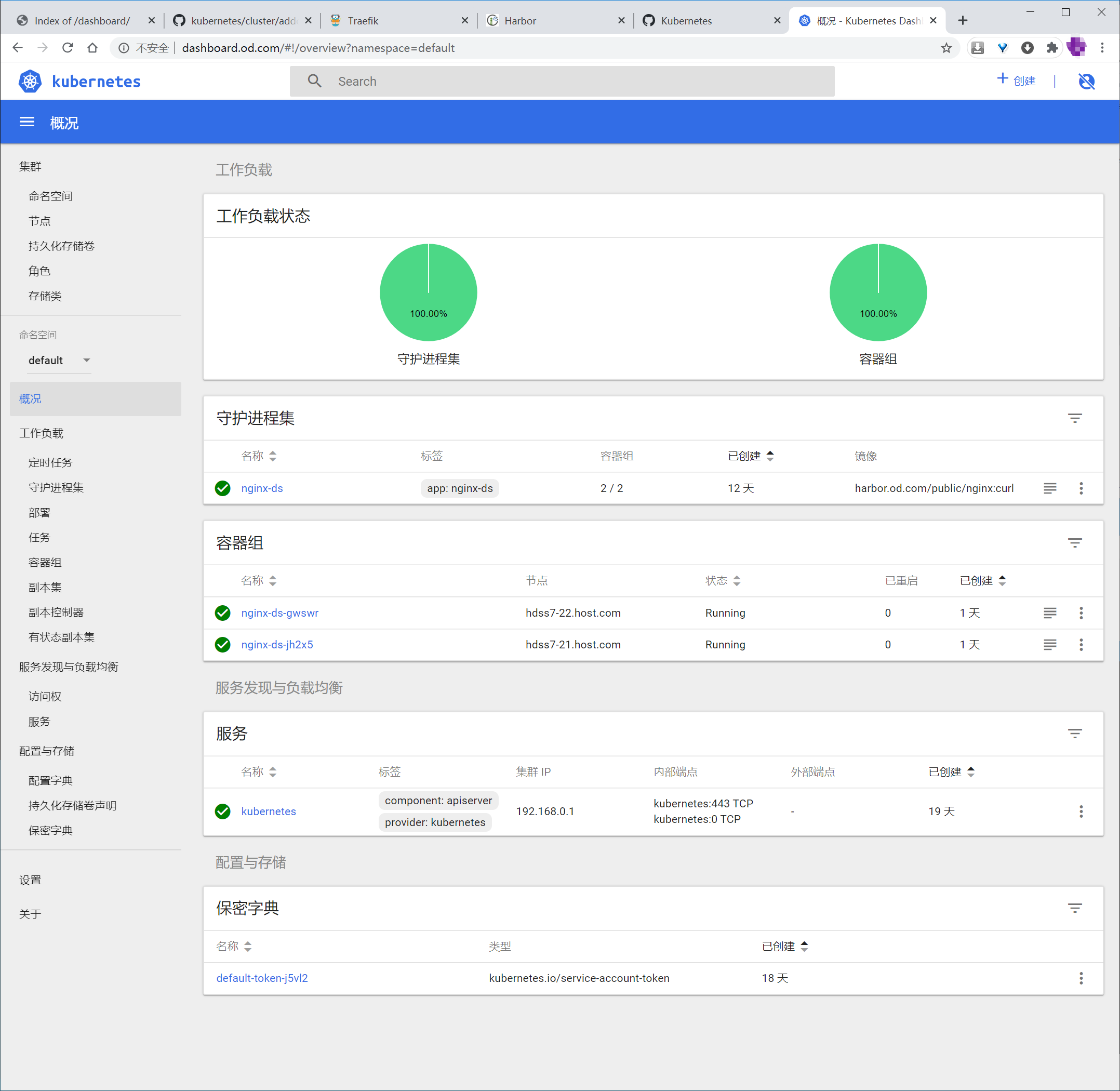

GUI management add-on (Dashboard)

On the ops host hdss7-200:

[root@hdss7-200 k8s-yaml]# docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3

[root@hdss7-200 k8s-yaml]# docker images | grep dashboard

k8scn/kubernetes-dashboard-amd64 v1.8.3 fcac9aa03fd6 2 years ago 102MB

[root@hdss7-200 k8s-yaml]# docker tag fcac9aa03fd6 harbor.od.com/public/dashboard:v1.8.3

[root@hdss7-200 k8s-yaml]# docker push harbor.od.com/public/dashboard:v1.8.3
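The pull, tag and push sequence above can be wrapped in a small helper. A minimal sketch, assuming bash and that you are already logged in to harbor.od.com; `run`, `mirror_image` and the `DRY_RUN` variable are hypothetical names introduced here. With `DRY_RUN` set it only prints the docker commands instead of executing them.

```shell
#!/usr/bin/env bash
# run: execute a command, or just print it when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# mirror_image SRC DST
# Pulls SRC from its public registry, retags it as DST and pushes DST
# to the private registry (e.g. Harbor). Stops at the first failure.
mirror_image() {
  local src="$1" dst="$2"
  run docker pull "$src" &&
  run docker tag "$src" "$dst" &&
  run docker push "$dst"
}

# Preview the commands for the dashboard image used in this walkthrough.
DRY_RUN=1
mirror_image k8scn/kubernetes-dashboard-amd64:v1.8.3 \
  harbor.od.com/public/dashboard:v1.8.3
```

Tagging by image name instead of image ID gives the same result and avoids copying the ID from `docker images`. The target tag must match the `image:` field in dp.yaml.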

rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard-admin
    namespace: kube-system
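To confirm the binding works, you can ask the API server what the service account is allowed to do. RBAC identifies a service account as the user `system:serviceaccount:<namespace>:<name>`, and `kubectl auth can-i --as` accepts that name. A minimal sketch; `sa_user` is a hypothetical helper, and the `kubectl` call assumes an admin kubeconfig (impersonation needs that permission) and only runs when the binary is installed.

```shell
#!/usr/bin/env bash
# sa_user NAMESPACE NAME
# Builds the RBAC user name Kubernetes assigns to a service account.
sa_user() {
  echo "system:serviceaccount:$1:$2"
}

user="$(sa_user kube-system kubernetes-dashboard-admin)"
echo "$user"

# With cluster-admin bound, this should answer "yes" for any verb on any resource.
if command -v kubectl >/dev/null 2>&1; then
  kubectl auth can-i '*' '*' --as="$user" --request-timeout=5s
fi
```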

dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.od.com/public/dashboard:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

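- --auto-generate-certificates
# Have the dashboard generate its own self-signed TLS certificate at startup

livenessProbe:
# Liveness probe: while the container runs, the kubelet probes it periodically and restarts it after repeated failures
# Readiness probe (readinessProbe, not defined in this manifest): after the container starts, the kubelet probes it until the check passes; only then is the Pod marked Ready and added to the Service endpoints (Ready is separate from the Running phase)
# Both probes exist to decide whether a container started correctly under K8S orchestration and keeps running normally

port: 8443
# The kubelet sends an HTTPS GET / to port 8443; a successful response means the container is healthy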
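The kubelet treats an httpGet probe as successful when the status code is at least 200 and below 400; any other code, or no answer within timeoutSeconds, is a failure. A minimal sketch of that rule plus a manual check you can run from a node against the Pod IP. `probe_ok`, `check_dashboard` and the example Pod IP are hypothetical. `-k` is needed because the certificate is self-signed; the kubelet itself skips certificate verification for HTTPS probes.

```shell
#!/usr/bin/env bash
# probe_ok CODE
# Returns 0 (success) if CODE is an HTTP status the kubelet accepts
# for an httpGet probe: 200 <= CODE < 400.
probe_ok() {
  local code="$1"
  [ "$code" -ge 200 ] && [ "$code" -lt 400 ]
}

# check_dashboard POD_IP
# Mimics the livenessProbe in dp.yaml: HTTPS GET / on port 8443 with a
# 30-second timeout, accepting the self-signed certificate (-k).
# curl reports 000 when it gets no response at all.
check_dashboard() {
  local code
  code="$(curl -sk -o /dev/null -w '%{http_code}' --max-time 30 "https://$1:8443/")"
  if probe_ok "$code"; then
    echo "healthy ($code)"
  else
    echo "unhealthy ($code)"
  fi
}

# Usage (find the Pod IP with: kubectl -n kube-system get pod -o wide)
# check_dashboard 172.7.21.5
```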

svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.od.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443
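The `extensions/v1beta1` Ingress API was deprecated in Kubernetes 1.14 and removed in 1.22. On a cluster with `networking.k8s.io/v1` (available since 1.19) the same rule is written with `service.name`/`service.port.number` as the backend, and every path needs a `pathType`. A sketch that prints the equivalent manifest; `ingress_v1` is a hypothetical helper, and it assumes your Traefik version still honours the `kubernetes.io/ingress.class` annotation (newer setups use `spec.ingressClassName`).

```shell
#!/usr/bin/env bash
# ingress_v1 HOST SERVICE PORT
# Prints a networking.k8s.io/v1 Ingress equivalent to ingress.yaml.
# Apply it with: ingress_v1 ... | kubectl apply -f -
ingress_v1() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: $1
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: $2
            port:
              number: $3
EOF
}

ingress_v1 dashboard.od.com kubernetes-dashboard 443
```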

Create the resources:

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/rbac.yaml

serviceaccount/kubernetes-dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dp.yaml

deployment.apps/kubernetes-dashboard created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/svc.yaml

service/kubernetes-dashboard created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/ingress.yaml

ingress.extensions/kubernetes-dashboard created
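The four `kubectl apply` calls can be scripted as one loop. Applying rbac.yaml first avoids Pod creation errors while the ServiceAccount referenced by the Deployment does not exist yet. A minimal sketch; `apply_dashboard`, `BASE_URL` and `DRY_RUN` are hypothetical names, and with `DRY_RUN` set the loop only prints the commands.

```shell
#!/usr/bin/env bash
# Base URL of the manifest server used above.
BASE_URL="http://k8s-yaml.od.com/dashboard"

# apply_dashboard
# Applies the manifests in dependency order; prints the commands instead
# when DRY_RUN is non-empty. Stops at the first failing apply.
apply_dashboard() {
  local f
  for f in rbac dp svc ingress; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "kubectl apply -f $BASE_URL/$f.yaml"
    else
      kubectl apply -f "$BASE_URL/$f.yaml" || return 1
    fi
  done
}

DRY_RUN=1
apply_dashboard
```

Afterwards, `kubectl -n kube-system get pods -l k8s-app=kubernetes-dashboard` should show the Pod as Running with 1/1 ready, and `kubectl -n kube-system get ingress` should list the host dashboard.od.com.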

View the web UI through the Ingress host dashboard.od.com.


Original article: https://www.cnblogs.com/liuhuan086/p/13550053.html