Tags: perm scheduler source rpc admin apache edits cat default

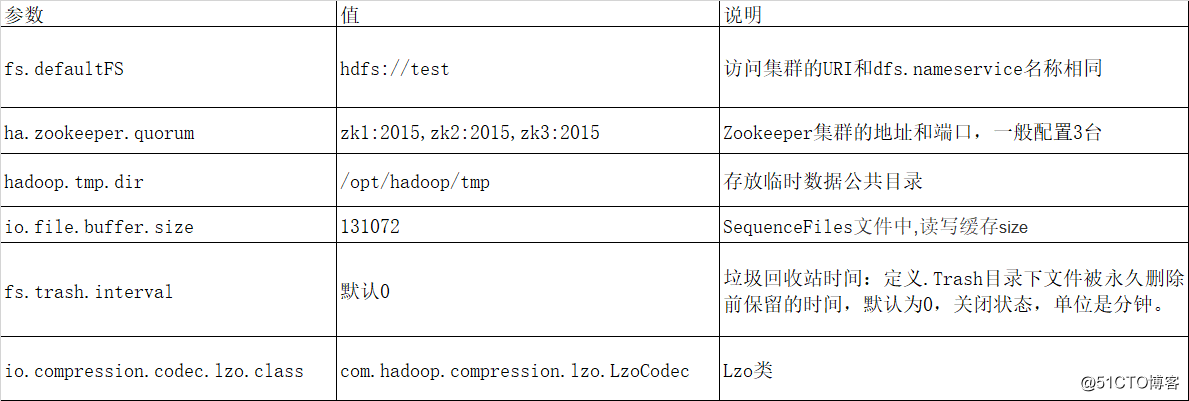

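The rule for cluster parameters is simple: a value you set explicitly overrides the default, and any parameter you leave unset keeps its default. This post summarizes commonly used parameters in the Hadoop configuration files core-site.xml, hdfs-site.xml, mapred-site.xml, and yarn-site.xml. They configure two components, Hadoop (HDFS) and Yarn: one handles storage and the other is the resource management framework, which roughly corresponds to storage and compute. Some companies separate compute nodes from storage nodes and others do not; choose whichever fits your needs.

1. core-site.xml file

<configuration>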

<property>

<name>fs.defaultFS</name>

<value>hdfs://HadoopHhy</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>zk1:2015,zk2:2015,zk3:2015</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/bigdata/hadoop/tmp</value>

<final>true</final>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

<final>true</final>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

</property>

</configuration>

Parameter settings and explanations:

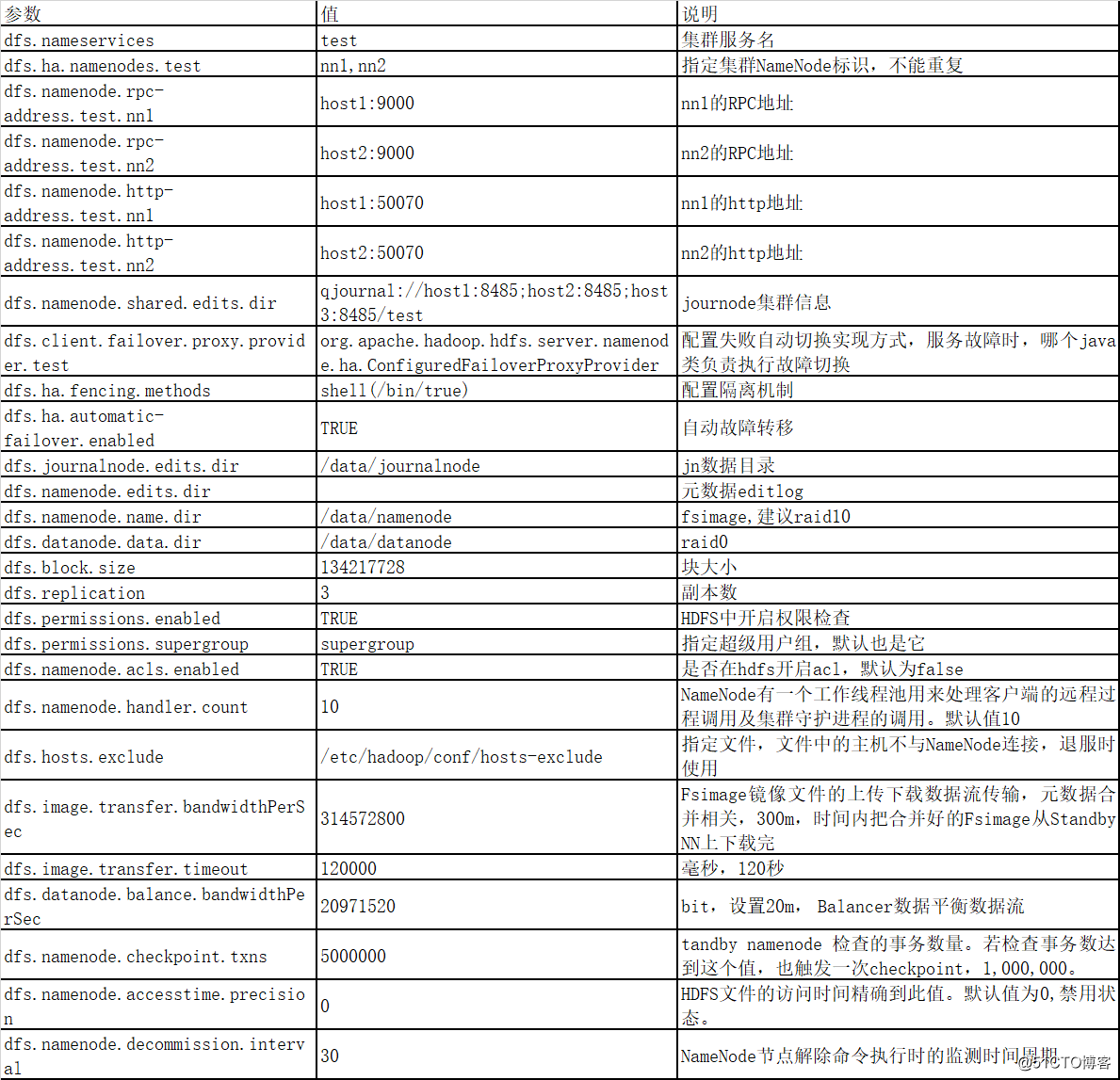
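
To make the override rule concrete, here is a minimal annotated sketch of two properties from the core-site.xml listing. The default values quoted in the comments (0 for fs.trash.interval, 4096 for io.file.buffer.size) are the ones shipped in core-default.xml of stock Apache Hadoop; treat them as an assumption and check the core-default.xml of your own release, since distributions may differ.

```xml
<configuration>
  <!-- Explicit value: overrides the core-default.xml default of 0,
       which means the trash feature is disabled. The unit is minutes,
       so 1440 keeps deleted files in the trash for 24 hours. -->
  <property>
    <name>fs.trash.interval</name>
    <value>1440</value>
  </property>

  <!-- Explicit value plus final: 131072 bytes (128 KB) replaces the
       default of 4096, and final=true stops configuration resources
       loaded later, or individual jobs, from overriding it again. -->
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
    <final>true</final>
  </property>

  <!-- Anything not listed here, for example fs.trash.checkpoint.interval,
       simply keeps its default. -->
</configuration>
```

To see which value actually took effect on a node, `hdfs getconf -confKey fs.trash.interval` prints the resolved setting.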

2. hdfs-site.xml file

This is the core configuration file for HDFS. It mainly sets HDFS-related properties of the NameNode and DataNode, and takes effect on the NameNode and DataNode nodes.

<configuration>

<property>

<name>dfs.nameservices</name>

<value>test</value>

</property>

<property>

<name>dfs.ha.namenodes.test</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.test.nn1</name>

<value>host1:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.test.nn2</name>

<value>host2:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.test.nn1</name>

<value>host1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.test.nn2</name>

<value>host2:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://host1:8485;host2:8485;host3:8485/test</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.test</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>shell(/bin/true)</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data/journal</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.block.size</name>

<value>134217728</value>

<final>true</final>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/data/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/data/datanode</value>

<final>true</final>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.acls.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.image.transfer.bandwidthPerSec</name>

<value>314572800</value>

</property>

<property>

<name>dfs.image.transfer.timeout</name>

<value>120000</value>

</property>

<property>

<name>dfs.namenode.checkpoint.txns</name>

<value>5000000</value>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>/data/editlog</value>

</property>

<property>

<name>dfs.hosts.exclude</name>

<value>/etc/hadoop/hosts-exclude</value>

</property>

<property>

<name>dfs.datanode.balance.bandwidthPerSec</name>

<value>20971520</value>

</property>

<property>

<name>dfs.namenode.accesstime.precision</name>

<value>0</value>

</property>

<property>

<name>dfs.namenode.decommission.interval</name>

<value>30</value>

</property>

</configuration>
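
The HA properties in this file are tied together by the logical nameservice ID. The sketch below, assuming the ID is `test` as in the listing, shows how it recurs as a suffix in the key names and how fs.defaultFS in core-site.xml would normally reference the same ID. The core-site.xml listing earlier uses `hdfs://HadoopHhy`; the post does not say whether that belongs to a different cluster, so the `hdfs://test` value here is an assumption, not the author's setting.

```xml
<!-- hdfs-site.xml: the nameservice ID "test" is declared once... -->
<property>
  <name>dfs.nameservices</name>
  <value>test</value>
</property>
<!-- ...and then appears as a suffix in the per-nameservice keys. -->
<property>
  <name>dfs.ha.namenodes.test</name>
  <value>nn1,nn2</value>
</property>
<!-- The NameNode IDs nn1 and nn2 become a further suffix. -->
<property>
  <name>dfs.namenode.rpc-address.test.nn1</name>
  <value>host1:9000</value>
</property>

<!-- core-site.xml (assumption): clients address the logical
     nameservice, not a single NameNode host. -->
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://test</value>
</property>
```

The same ID also closes the JournalNode URI in dfs.namenode.shared.edits.dir (`.../test`) and names the dfs.client.failover.proxy.provider.test key.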

Parameter settings and explanations:

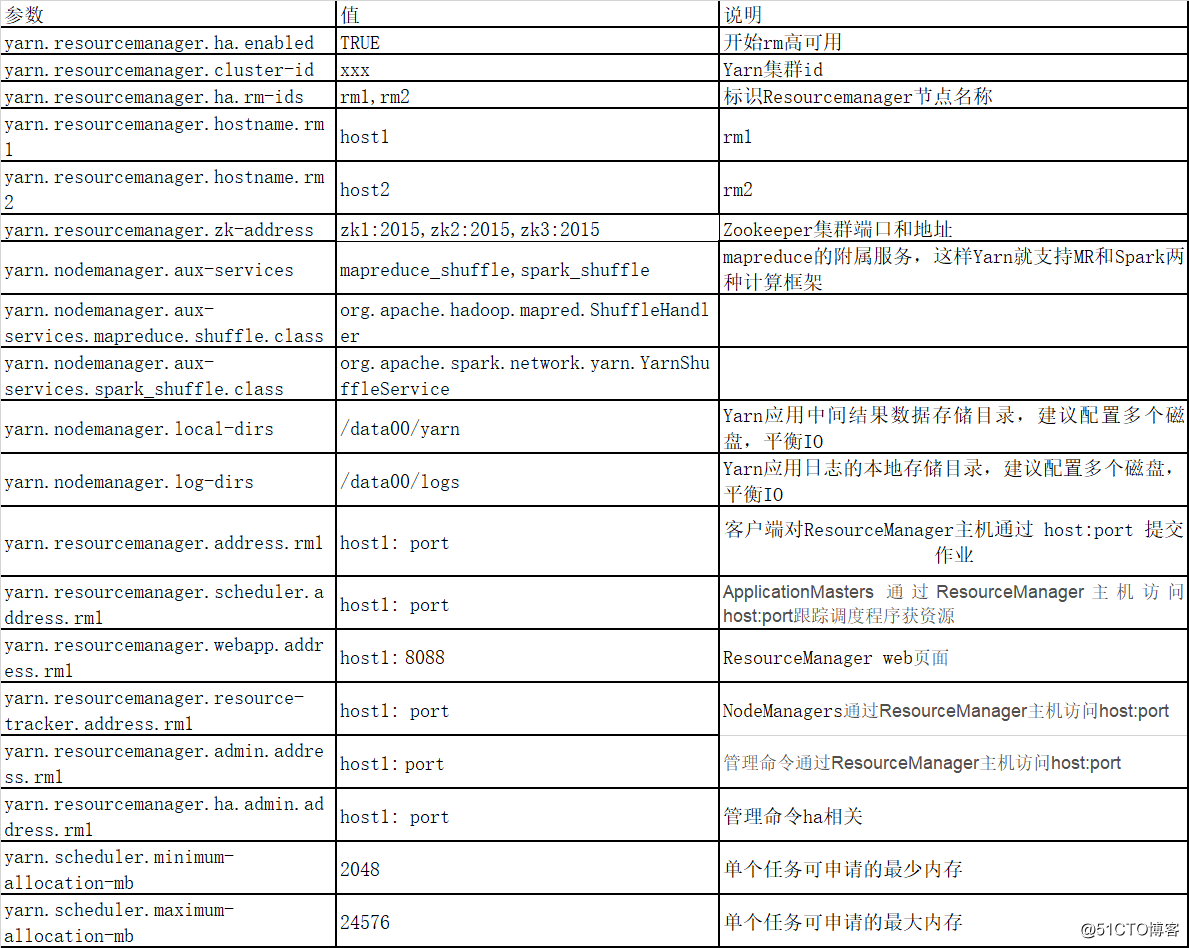
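
Three keys in the hdfs-site.xml listing, dfs.block.size, dfs.name.dir and dfs.data.dir, are the Hadoop 1.x spellings. Hadoop 2 and later still accept them but log a deprecation message. A sketch of the same settings under their current names, with the values unchanged from the listing:

```xml
<configuration>
  <!-- formerly dfs.block.size; 134217728 bytes = 128 MB -->
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
    <final>true</final>
  </property>
  <!-- formerly dfs.name.dir: where the NameNode stores the fsimage -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/data/namenode</value>
  </property>
  <!-- formerly dfs.data.dir: where DataNodes store block files -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/data/datanode</value>
    <final>true</final>
  </property>
</configuration>
```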

3. yarn-site.xml file

This is the core configuration file of the Yarn resource management framework; all Yarn settings go in this file.

Parameter settings and explanations:

<configuration>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>xxx</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>host1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>host2</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>host1:2015,host2:2015,host3:2015</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle,spark_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/data/yarn</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/data/logs</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>host1:port</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>host1:port</value>

<final>true</final>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>host1:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>host1:port</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>host1:port</value>

</property>

<property>

<name>yarn.resourcemanager.ha.admin.address.rm1</name>

<value>host1:port</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>host2:port</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>host2:port</value>

<final>true</final>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>host2:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>host2:port</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>host2:port</value>

</property>

<property>

<name>yarn.resourcemanager.ha.admin.address.rm2</name>

<value>host2:port</value>

</property>

<property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>false</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>172800</value>

</property>

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>21600</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>24576</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://host2:port/jobhistory/logs</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>25600</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>6</value>

</property>

<property>

<name>yarn.resourcemanager.nodemanager-connect-retries</name>

<value>10</value>

</property>

</configuration>
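
One thing to watch in this listing: yarn.log-aggregation-enable is false, while the two retention settings and yarn.log.server.url only matter for aggregated logs. If the intent is to keep container logs on HDFS, a sketch could look like the following. The directory /tmp/logs is the stock default of yarn.nodemanager.remote-app-log-dir and is shown purely as an assumption; the retention values are the ones from the listing.

```xml
<configuration>
  <!-- Turn aggregation on so logs of finished containers are copied to HDFS. -->
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <!-- HDFS directory for aggregated logs (assumption: stock default). -->
  <property>
    <name>yarn.nodemanager.remote-app-log-dir</name>
    <value>/tmp/logs</value>
  </property>
  <!-- Keep aggregated logs for 172800 s = 2 days... -->
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>172800</value>
  </property>
  <!-- ...and look for expired logs every 21600 s = 6 hours. -->
  <property>
    <name>yarn.log-aggregation.retain-check-interval-seconds</name>
    <value>21600</value>
  </property>
</configuration>
```

With aggregation enabled, `yarn logs -applicationId <application ID>` fetches the logs of a finished application from that directory.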


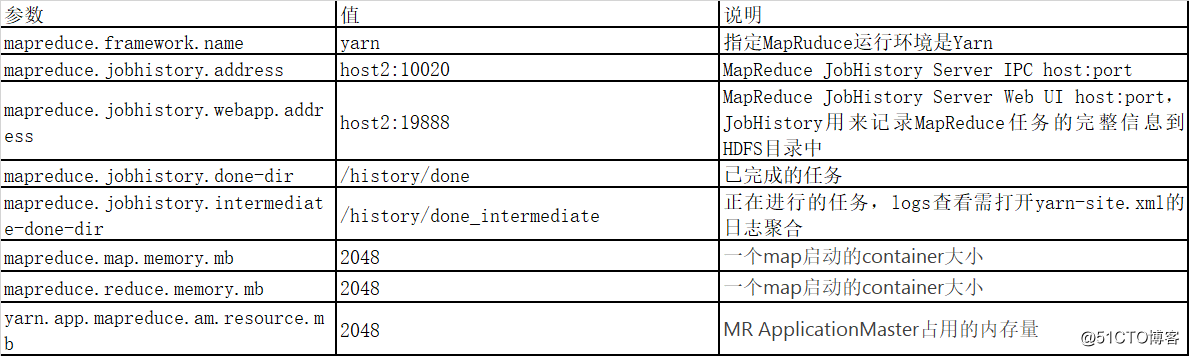
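
The memory-related values in the yarn-site.xml listing are easier to read side by side. The sketch below repeats them with the arithmetic in comments; the per-node container count is plain division and ignores vcores and any scheduler-specific rounding.

```xml
<configuration>
  <!-- Memory one NodeManager offers to containers: 25600 MB = 25 GB. -->
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>25600</value>
  </property>
  <!-- Smallest container the scheduler grants: 2048 MB = 2 GB.
       25600 / 2048 = 12.5, so at most 12 minimum-size containers per node. -->
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
  </property>
  <!-- Largest single container: 24576 MB = 24 GB, which still fits
       on one node because 24576 is below 25600. -->
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>24576</value>
  </property>
  <!-- Virtual cores one NodeManager offers to containers. -->
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>6</value>
  </property>
</configuration>
```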

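4. mapred-site.xml file

The configuration file for MapReduce (MR).

JobHistory records the complete information of MapReduce jobs in an HDFS directory.

Parameter settings and explanations: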
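
No listing for this file survives in the post, so here is a minimal sketch of what a mapred-site.xml matching the rest of the setup might contain. Everything in it is an assumption: host2 is borrowed from yarn.log.server.url in yarn-site.xml, ports 10020 and 19888 are the stock JobHistory defaults, and the two /mr-history paths are hypothetical HDFS directories.

```xml
<configuration>
  <!-- Run MapReduce jobs on Yarn rather than the local runner. -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- JobHistory server IPC address (assumed host, default port). -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>host2:10020</value>
  </property>
  <!-- JobHistory web UI (assumed host, default port). -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>host2:19888</value>
  </property>
  <!-- Hypothetical HDFS directory where history files land as jobs finish. -->
  <property>
    <name>mapreduce.jobhistory.intermediate-done-dir</name>
    <value>/mr-history/tmp</value>
  </property>
  <!-- Hypothetical HDFS directory for history files managed by the server. -->
  <property>
    <name>mapreduce.jobhistory.done-dir</name>
    <value>/mr-history/done</value>
  </property>
</configuration>
```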


Original article: https://blog.51cto.com/13836096/2532831