
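I. Steps required to add a compute node

1: Configure the yum repositories

2: Synchronize the time

3: Install the OpenStack base packages

4: Install nova-compute

5: Install neutron-linuxbridge-agent

6: Start the nova-compute and linuxbridge-agent services

7: Verify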

II. Adding a compute node in practice

1. Configure the yum repositories

Mount the installation disc

    [root@computer2 ~]# mount /dev/cdrom /mnt
    mount: /dev/sr0 is write-protected, mounting read-only
    [root@computer2 ~]# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        48G  1.8G   47G   4% /
    devtmpfs        479M     0  479M   0% /dev
    tmpfs           489M     0  489M   0% /dev/shm
    tmpfs           489M  6.7M  482M   2% /run
    tmpfs           489M     0  489M   0% /sys/fs/cgroup
    tmpfs            98M     0   98M   0% /run/user/0
    /dev/sr0        4.3G  4.3G     0 100% /mnt
    [root@computer2 ~]# ll /mnt
    total 664
    -rw-rw-r-- 3 root root     14 Sep  5  2017 CentOS_BuildTag
    drwxr-xr-x 3 root root   2048 Sep  5  2017 EFI
    -rw-rw-r-- 3 root root    227 Aug 30  2017 EULA
    -rw-rw-r-- 3 root root  18009 Dec 10  2015 GPL
    drwxr-xr-x 3 root root   2048 Sep  5  2017 images
    drwxr-xr-x 2 root root   2048 Sep  5  2017 isolinux
    drwxr-xr-x 2 root root   2048 Sep  5  2017 LiveOS
    drwxrwxr-x 2 root root 641024 Sep  5  2017 Packages
    drwxr-xr-x 2 root root   4096 Sep  5  2017 repodata
    -rw-rw-r-- 3 root root   1690 Dec 10  2015 RPM-GPG-KEY-CentOS-7
    -rw-rw-r-- 3 root root   1690 Dec 10  2015 RPM-GPG-KEY-CentOS-Testing-7
    -r--r--r-- 1 root root   2883 Sep  6  2017 TRANS.TBL

Upload openstack_rpm.tar.gz to /opt and extract it

    [root@computer2 ~]# cd /opt
    [root@computer2 opt]# ll
    total 241672
    -rw-r--r-- 1 root root 247468369 Nov 21 20:23 openstack_rpm.tar.gz
    [root@computer2 opt]# tar -zxvf openstack_rpm.tar.gz
    [root@computer2 opt]# ll
    total 241724
    -rw-r--r-- 1 root root 247468369 Nov 21 20:23 openstack_rpm.tar.gz
    drwxr-xr-x 3 root root     36864 Jul 19  2017 repo
    [root@computer2 opt]# pwd
    /opt

Generate the repo configuration file

    [root@computer2 opt]# echo '[local]
    > name=local
    > baseurl=file:///mnt
    > gpgcheck=0
    > [openstack]
    > name=openstack
    > baseurl=file:///opt/repo
    > gpgcheck=0' >/etc/yum.repos.d/local.repo
    [root@computer2 opt]# cat /etc/yum.repos.d/local.repo
    [local]
    name=local
    baseurl=file:///mnt
    gpgcheck=0
    [openstack]
    name=openstack
    baseurl=file:///opt/repo
    gpgcheck=0
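The same repo file can also be written with a here-document, which avoids the multi-line quoted echo. This is only a minimal sketch: `REPO_FILE` is an assumed variable that defaults to a scratch file so the snippet can be tried safely; on the compute node it would be set to /etc/yum.repos.d/local.repo.

```shell
#!/usr/bin/env bash
# Sketch: write the two local repositories with a here-document.
# REPO_FILE is an assumption; point it at /etc/yum.repos.d/local.repo on the node.
REPO_FILE="${REPO_FILE:-$(mktemp)}"

# The quoted 'EOF' keeps the body literal (no variable expansion).
cat > "$REPO_FILE" <<'EOF'
[local]
name=local
baseurl=file:///mnt
gpgcheck=0

[openstack]
name=openstack
baseurl=file:///opt/repo
gpgcheck=0
EOF

# Show the section headers that were written.
grep '^\[' "$REPO_FILE"
```

After writing the real file, `yum makecache` picks up both repositories as shown in the next step.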

Build the yum cache and mount the disc automatically at boot

    [root@computer2 opt]# yum makecache
    Loaded plugins: fastestmirror
    local                                      | 3.6 kB  00:00:00
    openstack                                  | 2.9 kB  00:00:00
    (1/3): openstack/primary_db                | 398 kB  00:00:00
    (2/3): openstack/other_db                  | 211 kB  00:00:00
    (3/3): openstack/filelists_db              | 465 kB  00:00:00
    Determining fastest mirrors
    Metadata Cache Created
    [root@computer2 opt]# echo 'mount /dev/cdrom /mnt' >>/etc/rc.local
    [root@computer2 opt]# chmod +x /etc/rc.d/rc.local
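Because `>>` appends unconditionally, re-running the step adds the mount line to rc.local a second time. A small guard can make the append idempotent. The helper name `append_once` and the `RC_FILE` variable are hypothetical; the demo writes to a scratch file rather than the real /etc/rc.d/rc.local.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if it is not already there.
# append_once is a hypothetical helper name, not part of any package.
append_once() {
    local line="$1" file="$2"
    # -x: match the whole line, -F: treat the text literally, -q: no output
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo against a scratch file; on the node the target would be /etc/rc.d/rc.local.
RC_FILE="${RC_FILE:-$(mktemp)}"
append_once 'mount /dev/cdrom /mnt' "$RC_FILE"
append_once 'mount /dev/cdrom /mnt' "$RC_FILE"   # second call changes nothing
cat "$RC_FILE"
```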

2. Synchronize the time

    [root@computer2 opt]# rpm -qa |grep chrony
    chrony-3.1-2.el7.centos.x86_64
    [root@computer2 opt]# vim /etc/chrony.conf
    [root@computer2 opt]# grep server /etc/chrony.conf
    # Use public servers from the pool.ntp.org project.
    server 10.0.0.11 iburst
    #server 0.centos.pool.ntp.org iburst
    #server 1.centos.pool.ntp.org iburst
    #server 2.centos.pool.ntp.org iburst
    #server 3.centos.pool.ntp.org iburst
    [root@computer2 opt]# systemctl restart chronyd
    [root@computer2 opt]# systemctl status chronyd
    ● chronyd.service - NTP client/server
       Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; vendor preset: enabled)
       Active: active (running) since Sat 2020-11-21 20:30:35 CST; 6s ago
         Docs: man:chronyd(8)
               man:chrony.conf(5)
      Process: 1769 ExecStartPost=/usr/libexec/chrony-helper update-daemon (code=exited, status=0/SUCCESS)
      Process: 1766 ExecStart=/usr/sbin/chronyd $OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 1768 (chronyd)
       CGroup: /system.slice/chronyd.service
               └─1768 /usr/sbin/chronyd
    Nov 21 20:30:35 computer2 systemd[1]: Starting NTP client/server...
    Nov 21 20:30:35 computer2 chronyd[1768]: chronyd version 3.1 starting (+CMDMON +NTP +REFCLOCK +RTC +PRIVDROP +SCFILTER +SECHA...+DEBUG)
    Nov 21 20:30:35 computer2 chronyd[1768]: Frequency -2.800 +/- 19.790 ppm read from /var/lib/chrony/drift
    Nov 21 20:30:35 computer2 systemd[1]: Started NTP client/server.
    Nov 21 20:30:39 computer2 chronyd[1768]: Selected source 10.0.0.11
    Hint: Some lines were ellipsized, use -l to show in full.
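The manual vim edit (comment out the public pool servers, add the controller at 10.0.0.11) can be scripted. The sketch below works on a scratch copy with assumed sample content; the variable names `SRC`, `DST`, and `NTP_SERVER` are illustrative. On the node the input would be /etc/chrony.conf, and the result would be reviewed before it replaces the original.

```shell
#!/usr/bin/env bash
# Sketch: point chrony at the controller and comment out the default servers.
NTP_SERVER="10.0.0.11"          # controller address used in this walkthrough
SRC="$(mktemp)"                 # stand-in for /etc/chrony.conf
DST="$(mktemp)"                 # edited result

# Assumed sample of the stock configuration.
cat > "$SRC" <<'EOF'
# Use public servers from the pool.ntp.org project.
server 0.centos.pool.ntp.org iburst
server 1.centos.pool.ntp.org iburst
server 2.centos.pool.ntp.org iburst
server 3.centos.pool.ntp.org iburst
driftfile /var/lib/chrony/drift
EOF

# Before the first "server" line, emit the new server once;
# every existing "server" line is kept but commented out.
awk -v ctl="$NTP_SERVER" '
    /^server / {
        if (!done) { print "server " ctl " iburst"; done = 1 }
        print "#" $0
        next
    }
    { print }
' "$SRC" > "$DST"

grep server "$DST"
```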

3. Install the OpenStack client and openstack-selinux

    [root@computer2 opt]# yum install python-openstackclient.noarch openstack-selinux.noarch -y

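4. Install nova-compute

    [root@computer2 opt]# yum install openstack-nova-compute -y

Edit the configuration file /etc/nova/nova.conf. The openstack-utils package provides the openstack-config command used for the edits.

    [root@computer2 opt]# yum install openstack-utils.noarch -y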

    [root@computer2 opt]# cp /etc/nova/nova.conf{,.bak}
    [root@computer2 opt]# grep -Ev '^$|#' /etc/nova/nova.conf.bak >/etc/nova/nova.conf
    [root@computer2 opt]# cat /etc/nova/nova.conf
    [DEFAULT]
    [api_database]
    [barbican]
    [cache]
    [cells]
    [cinder]
    [conductor]
    [cors]
    [cors.subdomain]
    [database]
    [ephemeral_storage_encryption]
    [glance]
    [guestfs]
    [hyperv]
    [image_file_url]
    [ironic]
    [keymgr]
    [keystone_authtoken]
    [libvirt]
    [matchmaker_redis]
    [metrics]
    [neutron]
    [osapi_v21]
    [oslo_concurrency]
    [oslo_messaging_amqp]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    [oslo_middleware]
    [oslo_policy]
    [rdp]
    [serial_console]
    [spice]
    [ssl]
    [trusted_computing]
    [upgrade_levels]
    [vmware]
    [vnc]
    [workarounds]
    [xenserver]
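One thing to keep in mind about the filter `grep -Ev '^$|#'`: it drops every line that contains a `#` anywhere, not only comment lines. That is harmless for the stock nova.conf, where all settings are commented out, but it would also remove an active setting whose value contains `#`. The sketch below compares it with a stricter pattern on a made-up sample file (the `password = abc#123` line is hypothetical).

```shell
#!/usr/bin/env bash
# Sketch: compare the loose comment filter with a stricter one.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
# a comment line

[DEFAULT]
#debug = false
password = abc#123
EOF

# Loose: removes empty lines and ANY line containing '#'.
loose="$(grep -Ev '^$|#' "$sample")"

# Strict: removes only blank lines and lines whose first non-space character is '#'.
strict="$(grep -Ev '^[[:space:]]*(#|$)' "$sample")"

printf 'loose:\n%s\n\nstrict:\n%s\n' "$loose" "$strict"
```

With the strict pattern the hypothetical `password` line survives, while the loose pattern keeps only the section header.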

    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.13
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf keystone_authtoken password 123456
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password 123456
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf vnc enabled True
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron auth_type password
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron project_domain_name default
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron user_domain_name default
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron project_name service
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron username neutron
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf neutron password 123456
    [root@computer2 opt]# cat /etc/nova/nova.conf
    [DEFAULT]
    rpc_backend = rabbit
    auth_strategy = keystone
    my_ip = 10.0.0.13
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver
    [api_database]
    [barbican]
    [cache]
    [cells]
    [cinder]
    [conductor]
    [cors]
    [cors.subdomain]
    [database]
    [ephemeral_storage_encryption]
    [glance]
    api_servers = http://controller:9292
    [guestfs]
    [hyperv]
    [image_file_url]
    [ironic]
    [keymgr]
    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = 123456
    [libvirt]
    [matchmaker_redis]
    [metrics]
    [neutron]
    url = http://controller:9696
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = 123456
    [osapi_v21]
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [oslo_messaging_amqp]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    rabbit_host = controller
    rabbit_userid = openstack
    rabbit_password = 123456
    [oslo_middleware]
    [oslo_policy]
    [rdp]
    [serial_console]
    [spice]
    [ssl]
    [trusted_computing]
    [upgrade_levels]
    [vmware]
    [vnc]
    enabled = True
    vncserver_listen = 0.0.0.0
    vncserver_proxyclient_address = $my_ip
    novncproxy_base_url = http://controller:6080/vnc_auto.html
    [workarounds]
    [xenserver]

    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf libvirt cpu_mode none
    [root@computer2 opt]# openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
    [root@computer2 opt]# cat /etc/nova/nova.conf
    [DEFAULT]
    rpc_backend = rabbit
    auth_strategy = keystone
    my_ip = 10.0.0.13
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver
    [api_database]
    [barbican]
    [cache]
    [cells]
    [cinder]
    [conductor]
    [cors]
    [cors.subdomain]
    [database]
    [ephemeral_storage_encryption]
    [glance]
    api_servers = http://controller:9292
    [guestfs]
    [hyperv]
    [image_file_url]
    [ironic]
    [keymgr]
    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = 123456
    [libvirt]
    cpu_mode = none
    virt_type = qemu
    [matchmaker_redis]
    [metrics]
    [neutron]
    url = http://controller:9696
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = 123456
    [osapi_v21]
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [oslo_messaging_amqp]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    rabbit_host = controller
    rabbit_userid = openstack
    rabbit_password = 123456
    [oslo_middleware]
    [oslo_policy]
    [rdp]
    [serial_console]
    [spice]
    [ssl]
    [trusted_computing]
    [upgrade_levels]
    [vmware]
    [vnc]
    enabled = True
    vncserver_listen = 0.0.0.0
    vncserver_proxyclient_address = $my_ip
    novncproxy_base_url = http://controller:6080/vnc_auto.html
    [workarounds]
    [xenserver]
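The long run of `openstack-config --set` calls can be driven from a small "section key value" table instead of being typed one by one. This is a sketch under stated assumptions: `SET_CMD` and `apply_settings` are hypothetical names, only a few of the settings above are listed, and by default the loop performs a dry run that just prints each command. Setting `SET_CMD=openstack-config` on the node would apply them.

```shell
#!/usr/bin/env bash
# Sketch: drive openstack-config from a "section key value" table.
# SET_CMD is an assumption: the default only prints each command (dry run).
SET_CMD="${SET_CMD:-echo openstack-config}"
CONF_FILE="/etc/nova/nova.conf"

apply_settings() {
    local section key value
    while read -r section key value; do
        # Skip blank lines and comment lines in the table.
        [ -z "$section" ] && continue
        case "$section" in '#'*) continue ;; esac
        # SET_CMD is left unquoted on purpose so "echo openstack-config" splits
        # into two words; stdin is redirected so the command cannot eat the table.
        $SET_CMD --set "$CONF_FILE" "$section" "$key" "$value" </dev/null
    done
}

# The quoted 'EOF' keeps $my_ip literal, as nova.conf expects.
apply_settings <<'EOF'
DEFAULT rpc_backend rabbit
DEFAULT my_ip 10.0.0.13
glance api_servers http://controller:9292
vnc vncserver_proxyclient_address $my_ip
libvirt cpu_mode none
libvirt virt_type qemu
EOF
```

Keeping the table in one place makes it easier to reuse the same settings for the next compute node, where only `my_ip` changes.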

5. Install neutron-linuxbridge-agent

    [root@computer2 opt]# yum install openstack-neutron-linuxbridge ebtables ipset -y

Edit the configuration file /etc/neutron/neutron.conf

    [root@computer2 opt]# cp /etc/neutron/neutron.conf{,.bak}
    [root@computer2 opt]# grep -Ev '^$|#' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf
    [root@computer2 opt]# cat /etc/neutron/neutron.conf
    [DEFAULT]
    [agent]
    [cors]
    [cors.subdomain]
    [database]
    [keystone_authtoken]
    [matchmaker_redis]
    [nova]
    [oslo_concurrency]
    [oslo_messaging_amqp]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    [oslo_policy]
    [qos]
    [quotas]
    [ssl]

    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password 123456
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack
    [root@computer2 opt]# openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password 123456
    [root@computer2 opt]# cat /etc/neutron/neutron.conf
    [DEFAULT]
    rpc_backend = rabbit
    auth_strategy = keystone
    [agent]
    [cors]
    [cors.subdomain]
    [database]
    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = 123456
    [matchmaker_redis]
    [nova]
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    [oslo_messaging_amqp]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    rabbit_host = controller
    rabbit_userid = openstack
    rabbit_password = 123456
    [oslo_policy]
    [qos]
    [quotas]
    [ssl]

Edit the configuration file /etc/neutron/plugins/ml2/linuxbridge_agent.ini

    [root@computer2 opt]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}
    [root@computer2 opt]# grep '^[a-zA-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [root@computer2 opt]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [DEFAULT]
    [agent]
    [linux_bridge]
    [securitygroup]
    [vxlan]

    [root@computer2 opt]# openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0
    [root@computer2 opt]# openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True
    [root@computer2 opt]# openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    [root@computer2 opt]# openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False
    [root@computer2 opt]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [DEFAULT]
    [agent]
    [linux_bridge]
    physical_interface_mappings = provider:eth0
    [securitygroup]
    enable_security_group = True
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    [vxlan]
    enable_vxlan = False
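Rather than reading the whole file back with `cat`, individual settings can be checked with a small lookup. The `ini_get` function below is a hypothetical awk-based helper, not part of openstack-utils, and the demo runs against a scratch copy of the agent settings shown above.

```shell
#!/usr/bin/env bash
# Sketch: ini_get FILE SECTION KEY prints the value of KEY inside [SECTION].
# ini_get is a hypothetical helper written for this example.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[/ { in_section = ($0 == "[" section "]"); next }
        in_section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Scratch copy standing in for /etc/neutron/plugins/ml2/linuxbridge_agent.ini.
AGENT_INI="$(mktemp)"
cat > "$AGENT_INI" <<'EOF'
[DEFAULT]
[agent]
[linux_bridge]
physical_interface_mappings = provider:eth0
[securitygroup]
enable_security_group = True
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
[vxlan]
enable_vxlan = False
EOF

ini_get "$AGENT_INI" linux_bridge physical_interface_mappings
ini_get "$AGENT_INI" vxlan enable_vxlan
```

The interface name in `provider:eth0` has to match a real NIC on the new node, so this value is worth checking before the agent is started.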

6. Start the services

    [root@computer2 opt]# systemctl start libvirtd openstack-nova-compute neutron-linuxbridge-agent
    [root@computer2 opt]# systemctl enable libvirtd openstack-nova-compute neutron-linuxbridge-agent
    Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.
    Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-linuxbridge-agent.service to /usr/lib/systemd/system/neutron-linuxbridge-agent.service.
    [root@computer2 opt]# systemctl status libvirtd openstack-nova-compute neutron-linuxbridge-agent
    ● libvirtd.service - Virtualization daemon
       Loaded: loaded (/usr/lib/systemd/system/libvirtd.service; enabled; vendor preset: enabled)
       Active: active (running) since Sat 2020-11-21 20:52:03 CST; 50s ago
         Docs: man:libvirtd(8)
               http://libvirt.org
     Main PID: 2653 (libvirtd)
       CGroup: /system.slice/libvirtd.service
               └─2653 /usr/sbin/libvirtd
    Nov 21 20:52:02 computer2 systemd[1]: Starting Virtualization daemon...
    Nov 21 20:52:03 computer2 systemd[1]: Started Virtualization daemon.
    ● openstack-nova-compute.service - OpenStack Nova Compute Server
       Loaded: loaded (/usr/lib/systemd/system/openstack-nova-compute.service; enabled; vendor preset: disabled)
       Active: active (running) since Sat 2020-11-21 20:52:19 CST; 34s ago
     Main PID: 2687 (nova-compute)
       CGroup: /system.slice/openstack-nova-compute.service
               └─2687 /usr/bin/python2 /usr/bin/nova-compute
    Nov 21 20:52:03 computer2 systemd[1]: Starting OpenStack Nova Compute Server...
    Nov 21 20:52:19 computer2 nova-compute[2687]: /usr/lib/python2.7/site-packages/pkg_resources/__init__.py:187: RuntimeWarning: You ha...
    Nov 21 20:52:19 computer2 nova-compute[2687]: stacklevel=1,
    Nov 21 20:52:19 computer2 systemd[1]: Started OpenStack Nova Compute Server.
    ● neutron-linuxbridge-agent.service - OpenStack Neutron Linux Bridge Agent
       Loaded: loaded (/usr/lib/systemd/system/neutron-linuxbridge-agent.service; enabled; vendor preset: disabled)
       Active: active (running) since Sat 2020-11-21 20:52:03 CST; 51s ago
     Main PID: 2668 (neutron-linuxbr)
       CGroup: /system.slice/neutron-linuxbridge-agent.service
               ├─2668 /usr/bin/python2 /usr/bin/neutron-linuxbridge-agent --config-file /usr/share/neutron/neutron-dist.conf --config-fi...
               ├─2737 sudo neutron-rootwrap-daemon /etc/neutron/rootwrap.conf
               └─2738 /usr/bin/python2 /usr/bin/neutron-rootwrap-daemon /etc/neutron/rootwrap.conf
    Nov 21 20:52:03 computer2 neutron-enable-bridge-firewall.sh[2654]: net.bridge.bridge-nf-call-arptables = 1
    Nov 21 20:52:03 computer2 neutron-enable-bridge-firewall.sh[2654]: net.bridge.bridge-nf-call-iptables = 1
    Nov 21 20:52:03 computer2 neutron-enable-bridge-firewall.sh[2654]: net.bridge.bridge-nf-call-ip6tables = 1
    Nov 21 20:52:03 computer2 systemd[1]: Started OpenStack Neutron Linux Bridge Agent.
    Nov 21 20:52:07 computer2 neutron-linuxbridge-agent[2668]: Guru mediation now registers SIGUSR1 and SIGUSR2 by default for back...orts.
    Nov 21 20:52:08 computer2 neutron-linuxbridge-agent[2668]: Option "verbose" from group "DEFAULT" is deprecated for removal. It...ture.
    Nov 21 20:52:11 computer2 neutron-linuxbridge-agent[2668]: Option "notification_driver" from group "DEFAULT" is deprecated. Use...ons".
    Nov 21 20:52:11 computer2 sudo[2737]: neutron : TTY=unknown ; PWD=/ ; USER=root ; COMMAND=/bin/neutron-rootwrap-daemon /etc/...ap.conf
    Nov 21 20:52:13 computer2 neutron-linuxbridge-agent[2668]: /usr/lib/python2.7/site-packages/pkg_resources/__init__.py:187: RuntimeWa...
    Nov 21 20:52:13 computer2 neutron-linuxbridge-agent[2668]: stacklevel=1,
    Hint: Some lines were ellipsized, use -l to show in full.
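The full `systemctl status` output is long; for a quick pass/fail check, `systemctl is-active --quiet` returns a zero exit status only when a unit is active. The sketch below wraps that in a loop. `check_services` and the `SYSTEMCTL` variable are hypothetical names; the variable exists only so the command can be swapped out when trying the function away from the node.

```shell
#!/usr/bin/env bash
# Sketch: report OK/FAIL per service using "systemctl is-active --quiet".
# SYSTEMCTL is an assumption that lets the command be replaced for testing.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

check_services() {
    local svc failed=0
    for svc in "$@"; do
        if "$SYSTEMCTL" is-active --quiet "$svc"; then
            echo "OK    $svc"
        else
            echo "FAIL  $svc"
            failed=1
        fi
    done
    # Non-zero exit status if any service was not active.
    return "$failed"
}

# On the compute node:
#   check_services libvirtd openstack-nova-compute neutron-linuxbridge-agent
```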

7. On the controller node, check that the new node was added successfully

    [root@controller ~]# source admin-openrc
    [root@controller ~]# nova service-list
    +----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
    | Id | Binary           | Host       | Zone     | Status  | State | Updated_at                 | Disabled Reason |
    +----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
    | 1  | nova-scheduler   | controller | internal | enabled | up    | 2020-11-21T12:54:34.000000 | -               |
    | 2  | nova-conductor   | controller | internal | enabled | up    | 2020-11-21T12:54:34.000000 | -               |
    | 3  | nova-consoleauth | controller | internal | enabled | up    | 2020-11-21T12:54:34.000000 | -               |
    | 6  | nova-compute     | computer1  | nova     | enabled | up    | 2020-11-21T12:54:36.000000 | -               |
    | 7  | nova-compute     | computer2  | nova     | enabled | up    | 2020-11-21T12:54:37.000000 | -               |
    +----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

    [root@controller ~]# neutron agent-list
    +-----------------------+--------------------+------------+-------------------+-------+----------------+-----------------------+
    | id                    | agent_type         | host       | availability_zone | alive | admin_state_up | binary                |
    +-----------------------+--------------------+------------+-------------------+-------+----------------+-----------------------+
    | 097037d9-fb3d-4576    | Linux bridge agent | controller |                   | :-)   | True           | neutron-linuxbridge-  |
    | -a7ac-9908c16d9212    |                    |            |                   |       |                | agent                 |
    | 888b4a72-3946-475e-   | Linux bridge agent | computer1  |                   | :-)   | True           | neutron-linuxbridge-  |
    | 9b40-fbe1d873b98b     |                    |            |                   |       |                | agent                 |
    | 974395f9-a42f-4770-98 | Linux bridge agent | computer2  |                   | :-)   | True           | neutron-linuxbridge-  |
    | d2-f3f14cd96c1c       |                    |            |                   |       |                | agent                 |
    | ad827fd0-6163-49f5    | DHCP agent         | controller | nova              | :-)   | True           | neutron-dhcp-agent    |
    | -9d7c-32c43ab02842    |                    |            |                   |       |                |                       |
    | bbd32153-b3a0-4f34    | Metadata agent     | controller |                   | :-)   | True           | neutron-metadata-     |
    | -bb4e-eb392aac4921    |                    |            |                   |       |                | agent                 |
    +-----------------------+--------------------+------------+-------------------+-------+----------------+-----------------------+
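When this check is scripted, the State column for a given host can be pulled out of the `nova service-list` table with awk. The sketch below assumes the column layout shown above (Binary, Host, Zone, Status, State in that order); `service_state` is a hypothetical helper name, and the demo feeds it two rows copied from that output instead of calling nova.

```shell
#!/usr/bin/env bash
# Sketch: service_state HOST BINARY reads a "nova service-list" table on stdin
# and prints the State column ("up" or "down") of the matching row.
service_state() {
    awk -F'|' -v host="$1" -v binary="$2" '
        NF > 7 {
            # Trim the padding around every cell; border lines have no "|" and are skipped.
            for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
            if ($3 == binary && $4 == host) { print $7; exit }
        }
    '
}

# Demo with rows copied from the output above.
sample_table='| 6  | nova-compute     | computer1  | nova     | enabled | up    | 2020-11-21T12:54:36.000000 | -               |
| 7  | nova-compute     | computer2  | nova     | enabled | up    | 2020-11-21T12:54:37.000000 | -               |'

printf '%s\n' "$sample_table" | service_state computer2 nova-compute

# On the controller, after "source admin-openrc":
#   nova service-list | service_state computer2 nova-compute
```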

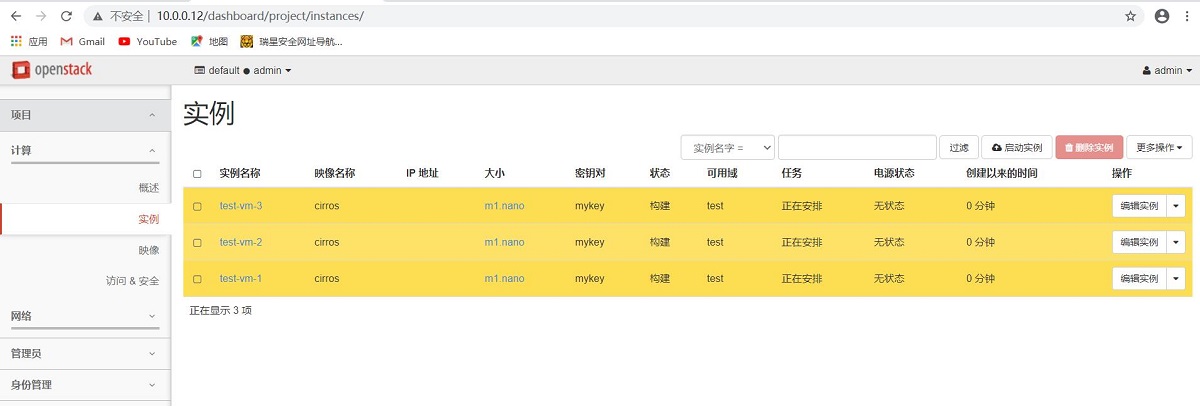

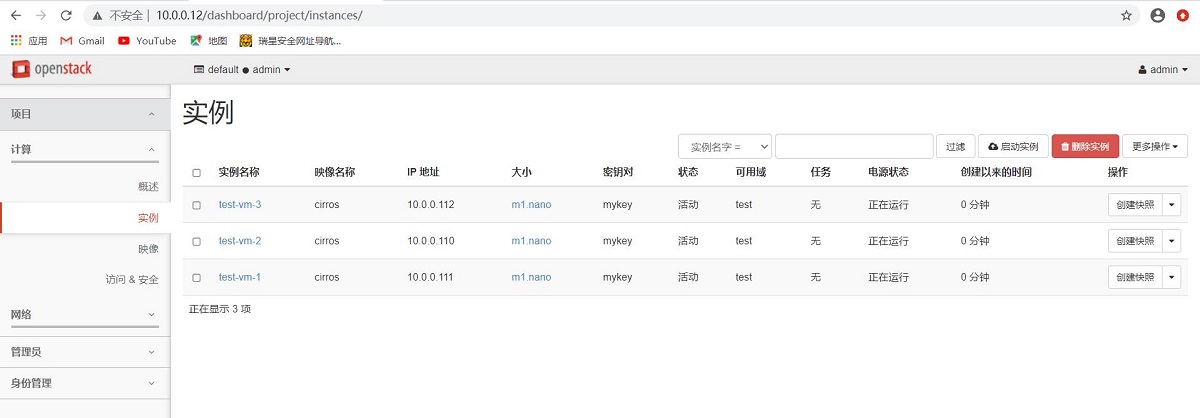

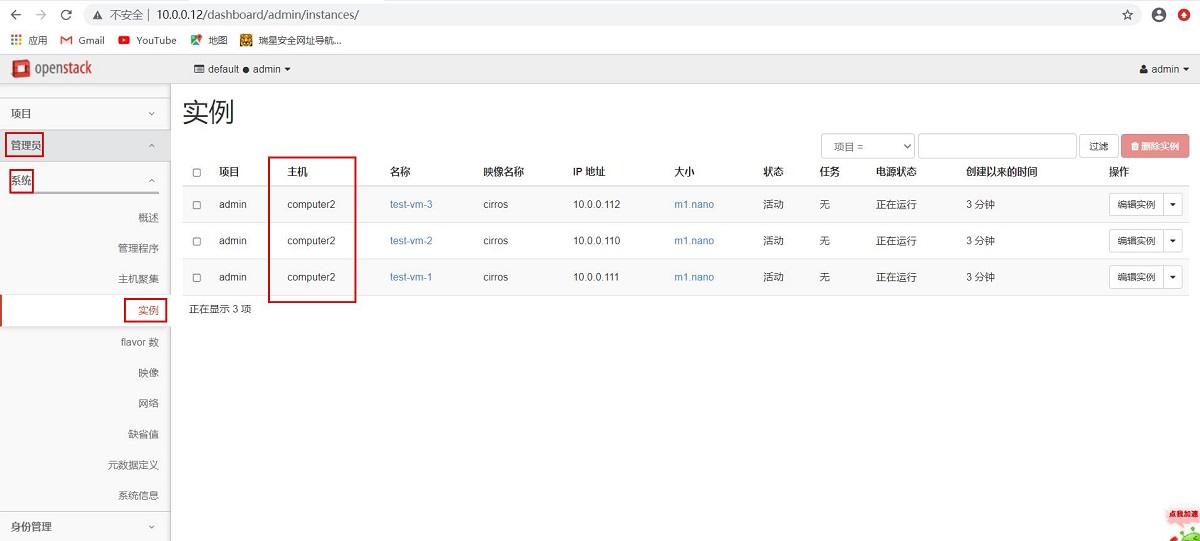

8. Create virtual machines to check that the new compute node is usable

The nova-scheduler service selects a node on which to create each instance.

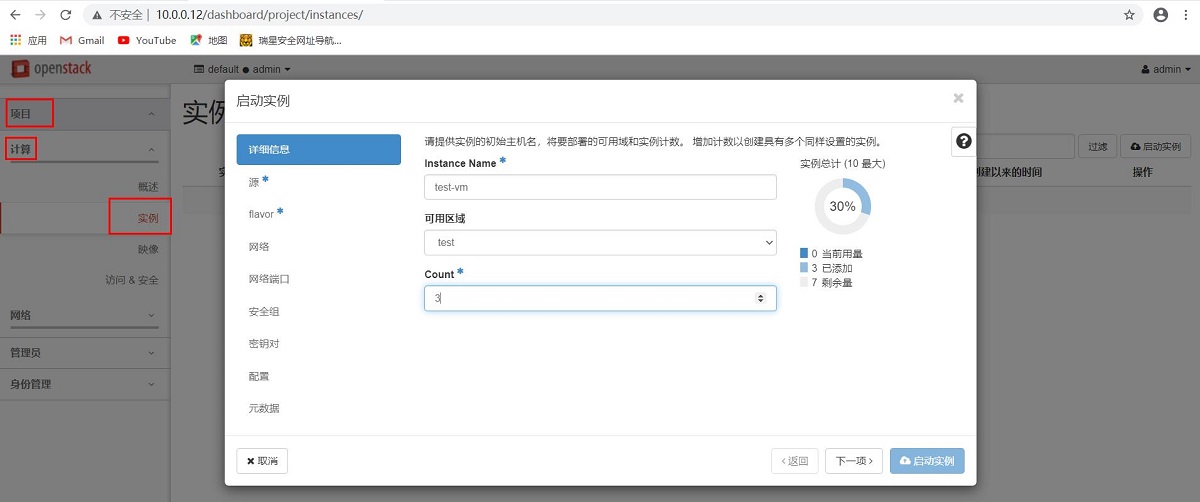

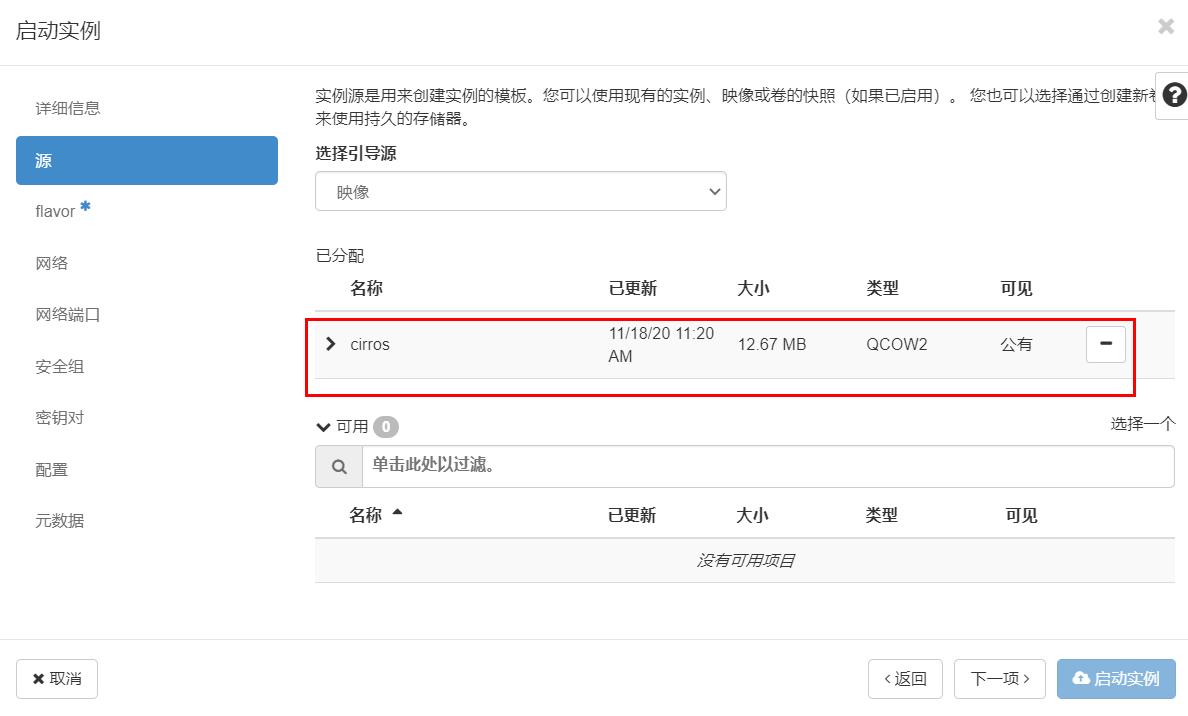

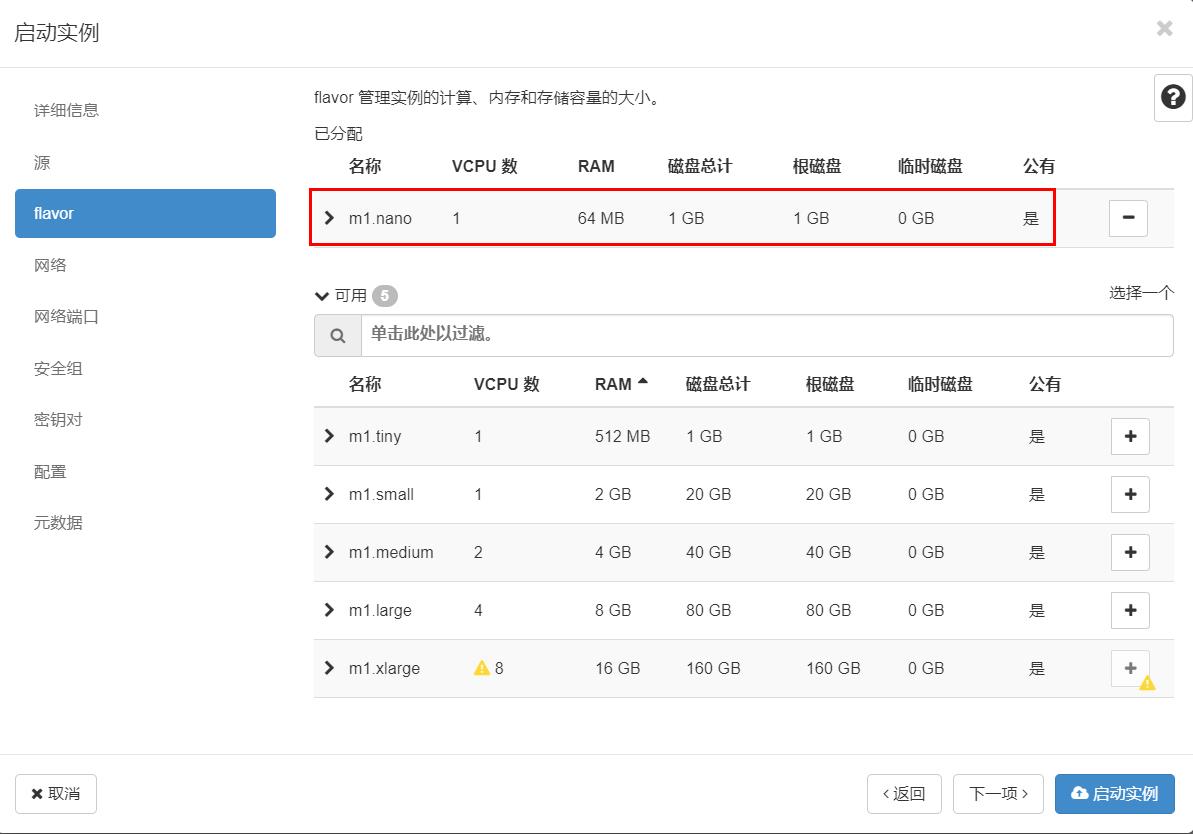

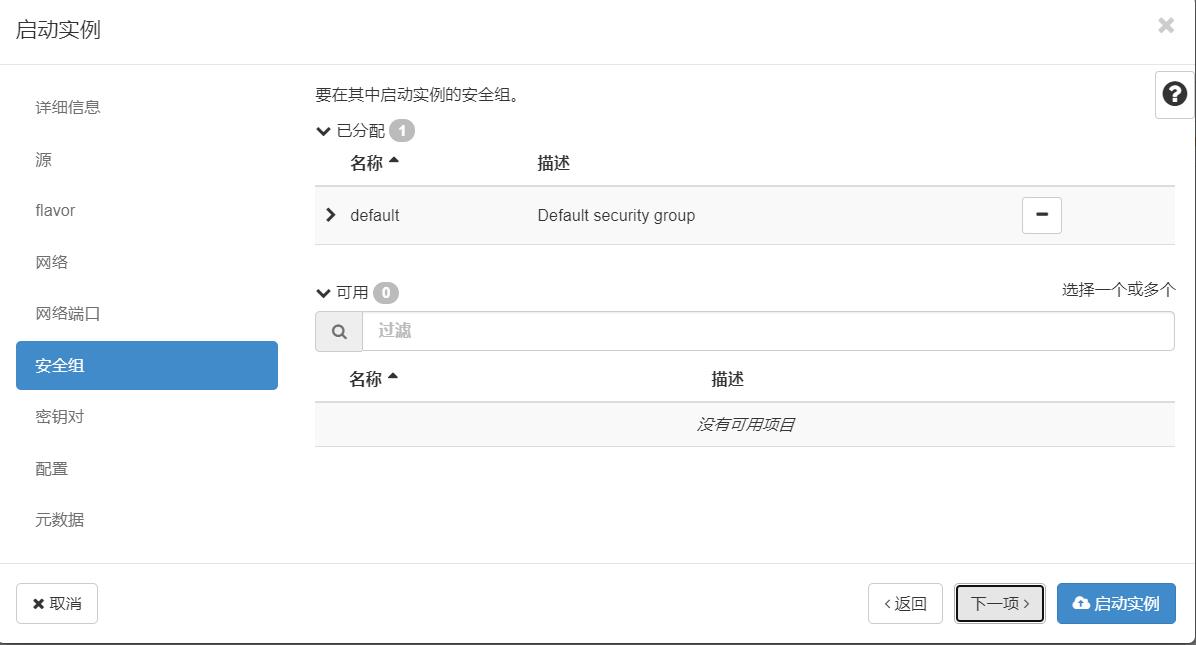

Create instances from the web interface

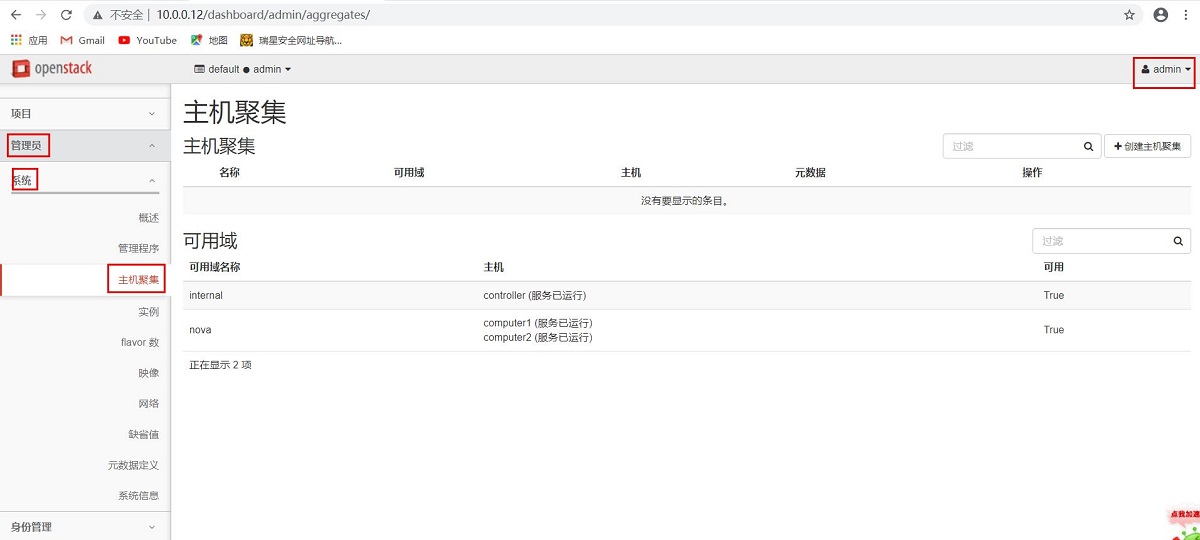

Admin -> System -> Host Aggregates

Project -> Compute -> Instances

Admin -> System -> Instances

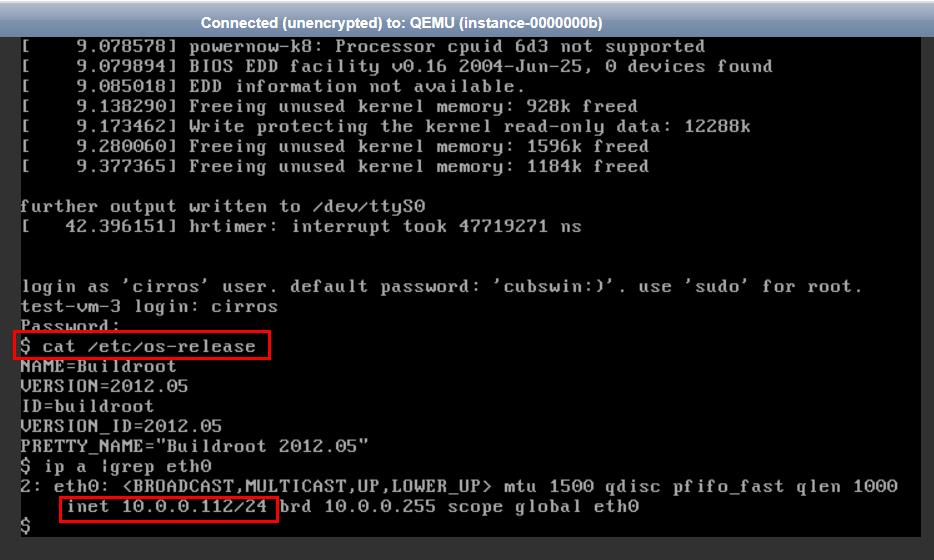

    [root@controller ~]# nova list
    +--------------------------------------+-----------+--------+------------+-------------+---------------------+
    | ID                                   | Name      | Status | Task State | Power State | Networks            |
    +--------------------------------------+-----------+--------+------------+-------------+---------------------+
    | 1da7aa0f-a032-4f18-8b0b-71b26ea3da0b | test-vm-1 | ACTIVE | -          | Running     | test-net=10.0.0.111 |
    | 09443c1e-2b54-4807-b1a1-00d81cc0cf04 | test-vm-2 | ACTIVE | -          | Running     | test-net=10.0.0.110 |
    | 79270573-c155-4021-b063-bd78d71a8ab2 | test-vm-3 | ACTIVE | -          | Running     | test-net=10.0.0.112 |
    +--------------------------------------+-----------+--------+------------+-------------+---------------------+

View the virtual machine instances on the computer2 compute node

    [root@computer2 opt]# yum install libvirt -y

    [root@computer2 opt]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     1     instance-0000000a              running
     2     instance-00000009              running
     3     instance-0000000b              running
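To compare the node against what `nova list` reports, the running domains in the `virsh list` output can be counted. A minimal sketch, assuming the three-column layout shown above; `count_running` is a hypothetical helper, and the demo uses the listing from this walkthrough as input.

```shell
#!/usr/bin/env bash
# Sketch: count the domains that "virsh list" reports as running.
count_running() {
    # Data rows start with a numeric Id; the header and separator lines do not.
    awk '$1 ~ /^[0-9]+$/ && $3 == "running" { n++ } END { print n + 0 }'
}

# Demo with the listing shown above.
sample_list=' Id    Name                           State
----------------------------------------------------
 1     instance-0000000a              running
 2     instance-00000009              running
 3     instance-0000000b              running'

printf '%s\n' "$sample_list" | count_running

# On the compute node:
#   virsh list | count_running
```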

9. Log in to an instance (Instances -> Console)


Original article: https://www.cnblogs.com/jiawei2527/p/14013507.html