标签:des style blog http io color os java sp

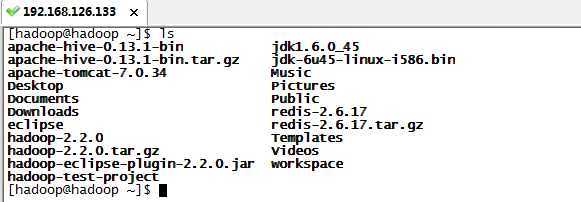

hadoop2.2.0

hive0.13.1

(事先已经安装好hadoop、MySQL以及在MySQL中建好了hive专用账号,数据创建不创建都可以)

1.下载解压

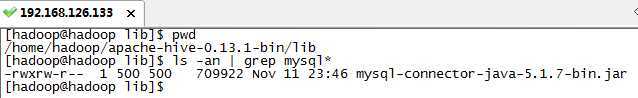

2.把MySQL驱动加入hive的lib包中

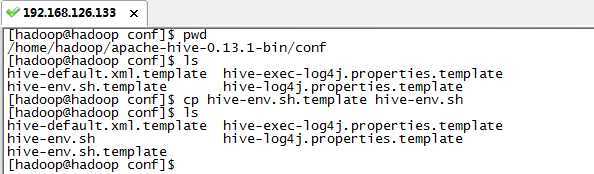

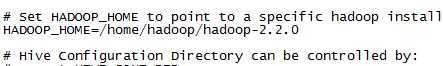

3.从模板文件中复制一份hive-env.sh出来,然后修改。设置hadoop目录

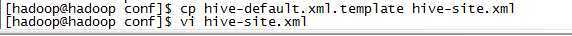

4.从模板文件复制一份hive-site.xml出来,然后修改

1 <property> 2 <name>javax.jdo.option.ConnectionURL</name> 3 <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value> 4 <description>JDBC connect string for a JDBC metastore</description> 5 </property> 6 7 8 9 <property> 10 <name>javax.jdo.option.ConnectionDriverName</name> 11 <value>com.mysql.jdbc.Driver</value> 12 <description>Driver class name for a JDBC metastore</description> 13 </property> 14 15 16 <property> 17 <name>javax.jdo.option.ConnectionUserName</name> 18 <value>hive</value> 19 <description>username to use against metastore database</description> 20 </property> 21 22 23 <property> 24 <name>javax.jdo.option.ConnectionPassword</name> 25 <value>hadoop</value> 26 <description>password to use against metastore database</description> 27 </property>

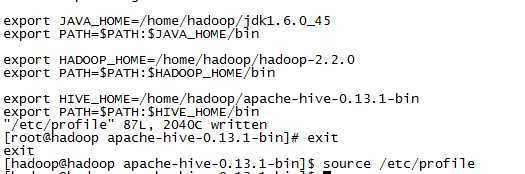

5.设置hive环境变量

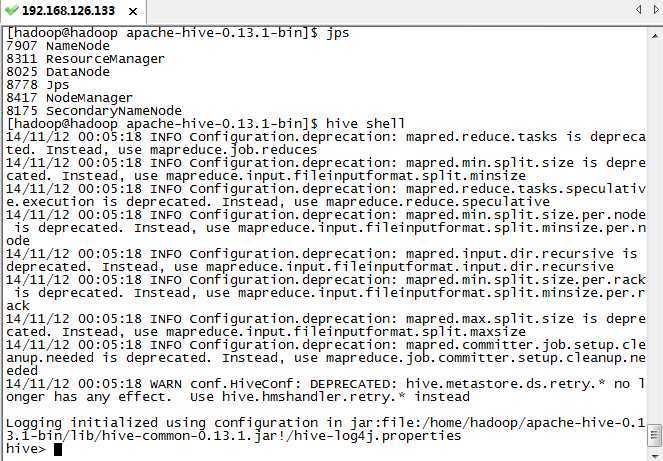

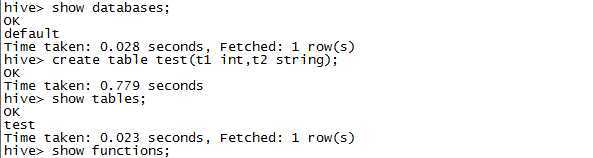

6.启动测试(hadoop要先启动)

hive是不是不用分发,单节点作为客户端就可以了?

我现在很疑问它完全依赖hadoop,那它怎么操作hadoop的HDFS和MR的?

它们之间的连接点在哪里?

标签:des style blog http io color os java sp

原文地址:http://www.cnblogs.com/admln/p/hive-0-13-1-hadoop2-2-0.html