
TensorFlow version: 1.15.0

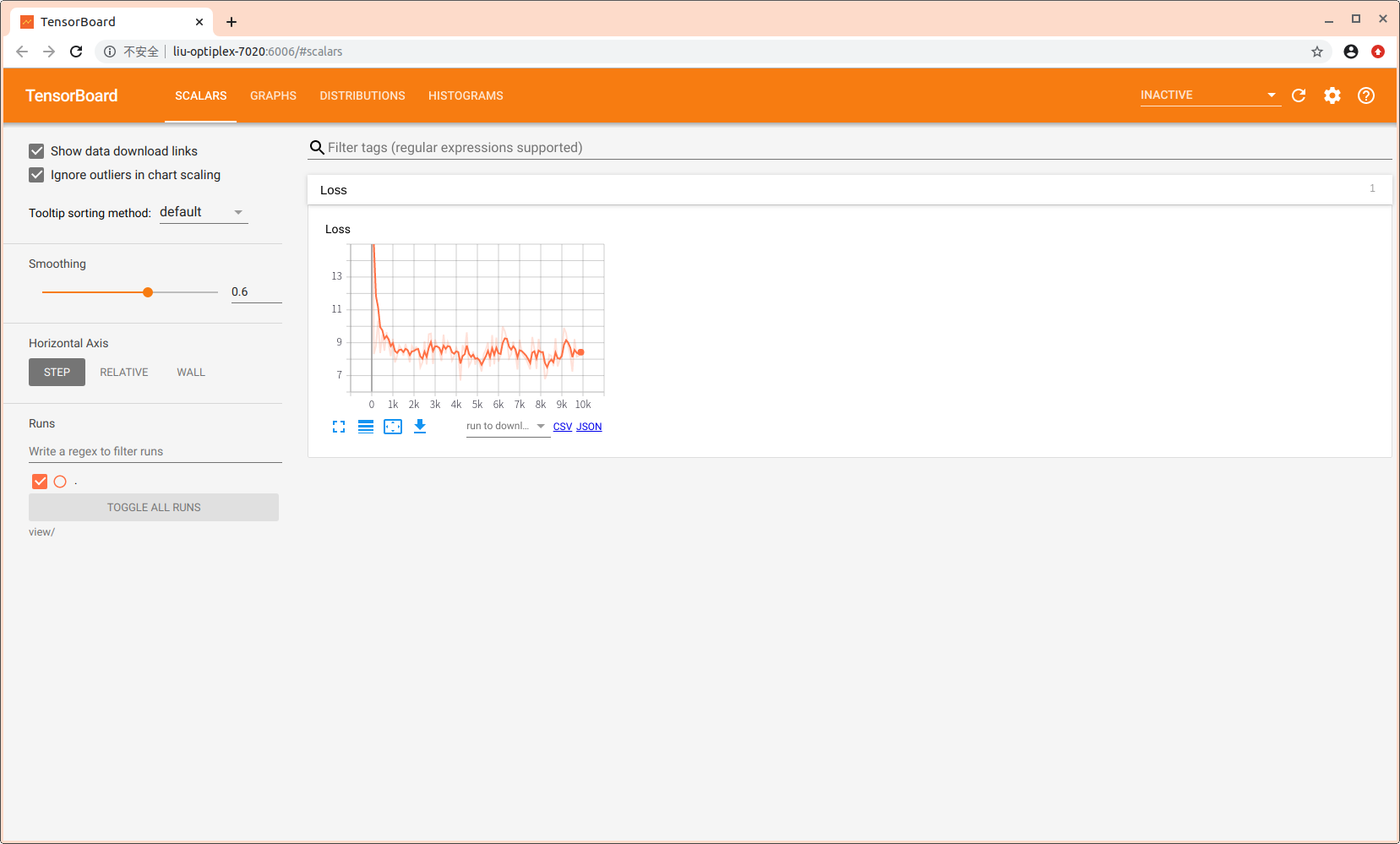

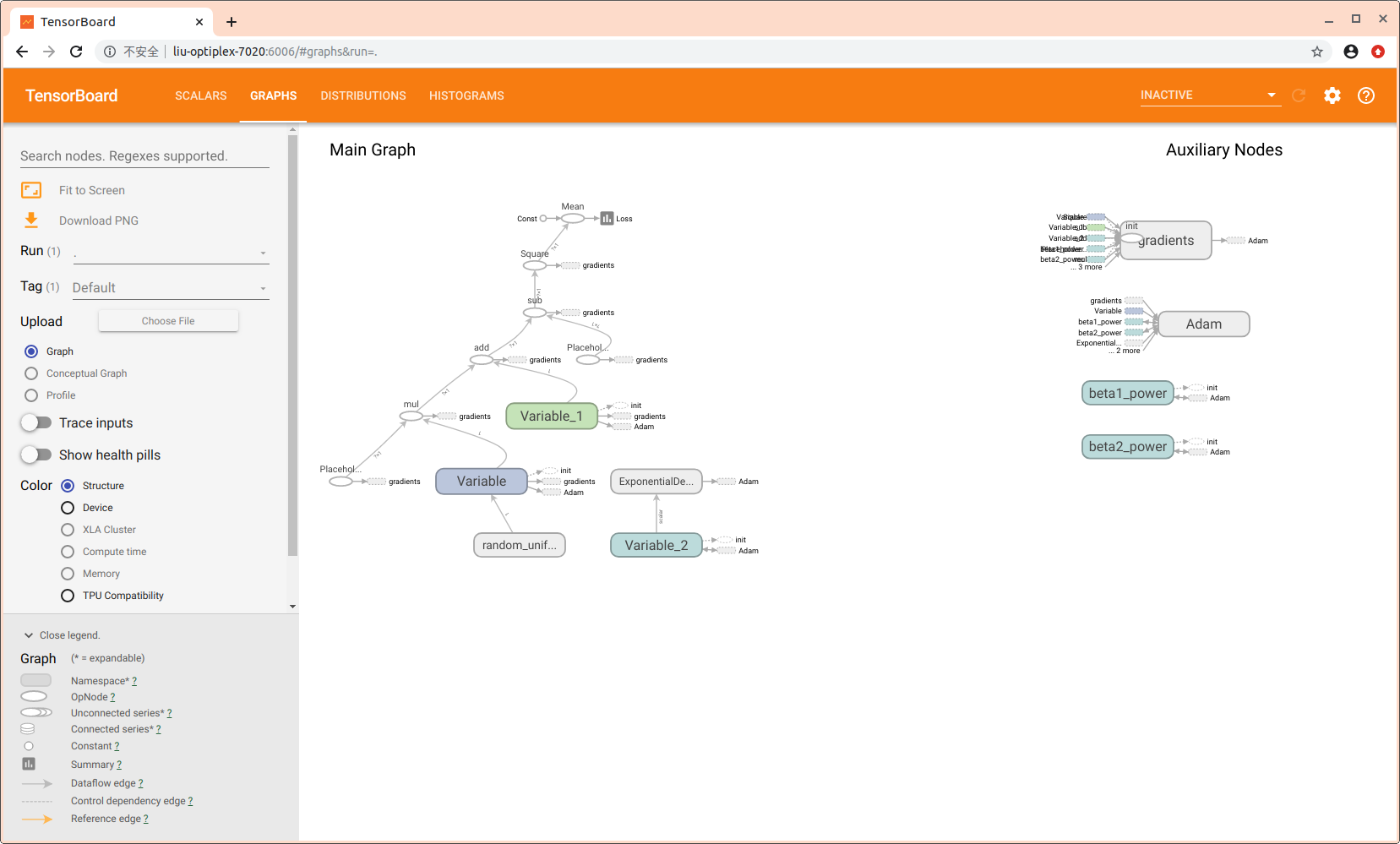

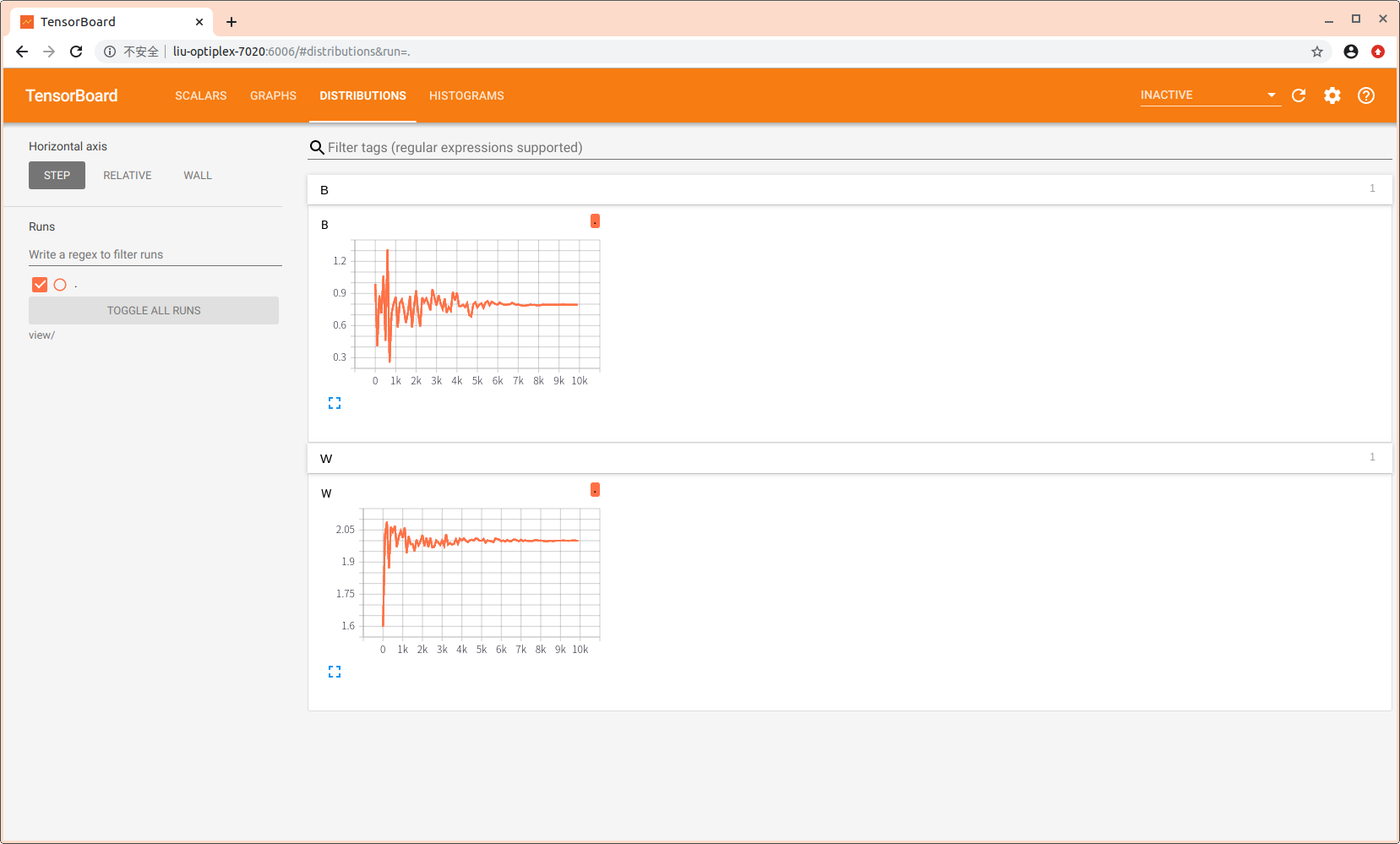

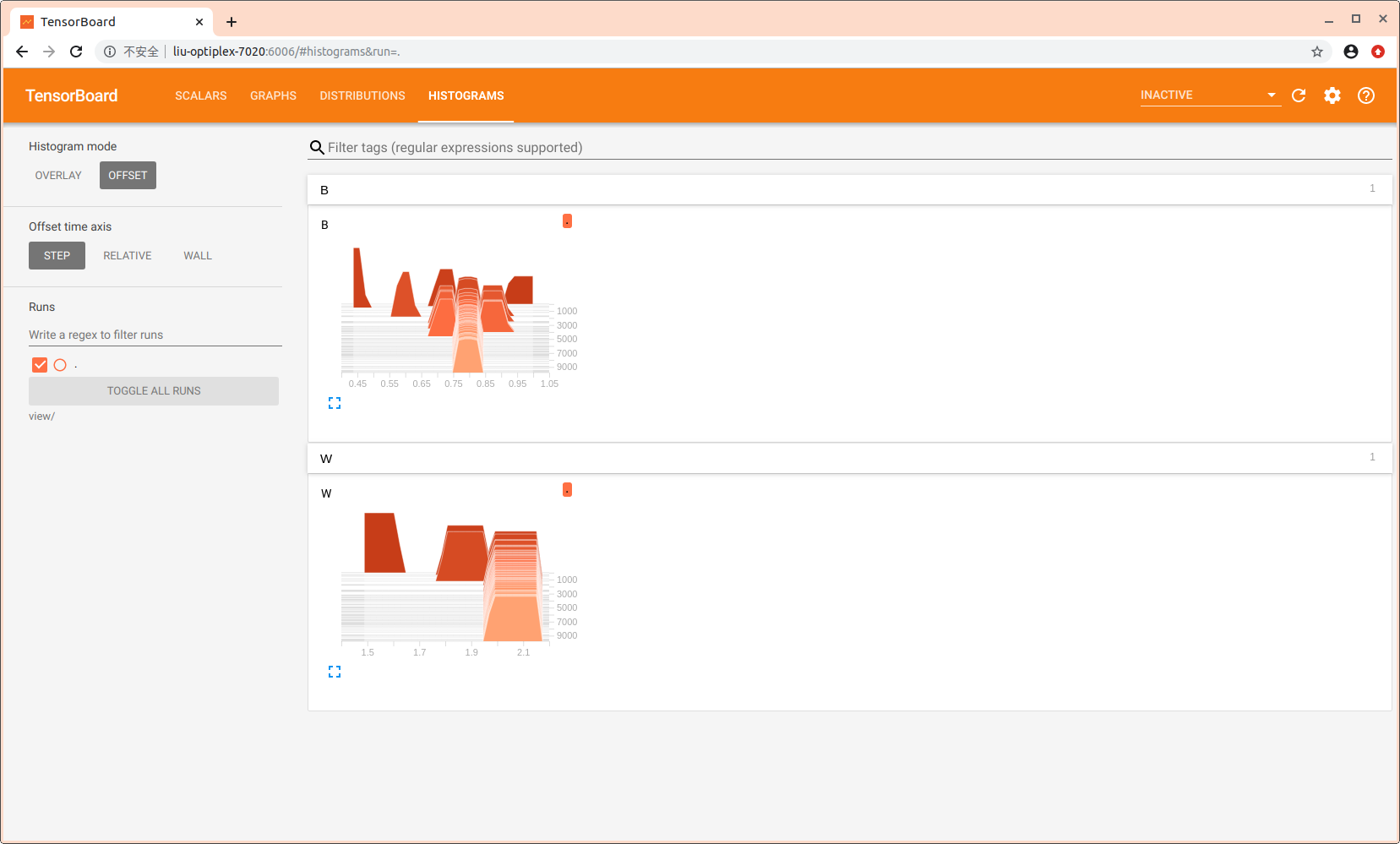

A simple demonstration of how to use tf.summary.scalar, tf.summary.histogram, tf.summary.merge_all, tf.summary.merge, tf.summary.FileWriter, and writer.add_summary.

    import tensorflow as tf
    import numpy as np
    import random


    def generate_x_y(batchsize):
        # Sample x uniformly from [-20, 20] and build noisy targets
        # y = 2.0 * x + 0.8 + noise, with noise drawn from [-5, 5].
        x__data = np.random.uniform(-20, 20, batchsize).astype(np.float32)
        x__data = x__data.reshape(batchsize, 1)
        y__data = []
        for i in range(batchsize):
            y__data.append(x__data[i][0] * 2.0 + 0.8 + random.uniform(-5, 5))
        y__data = np.array(y__data).reshape(batchsize, 1)
        return x__data, y__data


    # Placeholders for an input batch and its targets.
    X_ = tf.placeholder(tf.float32, shape=[None, 1])
    Y_ = tf.placeholder(tf.float32, shape=[None, 1])

    # Trainable parameters of the linear model.
    W = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
    B = tf.Variable(tf.zeros([1]), dtype=tf.float32)


    def Build(X_Input):
        Y_Output = X_Input * W + B
        return Y_Output


    Y = Build(X_)
    Loss = tf.reduce_mean(tf.square((Y - Y_)))

    # Register the values to record: one scalar and two histograms.
    tf.summary.scalar("Loss", Loss)
    tf.summary.histogram("W", W)
    tf.summary.histogram("B", B)

    # Merge every registered summary into a single op.
    merge_summary = tf.summary.merge_all()
    # Alternative: merge only the summaries collected under the name 'Loss'.
    # merge_summary = tf.summary.merge([tf.get_collection(tf.GraphKeys.SUMMARIES, 'Loss')])

    global_step = tf.Variable(0, trainable=False)
    learning_rate = tf.train.exponential_decay(0.99, global_step, 100, 0.9, staircase=True)
    train_step = tf.train.AdamOptimizer(learning_rate, beta1=0.5).minimize(Loss, global_step=global_step)

    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        # Write the graph and the summaries to the "view" directory.
        writer = tf.summary.FileWriter("view", sess.graph)
        for i in range(10000):
            x_data, y_data = generate_x_y(120)
            sess.run(train_step, feed_dict={X_: x_data, Y_: y_data})
            if i % 100 == 0:
                print("W:", sess.run(W))
                print("B:", sess.run(B))
                print("Step %i" % i)
                summary = sess.run(merge_summary, feed_dict={X_: x_data, Y_: y_data})
                writer.add_summary(summary=summary, global_step=i)
        # Close the writer so that buffered events are flushed to disk.
        writer.close()
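The training code passes its learning rate through tf.train.exponential_decay(0.99, global_step, 100, 0.9, staircase=True). As a rough illustration of what that schedule produces, here is a minimal pure-Python sketch of the documented formula, learning_rate * decay_rate ^ (global_step / decay_steps), where staircase=True switches to integer division. The helper name exponential_decay below is a stand-in written for this example, not the TensorFlow function itself.

```python
def exponential_decay(initial_lr, global_step, decay_steps, decay_rate, staircase=False):
    """Pure-Python stand-in for the exponential decay formula (illustrative only)."""
    if staircase:
        # Integer division: the rate stays constant within each block of decay_steps.
        exponent = global_step // decay_steps
    else:
        exponent = global_step / decay_steps
    return initial_lr * decay_rate ** exponent


# Same arguments as in the training script: 0.99, decay every 100 steps by 0.9.
for step in (0, 99, 100, 1000, 9999):
    print(step, exponential_decay(0.99, step, 100, 0.9, staircase=True))
```

With these settings the rate drops by a factor of 0.9 every 100 steps, so by the end of the 10000 iterations it has shrunk to the order of 1e-5.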

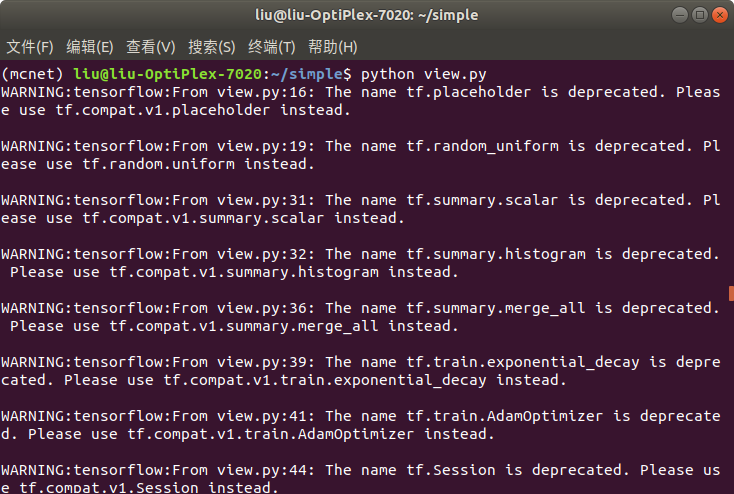

Command to run the Python script: python view.py

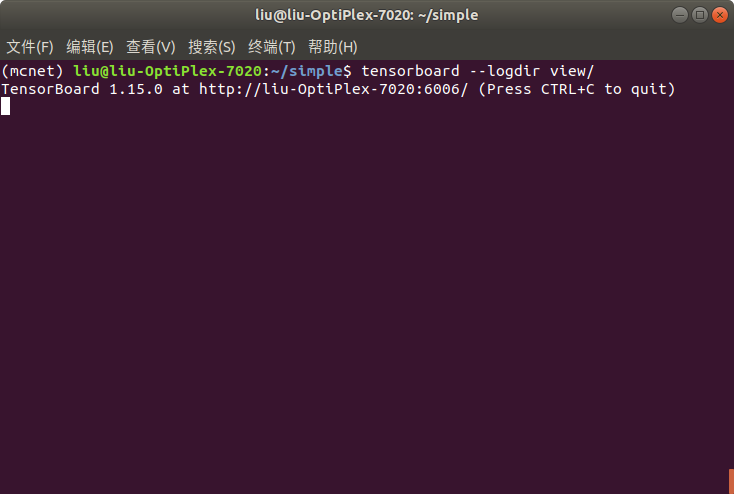
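The script prints W and B every 100 steps. Because generate_x_y builds its targets as y = 2.0 * x + 0.8 plus zero-mean noise, the printed values should settle somewhere near 2.0 and 0.8. The sketch below is one way to sanity-check that expectation without TensorFlow: it draws data from the same distribution and solves the least-squares line in closed form. The helpers sample_points and fit_line are hypothetical names introduced for this example, and the sample size and seed are arbitrary choices.

```python
import random


def sample_points(n, rng):
    # Same distribution as the script: x in [-20, 20], noise in [-5, 5].
    xs = [rng.uniform(-20, 20) for _ in range(n)]
    ys = [x * 2.0 + 0.8 + rng.uniform(-5, 5) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    # Closed-form ordinary least squares for y = w * x + b.
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


rng = random.Random(0)  # fixed seed so the run is repeatable
xs, ys = sample_points(100000, rng)
w, b = fit_line(xs, ys)
print("W: %.3f  B: %.3f" % (w, b))
```

If the values printed by the training script stay far from these estimates, the learning-rate schedule or the optimizer settings are a reasonable first place to look.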

Command to launch the visualization: tensorboard --logdir view/

Open the link that TensorBoard prints to view the visualization results.


Original article: https://www.cnblogs.com/iuyy/p/14054008.html