标签:submit executor 存在 his adp exec count hlist memory

线上有一个消息消费服务xxx-consumer,使用spring-kafka框架,主线程批量从消费队列(kafka)拉取交易系统生产的消息,然后提交到子线程池中挨个处理消费。

public abstract class AbstractMessageDispatchListener implements

BatchAcknowledgingMessageListener<String, Msg>, ApplicationListener<ApplicationReadyEvent> {

?

private ThreadPoolExecutor executor;

?

public abstract MessageWorker chooseWorker(ConsumerRecord<String, Msg> data);

?

@Override

public void onMessage(List<ConsumerRecord<String, Msg>> datas, Acknowledgment acknowledgment) {

List<Future<?>> futureList = new ArrayList<>(datas.size());

try {

CountDownLatch countDownLatch = new CountDownLatch(datas.size());

for (ConsumerRecord<String, Msg> data : datas) {

Future<?> future = executor.submit(new Worker(data, countDownLatch));

futureList.add(future);

}

?

countDownLatch.await(20000L - 2000, TimeUnit.MILLISECONDS);

long countDownLatchCount = countDownLatch.getCount();

if (countDownLatchCount > 0) {

return;

}

acknowledgment.acknowledge();

} catch (Exception e) {

logger.error("onMessage error ", e);

} finally {

for (Future<?> future : futureList) {

if (future.isDone() || future.isCancelled()) {

continue;

}

future.cancel(true);

}

}

}

?

@Override

public void onApplicationEvent(ApplicationReadyEvent event) {

ThreadFactoryBuilder builder = new ThreadFactoryBuilder();

builder.setNameFormat(this.getClass().getSimpleName() + "-pool-%d");

builder.setDaemon(false);

executor = new ThreadPoolExecutor(12,

12 * 2,

60L,

TimeUnit.SECONDS,

new ArrayBlockingQueue<>(100),

builder.build());

}

?

private class Worker implements Runnable {

private ConsumerRecord<String, Msg> data;

private CountDownLatch countDownLatch;

?

Worker(ConsumerRecord<String, Msg> data, CountDownLatch countDownLatch) {

this.data = data;

this.countDownLatch = countDownLatch;

}

?

@Override

public void run() {

try {

MessageWorker worker = chooseWorker(data);

worker.work(data.value());

} finally {

countDownLatch.countDown();

}

}

}

}

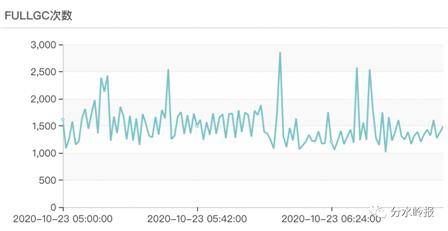

有一天早上xxx-consumer服务出现大量报警,人工排查发现30w+的消息未处理,业务日志正常,gc日志有大量Full gc,初步判断因为Full gc导致消息处理慢,大量的消息积压。

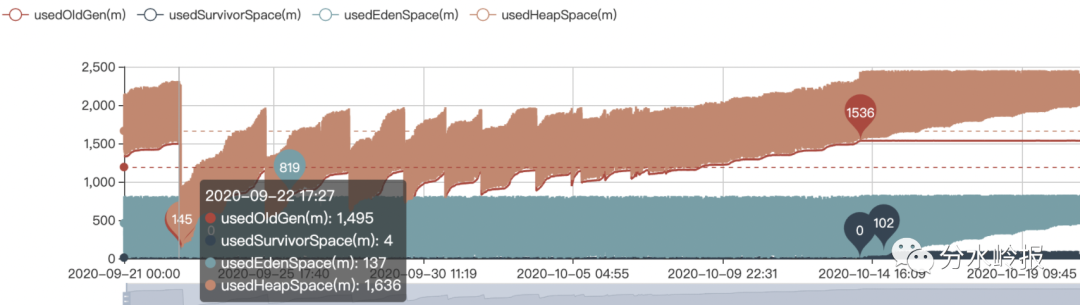

查看了近一个月的JVM内存信息,发现老年代内存无法被回收(9月22号的下降是因为服务有一次上线重启),初步判断发生了内存泄漏。

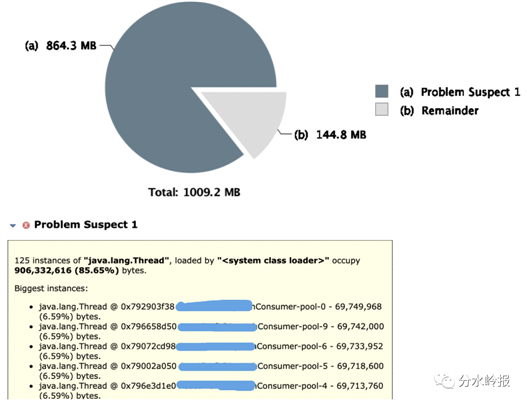

通过<jmap -dump:format=b,file=/home/work/app/xxx-consumer/logs/jmap_dump.hprof -F>命令导出内存快照,使用Memory Analyzer解析内存快照文件jmap_dump.hprof,发现有很明显的内存泄漏提示:

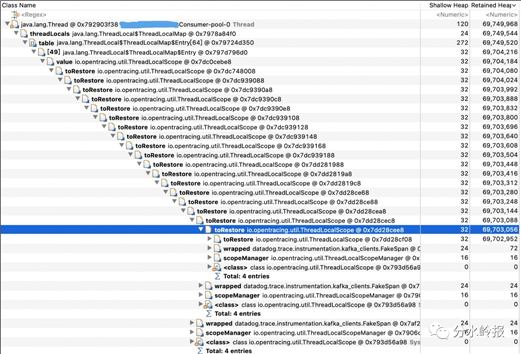

进一步查看线程细节,发现创建了大量的ThreadLocalScope对象且循环引用:

同时我们也看到了分布式追踪(dd-trace-java)jar包中的FakeSpan类,初步判断是dd-trace-java中自研扩展的kafka插件存在内存泄漏bug。

继续查看dd-trace-java中kafka插件的代码,其处理流程如下:

第一批消息

(SpringKafkaConsumerInstrumentation:L22)BatchAcknowledgingMessageListener.onMessage进入时,主线程会创建一个scope00=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=null)

(ExecutorInstrumentation:L21L47)消息被submit到线程池中处理时,子线程会创建一个scope10=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=null)

(SpringKafkaConsumerInstrumentation:L68)子线程处理消息时(ConsumerRecord.value),会创建一个scope11=ThreadLocalScope(Type_ConsumberRecord_Value,toRestore=scope10)

(ExecutorInstrumentation:L54)子线程处理完消息后,执行scope10.close(),而scopeManager.tlsScope.get()=scope11,命中ThreadLocalScope:L19,scope10和scope11均无法被GC

(SpringKafkaConsumerInstrumentation:L42)BatchAcknowledgingMessageListener.onMessage退出时,主线程会执行scope00.close(),scope00会被GC

第二批消息

(SpringKafkaConsumerInstrumentation:L22)BatchAcknowledgingMessageListener.onMessage进入时,主线程会创建一个scope01=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=null)

(ExecutorInstrumentation:L21L47)消息被submit到线程池中处理时,子线程会创建一个scope12=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=scope11)

(SpringKafkaConsumerInstrumentation:L68)子线程处理消息时(ConsumerRecord.value),会创建一个scope13=ThreadLocalScope(Type_ConsumberRecord_Value,toRestore=scope12)

(ExecutorInstrumentation:L54)子线程处理完消息后,执行scope12.close(),而scopeManager.tlsScope.get()=scope13,命中ThreadLocalScope:L19,scope12和scope13均无法被GC

(SpringKafkaConsumerInstrumentation:L42)BatchAcknowledgingMessageListener.onMessage退出时,主线程会执行scope01.close(),scope01会被GC

从上可以看到,主线程创建的ThreadLocalScope能被正确GC,而线程池中创建的ThreadLocalScope被循环引用,无法被正确GC,从而造成内存泄漏。

RecoredValueAdvice没有销毁自己创建的对象,而是寄希望于BatchMessageListenerAdvice去销毁。

但(SpringKafkaConsumerInstrumentation:L27)BatchAcknowledgingMessageListener.onMessage退出时,只会close主线程创建的ThreadLocalScope,不会close线程池中创建的ThreadLocalScope,导致子线程创建的ThreadLocalScope被循环引用,无法被正确GC,从而造成内存泄漏。

标签:submit executor 存在 his adp exec count hlist memory

原文地址:https://www.cnblogs.com/watershed/p/14414161.html