Tags: schema, div, nts, hadoop, gty, ace, view, http, save

1. Converting between pandas and Spark DataFrames

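```python
# `spark` is an existing SparkSession (e.g. the one the pyspark shell provides)

# pandas DataFrame -> Spark DataFrame
df_s = spark.createDataFrame(df_p)

# Spark DataFrame -> pandas DataFrame (collects all rows to the driver)
df_p = df_s.toPandas()
```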

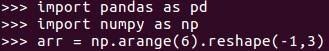

```python
import pandas as pd
import numpy as np

arr = np.arange(6).reshape(-1, 3)  # 2 rows x 3 columns: [[0, 1, 2], [3, 4, 5]]
```

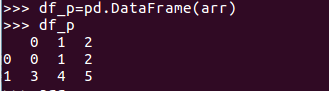

```python
df_p = pd.DataFrame(arr)
df_p
```

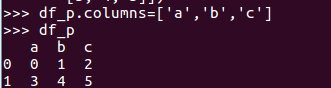

```python
df_p.columns = ['a', 'b', 'c']
df_p
```

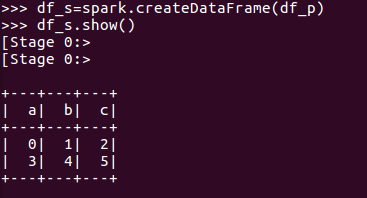

```python
df_s = spark.createDataFrame(df_p)
df_s.show()
```

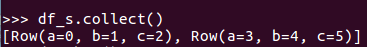

```python
df_s.collect()
```

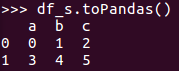

```python
df_s.toPandas()
```
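Conceptually, the two directions move between a column-oriented layout (a pandas DataFrame) and a list of `Row` records (what `df_s.collect()` returns). The plain-Python sketch below illustrates only that reshaping, with `collections.namedtuple` standing in for `pyspark.sql.Row`; the helper names `columns_to_rows` and `rows_to_columns` are hypothetical, and this is not how Spark performs the conversion internally (Spark can, for example, use Apache Arrow for it).

```python
from collections import namedtuple


def columns_to_rows(columns):
    """Turn a column-oriented dict (pandas-style) into a list of Row tuples."""
    Row = namedtuple("Row", list(columns))
    return [Row(*values) for values in zip(*columns.values())]


def rows_to_columns(rows):
    """Turn a list of namedtuple rows back into a dict of column lists."""
    fields = rows[0]._fields
    return {field: [getattr(row, field) for row in rows] for field in fields}


# Same data as df_p above: np.arange(6).reshape(-1, 3) with columns a, b, c
columns = {"a": [0, 3], "b": [1, 4], "c": [2, 5]}
rows = columns_to_rows(columns)
print(rows)  # [Row(a=0, b=1, c=2), Row(a=3, b=4, c=5)]
```

Round-tripping with `rows_to_columns(rows)` gives back the original column dict.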

2. Comparing DataFrames in Spark and pandas

http://www.lining0806.com/spark%E4%B8%8Epandas%E4%B8%ADdataframe%E5%AF%B9%E6%AF%94/

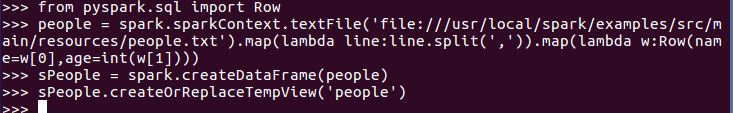

3.1 Inferring the RDD schema by reflection

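```python
from pyspark.sql import Row

# Read each line (e.g. "Michael, 29"), split it on the comma and build a Row;
# Spark infers the DataFrame schema from the Row fields by reflection
people = spark.sparkContext \
    .textFile('file:///usr/local/spark/examples/src/main/resources/people.txt') \
    .map(lambda line: line.split(',')) \
    .map(lambda w: Row(name=w[0], age=int(w[1])))
sPeople = spark.createDataFrame(people)

# Register the DataFrame as a temporary view so it can be queried with SQL
sPeople.createOrReplaceTempView('people')
```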

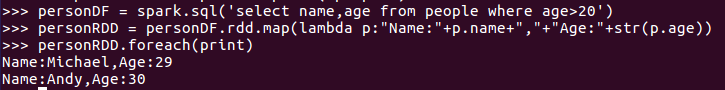

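```python
personDF = spark.sql('select name,age from people where age>20')

# Convert each result Row into a formatted string
personRDD = personDF.rdd.map(lambda p: "Name:" + p.name + "," + "Age:" + str(p.age))

# foreach runs on the executors; in local mode the output appears in the console
personRDD.foreach(print)
```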

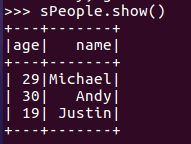

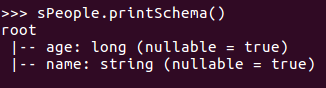
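To make the query-then-map step concrete, here is a plain-Python equivalent of what the SQL filter and the `rdd.map(...)` lambda do to each record. It assumes `people.txt` holds the three sample lines `Michael, 29`, `Andy, 30` and `Justin, 19`; the `Person` namedtuple is a hypothetical stand-in for the Row objects, not Spark code.

```python
from collections import namedtuple

# Hypothetical stand-in for the Row objects held in sPeople (illustration only)
Person = namedtuple("Person", ["name", "age"])
people_rows = [Person("Michael", 29), Person("Andy", 30), Person("Justin", 19)]

# Same filter as: select name,age from people where age>20
adults = [p for p in people_rows if p.age > 20]

# Same formatting as the personDF.rdd.map(...) lambda
formatted = ["Name:" + p.name + "," + "Age:" + str(p.age) for p in adults]
for line in formatted:
    print(line)
```

In Spark the printed order can differ, because `foreach` runs per partition; on a cluster the output lands in the executor logs rather than the driver console.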

```python
sPeople.show()
sPeople.printSchema()
```

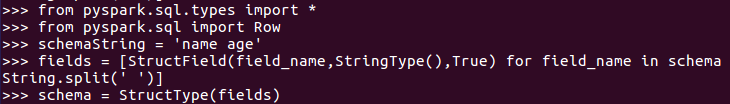
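The idea behind reflection-based inference is that the schema is read off the values themselves: each `Row` field's Python type is mapped to a Spark SQL type (PySpark maps Python `str` to `string` and `int` to `long`). The sketch below is a simplified plain-Python illustration of that idea, not Spark's implementation; it looks only at the first record, uses hypothetical helper names, and assumes the same three sample lines of `people.txt`. Field order in the real `printSchema()` output may differ between Spark versions (older releases sorted `Row` keyword fields alphabetically).

```python
# Plain-Python illustration (not Spark's implementation) of reflection-based
# schema inference: look at each field's Python type and map it to a type name.
TYPE_NAMES = {str: "string", int: "long", float: "double", bool: "boolean"}


def parse_person(line):
    """Split a line such as 'Michael, 29' into a record, like the map() calls in 3.1."""
    name, age = line.split(",")
    return {"name": name, "age": int(age)}  # int() ignores the leading space


def infer_schema(records):
    """Return (field, type name) pairs taken from the first record's values."""
    return [(field, TYPE_NAMES[type(value)]) for field, value in records[0].items()]


def print_schema(schema):
    """Print the schema in a printSchema()-like tree layout."""
    print("root")
    for field, type_name in schema:
        print(f" |-- {field}: {type_name} (nullable = true)")


# Assumed sample content of people.txt
sample_lines = ["Michael, 29", "Andy, 30", "Justin, 19"]
records = [parse_person(line) for line in sample_lines]
schema = infer_schema(records)
print_schema(schema)
```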

3.2 Defining the RDD schema programmatically

```python
from pyspark.sql.types import *
from pyspark.sql import Row

# Build one nullable string field per column name
schemaString = 'name age'
fields = [StructField(field_name, StringType(), True) for field_name in schemaString.split(' ')]
schema = StructType(fields)
```

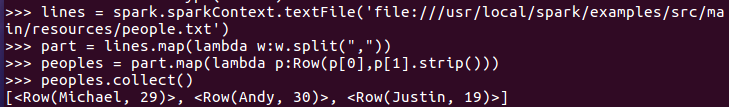

```python
lines = spark.sparkContext.textFile('file:///usr/local/spark/examples/src/main/resources/people.txt')
part = lines.map(lambda w: w.split(","))

# Keep both values as strings so they match the StringType schema
peoples = part.map(lambda p: Row(p[0], p[1].strip()))
peoples.collect()
```

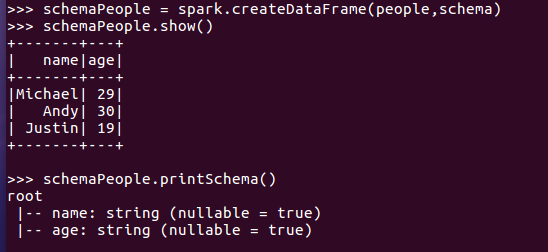

```python
# Apply the explicit schema to the string-valued rows built above
schemaPeople = spark.createDataFrame(peoples, schema)
schemaPeople.show()
schemaPeople.printSchema()
```
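With an explicit schema, every row has to supply values of the declared types, which is why the rows above keep the age as a stripped string. The plain-Python sketch below is a rough analogue of that check, not PySpark's implementation: the `Field` tuple is a hypothetical stand-in for `StructField`, `apply_schema` is a hypothetical helper, and the sample lines are assumed to match `people.txt`.

```python
from collections import namedtuple

# Hypothetical stand-in for StructField: (name, expected Python type, nullable)
Field = namedtuple("Field", ["name", "python_type", "nullable"])

schema_string = "name age"
schema = [Field(name, str, True) for name in schema_string.split(" ")]


def apply_schema(rows, schema):
    """Check positional rows against the schema and return them as dicts."""
    result = []
    for row in rows:
        if len(row) != len(schema):
            raise ValueError(f"row {row!r} has {len(row)} values, schema has {len(schema)}")
        for value, field in zip(row, schema):
            if value is None and field.nullable:
                continue  # nulls are allowed in nullable fields
            if not isinstance(value, field.python_type):
                raise TypeError(
                    f"field {field.name}: expected {field.python_type.__name__}, "
                    f"got {type(value).__name__}"
                )
        result.append({field.name: value for field, value in zip(schema, row)})
    return result


# Same parsing as the map() calls above: split on "," and strip the age
sample_lines = ["Michael, 29", "Andy, 30", "Justin, 19"]
rows = [(p[0], p[1].strip()) for p in (line.split(",") for line in sample_lines)]
table = apply_schema(rows, schema)
```

Passing a row such as `("Andy", 30)` raises `TypeError` here, since `30` is an `int` and the schema declares every field as a string.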

4. Saving a DataFrame to files

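```python
# General form: write the DataFrame as JSON into the directory `dir`.
# This fails if `dir` already exists; df.write.mode('overwrite').json(dir) replaces it.
df.write.json(dir)
```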

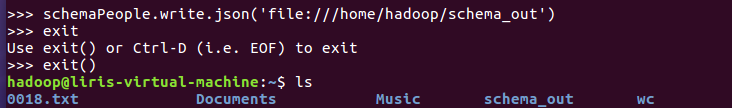

```python
schemaPeople.write.json('file:///home/hadoop/schema_out')
```
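The output path is a directory, not a single file: Spark writes one or more `part-*` files (plus a `_SUCCESS` marker) into it, and each part file is in JSON Lines format, one JSON object per row. The stdlib sketch below mimics that layout so the format is easy to inspect. It is an illustration only; the single `part-00000.json` file name is a simplification (Spark's real part-file names are longer), and the helper names are hypothetical.

```python
import json
import os
import tempfile


def write_json_lines(records, out_dir):
    """Write records as JSON Lines (one compact object per line) into out_dir."""
    os.makedirs(out_dir, exist_ok=False)  # fail if it exists, like Spark's default save mode
    path = os.path.join(out_dir, "part-00000.json")  # simplified part-file name
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, separators=(",", ":")) + "\n")
    return path


def read_json_lines(out_dir):
    """Read every part-* file in out_dir back into a list of dicts."""
    records = []
    for name in sorted(os.listdir(out_dir)):
        if name.startswith("part-"):
            with open(os.path.join(out_dir, name), encoding="utf-8") as f:
                records.extend(json.loads(line) for line in f if line.strip())
    return records


# schemaPeople has only string columns, so age is written as a JSON string
people = [{"name": "Michael", "age": "29"}, {"name": "Andy", "age": "30"}]
out_dir = os.path.join(tempfile.mkdtemp(), "schema_out")
path = write_json_lines(people, out_dir)
```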


Original article: https://www.cnblogs.com/wsqjl/p/14767867.html