Tags: ref hit dict loading inf Shanghai tokenize ons open

Source of the code:

https://www.mindspore.cn/tutorial/training/zh-CN/r1.2/use/load_dataset_text.html

=======================================================

Full code:

    import os

    import mindspore.dataset as ds
    import mindspore.dataset.text as text

    # Create a small sample file; mode='w' overwrites any previous copy,
    # so no separate "rm -f" is needed.
    os.makedirs('./datasets', exist_ok=True)
    DATA_FILE = './datasets/tokenizer.txt'
    with open(DATA_FILE, mode='w', encoding='utf-8') as file_handle:
        # Lines: "Welcome to Beijing", "北京欢迎您!" (Beijing welcomes you!),
        # "我喜欢English!" (I like English!)
        file_handle.write('Welcome to Beijing \n北京欢迎您! \n我喜欢English! \n')

    # Load the file line by line, then shuffle it with a fixed seed.
    dataset = ds.TextFileDataset(DATA_FILE, shuffle=False)
    ds.config.set_seed(58)
    dataset = dataset.shuffle(buffer_size=3)

    for data in dataset.create_dict_iterator(output_numpy=True):
        print(text.to_str(data['text']))

    print('=' * 30)

    # Replace "Beijing" with "Shanghai" and "北京" (Beijing) with "上海" (Shanghai).
    replace_op1 = text.RegexReplace("Beijing", "Shanghai")
    replace_op2 = text.RegexReplace("北京", "上海")
    dataset = dataset.map(operations=replace_op1)
    dataset = dataset.map(operations=replace_op2)

    for data in dataset.create_dict_iterator(output_numpy=True):  # need to mark
        print(text.to_str(data['text']))

    print('=' * 30)

    # Split each line into tokens on whitespace.
    tokenizer = text.WhitespaceTokenizer()
    dataset = dataset.map(operations=tokenizer)

    for data in dataset.create_dict_iterator(num_epochs=1, output_numpy=True):
        print(text.to_str(data['text']).tolist())
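
If MindSpore is not at hand, the three steps above (buffered shuffle, regex replacement, whitespace tokenization) can be approximated in plain Python. This is only a sketch under stated assumptions: the helper names `buffered_shuffle`, `regex_replace`, `whitespace_tokenize` and `pipeline` are hypothetical; the buffered shuffle is a generic algorithm, not necessarily MindSpore's implementation, and `random.Random(58)` will not reproduce the order produced by `ds.config.set_seed(58)`; `re.sub` mimics `RegexReplace` replacing every match (assumed to be its default behaviour), and `str.split()` approximates `WhitespaceTokenizer`.

```python
import random
import re

# Hypothetical plain-Python stand-ins for the MindSpore ops used above.
# They only mimic the visible behaviour on this small example.

# Same lines as in tokenizer.txt (the trailing spaces are kept on purpose).
LINES = ["Welcome to Beijing ", "北京欢迎您! ", "我喜欢English! "]


def buffered_shuffle(rows, buffer_size, seed):
    """Generic buffered shuffle: hold at most `buffer_size` rows and emit a
    randomly chosen one whenever the buffer is full."""
    rng = random.Random(seed)
    buffer = []
    for row in rows:
        buffer.append(row)
        if len(buffer) == buffer_size:
            yield buffer.pop(rng.randrange(len(buffer)))
    # Drain the remaining rows in random order.
    while buffer:
        yield buffer.pop(rng.randrange(len(buffer)))


def regex_replace(line, pattern, replace):
    # Replace every match of `pattern`, as RegexReplace is assumed to do here.
    return re.sub(pattern, replace, line)


def whitespace_tokenize(line):
    # Split on runs of whitespace; empty tokens (e.g. from the trailing
    # space) are dropped.
    return line.split()


def pipeline(lines, seed=58):
    out = []
    for line in buffered_shuffle(lines, buffer_size=3, seed=seed):
        line = regex_replace(line, "Beijing", "Shanghai")
        line = regex_replace(line, "北京", "上海")
        out.append(whitespace_tokenize(line))
    return out


if __name__ == "__main__":
    for tokens in pipeline(LINES):
        print(tokens)
```

Because `buffer_size=3` equals the number of lines, the whole file fits in the buffer and every ordering of the three lines is possible; only the order depends on the seed, while the replaced and tokenized content of each line does not.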

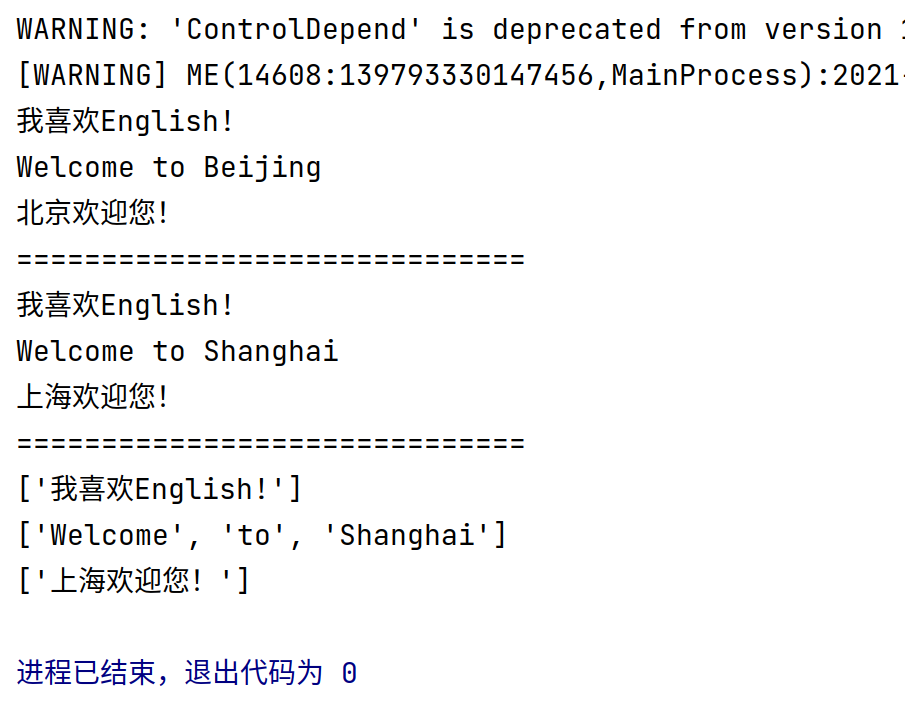

Output:

============================================================================


Original post: https://www.cnblogs.com/devilmaycry812839668/p/14995582.html