
A one-stop solution for information management. It has 5 capabilities:

ETL

Metadata Management

Text Data Analytics

Data Quality

Data Profiling

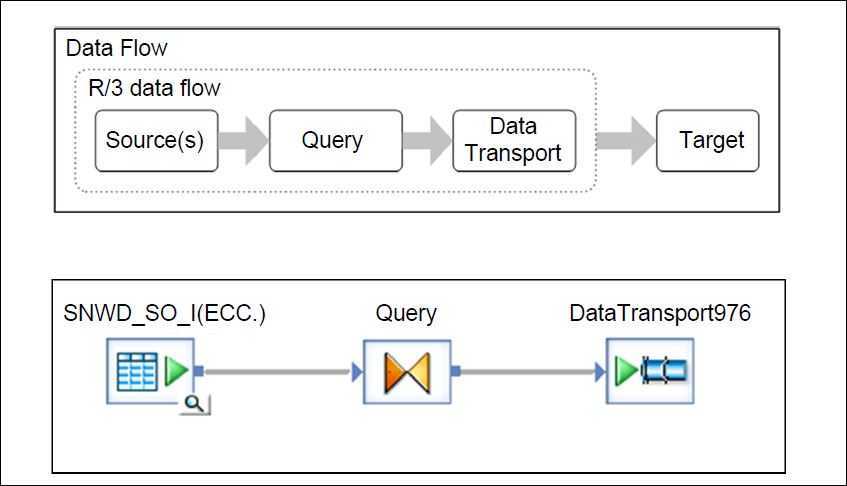

Standard vs. ABAP dataflows

A standard dataflow can be used if you have the following requirements:

reading a single table

small columns (the data load buffer is restricted to 512 bytes per row)

An ABAP dataflow can be used if you have the following requirements:

reading multiple ECC tables

pushing down any join operations to the SAP application

better performance
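The two requirement lists above can be read as a rough rule of thumb. The sketch below encodes that reading in Python. The function name `choose_dataflow` and its parameters are hypothetical and are not part of any SAP or BODS API. Treating rows wider than 512 bytes as a reason to use an ABAP dataflow is an assumption inferred from the buffer limit mentioned above.

```python
# Per-row data load buffer limit quoted in the text for standard dataflows.
ROW_BUFFER_LIMIT_BYTES = 512


def choose_dataflow(num_tables, row_bytes, needs_join_pushdown=False):
    """Suggest 'standard' or 'abap' from the requirements listed above.

    Hypothetical helper for illustration only; not a BODS function.
    """
    if num_tables < 1:
        raise ValueError("at least one source table is required")
    # Multiple tables or pushed-down joins are listed as ABAP dataflow cases.
    if num_tables > 1 or needs_join_pushdown:
        return "abap"
    # Assumption: rows that exceed the buffer limit do not fit a standard dataflow.
    if row_bytes > ROW_BUFFER_LIMIT_BYTES:
        return "abap"
    return "standard"
```

For example, `choose_dataflow(1, 200)` suggests a standard dataflow, while `choose_dataflow(3, 200)` suggests an ABAP dataflow.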

An ABAP dataflow generates an ABAP program based on the fields you selected from the SAP tables. This program is copied into SAP, and SAP executes it. The program collects the data from the tables and stores it in a file in the SAP working directory, using the file name you maintained in the Data Transport object of the ABAP dataflow.

In other words, SAP does not allow BODS to read the data directly from the SAP tables. Instead, it hands over the requested data in the form of a file: the ABAP dataflow pulls the data from the SAP tables and places it in a file in the SAP working directory.

Then, using one of the data transfer methods, the system moves the file from the SAP working directory to the local directory of the BODS Job Server. A normal dataflow then takes that file as its source, reads it, and loads the data into the target table.
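The three stages described above (extract to a file, transfer the file, load from the file) can be mimicked with a small Python simulation. This is only a sketch of the data movement, not how BODS or SAP implement it: the table contents, the function names, the file name `materials.dat`, and the CSV format are all hypothetical, and temporary directories stand in for the SAP working directory and the Job Server local directory.

```python
import csv
import shutil
import tempfile
from pathlib import Path

# Hypothetical in-memory stand-in for an SAP table; not real SAP data.
SAP_TABLE = [
    {"MATNR": "M-001", "MAKTX": "Bolt"},
    {"MATNR": "M-002", "MAKTX": "Nut"},
]


def run_generated_extract(rows, fields, sap_work_dir, file_name):
    """Stage 1: the 'generated program' writes the selected fields to a file."""
    out_path = Path(sap_work_dir) / file_name
    with out_path.open("w", newline="") as f:
        # extrasaction="ignore" drops fields that were not selected.
        writer = csv.DictWriter(f, fieldnames=fields, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return out_path


def transfer_file(src_path, job_server_dir):
    """Stage 2: copy the file into the (simulated) Job Server directory."""
    return Path(shutil.copy(src_path, job_server_dir))


def load_from_file(local_path):
    """Stage 3: a normal dataflow reads the file as its source."""
    with local_path.open(newline="") as f:
        return list(csv.DictReader(f))


def run_pipeline():
    """Run all three stages and return the rows that reach the target."""
    with tempfile.TemporaryDirectory() as sap_dir, \
            tempfile.TemporaryDirectory() as js_dir:
        extract = run_generated_extract(SAP_TABLE, ["MATNR"], sap_dir, "materials.dat")
        local = transfer_file(extract, js_dir)
        return load_from_file(local)
```

Calling `run_pipeline()` returns the rows read back from the transferred file, containing only the selected `MATNR` field. The point of the sketch is that the consumer never touches the source table directly; it only ever sees the file.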


Original article: http://www.cnblogs.com/grantliu/p/4123691.html