Exercise:Vectorization

Link to the exercise: Exercise:Vectorization

Things to note:

The pixel values of the MNIST images have already been normalized.

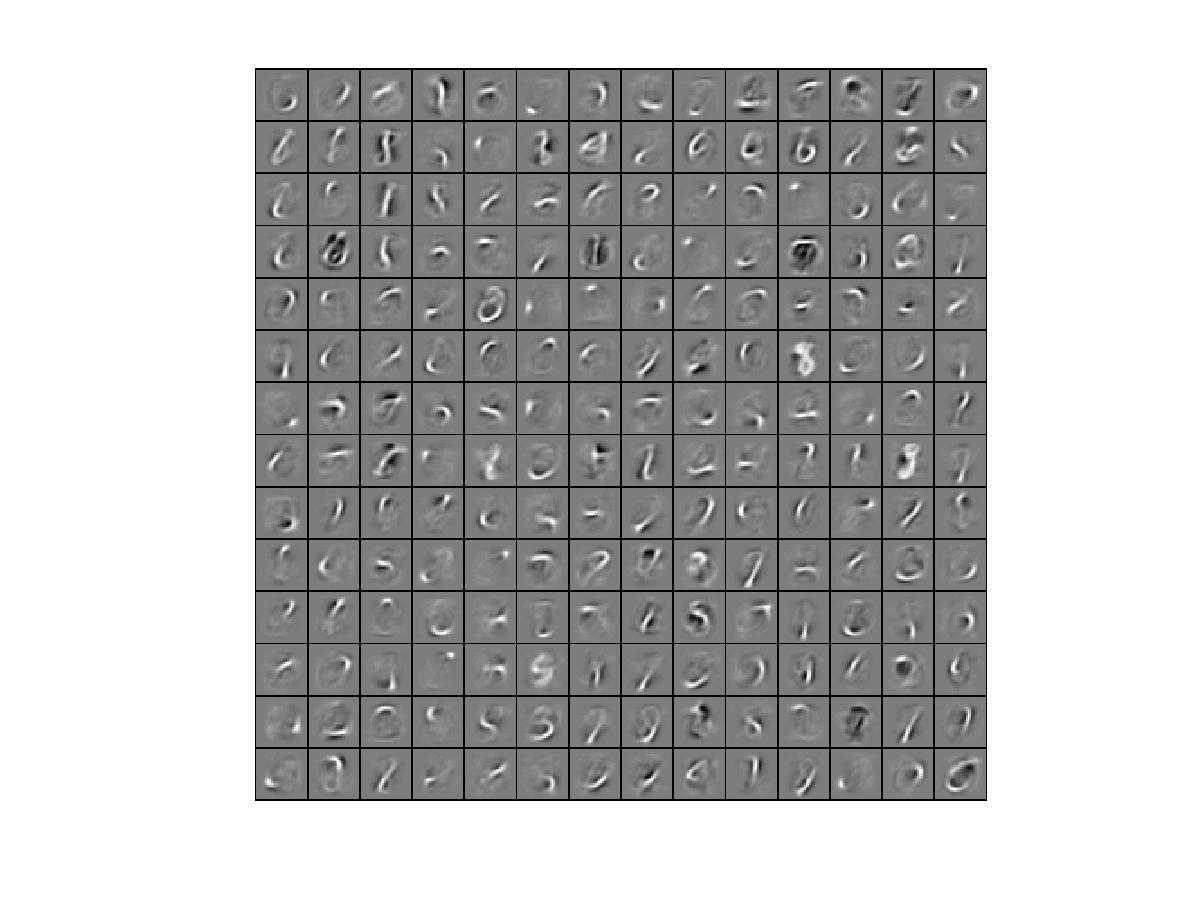
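A quick range check makes this point concrete. This is only a minimal sketch: `images` here is random stand-in data rather than the real output of `loadMNISTImages`, `isNormalized` is a hypothetical helper introduced for illustration, and the `/ 255` fallback assumes raw 8-bit pixel values.

```matlab
% Stand-in for the loaded MNIST data: 784 x N matrix with values in [0, 1].
% (Assumption: the real data would come from loadMNISTImages.)
images = rand(28*28, 100);

% Hypothetical helper: true when every value already lies in [0, 1].
isNormalized = @(X) min(X(:)) >= 0 && max(X(:)) <= 1;

if isNormalized(images)
    patches = images;        % use the data as-is, no second normalization
else
    patches = images / 255;  % assumption: raw 8-bit pixel values
end
```

If the check passes, the images can be passed to training directly.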

If you normalize them a second time with sampleIMAGES.m from Exercise:Sparse Autoencoder, the trained weights visualize as in the figure below:

My implementation:

Change the parameter settings and the training sample selection in train.m:

    %% STEP 0: Here we provide the relevant parameters values that will
    %  allow your sparse autoencoder to get good filters; you do not need to
    %  change the parameters below.

    visibleSize = 28*28;   % number of input units
    hiddenSize = 196;      % number of hidden units
    sparsityParam = 0.1;   % desired average activation of the hidden units.
                           % (This was denoted by the Greek alphabet rho, which looks like a lower-case "p",
                           %  in the lecture notes).
    lambda = 3e-3;         % weight decay parameter
    beta = 3;              % weight of sparsity penalty term

    %%======================================================================
    %% STEP 1: Implement sampleIMAGES
    %
    %  After implementing sampleIMAGES, the display_network command should
    %  display a random sample of 200 patches from the dataset

    % MNIST images have already been normalized
    images = loadMNISTImages('train-images.idx3-ubyte');
    patches = images(:,1:10000);   % use the first 10000 images as training samples
    %display_network(patches(:,randi(size(patches,2),200,1)),8);

    %  Obtain random parameters theta
    theta = initializeParameters(hiddenSize, visibleSize);
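As a sanity check on these settings, the expected length of the parameter vector can be worked out by hand. The sketch below assumes the layout normally used in this exercise (W1 and W2 weight matrices plus the b1 and b2 bias vectors, all flattened into one vector). `numParams` is a name introduced here for illustration.

```matlab
visibleSize = 28*28;   % 784 input units
hiddenSize  = 196;     % hidden units

% W1 is hiddenSize x visibleSize, W2 is visibleSize x hiddenSize,
% b1 has hiddenSize entries and b2 has visibleSize entries.
numParams = 2*hiddenSize*visibleSize + hiddenSize + visibleSize;

fprintf('expected numel(theta): %d\n', numParams);
```

Comparing this number with `numel(theta)` after initialization is a cheap way to catch a mistyped size.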

Visualization of the trained W1:
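For reference, W1 can be pulled out of the flattened parameter vector roughly as follows. This is a sketch under assumptions: `opttheta` is filled with random values as a stand-in for the trained parameters, and W1 is assumed to occupy the first `hiddenSize*visibleSize` entries.

```matlab
visibleSize = 28*28;
hiddenSize  = 196;

% Stand-in for the trained parameter vector (random values, not real results).
opttheta = randn(2*hiddenSize*visibleSize + hiddenSize + visibleSize, 1);

% Assumption: W1 is stored first, as a hiddenSize x visibleSize matrix.
W1 = reshape(opttheta(1:hiddenSize*visibleSize), hiddenSize, visibleSize);

% Each row of W1 is one hidden unit's filter; reshape a row to view it as an image.
filter1 = reshape(W1(1, :), 28, 28);

% With the exercise's starter code on the path, all filters can be shown at once:
% display_network(W1');
```

Each of the 196 rows then corresponds to one 28x28 filter in the visualization.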


Original article: http://www.cnblogs.com/ganganloveu/p/4195584.html