
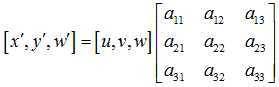

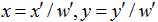

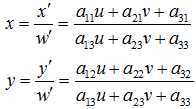

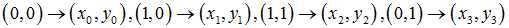

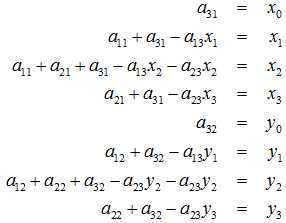

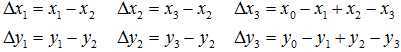

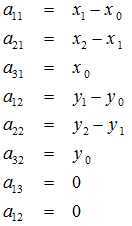

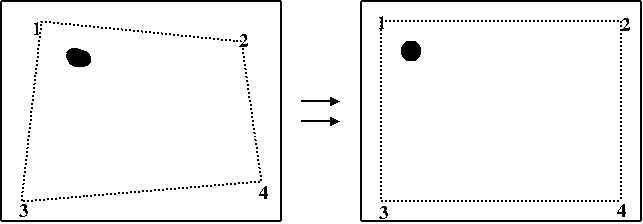

u,v是原始图片左边,对应得到变换后的图片坐标x,y,其中 重写之前的变换公式可以得到: 所以,已知变换对应的几个点就可以求取变换公式。反之,特定的变换公式也能新的变换后的图片。简单的看一个正方形到四边形的变换: 根据变换公式得到: 定义几个辅助变量: 求解出的变换矩阵就可以将一个正方形变换到四边形。反之,四边形变换到正方形也是一样的。于是,我们通过两次变换:四边形变换到正方形+正方形变换到四边形就可以将任意一个四边形变换到另一个四边形。 。

。

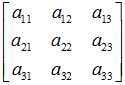

变换矩阵 可以拆成4部分,

可以拆成4部分, 表示线性变换,比如scaling,shearing和ratotion。

表示线性变换,比如scaling,shearing和ratotion。 用于平移,

用于平移, 产生透视变换。所以可以理解成仿射等是透视变换的特殊形式。经过透视变换之后的图片通常不是平行四边形(除非映射视平面和原来平面平行的情况)。

产生透视变换。所以可以理解成仿射等是透视变换的特殊形式。经过透视变换之后的图片通常不是平行四边形(除非映射视平面和原来平面平行的情况)。

变换的4组对应点可以表示成:

都为0时变换平面与原来是平行的,可以得到:

都为0时变换平面与原来是平行的,可以得到:

不为0时,得到:

不为0时,得到:

```cpp
#include "opencv2/highgui.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/imgcodecs.hpp"  // imread / imwrite
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

/** @function main */
int main(int argc, char** argv)
{
    // Load as a colour image so the green debug lines are drawn in green
    Mat src = imread("test.jpg", IMREAD_COLOR);
    if (src.empty())
    {
        cerr << "Could not read test.jpg" << endl;
        return -1;
    }

    // Corners of the source quadrilateral: topLeft, topRight, bottomLeft, bottomRight
    vector<Point> not_a_rect_shape;
    not_a_rect_shape.push_back(Point(122, 0));
    not_a_rect_shape.push_back(Point(814, 0));
    not_a_rect_shape.push_back(Point(22, 540));
    not_a_rect_shape.push_back(Point(910, 540));

    // For debugging purposes, draw green lines connecting those points
    // and save it on disk. The corners are visited in perimeter order
    // (TL, TR, BR, BL) so that the outline does not cross itself.
    vector<Point> outline = { not_a_rect_shape[0], not_a_rect_shape[1],
                              not_a_rect_shape[3], not_a_rect_shape[2] };
    const Point* point = &outline[0];
    int n = (int)outline.size();
    Mat draw = src.clone();
    polylines(draw, &point, &n, 1, true, Scalar(0, 255, 0), 3, LINE_AA);
    imwrite("draw.jpg", draw);

    // Same order as above: topLeft, topRight, bottomLeft, bottomRight
    Point2f src_vertices[4];
    for (int i = 0; i < 4; ++i)
        src_vertices[i] = not_a_rect_shape[i];

    // Target 960 x 540 rectangle, corners in the same order
    Point2f dst_vertices[4];
    dst_vertices[0] = Point2f(0, 0);
    dst_vertices[1] = Point2f(960, 0);
    dst_vertices[2] = Point2f(0, 540);
    dst_vertices[3] = Point2f(960, 540);

    // 3x3 perspective matrix mapping src_vertices onto dst_vertices
    Mat warpMatrix = getPerspectiveTransform(src_vertices, dst_vertices);

    // The output size is the 960 x 540 target rectangle
    Mat warped;
    warpPerspective(src, warped, warpMatrix, Size(960, 540), INTER_LINEAR, BORDER_CONSTANT);

    // Display the images
    namedWindow("Original Image");
    imshow("Original Image", src);
    namedWindow("warp perspective");
    imshow("warp perspective", warped);

    // Save the warped result
    imwrite("result.jpg", warped);

    waitKey();
    return 0;
}
```



Original post: http://www.cnblogs.com/jsxyhelu/p/4219564.html