标签:

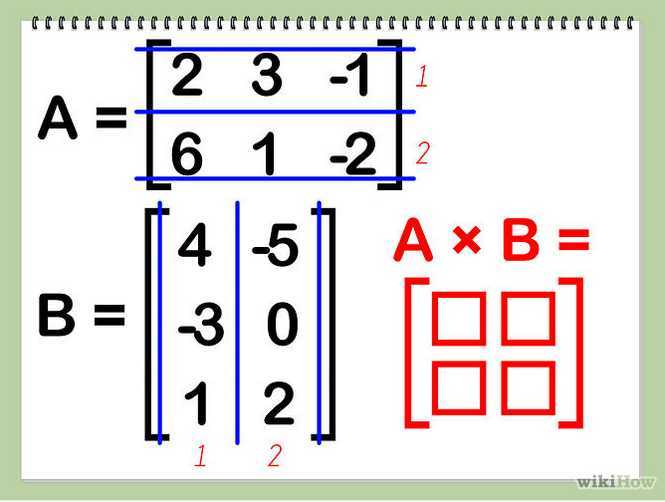

能相乘的两个矩阵,必须满足一个矩阵的行数和第二个矩阵的列数相同.

A(N*P) * B(P*M) = C(N*M). 其中P是行数,N是列数, 从宽高的角度来说,即 A的宽度和B的高度是相同的.C矩阵 = ha * wb.

//C = A*B void MatrixMulCPU(float* _C,const float *_A,const float *_B,int _wa,int _ha,int _wb) { float sum = 0; for (int i = 0; i < _ha; ++i) { for (int j = 0; j < _wb; ++j) { sum = 0; for (int k = 0; k < _wa; ++k) {

//i*_wa得到当前对应的是A矩阵中的哪一行,+k对应当前行的哪一列.矩阵A的stride是wa

//j对应当前矩阵B的哪一列,+k*wb依次表示第0行的j列,第1行的j列...第wa-1行的j列.矩阵B的stride是wb sum += (float)_A[i*_wa+k]*(float)_B[k*_wb+ j]; } _C[i*_wb+j] = (float)sum; } } }

计算版本0:

__global__ void matrix_kernel_0(float* _C,const float* _A,const float *_B,int _wa,int _wb) { float sum = 0; //找出该线程所在的行列 int row = blockIdx.y*blockDim.y + threadIdx.y; int col = blockIdx.x*blockDim.x + threadIdx.x; //线程Thread(row,col)负责计算C(row,col) for (int i = 0; i < _wa; ++i) { sum += _A[row*_wa + i]*_B[i*_wb + col]; } _C[row*_wb + col] = sum; }

这个是Heterogeneous Parallel Programming lab3:Basic Matrix Matrix Multiplication的代码:

#include <wb.h> #define wbCheck(stmt) do { cudaError_t err = stmt; if (err != cudaSuccess) { wbLog(ERROR, "Failed to run stmt ", #stmt); wbLog(ERROR, "Got CUDA error ... ", cudaGetErrorString(err)); return -1; } } while (0) #if 0 //This is C verison matrixMUl //C = A*B void MatrixMulCPU(float* _C,const float *_A,const float *_B,int _wa,int _ha,int _wb) { float sum = 0; for (int i = 0; i < _ha; ++i) { for (int j = 0; j < _wb; ++j) { sum = 0; for (int k = 0; k < _wa; ++k) { sum += (float)_A[i*_wa+k]*(float)_B[k*_wb+ j]; } _C[i*_wb+j] = (float)sum; } } } #endif // Compute C = A * B , Matrix C = hA * wB = rowA * columnB __global__ void matrixMultiply(float *A, float *B, float *C, int numARows, int numAColumns, int numBRows, int numBColumns, int numCRows, int numCColumns) { //@@ Insert code to implement matrix multiplication here float sum = 0.0f; int row = blockIdx.y*blockDim.y + threadIdx.y; int col = blockIdx.x*blockDim.x + threadIdx.x; if(row < numCRows && col < numCColumns){ for (int i = 0; i < numAColumns; ++i) { sum += A[row*numAColumns + i] * B[i*numBColumns + col]; } C[row*numBColumns + col] = sum; } printf("C = %f\n",C[row*numBColumns + col]); } int main(int argc, char **argv) { wbArg_t args; float *hostA; // The A matrix float *hostB; // The B matrix float *hostC; // The output C matrix float *deviceA; float *deviceB; float *deviceC; int numARows; // number of rows in the matrix A int numAColumns; // number of columns in the matrix A int numBRows; // number of rows in the matrix B int numBColumns; // number of columns in the matrix B int numCRows; // number of rows in the matrix C (you have to set this) int numCColumns; // number of columns in the matrix C (you have to set this) args = wbArg_read(argc, argv); wbTime_start(Generic, "Importing data and creating memory on host"); hostA = ( float * )wbImport(wbArg_getInputFile(args, 0), &numARows, &numAColumns); hostB = ( float * )wbImport(wbArg_getInputFile(args, 1), &numBRows, &numBColumns); //@@ Set numCRows and numCColumns numCRows = 0; numCColumns = 0; if(numAColumns != numBRows){ wbLog(TRACE, "numAColumns != numBRows, Break "); return 1; } numCRows = numARows; numCColumns = numBColumns; unsigned int A_size = numARows * numAColumns * sizeof(float); unsigned int B_size = numBRows * numBColumns * sizeof(float); unsigned int C_size = numCRows * numCColumns * sizeof(float); //@@ Allocate the hostC matrix hostC = ( float * )malloc(C_size); wbTime_stop(Generic, "Importing data and creating memory on host"); wbLog(TRACE, "The dimensions of A are ", numARows, " x ", numAColumns); wbLog(TRACE, "The dimensions of B are ", numBRows, " x ", numBColumns); wbTime_start(GPU, "Allocating GPU memory."); //@@ Allocate GPU memory here wbCheck(cudaMalloc((void**)&deviceA, A_size)); wbCheck(cudaMalloc((void**)&deviceB, B_size)); wbCheck(cudaMalloc((void**)&deviceC, C_size)); wbTime_stop(GPU, "Allocating GPU memory."); wbTime_start(GPU, "Copying input memory to the GPU."); //@@ Copy memory to the GPU here wbCheck(cudaMemcpy(deviceA, hostA, A_size, cudaMemcpyHostToDevice)); wbCheck(cudaMemcpy(deviceB, hostB, B_size, cudaMemcpyHostToDevice)); wbCheck(cudaMemcpy(deviceC, hostC, C_size, cudaMemcpyHostToDevice)); wbTime_stop(GPU, "Copying input memory to the GPU."); //@@ Initialize the grid and block dimensions here dim3 DimGrid((numCColumns-1)/16+1, (numCRows-1)/16+1, 1); dim3 DimBlock(16, 16, 1); wbTime_start(Compute, "Performing CUDA computation"); //@@ Launch the GPU Kernel here matrixMultiply<<< DimGrid, DimBlock >>>(deviceA, deviceB, deviceC, numARows, numAColumns, numBRows, numBColumns, numCRows, numCColumns); cudaDeviceSynchronize(); wbTime_stop(Compute, "Performing CUDA computation"); wbTime_start(Copy, "Copying output memory to the CPU"); //@@ Copy the GPU memory back to the CPU here //@@ Copy the GPU memory back to the CPU here wbCheck(cudaMemcpy(hostC, deviceC, C_size, cudaMemcpyDeviceToHost)); wbTime_stop(Copy, "Copying output memory to the CPU"); wbTime_start(GPU, "Freeing GPU Memory"); //@@ Free the GPU memory here wbCheck(cudaFree(deviceA)); wbCheck(cudaFree(deviceB)); wbCheck(cudaFree(deviceC)); wbTime_stop(GPU, "Freeing GPU Memory"); wbSolution(args, hostC, numCRows, numCColumns); free(hostA); free(hostB); free(hostC); return 0; }

另外一种思路,我们不让每一个线程完整计算一个C(i,j),通过C(i,j) = sum { A(i,k)*B(k,j) }发现,我们还可以再细度划分:

Csub(i,j) = sum{A(i,ksub+offsetA)*B(ksub+offsetB,j)} 0<=ksub < blockSize

C(i,j) = sum{Csub(i,j)}

就是把矩阵分成n*n个大的子块,然后每一个block负责计算子块i 和 子块j的子乘积,计算完毕后加起来则可。这里主要引入shared Memory来提高程序效率.

__global__ void matrix_kernel_1(float* _C,const float* _A,const float *_B,int _wa,int _wb) { int bx = blockIdx.x; //Block X的当前位置 int by = blockIdx.y; //Block y的当前位置 int tx = threadIdx.x; int ty = threadIdx.y; //该block要处理的A int aBegin = _wa*(by*BLOCK_SIZE);//A(0,by) int aEnd = aBegin + _wa - 1; int aStep = BLOCK_SIZE;//offsetA int bBegin = BLOCK_SIZE*bx;//B(bx,0) int bStep = BLOCK_SIZE*_wb;//offsetB float cSub = 0; for (int a = aBegin,b = bBegin; a <= aEnd; a += aStep,b += bStep) { __shared__ float As[BLOCK_SIZE][BLOCK_SIZE]; __shared__ float Bs[BLOCK_SIZE][BLOCK_SIZE]; //每个线程负责一个元素拷贝 As[ty][tx] = _A[a + _wa*ty + tx]; Bs[ty][tx] = _B[b + _wb*ty + tx]; __syncthreads(); //每个线程负责计算一个子块i 和 子块j的子乘积 for (int k = 0; k < BLOCK_SIZE; ++k) { cSub += As[ty][k]*Bs[k][tx]; } __syncthreads(); } //全局地址,向全局寄存器写回去 //一个线程负责一个元素,一个block负责一个子块 int cIndex = (by*BLOCK_SIZE + ty)*_wb + (bx*BLOCK_SIZE + tx); _C[cIndex] = cSub; }

标签:

原文地址:http://www.cnblogs.com/biglucky/p/4244187.html