#include "global.h"

#include "FFmpegReadCamera.h"

#include "H264LiveVideoServerMediaSubssion.hh"

#include "H264FramedLiveSource.hh"

#include "liveMedia.hh"

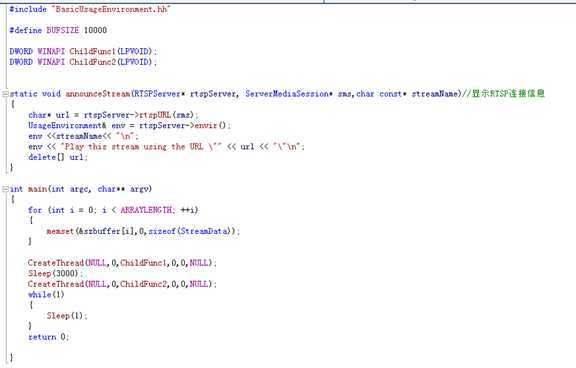

#include "BasicUsageEnvironment.hh"

#define BUFSIZE 10000

DWORD WINAPI ChildFunc1(LPVOID);
DWORD WINAPI ChildFunc2(LPVOID);

// Print the RTSP connection info for a stream.
static void announceStream(RTSPServer* rtspServer, ServerMediaSession* sms, char const* streamName)
{
    char* url = rtspServer->rtspURL(sms);
    UsageEnvironment& env = rtspServer->envir();
    env << streamName << "\n";
    env << "Play this stream using the URL \"" << url << "\"\n";
    delete[] url;
}

int main(int argc, char** argv)
{
    for (int i = 0; i < ARRAYLENGTH; ++i)
    {
        memset(&szbuffer[i], 0, sizeof(StreamData));
    }

    // Start the capture/encode thread first, wait three seconds,
    // then start the RTSP server thread.
    CreateThread(NULL, 0, ChildFunc1, 0, 0, NULL);
    Sleep(3000);
    CreateThread(NULL, 0, ChildFunc2, 0, 0, NULL);
    while (1)
    {
        Sleep(1);
    }
    return 0;
}

// Capture thread: read frames from the camera, encode them as H.264 and store them in szbuffer.
DWORD WINAPI ChildFunc1(LPVOID p)
{
    int ret;
    AVFormatContext *ofmt_ctx = NULL;
    AVStream *out_stream;
    AVStream *in_stream;
    AVCodecContext *enc_ctx;
    AVCodecContext *dec_ctx;
    AVCodec* encoder;
    enum AVMediaType type;
    fp_write = fopen("test.h264", "wb+");
    unsigned int stream_index;
    AVPacket enc_pkt;
    int enc_got_frame;

    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;

    av_register_all();

    avformat_network_init();
    pFormatCtx = avformat_alloc_context();

    avformat_alloc_output_context2(&ofmt_ctx, NULL, "h264", NULL);

    // Register all capture devices
    avdevice_register_all();
    // List the available DirectShow devices
    show_dshow_device();
    // List the options of one device (camera parameters)
    show_dshow_device_option();
    // List the VFW devices
    show_vfw_device();
    // Windows

#ifdef _WIN32
#if USE_DSHOW
    AVInputFormat *ifmt = av_find_input_format("dshow");
    // Set your own video device's name
    if (avformat_open_input(&pFormatCtx, "video=Integrated Webcam", ifmt, NULL) != 0) {
        printf("Couldn't open input stream.\n");
        return -1;
    }
#else
    AVInputFormat *ifmt = av_find_input_format("vfwcap");
    if (avformat_open_input(&pFormatCtx, "0", ifmt, NULL) != 0) {
        printf("Couldn't open input stream.\n");
        return -1;
    }
#endif
#endif
    // Linux
#ifdef linux
    AVInputFormat *ifmt = av_find_input_format("video4linux2");
    if (avformat_open_input(&pFormatCtx, "/dev/video0", ifmt, NULL) != 0) {
        printf("Couldn't open input stream.\n");
        return -1;
    }
#endif

    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
    {
        printf("Couldn't find stream information.\n");
        return -1;
    }
    // Locate the first video stream
    videoindex = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
    {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1)
    {
        printf("Couldn't find a video stream.\n");
        return -1;
    }

    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL)
    {
        printf("Codec not found.\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
    {
        printf("Could not open codec.\n");
        return -1;
    }

    AVFrame *pFrame, *pFrameYUV;
    pFrame = avcodec_alloc_frame();
    pFrameYUV = avcodec_alloc_frame();
    int length = avpicture_get_size(PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);
    uint8_t *out_buffer = (uint8_t *)av_malloc(length);

    /* open output: write_buffer is passed as the AVIO write callback */
    AVIOContext *avio_out = avio_alloc_context(out_buffer, length, 0, NULL, NULL, write_buffer, NULL);
    if (avio_out == NULL)
    {
        printf("Failed to allocate memory!\n");
        return -1;
    }

    ofmt_ctx->pb = avio_out;
    ofmt_ctx->flags = AVFMT_FLAG_CUSTOM_IO;

    // Only one output stream is created; this assumes the video stream is input stream 0.
    for (int i = 0; i < 1; i++)
    {
        out_stream = avformat_new_stream(ofmt_ctx, NULL);
        if (!out_stream)
        {
            av_log(NULL, AV_LOG_ERROR, "failed allocating output stream\n");
            return AVERROR_UNKNOWN;
        }
        in_stream = pFormatCtx->streams[i];
        dec_ctx = in_stream->codec;
        enc_ctx = out_stream->codec;
        // Configure the encoder
        if (dec_ctx->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            encoder = avcodec_find_encoder(AV_CODEC_ID_H264);
            if (!encoder)
            {
                // Without this check, encoder->pix_fmts below dereferences NULL.
                av_log(NULL, AV_LOG_FATAL, "H.264 encoder not found\n");
                return AVERROR_INVALIDDATA;
            }
            enc_ctx->height = dec_ctx->height;
            enc_ctx->width = dec_ctx->width;
            enc_ctx->sample_aspect_ratio = dec_ctx->sample_aspect_ratio;
            enc_ctx->pix_fmt = encoder->pix_fmts[0];
            enc_ctx->time_base = dec_ctx->time_base;
            enc_ctx->me_range = 16;
            enc_ctx->max_qdiff = 4;
            enc_ctx->qmin = 10; // qmin and qmax control the image quality
            enc_ctx->qmax = 51;
            enc_ctx->qcompress = 0.6;
            enc_ctx->refs = 3;
            enc_ctx->bit_rate = 500000;

            //enc_ctx->time_base.num = 1;
            //enc_ctx->time_base.den = 25;
            //enc_ctx->gop_size = 10;
            //enc_ctx->bit_rate = 3000000;

            ret = avcodec_open2(enc_ctx, encoder, NULL);
            if (ret < 0)
            {
                av_log(NULL, AV_LOG_ERROR, "Cannot open video encoder for stream #%d\n", i);
                return ret;
            }
            //av_opt_set(enc_ctx->priv_data,"tune","zerolatency",0);
        }
        else if (dec_ctx->codec_type == AVMEDIA_TYPE_UNKNOWN)
        {
            av_log(NULL, AV_LOG_FATAL, "Elementary stream #%d is of unknown type, cannot proceed\n", i);
            return AVERROR_INVALIDDATA;
        }
        else
        {
            // if this stream must be remuxed
            ret = avcodec_copy_context(ofmt_ctx->streams[i]->codec, pFormatCtx->streams[i]->codec);
            if (ret < 0)
            {
                av_log(NULL, AV_LOG_ERROR, "Copying stream context failed\n");
                return ret;
            }
        }
        if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)
        {
            enc_ctx->flags |= CODEC_FLAG_GLOBAL_HEADER;
        }
    }

    // init muxer, write output file header
    ret = avformat_write_header(ofmt_ctx, NULL);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "Error occurred when opening output file\n");
        return ret;
    }

    i = 0;
    // pCodecCtx is the decoder's codec context
    avpicture_fill((AVPicture *)pFrameYUV, out_buffer, PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);
    //SDL----------------------------
    if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {
        printf("Could not initialize SDL - %s\n", SDL_GetError());
        return -1;
    }
    int screen_w = 0, screen_h = 0;
    SDL_Surface *screen;
    screen_w = pCodecCtx->width;
    screen_h = pCodecCtx->height;
    screen = SDL_SetVideoMode(screen_w, screen_h, 0, 0);

    if (!screen) {
        printf("SDL: could not set video mode - exiting:%s\n", SDL_GetError());
        return -1;
    }
    SDL_Overlay *bmp;
    bmp = SDL_CreateYUVOverlay(pCodecCtx->width, pCodecCtx->height, SDL_YV12_OVERLAY, screen);
    SDL_Rect rect;
    //SDL End------------------------
    int got_picture;

    AVPacket *packet = (AVPacket *)av_malloc(sizeof(AVPacket));
    //Output Information-----------------------------
    printf("File Information---------------------\n");
    av_dump_format(pFormatCtx, 0, NULL, 0);
    printf("-------------------------------------------------\n");

#if OUTPUT_YUV420P
    FILE *fp_yuv = fopen("output.yuv", "wb+");
#endif

    struct SwsContext *img_convert_ctx;
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt, pCodecCtx->width, pCodecCtx->height, PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
    //------------------------------

    // read all packets
    while (av_read_frame(pFormatCtx, packet) >= 0)
    {
        if (packet->stream_index == videoindex)
        {
            type = pFormatCtx->streams[packet->stream_index]->codec->codec_type;
            stream_index = packet->stream_index;
            av_log(NULL, AV_LOG_DEBUG, "Demuxer gave frame of stream_index %u\n", stream_index);

            if (!pFrame)
            {
                ret = AVERROR(ENOMEM);
                break;
            }
            // Rescale timestamps from the stream time base to the codec time base
            packet->dts = av_rescale_q_rnd(packet->dts,
                pFormatCtx->streams[stream_index]->time_base,
                pFormatCtx->streams[stream_index]->codec->time_base,
                (AVRounding)(AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX));
            packet->pts = av_rescale_q_rnd(packet->pts,
                pFormatCtx->streams[stream_index]->time_base,
                pFormatCtx->streams[stream_index]->codec->time_base,
                (AVRounding)(AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX));
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            // pts is a 64-bit value, so print it with %lld rather than %d
            printf("Decode 1 Packet\tsize:%d\tpts:%lld\n", packet->size, (long long)packet->pts);
            if (ret < 0)
            {
                printf("Decode Error.\n");
                av_frame_free(&pFrame);
                return -1;
            }
            if (got_picture)
            {
                // Convert the decoded frame's pixel format (for example RGB to YUV)
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);
                // The converted YUV frame can now be encoded
                pFrameYUV->width = pFrame->width;
                pFrameYUV->height = pFrame->height;

                /*pFrameYUV->pts = av_frame_get_best_effort_timestamp(pFrameYUV);
                pFrameYUV->pict_type = AV_PICTURE_TYPE_NONE;*/

                enc_pkt.data = NULL;
                enc_pkt.size = 0;
                av_init_packet(&enc_pkt);
                // NOTE: out_buffer also backs pFrameYUV and the AVIO context, so the
                // encoder output shares memory with its own input frame.
                enc_pkt.data = out_buffer;
                enc_pkt.size = length;

                // The encoder input must be YUV420P, otherwise encoding fails.
                ret = avcodec_encode_video2(ofmt_ctx->streams[stream_index]->codec, &enc_pkt, pFrameYUV, &enc_got_frame);
                if (ret < 0) // 0 means success; a negative value means failure
                {
                    printf("encode failed\n");
                    return -1;
                }
                if (!enc_got_frame)
                {
                    // The encoder produced no packet yet: release the input packet and read on.
                    av_free_packet(packet);
                    continue;
                }
                printf("Encode 1 Packet\tsize:%d\tpts:%lld\n", enc_pkt.size, (long long)enc_pkt.pts);
                fwrite(enc_pkt.data, enc_pkt.size, 1, fp_write); // save the encoded H.264 stream to a file for testing
                // Assumes StreamData::str is large enough to hold enc_pkt.size bytes.
                memcpy(szbuffer[recvcount].str, enc_pkt.data, enc_pkt.size);
                szbuffer[recvcount].len = enc_pkt.size;
                recvcount = (recvcount + 1) % ARRAYLENGTH;

                /* prepare packet for muxing */
                enc_pkt.stream_index = stream_index;
                enc_pkt.dts = av_rescale_q_rnd(enc_pkt.dts,
                    ofmt_ctx->streams[stream_index]->codec->time_base,
                    ofmt_ctx->streams[stream_index]->time_base,
                    (AVRounding)(AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX));
                enc_pkt.pts = av_rescale_q_rnd(enc_pkt.pts,
                    ofmt_ctx->streams[stream_index]->codec->time_base,
                    ofmt_ctx->streams[stream_index]->time_base,
                    (AVRounding)(AV_ROUND_NEAR_INF|AV_ROUND_PASS_MINMAX));
                enc_pkt.duration = av_rescale_q(enc_pkt.duration,
                    ofmt_ctx->streams[stream_index]->codec->time_base,
                    ofmt_ctx->streams[stream_index]->time_base);
                av_log(NULL, AV_LOG_INFO, "Muxing frame %d\n", i++);
                /* mux encoded frame; keep the result so the check below tests the write */
                ret = av_write_frame(ofmt_ctx, &enc_pkt);
                if (ret < 0)
                {
                    printf("mux failed\n");
                    return -1;
                }

#if OUTPUT_YUV420P
                int y_size = pCodecCtx->width * pCodecCtx->height;
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);     //Y
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
#endif
                SDL_LockYUVOverlay(bmp);
                // YV12 stores V before U, so planes 1 and 2 are swapped relative to YUV420P
                bmp->pixels[0] = pFrameYUV->data[0];
                bmp->pixels[2] = pFrameYUV->data[1];
                bmp->pixels[1] = pFrameYUV->data[2];
                bmp->pitches[0] = pFrameYUV->linesize[0];
                bmp->pitches[2] = pFrameYUV->linesize[1];
                bmp->pitches[1] = pFrameYUV->linesize[2];
                SDL_UnlockYUVOverlay(bmp);
                rect.x = 0;
                rect.y = 0;
                rect.w = screen_w;
                rect.h = screen_h;
                SDL_DisplayYUVOverlay(bmp, &rect);
                //Delay 40ms
                SDL_Delay(40);
            }
        }
        av_free_packet(packet);
    }


    /* flush encoders */
    for (i = 0; i < 1; i++) {
        /* flush encoder */
        ret = flush_encoder(ofmt_ctx, i);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Flushing encoder failed\n");
            return -1;
        }
    }
    av_write_trailer(ofmt_ctx);

    sws_freeContext(img_convert_ctx);

#if OUTPUT_YUV420P
    fclose(fp_yuv);
#endif

    SDL_Quit();

    av_free(out_buffer);
    av_free(pFrameYUV);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    fclose(fp_write); // close the test.h264 dump opened at the top of this function
    //fcloseall();
    return 0;
}

// RTSP thread: serve the most recently encoded data over RTSP using live555.
DWORD WINAPI ChildFunc2(LPVOID p)
{
    // Set up the usage environment
    UsageEnvironment* env;
    // If True, later clients see the same stream as the first client;
    // otherwise playback restarts for each new client.
    Boolean reuseFirstSource = False;
    TaskScheduler* scheduler = BasicTaskScheduler::createNew();
    env = BasicUsageEnvironment::createNew(*scheduler);

    // Create the RTSP server
    UserAuthenticationDatabase* authDB = NULL;
    RTSPServer* rtspServer = RTSPServer::createNew(*env, 8554, authDB);
    if (rtspServer == NULL) {
        *env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";
        exit(1);
    }
    char const* descriptionString = "Session streamed by \"testOnDemandRTSPServer\"";

    // Variables used to simulate sending a live stream
    int datasize;           // length of the data area
    unsigned char* databuf; // pointer to the data area
    databuf = (unsigned char*)malloc(100000);
    bool dosent;            // RTSP send flag: send while true, otherwise stop

    // Copy the most recently written slot of szbuffer into memory to simulate a live
    // network feed. Real live streaming needs a two-thread design, so remember to add
    // a thread lock here.
    // The per-frame copy for live transmission should happen in H264FramedLiveSource,
    // so this code only passes the pointers down to it.
    datasize = szbuffer[(recvcount + ARRAYLENGTH - 1) % ARRAYLENGTH].len;
    for (int i = 0; i < datasize; ++i)
    {
        databuf[i] = szbuffer[(recvcount + ARRAYLENGTH - 1) % ARRAYLENGTH].str[i];
    }
    dosent = true;
    //fclose(pf);

    // Apart from the simulated network transfer, the code above is essentially the demo
    // shipped with live555. The code below is changed to stream from memory, which
    // required rewriting the class passed as the first argument of addSubsession.
    char const* streamName = "h264test";
    ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);
    // Uses our own H264LiveVideoServerMediaSubssion implementation
    sms->addSubsession(H264LiveVideoServerMediaSubssion::createNew(*env, reuseFirstSource, &datasize, databuf, &dosent));
    rtspServer->addServerMediaSession(sms);
    announceStream(rtspServer, sms, streamName); // print the connection URL for the user

    // Loop waiting for connections (without a connection it never reaches the next step,
    // which makes it hard to debug)
    env->taskScheduler().doEventLoop();

    free(databuf); // release the buffer
    return 0;
}
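
The comments in ChildFunc2 point out that a real two-thread design needs a lock around the buffer shared by the capture thread and the RTSP thread, but the listing reads and writes `szbuffer` and `recvcount` without one. Below is a minimal sketch of one way to guard such a ring of frames, assuming a C++11 compiler with `std::mutex`. `FrameRing`, `kSlots` and `kSlotBytes` are hypothetical names and sizes chosen for illustration; they stand in for `szbuffer`, `ARRAYLENGTH` and the `StreamData` capacity, whose real definitions live in `global.h` and are not shown here.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <vector>

// Assumed sizes; the real values come from global.h, which is not shown.
constexpr std::size_t kSlots = 8;          // plays the role of ARRAYLENGTH
constexpr std::size_t kSlotBytes = 100000; // assumed per-frame capacity

// Hypothetical replacement for the szbuffer/recvcount pair.
class FrameRing {
public:
    // Producer side (capture thread): copy one encoded frame into the next slot.
    // Returns false when the frame is larger than a slot may hold.
    bool push(const std::uint8_t* data, std::size_t len) {
        assert(data != nullptr || len == 0);
        if (len > kSlotBytes) return false;
        std::lock_guard<std::mutex> lock(mutex_);
        slots_[next_].assign(data, data + len);
        next_ = (next_ + 1) % kSlots;
        ++written_;
        return true;
    }

    // Consumer side (RTSP thread): copy out the most recently written frame.
    // Returns false when nothing has been written yet.
    bool latest(std::vector<std::uint8_t>& out) const {
        std::lock_guard<std::mutex> lock(mutex_);
        if (written_ == 0) return false;
        out = slots_[(next_ + kSlots - 1) % kSlots];
        return true;
    }

private:
    mutable std::mutex mutex_;
    std::array<std::vector<std::uint8_t>, kSlots> slots_;
    std::size_t next_ = 0;    // index of the slot the next push will fill
    std::size_t written_ = 0; // total frames pushed so far
};
```

With something like this, the capture thread would call `push(enc_pkt.data, enc_pkt.size)` in place of the `memcpy` into `szbuffer`, and the RTSP side would call `latest()` each time it needs a frame. Copying under the lock keeps the critical section short, at the cost of one extra copy per frame.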