
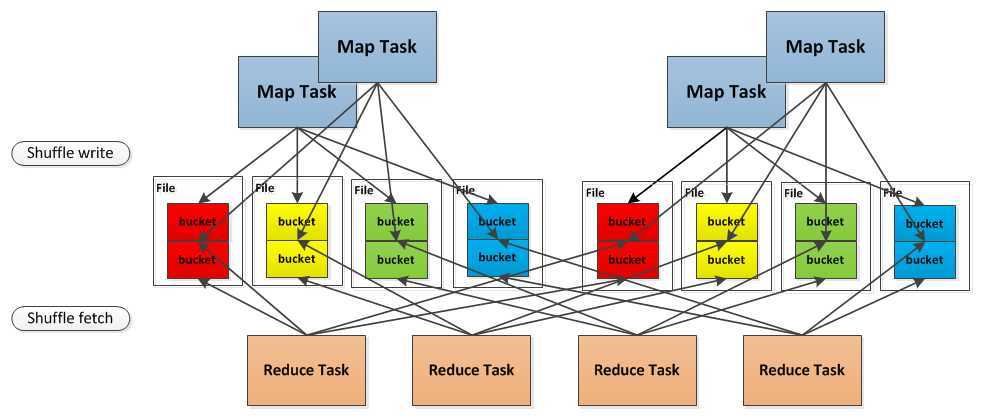

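Whether in Hadoop or Spark, the shuffle is a major factor in overall performance. When the number of shuffles cannot be reduced, using a good shuffle manager is another important way to optimize performance.

/**

* A ShuffleManager using hashing, that creates one output file per reduce partition on each

* mapper (possibly reusing these across waves of tasks).

*/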

/**

* Pluggable interface for shuffle systems. A ShuffleManager is created in SparkEnv on both the

* driver and executors, based on the spark.shuffle.manager setting. The driver registers shuffles

* with it, and executors (or tasks running locally in the driver) can ask to read and write data.

*

* NOTE: this will be instantiated by SparkEnv so its constructor can take a SparkConf and

* boolean isDriver as parameters.

*/

private[spark] trait ShuffleManager {

/**

* Register a shuffle with the manager and obtain a handle for it to pass to tasks.

*/

def registerShuffle[K, V, C](

shuffleId: Int,

numMaps: Int,

dependency: ShuffleDependency[K, V, C]): ShuffleHandle

/** Get a writer for a given partition. Called on executors by map tasks. */

def getWriter[K, V](handle: ShuffleHandle, mapId: Int, context: TaskContext): ShuffleWriter[K, V]

/**

* Get a reader for a range of reduce partitions (startPartition to endPartition-1, inclusive).

* Called on executors by reduce tasks.

*/

def getReader[K, C](

handle: ShuffleHandle,

startPartition: Int,

endPartition: Int,

context: TaskContext): ShuffleReader[K, C]

/** Remove a shuffle's metadata from the ShuffleManager. */

def unregisterShuffle(shuffleId: Int)

/** Shut down this ShuffleManager. */

def stop(): Unit

}
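
The doc comment above says the manager is chosen from the `spark.shuffle.manager` setting. A minimal sketch of that kind of pluggable selection follows. All `Toy*` names are hypothetical stand-ins, not Spark classes, and the factory map is only an assumed substitute for however the real class gets instantiated.

```scala
// Hypothetical, simplified stand-ins; these are not Spark's real classes.
trait ToyShuffleManager { def name: String }
class ToyHashShuffleManager extends ToyShuffleManager { val name = "hash" }
class ToySortShuffleManager extends ToyShuffleManager { val name = "sort" }

object ToyShuffleManagers {
  // Short aliases mapped to factories, mirroring how a setting such as
  // spark.shuffle.manager can select one implementation at startup.
  private val factories: Map[String, () => ToyShuffleManager] = Map(
    "hash" -> (() => new ToyHashShuffleManager),
    "sort" -> (() => new ToySortShuffleManager))

  // Look up the factory for the configured value and build the manager.
  def create(setting: String): ToyShuffleManager =
    factories.get(setting.toLowerCase) match {
      case Some(factory) => factory()
      case None =>
        throw new IllegalArgumentException(s"Unknown shuffle manager: $setting")
    }
}
```

Calling `ToyShuffleManagers.create("sort")` yields the sort-based stand-in; an unknown value fails fast instead of silently falling back.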

/**

* :: DeveloperApi ::

* Represents a dependency on the output of a shuffle stage. Note that in the case of shuffle,

* the RDD is transient since we don't need it on the executor side.

*

* @param _rdd the parent RDD

* @param partitioner partitioner used to partition the shuffle output

* @param serializer [[org.apache.spark.serializer.Serializer Serializer]] to use. If set to None,

* the default serializer, as specified by `spark.serializer` config option, will

* be used.

*/

@DeveloperApi

class ShuffleDependency[K, V, C](

@transient _rdd: RDD[_ <: Product2[K, V]],

val partitioner: Partitioner,

val serializer: Option[Serializer] = None,

val keyOrdering: Option[Ordering[K]] = None,

val aggregator: Option[Aggregator[K, V, C]] = None,

val mapSideCombine: Boolean = false)

extends Dependency[Product2[K, V]] {

override def rdd = _rdd.asInstanceOf[RDD[Product2[K, V]]]

val shuffleId: Int = _rdd.context.newShuffleId()

val shuffleHandle: ShuffleHandle = _rdd.context.env.shuffleManager.registerShuffle(

shuffleId, _rdd.partitions.size, this)

_rdd.sparkContext.cleaner.foreach(_.registerShuffleForCleanup(this))

}

/**

* A basic ShuffleHandle implementation that just captures registerShuffle's parameters.

*/

private[spark] class BaseShuffleHandle[K, V, C](

shuffleId: Int,

val numMaps: Int,

val dependency: ShuffleDependency[K, V, C])

extends ShuffleHandle(shuffleId)

/**

* Class that keeps track of the location of the map output of

* a stage. This is abstract because different versions of MapOutputTracker

* (driver and worker) use different HashMap to store its metadata.

*/

private[spark] abstract class MapOutputTracker(conf: SparkConf) extends Logging {

/**

* Result returned by a ShuffleMapTask to a scheduler. Includes the block manager address that the

* task ran on as well as the sizes of outputs for each reducer, for passing on to the reduce tasks.

* The map output sizes are compressed using MapOutputTracker.compressSize.

*/

private[spark] class MapStatus(var location: BlockManagerId, var compressedSizes: Array[Byte])

extends Externalizable {
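
The `MapStatus` comment mentions that output sizes are compressed with `MapOutputTracker.compressSize`. The sketch below shows one way a size can be squeezed into a single byte, assuming a logarithmic encoding with base 1.1. `ToySizeCodec` is a hypothetical name, and the real implementation may differ in its details.

```scala
object ToySizeCodec {
  // Assumed base of the logarithmic encoding; chosen here for illustration.
  private val LogBase = 1.1

  // Encode a size into one byte: 0 stays 0, sizes up to 1 become 1,
  // everything else becomes ceil(log_1.1(size)), capped at 255.
  def compressSize(size: Long): Byte =
    if (size == 0L) 0.toByte
    else if (size <= 1L) 1.toByte
    else math.min(255, math.ceil(math.log(size.toDouble) / math.log(LogBase)).toInt).toByte

  // Decode by raising the base to the stored exponent (read as an unsigned byte).
  def decompressSize(compressed: Byte): Long =
    if (compressed == 0) 0L
    else math.pow(LogBase, (compressed & 0xFF).toDouble).toLong
}
```

Under this scheme the decoded value is an estimate that never falls below the true size and overshoots it by at most about 10%, which is enough for a reducer to plan its fetches.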

private[spark] class BlockMessage() {

// Un-initialized: typ = 0

// GetBlock: typ = 1

// GotBlock: typ = 2

// PutBlock: typ = 3

private var typ: Int = BlockMessage.TYPE_NON_INITIALIZED

private var id: BlockId = null

private var data: ByteBuffer = null

private var level: StorageLevel = null

private[spark] class HashShuffleReader[K, C](

handle: BaseShuffleHandle[K, _, C],

startPartition: Int,

endPartition: Int,

context: TaskContext)

extends ShuffleReader[K, C]

{

require(endPartition == startPartition + 1,

"Hash shuffle currently only supports fetching one partition")

private val dep = handle.dependency

/** Read the combined key-values for this reduce task */

override def read(): Iterator[Product2[K, C]] = {

val readMetrics = context.taskMetrics.createShuffleReadMetricsForDependency()

val ser = Serializer.getSerializer(dep.serializer)

val iter = BlockStoreShuffleFetcher.fetch(handle.shuffleId, startPartition, context, ser,

readMetrics)

// The block below obtains the aggregator; it can be configured to aggregate on the map side or on the reduce side.

val aggregatedIter: Iterator[Product2[K, C]] = if (dep.aggregator.isDefined) {

if (dep.mapSideCombine) {

new InterruptibleIterator(context, dep.aggregator.get.combineCombinersByKey(iter, context))

} else {

new InterruptibleIterator(context, dep.aggregator.get.combineValuesByKey(iter, context))

}

} else if (dep.aggregator.isEmpty && dep.mapSideCombine) {

throw new IllegalStateException("Aggregator is empty for map-side combine")

} else {

// Convert the Product2s to pairs since this is what downstream RDDs currently expect

iter.asInstanceOf[Iterator[Product2[K, C]]].map(pair => (pair._1, pair._2))

}

// Sort the output if there is a sort ordering defined.

dep.keyOrdering match {

case Some(keyOrd: Ordering[K]) => // a custom key ordering is defined

// Create an ExternalSorter to sort the data. Note that if spark.shuffle.spill is disabled,

// the ExternalSorter won't spill to disk.

val sorter = new ExternalSorter[K, C, C](ordering = Some(keyOrd), serializer = Some(ser))

sorter.insertAll(aggregatedIter)

context.taskMetrics.memoryBytesSpilled += sorter.memoryBytesSpilled

context.taskMetrics.diskBytesSpilled += sorter.diskBytesSpilled

sorter.iterator

case None =>

aggregatedIter

}

}

/** Close this reader */

override def stop(): Unit = ???

}
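
The reader picks `combineCombinersByKey` when the map side has already combined, and `combineValuesByKey` otherwise. The sketch below illustrates that difference with plain collections. `ToyAggregator` is a hypothetical miniature that only assumes the usual three functions (create, merge value, merge combiners); it is not Spark's `Aggregator`.

```scala
import scala.collection.mutable

// Hypothetical miniature of an aggregator; not Spark's Aggregator class.
final case class ToyAggregator[K, V, C](
    createCombiner: V => C,
    mergeValue: (C, V) => C,
    mergeCombiners: (C, C) => C) {

  // Used when the records are raw values (no map-side combine happened).
  def combineValuesByKey(iter: Iterator[(K, V)]): Map[K, C] = {
    val acc = mutable.Map.empty[K, C]
    for ((k, v) <- iter) {
      acc(k) = acc.get(k) match {
        case Some(c) => mergeValue(c, v)
        case None => createCombiner(v)
      }
    }
    acc.toMap
  }

  // Used when the records are already partial combiners from the map side.
  def combineCombinersByKey(iter: Iterator[(K, C)]): Map[K, C] = {
    val acc = mutable.Map.empty[K, C]
    for ((k, c) <- iter) {
      acc(k) = acc.get(k) match {
        case Some(prev) => mergeCombiners(prev, c)
        case None => c
      }
    }
    acc.toMap
  }
}

object ToyAggregatorDemo {
  // Sum-and-count combiner, e.g. for computing an average per key.
  val agg = ToyAggregator[String, Int, (Int, Int)](
    v => (v, 1),
    (c, v) => (c._1 + v, c._2 + 1),
    (a, b) => (a._1 + b._1, a._2 + b._2))

  // Reduce-side only: all raw values are combined in one pass.
  val reduceOnly = agg.combineValuesByKey(List("a" -> 1, "b" -> 2, "a" -> 3).iterator)

  // Two "map tasks" combine locally, then the reduce side merges the combiners.
  val partial1 = agg.combineValuesByKey(List("a" -> 1, "b" -> 2).iterator)
  val partial2 = agg.combineValuesByKey(List("a" -> 3).iterator)
  val mapSide = agg.combineCombinersByKey(partial1.iterator ++ partial2.iterator)
}
```

Both paths give the same result per key; map-side combining only changes how much data crosses the shuffle.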

private[spark] class HashShuffleWriter[K, V](

handle: BaseShuffleHandle[K, V, _],

mapId: Int,

context: TaskContext)

extends ShuffleWriter[K, V] with Logging {

private val blockManager = SparkEnv.get.blockManager

private val shuffleBlockManager = blockManager.shuffleBlockManager

private val ser = Serializer.getSerializer(dep.serializer.getOrElse(null))

private val shuffle = shuffleBlockManager.forMapTask(dep.shuffleId, mapId, numOutputSplits, ser,

writeMetrics)

/** Write a bunch of records to this task's output */

override def write(records: Iterator[_ <: Product2[K, V]]): Unit = {

val iter = if (dep.aggregator.isDefined) {

if (dep.mapSideCombine) {

dep.aggregator.get.combineValuesByKey(records, context)

} else {

records

}

} else if (dep.aggregator.isEmpty && dep.mapSideCombine) {

throw new IllegalStateException("Aggregator is empty for map-side combine")

} else {

records

}

for (elem <- iter) {

val bucketId = dep.partitioner.getPartition(elem._1)

shuffle.writers(bucketId).write(elem)

}

}
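
The loop at the end of `write` routes each record to the writer of its bucket. Here is a self-contained sketch of that routing, assuming a hash partitioner that takes the key's hash code modulo the bucket count. `ToyHashWrite` is a hypothetical name, and in-memory buffers stand in for the per-partition file writers.

```scala
import scala.collection.mutable.ArrayBuffer

object ToyHashWrite {
  // Non-negative modulo, so negative hash codes still map to a valid bucket.
  def nonNegativeMod(x: Int, mod: Int): Int = {
    val raw = x % mod
    if (raw < 0) raw + mod else raw
  }

  // Assumed hash-partitioning rule: bucket = hash(key) mod numBuckets.
  def getPartition(key: Any, numBuckets: Int): Int =
    if (key == null) 0 else nonNegativeMod(key.hashCode, numBuckets)

  // One in-memory buffer per reduce partition stands in for one file writer each.
  def write[K, V](records: Iterator[(K, V)], numBuckets: Int): Vector[Vector[(K, V)]] = {
    val writers = Vector.fill(numBuckets)(ArrayBuffer.empty[(K, V)])
    for (elem <- records) {
      writers(getPartition(elem._1, numBuckets)) += elem
    }
    writers.map(_.toVector)
  }
}
```

Because every map task keeps one writer per reduce partition, the number of open outputs grows with the number of reducers, which is the cost the sort-based writer avoids.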

private[spark] class SortShuffleWriter[K, V, C](

handle: BaseShuffleHandle[K, V, C],

mapId: Int,

context: TaskContext)

extends ShuffleWriter[K, V] with Logging {

private val dep = handle.dependency

private val numPartitions = dep.partitioner.numPartitions

private val blockManager = SparkEnv.get.blockManager

private val ser = Serializer.getSerializer(dep.serializer.orNull)

private val conf = SparkEnv.get.conf

private val fileBufferSize = conf.getInt("spark.shuffle.file.buffer.kb", 32) * 1024

private var sorter: ExternalSorter[K, V, _] = null

private var outputFile: File = null

private var indexFile: File = null

// Are we in the process of stopping? Because map tasks can call stop() with success = true

// and then call stop() with success = false if they get an exception, we want to make sure

// we don't try deleting files, etc twice.

private var stopping = false

private var mapStatus: MapStatus = null

private val writeMetrics = new ShuffleWriteMetrics()

context.taskMetrics.shuffleWriteMetrics = Some(writeMetrics)

/** Write a bunch of records to this task's output */

override def write(records: Iterator[_ <: Product2[K, V]]): Unit = {

if (dep.mapSideCombine) {

if (!dep.aggregator.isDefined) {

throw new IllegalStateException("Aggregator is empty for map-side combine")

}

sorter = new ExternalSorter[K, V, C](

dep.aggregator, Some(dep.partitioner), dep.keyOrdering, dep.serializer)

sorter.insertAll(records)

} else {

// In this case we pass neither an aggregator nor an ordering to the sorter, because we don't

// care whether the keys get sorted in each partition; that will be done on the reduce side

// if the operation being run is sortByKey.

sorter = new ExternalSorter[K, V, V](

None, Some(dep.partitioner), None, dep.serializer)

sorter.insertAll(records)

}

// Create a single shuffle file with reduce ID 0 that we'll write all results to. We'll later

// serve different ranges of this file using an index file that we create at the end.

val blockId = ShuffleBlockId(dep.shuffleId, mapId, 0)

outputFile = blockManager.diskBlockManager.getFile(blockId)

indexFile = blockManager.diskBlockManager.getFile(blockId.name + ".index")

val partitionLengths = sorter.writePartitionedFile(blockId, context)

// Register our map output with the ShuffleBlockManager, which handles cleaning it over time

blockManager.shuffleBlockManager.addCompletedMap(dep.shuffleId, mapId, numPartitions)

mapStatus = new MapStatus(blockManager.blockManagerId,

partitionLengths.map(MapOutputTracker.compressSize))

}
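
The sort-based writer produces one data file plus an index file and returns the length of each partition. The sketch below shows how such lengths can be turned into an index and how a reducer's byte range is looked up, assuming the index stores cumulative offsets. `ToyShuffleIndex` is a hypothetical helper, not part of Spark.

```scala
object ToyShuffleIndex {
  // Cumulative offsets: entry i is where partition i starts in the data file,
  // and the final entry is the total file length.
  def offsets(partitionLengths: Array[Long]): Array[Long] =
    partitionLengths.scanLeft(0L)(_ + _)

  // Byte range [start, end) of one reduce partition inside the single data file.
  def range(index: Array[Long], reduceId: Int): (Long, Long) =
    (index(reduceId), index(reduceId + 1))
}
```

With lengths `Array(10L, 0L, 25L)`, the index holds four entries, and an empty partition simply shows up as a range whose start equals its end.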

Spark Notes 15: ShuffleManager, the bridge between the stages/tasks on the two sides of a shuffle


Original article: http://www.cnblogs.com/zwCHAN/p/4249255.html