标签:

在iOS开发中,播放视频通常有两种方式,一种是使用MPMoviePlayerController(需要导入MediaPlayer.Framework),还有一种是使用AVPlayer。关于这两个类的区别可以参考http://stackoverflow.com/questions/8146942/avplayer-and-mpmovieplayercontroller-differences,简而言之就是MPMoviePlayerController使用更简单,功能不如AVPlayer强大,而AVPlayer使用稍微麻烦点,不过功能更加强大。这篇博客主要介绍下AVPlayer的基本使用,由于博主也是刚刚接触,所以有问题大家直接指出~

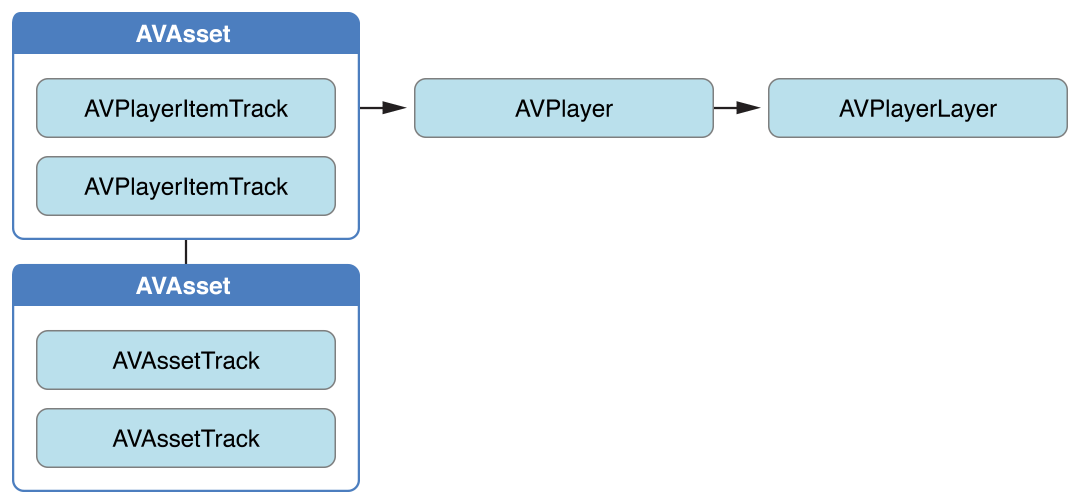

在开发中,单纯使用AVPlayer类是无法显示视频的,要将视频层添加至AVPlayerLayer中,这样才能将视频显示出来,所以先在ViewController的@interface中添加以下属性

|

1

2

|

@property (nonatomic ,strong) AVPlayer *player;@property (nonatomic ,strong) AVPlayerItem *playerItem;<br>@property (nonatomic ,weak) IBOutletPlayerView *playerView; |

其中playerView继承自UIView,不过重写了set和get方法,用于将player添加至playerView的AVPlayerLayer中,这样才能顺利将视频显示出来

在PlayerView.h中声明一个AVPlayer对象,由于默认的layer是CALayer,而AVPlayer只能添加至AVPlayerLayer中,所以我们改变一下layerClass,这样PlayerView的默认layer就变了,之后我们可以把在viewController中初始化的AVPlayer对象赋给AVPlayerLayer的player属性。

PlayerView.h

@property (nonatomic ,strong) AVPlayer *player;

PlayerView.m

|

1

2

3

4

5

6

7

8

9

10

11

|

+ (Class)layerClass { return [AVPlayerLayer class];}- (AVPlayer *)player { return [(AVPlayerLayer *)[self layer] player];}- (void)setPlayer:(AVPlayer *)player { [(AVPlayerLayer *)[self layer] setPlayer:player];} |

然后在viewDidLoad中执行初始化:

|

1

2

3

4

5

|

NSURL *videoUrl = [NSURL URLWithString:@"http://www.jxvdy.com/file/upload/201405/05/18-24-58-42-627.mp4"];self.playerItem = [AVPlayerItem playerItemWithURL:videoUrl];[self.playerItem addObserver:self forKeyPath:@"status" options:NSKeyValueObservingOptionNew context:nil];// 监听status属性[self.playerItem addObserver:self forKeyPath:@"loadedTimeRanges" options:NSKeyValueObservingOptionNew context:nil];// 监听loadedTimeRanges属性self.player = [AVPlayer playerWithPlayerItem:self.playerItem];<br>[[NSNotificationCenterdefaultCenter]addObserver:selfselector:@selector(moviePlayDidEnd:) name:AVPlayerItemDidPlayToEndTimeNotificationobject:self.playerItem]; |

先将在线视频链接存放在videoUrl中,然后初始化playerItem,playerItem是管理资源的对象(A player item manages the presentation state of an asset with which it is associated. A player item contains player item tracks—instances ofAVPlayerItemTrack—that correspond to the tracks in the asset.)

然后监听playerItem的status和loadedTimeRange属性,status有三种状态:

AVPlayerStatusUnknown,

AVPlayerStatusReadyToPlay,

AVPlayerStatusFailed

当status等于AVPlayerStatusReadyToPlay时代表视频已经可以播放了,我们就可以调用play方法播放了。

loadedTimeRange属性代表已经缓冲的进度,监听此属性可以在UI中更新缓冲进度,也是很有用的一个属性。

最后添加一个通知,用于监听视频是否已经播放完毕,然后实现KVO的方法:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

|

- (void)observeValueForKeyPath:(NSString *)keyPath ofObject:(id)object change:(NSDictionary *)change context:(void *)context { AVPlayerItem *playerItem = (AVPlayerItem *)object; if ([keyPath isEqualToString:@"status"]) { if ([playerItem status] == AVPlayerStatusReadyToPlay) { NSLog(@"AVPlayerStatusReadyToPlay"); self.stateButton.enabled = YES; CMTime duration = self.playerItem.duration;// 获取<span id="2_nwp" style="width: auto; height: auto; float: none;"><a id="2_nwl" href="http://cpro.baidu.com/cpro/ui/uijs.php?rs=1&u=http%3A%2F%2Fwww%2Edaxueit%2Ecom%2Farticle%2F3407%2Ehtml&p=baidu&c=news&n=10&t=tpclicked3_hc&q=00007110_cpr&k=%CA%D3%C6%B5&k0=ios&kdi0=8&k1=%C8%ED%BC%FE&kdi1=8&k2=%C8%ED%BC%FE%BF%AA%B7%A2&kdi2=8&k3=%C6%BB%B9%FB&kdi3=8&k4=block&kdi4=8&k5=%CA%D3%C6%B5&kdi5=8&sid=2e3a805149a5f46f&ch=0&tu=u1704338&jk=6e6f3626bb79a9f4&cf=29&rb=0&fv=16&stid=9&urlid=0&luki=6&seller_id=1&di=128" target="_blank" mpid="2" style="text-decoration: none;"><span style="color:#0000ff;font-size:14px;width:auto;height:auto;float:none;">视频</span></a></span>总长度 CGFloat totalSecond = playerItem.duration.value / playerItem.duration.timescale;// 转换成秒 _totalTime = [self convertTime:totalSecond];// 转换成播放时间 [self customVideoSlider:duration];// 自定义UISlider外观 NSLog(@"movie total duration:%f",CMTimeGetSeconds(duration)); [self monitoringPlayback:self.playerItem];// 监听播放状态 } else if ([playerItem status] == AVPlayerStatusFailed) { NSLog(@"AVPlayerStatusFailed"); } } else if ([keyPath isEqualToString:@"loadedTimeRanges"]) { NSTimeInterval timeInterval = [self availableDuration];// 计算缓冲进度 NSLog(@"Time Interval:%f",timeInterval); CMTime duration = self.playerItem.duration; CGFloat totalDuration = CMTimeGetSeconds(duration); [self.videoProgress setProgress:timeInterval / totalDuration animated:YES]; }}- (NSTimeInterval)availableDuration { NSArray *loadedTimeRanges = [[self.playerView.player currentItem] loadedTimeRanges]; CMTimeRange timeRange = [loadedTimeRanges.firstObject CMTimeRangeValue];// 获取缓冲区域 float startSeconds = CMTimeGetSeconds(timeRange.start); float durationSeconds = CMTimeGetSeconds(timeRange.duration); NSTimeInterval result = startSeconds + durationSeconds;// 计算缓冲总进度 return result;}- (NSString *)convertTime:(CGFloat)second{ NSDate *d = [NSDate dateWithTimeIntervalSince1970:second]; NSDateFormatter *formatter = [[NSDateFormatter alloc] init]; if (second/3600 >= 1) { [formatter setDateFormat:@"HH:mm:ss"]; } else { [formatter setDateFormat:@"mm:ss"]; } NSString *showtimeNew = [formatter stringFromDate:d]; return showtimeNew;} |

此方法主要对status和loadedTimeRanges属性做出响应,status状态变为AVPlayerStatusReadyToPlay时,说明视频已经可以播放了,这时我们可以获取一些视频的信息,包含视频长度等,把播放按钮设备enabled,点击就可以调用play方法播放视频了。在AVPlayerStatusReadyToPlay的底部还有个monitoringPlayback方法:

|

1

2

3

4

5

6

7

8

|

- (void)monitoringPlayback:(AVPlayerItem *)playerItem { self.playbackTimeObserver = [self.playerView.player addPeriodicTimeObserverForInterval:CMTimeMake(1, 1) queue:NULL usingBlock:^(CMTime time) { CGFloat currentSecond = playerItem.currentTime.value/playerItem.currentTime.timescale;// 计算当前在第几秒 [self updateVideoSlider:currentSecond]; NSString *timeString = [self convertTime:currentSecond]; self.timeLabel.text = [NSString stringWithFormat:@"%@/%@",timeString,_totalTime]; }];} |

monitoringPlayback用于监听每秒的状态,- (id)addPeriodicTimeObserverForInterval:(CMTime)interval queue:(dispatch_queue_t)queue usingBlock:(void (^)(CMTime time))block;此方法就是关键,interval参数为响应的间隔时间,这里设为每秒都响应,queue是队列,传NULL代表在主线程执行。可以更新一个UI,比如进度条的当前时间等。

作为播放器,除了播放,暂停等功能外。还有一个必不可少的功能,那就是显示当前播放进度,还有缓冲的区域,我的思路是这样,用UIProgressView显示缓冲的可播放区域,用UISlider显示当前正在播放的进度,当然这里要对UISlider做一些自定义,代码如下:

|

1

2

3

4

5

6

7

8

9

|

- (void)customVideoSlider:(CMTime)duration { self.videoSlider.maximumValue = CMTimeGetSeconds(duration); UIGraphicsBeginImageContextWithOptions((CGSize){ 1, 1 }, NO, 0.0f); UIImage *transparentImage = UIGraphicsGetImageFromCurrentImageContext(); UIGraphicsEndImageContext(); [self.videoSlider setMinimumTrackImage:transparentImage forState:UIControlStateNormal]; [self.videoSlider setMaximumTrackImage:transparentImage forState:UIControlStateNormal];} |

这样UISlider就只有中间的ThumbImage了,而ThumbImage左右的颜色都变成透明的了,仅仅是用于显示当前的播放时间。UIProgressView则用于显示当前缓冲的区域,不做任何自定义的修改,在StoryBoard看起来是这样的:

把UISlider添加至UIProgressView上面,运行起来的效果就变成了这样:

这样基本的缓冲功能就做好了,当然还有一些功能没做,比如音量大小,滑动屏幕快进快退等,大家有时间可以自己做着玩儿下~ 最后的效果如下:

最后附上demo链接:https://github.com/mzds/AVPlayerDemo

标签:

原文地址:http://www.cnblogs.com/lee4519/p/4252021.html