标签:

第二次作业基本部分包含三部分,Q1: Two-layer Neural Network,Q2: Modular Neural Network,Q3: ConvNet on CIFAR-10。

Q1: Two-layer Neural Network

这部分将要实现一个两层的神经网络,包括前向传播与BP后向传播,以及梯度下降法的几种改进变型。

首先看neural_net.py,里面定义了两个函数:init_two_layer_model()与two_layer_net()。在后者中补充完整,前向传播、loss计算、梯度计算三个部分。

1 import numpy as np 2 import matplotlib.pyplot as plt 3 4 def init_two_layer_model(input_size, hidden_size, output_size): 5 """ 6 Initialize the weights and biases for a two-layer fully connected neural 7 network. The net has an input dimension of D, a hidden layer dimension of H, 8 and performs classification over C classes. Weights are initialized to small 9 random values and biases are initialized to zero. 10 11 Inputs: 12 - input_size: The dimension D of the input data 13 - hidden_size: The number of neurons H in the hidden layer 14 - ouput_size: The number of classes C 15 16 Returns: 17 A dictionary mapping parameter names to arrays of parameter values. It has 18 the following keys: 19 - W1: First layer weights; has shape (D, H) 20 - b1: First layer biases; has shape (H,) 21 - W2: Second layer weights; has shape (H, C) 22 - b2: Second layer biases; has shape (C,) 23 """ 24 # initialize a model 25 model = {} 26 model[‘W1‘] = 0.00001 * np.random.randn(input_size, hidden_size) 27 model[‘b1‘] = np.zeros(hidden_size) 28 model[‘W2‘] = 0.00001 * np.random.randn(hidden_size, output_size) 29 model[‘b2‘] = np.zeros(output_size) 30 return model 31 32 def two_layer_net(X, model, y=None, reg=0.0): 33 """ 34 Compute the loss and gradients for a two layer fully connected neural network. 35 The net has an input dimension of D, a hidden layer dimension of H, and 36 performs classification over C classes. We use a softmax loss function and L2 37 regularization the the weight matrices. The two layer net should use a ReLU 38 nonlinearity after the first affine layer. 39 40 Inputs: 41 - X: Input data of shape (N, D). Each X[i] is a training sample. 42 - model: Dictionary mapping parameter names to arrays of parameter values. 43 It should contain the following: 44 - W1: First layer weights; has shape (D, H) 45 - b1: First layer biases; has shape (H,) 46 - W2: Second layer weights; has shape (H, C) 47 - b2: Second layer biases; has shape (C,) 48 - y: Vector of training labels. y[i] is the label for X[i], and each y[i] is 49 an integer in the range 0 <= y[i] < C. This parameter is optional; if it 50 is not passed then we only return scores, and if it is passed then we 51 instead return the loss and gradients. 52 - reg: Regularization strength. 53 54 Returns: 55 If y not is passed, return a matrix scores of shape (N, C) where scores[i, c] 56 is the score for class c on input X[i]. 57 58 If y is not passed, instead return a tuple of: 59 - loss: Loss (data loss and regularization loss) for this batch of training 60 samples. 61 - grads: Dictionary mapping parameter names to gradients of those parameters 62 with respect to the loss function. This should have the same keys as model. 63 """ 64 65 # unpack variables from the model dictionary 66 W1,b1,W2,b2 = model[‘W1‘], model[‘b1‘], model[‘W2‘], model[‘b2‘] 67 N, D = X.shape 68 69 # compute the forward pass 70 scores = None 71 ############################################################################# 72 # TODO: Perform the forward pass, computing the class scores for the input. # 73 # Store the result in the scores variable, which should be an array of # 74 # shape (N, C). # 75 ############################################################################# 76 N1 = np.dot(X, W1) + b1 77 H1 = np.maximum(0, N1) 78 scores = np.dot(H1, W2) + b2 # output layer without activate function 79 ############################################################################# 80 # END OF YOUR CODE # 81 ############################################################################# 82 83 # If the targets are not given then jump out, we‘re done 84 if y is None: 85 return scores 86 87 # compute the loss 88 loss = None 89 ############################################################################# 90 # TODO: Finish the forward pass, and compute the loss. This should include # 91 # both the data loss and L2 regularization for W1 and W2. Store the result # 92 # in the variable loss, which should be a scalar. Use the Softmax # 93 # classifier loss. So that your results match ours, multiply the # 94 # regularization loss by 0.5 # 95 ############################################################################# 96 probs = np.exp(scores) 97 probs /= np.sum(probs, axis = 1, keepdims = True) 98 loss = -np.sum(np.log(probs[np.arange(N), y]))/N+0.5*reg*np.sum(W1*W1)+0.5*reg*np.sum(W2*W2) 99 ############################################################################# 100 # END OF YOUR CODE # 101 ############################################################################# 102 103 # compute the gradients 104 grads = {} 105 ############################################################################# 106 # TODO: Compute the backward pass, computing the derivatives of the weights # 107 # and biases. Store the results in the grads dictionary. For example, # 108 # grads[‘W1‘] should store the gradient on W1, and be a matrix of same size # 109 ############################################################################# 110 dscores = probs.copy() # N*C size 111 dscores[np.arange(N), y] -= 1 112 grads[‘W2‘] = (np.dot(H1.transpose(), dscores))/N + reg*W2 # H*C size 113 grads[‘b2‘] = np.sum(dscores, axis=0) / N 114 dH1 = (np.dot(dscores, W2.transpose())) / N # N*H 115 116 delta_relu = N1.copy() # N*H 117 delta_relu[delta_relu>=0] = 1 118 delta_relu[delta_relu<0] = 0 119 grads[‘W1‘] = np.dot(X.transpose(), dH1*delta_relu)+ reg*W1 # D*H 120 grads[‘b1‘] = np.sum(dH1*delta_relu, axis = 0) 121 ############################################################################# 122 # END OF YOUR CODE # 123 ############################################################################# 124 125 return loss, grads

forward pass部分,采取ReLu激活函数:

# compute the forward pass scores = None ############################################################################# # TODO: Perform the forward pass, computing the class scores for the input. # # Store the result in the scores variable, which should be an array of # # shape (N, C). # ############################################################################# N1 = np.dot(X, W1) + b1 H1 = np.maximum(0, N1) scores = np.dot(H1, W2) + b2 # output layer without activate function ############################################################################# # END OF YOUR CODE # #############################################################################

loss计算部分,加上L2正则:

# compute the loss loss = None ############################################################################# # TODO: Finish the forward pass, and compute the loss. This should include # # both the data loss and L2 regularization for W1 and W2. Store the result # # in the variable loss, which should be a scalar. Use the Softmax # # classifier loss. So that your results match ours, multiply the # # regularization loss by 0.5 # ############################################################################# probs = np.exp(scores) probs /= np.sum(probs, axis = 1, keepdims = True) loss = -np.sum(np.log(probs[np.arange(N), y]))/N+0.5*reg*np.sum(W1*W1)+0.5*reg*np.sum(W2*W2) ############################################################################# # END OF YOUR CODE # #############################################################################

gradients计算部分,ReLu激活函数 max(x,0)的导数,是离散delta(x) = 1 if x>=0, 0 else

# compute the gradients grads = {} ############################################################################# # TODO: Compute the backward pass, computing the derivatives of the weights # # and biases. Store the results in the grads dictionary. For example, # # grads[‘W1‘] should store the gradient on W1, and be a matrix of same size # ############################################################################# dscores = probs.copy() # N*C size dscores[np.arange(N), y] -= 1 grads[‘W2‘] = (np.dot(H1.transpose(), dscores))/N + reg*W2 # H*C size grads[‘b2‘] = np.sum(dscores, axis=0) / N dH1 = (np.dot(dscores, W2.transpose())) / N # N*H delta_relu = N1.copy() # N*H delta_relu[delta_relu>=0] = 1 delta_relu[delta_relu<0] = 0 grads[‘W1‘] = np.dot(X.transpose(), dH1*delta_relu)+ reg*W1 # D*H grads[‘b1‘] = np.sum(dH1*delta_relu, axis = 0) ############################################################################# # END OF YOUR CODE # #############################################################################

接下来,打开classifier_trainer.py,里面是一个名为ClassifierTrainer的class,是对分类器的训练过程。

import numpy as np class ClassifierTrainer(object): """ The trainer class performs SGD with momentum on a cost function """ def __init__(self): self.step_cache = {} # for storing velocities in momentum update def train(self, X, y, X_val, y_val, model, loss_function, reg=0.0, learning_rate=1e-2, momentum=0, learning_rate_decay=0.95, update=‘momentum‘, sample_batches=True, num_epochs=30, batch_size=100, acc_frequency=None, verbose=False): """ Optimize the parameters of a model to minimize a loss function. We use training data X and y to compute the loss and gradients, and periodically check the accuracy on the validation set. Inputs: - X: Array of training data; each X[i] is a training sample. - y: Vector of training labels; y[i] gives the label for X[i]. - X_val: Array of validation data - y_val: Vector of validation labels - model: Dictionary that maps parameter names to parameter values. Each parameter value is a numpy array. - loss_function: A function that can be called in the following ways: scores = loss_function(X, model, reg=reg) loss, grads = loss_function(X, model, y, reg=reg) - reg: Regularization strength. This will be passed to the loss function. - learning_rate: Initial learning rate to use. - momentum: Parameter to use for momentum updates. - learning_rate_decay: The learning rate is multiplied by this after each epoch. - update: The update rule to use. One of ‘sgd‘, ‘momentum‘, or ‘rmsprop‘. - sample_batches: If True, use a minibatch of data for each parameter update (stochastic gradient descent); if False, use the entire training set for each parameter update (gradient descent). - num_epochs: The number of epochs to take over the training data. - batch_size: The number of training samples to use at each iteration. - acc_frequency: If set to an integer, we compute the training and validation set error after every acc_frequency iterations. - verbose: If True, print status after each epoch. Returns a tuple of: - best_model: The model that got the highest validation accuracy during training. - loss_history: List containing the value of the loss function at each iteration. - train_acc_history: List storing the training set accuracy at each epoch. - val_acc_history: List storing the validation set accuracy at each epoch. """ N = X.shape[0] if sample_batches: iterations_per_epoch = N / batch_size # using SGD else: iterations_per_epoch = 1 # using GD num_iters = num_epochs * iterations_per_epoch epoch = 0 best_val_acc = 0.0 best_model = {} loss_history = [] train_acc_history = [] val_acc_history = [] for it in xrange(num_iters): if it % 10 == 0: print ‘starting iteration ‘, it # get batch of data if sample_batches: batch_mask = np.random.choice(N, batch_size) X_batch = X[batch_mask] y_batch = y[batch_mask] else: # no SGD used, full gradient descent X_batch = X y_batch = y # evaluate cost and gradient cost, grads = loss_function(X_batch, model, y_batch, reg) loss_history.append(cost) # perform a parameter update for p in model: # compute the parameter step if update == ‘sgd‘: dx = -learning_rate * grads[p] elif update == ‘momentum‘: if not p in self.step_cache: self.step_cache[p] = np.zeros(grads[p].shape) #dx = np.zeros_like(grads[p]) # you can remove this after ##################################################################### # TODO: implement the momentum update formula and store the step # # update into variable dx. You should use the variable # # step_cache[p] and the momentum strength is stored in momentum. # # Don‘t forget to also update the step_cache[p]. # ##################################################################### dx = momentum * self.step_cache[p] - learning_rate*grads[p] self.step_cache[p] = dx ##################################################################### # END OF YOUR CODE # ##################################################################### elif update == ‘rmsprop‘: decay_rate = 0.99 # you could also make this an option if not p in self.step_cache: self.step_cache[p] = np.zeros(grads[p].shape) #dx = np.zeros_like(grads[p]) # you can remove this after ##################################################################### # TODO: implement the RMSProp update and store the parameter update # # dx. Don‘t forget to also update step_cache[p]. Use smoothing 1e-8 # ##################################################################### self.step_cache[p] = decay_rate * self.step_cache[p] + (1 - decay_rate)*grads[p]*grads[p] rms = np.sqrt(self.step_cache[p] + 1e-8) dx = - learning_rate*grads[p]/rms ##################################################################### # END OF YOUR CODE # ##################################################################### else: raise ValueError(‘Unrecognized update type "%s"‘ % update) # update the parameters model[p] += dx # every epoch perform an evaluation on the validation set first_it = (it == 0) epoch_end = (it + 1) % iterations_per_epoch == 0 acc_check = (acc_frequency is not None and it % acc_frequency == 0) if first_it or epoch_end or acc_check: if it > 0 and epoch_end: # decay the learning rate learning_rate *= learning_rate_decay epoch += 1 # evaluate train accuracy if N > 1000: train_mask = np.random.choice(N, 1000) X_train_subset = X[train_mask] y_train_subset = y[train_mask] else: X_train_subset = X y_train_subset = y scores_train = loss_function(X_train_subset, model) y_pred_train = np.argmax(scores_train, axis=1) train_acc = np.mean(y_pred_train == y_train_subset) train_acc_history.append(train_acc) # evaluate val accuracy scores_val = loss_function(X_val, model) y_pred_val = np.argmax(scores_val, axis=1) val_acc = np.mean(y_pred_val == y_val) val_acc_history.append(val_acc) # keep track of the best model based on validation accuracy if val_acc > best_val_acc: # make a copy of the model best_val_acc = val_acc best_model = {} for p in model: best_model[p] = model[p].copy() # print progress if needed if verbose: print (‘Finished epoch %d / %d: cost %f, train: %f, val %f, lr %e‘ % (epoch, num_epochs, cost, train_acc, val_acc, learning_rate)) if verbose: print ‘finished optimization. best validation accuracy: %f‘ % (best_val_acc, ) # return the best model and the training history statistics return best_model, loss_history, train_acc_history, val_acc_history

这文件里面,其实主要就一个函数:

def train(self, X, y, X_val, y_val, model, loss_function, reg=0.0, learning_rate=1e-2, momentum=0, learning_rate_decay=0.95, update=‘momentum‘, sample_batches=True, num_epochs=30, batch_size=100, acc_frequency=None, verbose=False):

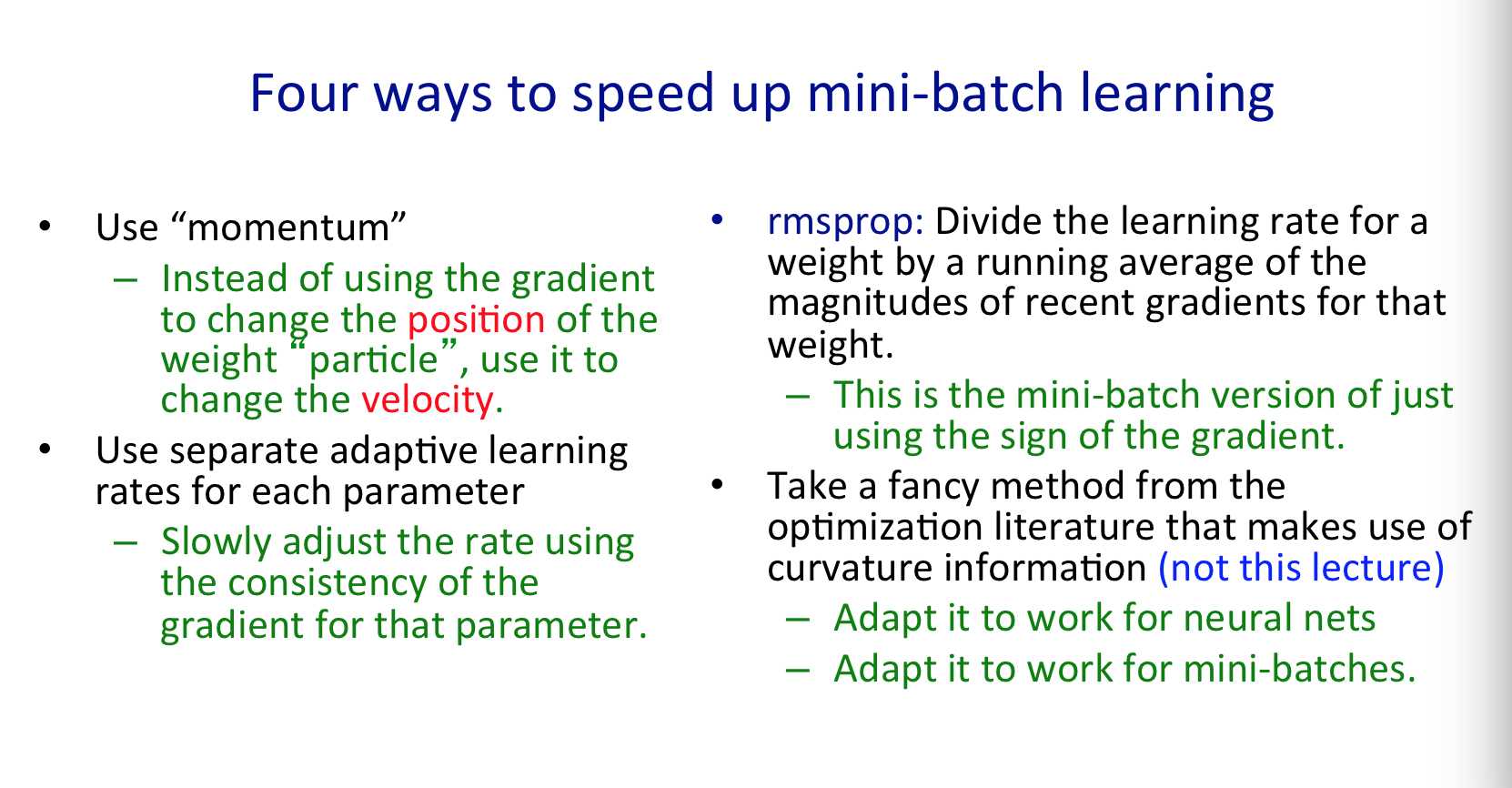

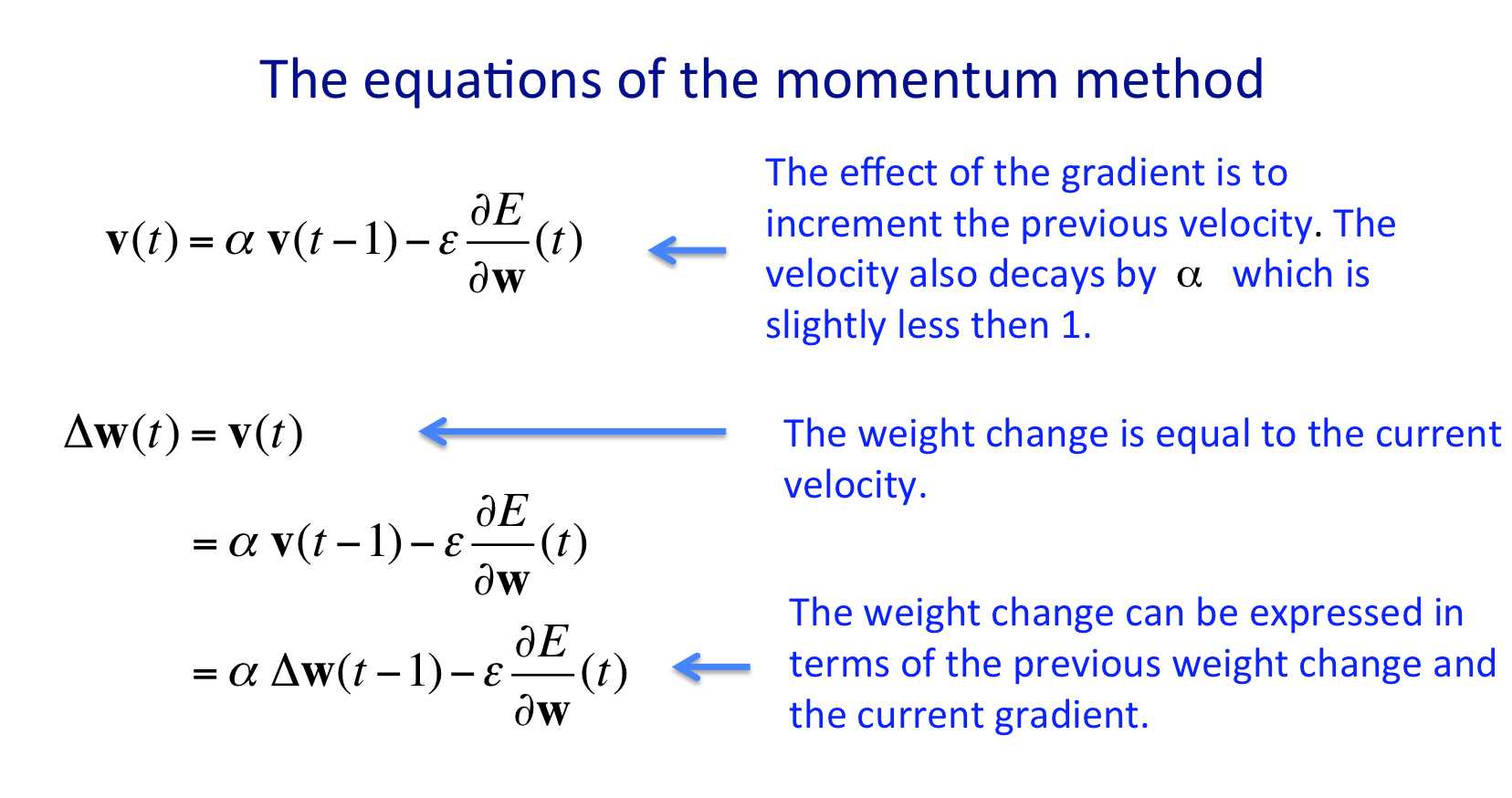

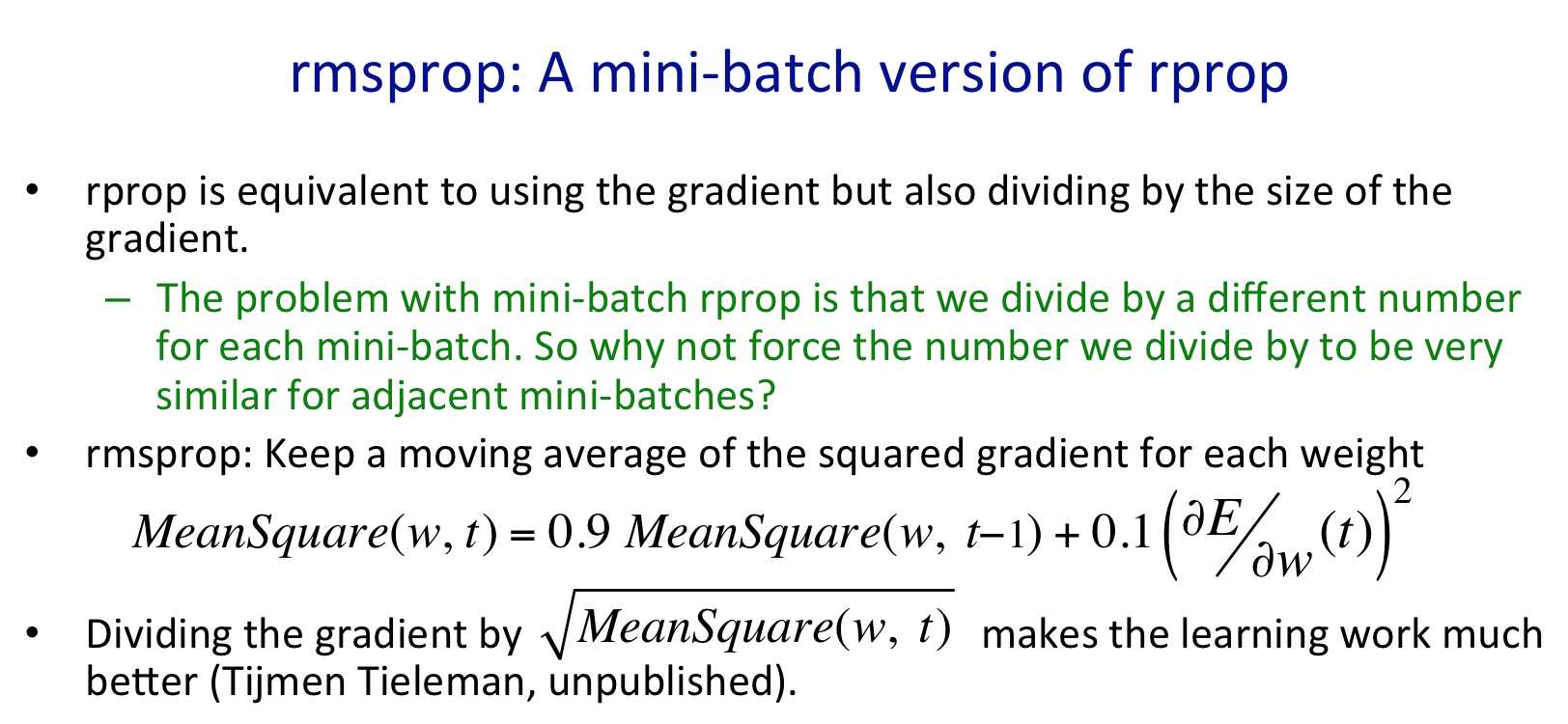

这里的train的输入参数里面除了数据与参数以外,最重要的就是模型model与损失函数loss_function。由于不同的网络结构会有五花八门的损失函数及其梯度形式,因此剥离出来,这样分类器的训练只需要简单的进行梯度迭代即可。这里需要实现两种方式:momentum与rmsprop

# perform a parameter update for p in model: # compute the parameter step if update == ‘sgd‘: dx = -learning_rate * grads[p] elif update == ‘momentum‘: if not p in self.step_cache: self.step_cache[p] = np.zeros(grads[p].shape) #dx = np.zeros_like(grads[p]) # you can remove this after ##################################################################### # TODO: implement the momentum update formula and store the step # # update into variable dx. You should use the variable # # step_cache[p] and the momentum strength is stored in momentum. # # Don‘t forget to also update the step_cache[p]. # ##################################################################### dx = momentum * self.step_cache[p] - learning_rate*grads[p] self.step_cache[p] = dx ##################################################################### # END OF YOUR CODE # ##################################################################### elif update == ‘rmsprop‘: decay_rate = 0.99 # you could also make this an option if not p in self.step_cache: self.step_cache[p] = np.zeros(grads[p].shape) #dx = np.zeros_like(grads[p]) # you can remove this after ##################################################################### # TODO: implement the RMSProp update and store the parameter update # # dx. Don‘t forget to also update step_cache[p]. Use smoothing 1e-8 # ##################################################################### self.step_cache[p] = decay_rate * self.step_cache[p] + (1 - decay_rate)*grads[p]*grads[p] rms = np.sqrt(self.step_cache[p] + 1e-8) dx = - learning_rate*grads[p]/rms ##################################################################### # END OF YOUR CODE # ##################################################################### else: raise ValueError(‘Unrecognized update type "%s"‘ % update)

关于学习率,参见Hinton的一页slides:

momentum方法中,不是沿着最速下降方向走,而是会在上一次的方向上结合梯度进行调整,它的特点是 consistent gradient

Q2: Modular Neural Network

在layers.py文件中,将要完成卷积神经网络的每一层的forward pass与backward pass,包括:affine layer,relu layer,convolution layer, max pool layer。

affine layer:

def affine_forward(x, w, b): """ Computes the forward pass for an affine (fully-connected) layer. The input x has shape (N, d_1, ..., d_k) where x[i] is the ith input. We multiply this against a weight matrix of shape (D, M) where D = \prod_i d_i Inputs: x - Input data, of shape (N, d_1, ..., d_k) w - Weights, of shape (D, M) b - Biases, of shape (M,) Returns a tuple of: - out: output, of shape (N, M) - cache: (x, w, b) """ out = None ############################################################################# # TODO: Implement the affine forward pass. Store the result in out. You # # will need to reshape the input into rows. # ############################################################################# N = x.shape[0] out = np.dot(x.reshape(N, -1), w) + b ############################################################################# # END OF YOUR CODE # ############################################################################# cache = (x, w, b) return out, cache def affine_backward(dout, cache): """ Computes the backward pass for an affine layer. Inputs: - dout: Upstream derivative, of shape (N, M) - cache: Tuple of: - x: Input data, of shape (N, d_1, ... d_k) - w: Weights, of shape (D, M) Returns a tuple of: - dx: Gradient with respect to x, of shape (N, d1, ..., d_k) - dw: Gradient with respect to w, of shape (D, M) - db: Gradient with respect to b, of shape (M,) """ x, w, b = cache dx, dw, db = None, None, None ############################################################################# # TODO: Implement the affine backward pass. # ############################################################################# dim = x.shape dx = np.dot(dout, w.transpose()).reshape(dim) dw = np.dot((x.reshape(dim[0], -1)).transpose(), dout) db = np.sum(dout, axis=0) ############################################################################# # END OF YOUR CODE # ############################################################################# return dx, dw, db

relu layer:

def relu_forward(x): """ Computes the forward pass for a layer of rectified linear units (ReLUs). Input: - x: Inputs, of any shape Returns a tuple of: - out: Output, of the same shape as x - cache: x """ out = None ############################################################################# # TODO: Implement the ReLU forward pass. # ############################################################################# out = np.maximum(0, x) ############################################################################# # END OF YOUR CODE # ############################################################################# cache = x return out, cache def relu_backward(dout, cache): """ Computes the backward pass for a layer of rectified linear units (ReLUs). Input: - dout: Upstream derivatives, of any shape - cache: Input x, of same shape as dout Returns: - dx: Gradient with respect to x """ dx, x = None, cache ############################################################################# # TODO: Implement the ReLU backward pass. # ############################################################################# dx = dout dx[cache<0] = 0 ############################################################################# # END OF YOUR CODE # ############################################################################# return dx

convolution layer:

注意到,$Y \in \mathcal{R}^{(out_H \cdot out_W) \times K}$, $ F \in \mathcal{R}^{(HH \cdot WW) \times K}$

def conv_forward_naive(x, w, b, conv_param): """ A naive implementation of the forward pass for a convolutional layer. The input consists of N data points, each with C channels, height H and width W. We convolve each input with F different filters, where each filter spans all C channels and has height HH and width HH. Input: - x: Input data of shape (N, C, H, W) - w: Filter weights of shape (F, C, HH, WW) - b: Biases, of shape (F,) - conv_param: A dictionary with the following keys: - ‘stride‘: The number of pixels between adjacent receptive fields in the horizontal and vertical directions. - ‘pad‘: The number of pixels that will be used to zero-pad the input. Returns a tuple of: - out: Output data. - cache: (x, w, b, conv_param) """ out = None ############################################################################# # TODO: Implement the convolutional forward pass. # # Hint: you can use the function np.pad for padding. # ############################################################################# N, C, H, W = x.shape F, _, HH, WW = w.shape stride = conv_param[‘stride‘] p = conv_param[‘pad‘] x_padded = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)), mode=‘constant‘) # assign memory for the convolved features out_H = (H + p*2 - HH)/stride+1 out_W = (W + p*2 - WW)/stride+1 out = np.zeros((N, F, out_H, out_W)) for img_num in xrange(N): for ftr_num in xrange(F): conv_img = np.zeros((out_H, out_W)) for cnl_num in xrange(C): img = x_padded[img_num, cnl_num, :, :] flt = w[ftr_num, cnl_num, :, :] for conv_row in xrange(out_H): row_start = conv_row * stride row_end = row_start + HH for conv_col in xrange(out_W): col_start = conv_col * stride col_end = col_start + WW conv_img[conv_row, conv_col] += np.sum(img[row_start:row_end, col_start:col_end]*flt) out[img_num, ftr_num, :, :] = conv_img + b[ftr_num] ############################################################################# # END OF YOUR CODE # ############################################################################# cache = (x, w, b, conv_param) return out, cache def conv_backward_naive(dout, cache): """ A naive implementation of the backward pass for a convolutional layer. Inputs: - dout: Upstream derivatives. - cache: A tuple of (x, w, b, conv_param) as in conv_forward_naive Returns a tuple of: - dx: Gradient with respect to x - dw: Gradient with respect to w - db: Gradient with respect to b """ dx, dw, db = None, None, None ############################################################################# # TODO: Implement the convolutional backward pass. # ############################################################################# x, w, b, conv_param = cache # unpack the cache N, C, H, W = x.shape F, _, HH, WW = w.shape _, _, out_H, out_W = dout.shape stride = conv_param[‘stride‘] p = conv_param[‘pad‘] x_padded = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)), mode=‘constant‘) # dw => F*C*HH*WW dw = np.zeros(w.shape) for ftr_num in xrange(F): for cnl_num in xrange(C): w_hat = np.zeros((HH, WW)) for img_num in xrange(N): img = x_padded[img_num, cnl_num, :, :] delta = dout[img_num, ftr_num, :, :] # using delta to element-wisely multiply a sampled input img in size out_H*out_W for w_row in xrange(HH): for w_col in xrange(WW): tmp = img[w_row:w_row + out_H*stride:stride, w_col:w_col + out_W*stride:stride] w_hat[w_row, w_col] += np.sum(tmp*delta) dw[ftr_num, cnl_num, :, :] += w_hat # dx => N*C*H*W dx = np.zeros(x.shape) for img_num in xrange(N): for cnl_num in xrange(C): for ftr_num in xrange(F): x_hat = np.dot(dout[img_num, ftr_num, :, :].reshape(-1, 1), w[ftr_num, cnl_num, : ,:].reshape(1, -1)) x_hat = x_hat.reshape(out_H, out_W, HH, WW) #print x_hat.shape dx_hat = np.zeros((H+2*p, W+2*p)) # temporally store the padded input #print dx_hat.shape for conv_row in xrange(out_H): row_start = conv_row * stride row_end = row_start + HH for conv_col in xrange(out_W): col_start = conv_col * stride col_end = col_start + WW dx_hat[row_start:row_end, col_start:col_end] += x_hat[conv_row, conv_col, :, :] dx[img_num, cnl_num, :, :] += dx_hat[p:-p, p:-p] db = np.sum(dout, axis = (0, 2, 3)) # F*1 size ############################################################################# # END OF YOUR CODE # ############################################################################# return dx, dw, db

max pool layer:

当然计算的时候,只需要针对S(x)的值,将dout累计回相应的input位置即可。

def max_pool_forward_naive(x, pool_param): """ A naive implementation of the forward pass for a max pooling layer. Inputs: - x: Input data, of shape (N, C, H, W) - pool_param: dictionary with the following keys: - ‘pool_height‘: The height of each pooling region - ‘pool_width‘: The width of each pooling region - ‘stride‘: The distance between adjacent pooling regions Returns a tuple of: - out: Output data - cache: (x, pool_param) """ out = None ############################################################################# # TODO: Implement the max pooling forward pass # ############################################################################# N, C, H, W = x.shape pool_height = pool_param[‘pool_height‘] pool_width = pool_param[‘pool_width‘] stride = pool_param[‘stride‘] # assign memory for the max_pooling features out_H = (H-pool_height)/stride + 1 out_W = (W-pool_width)/stride + 1 out = np.zeros((N, C, out_H, out_W)) for img_num in xrange(N): for cnl_num in xrange(C): for pool_row in xrange(out_H): row_start = pool_row * stride row_end = row_start + pool_height for pool_col in xrange(out_W): col_start = pool_col * stride col_end = col_start + pool_width patch = x[img_num, cnl_num, row_start:row_end, col_start:col_end] out[img_num, cnl_num, pool_row, pool_col] = patch.max() ############################################################################# # END OF YOUR CODE # ############################################################################# cache = (x, pool_param) return out, cache def max_pool_backward_naive(dout, cache): """ A naive implementation of the backward pass for a max pooling layer. Inputs: - dout: Upstream derivatives - cache: A tuple of (x, pool_param) as in the forward pass. Returns: - dx: Gradient with respect to x """ dx = None ############################################################################# # TODO: Implement the max pooling backward pass # ############################################################################# x, pool_param = cache N, C, H, W = x.shape pool_height = pool_param[‘pool_height‘] pool_width = pool_param[‘pool_width‘] stride = pool_param[‘stride‘] _, _, out_H, out_W = dout.shape dx = np.zeros_like(x) for img_num in xrange(N): for cnl_num in xrange(C): # processing for each element in dout for pool_row in xrange(out_H): row_start = pool_row * stride row_end = row_start + pool_height for pool_col in xrange(out_W): col_start = pool_col * stride col_end = col_start + pool_width patch = x[img_num, cnl_num, row_start:row_end, col_start:col_end] ind = np.unravel_index(patch.argmax(), patch.shape) # ind is the index of the max value in patch dx[img_num, cnl_num, row_start+ind[0], col_start+ind[1]] += dout[img_num, cnl_num, pool_row, pool_col] ############################################################################# # END OF YOUR CODE # ############################################################################# return dx

Q3: ConvNet on CIFAR-10

第三部分,就是利用第二部分的模块构建两层CNN,并进行实验。代码:

import numpy as np from cs231n.layers import * from cs231n.fast_layers import * from cs231n.layer_utils import * def two_layer_convnet(X, model, y=None, reg=0.0): """ Compute the loss and gradient for a simple two-layer ConvNet. The architecture is conv-relu-pool-affine-softmax, where the conv layer uses stride-1 "same" convolutions to preserve the input size; the pool layer uses non-overlapping 2x2 pooling regions. We use L2 regularization on both the convolutional layer weights and the affine layer weights. Inputs: - X: Input data, of shape (N, C, H, W) - model: Dictionary mapping parameter names to parameters. A two-layer Convnet expects the model to have the following parameters: - W1, b1: Weights and biases for the convolutional layer - W2, b2: Weights and biases for the affine layer - y: Vector of labels of shape (N,). y[i] gives the label for the point X[i]. - reg: Regularization strength. Returns: If y is None, then returns: - scores: Matrix of scores, where scores[i, c] is the classification score for the ith input and class c. If y is not None, then returns a tuple of: - loss: Scalar value giving the loss. - grads: Dictionary with the same keys as model, mapping parameter names to their gradients. """ # Unpack weights W1, b1, W2, b2 = model[‘W1‘], model[‘b1‘], model[‘W2‘], model[‘b2‘] N, C, H, W = X.shape # We assume that the convolution is "same", so that the data has the same # height and width after performing the convolution. We can then use the # size of the filter to figure out the padding. conv_filter_height, conv_filter_width = W1.shape[2:] assert conv_filter_height == conv_filter_width, ‘Conv filter must be square‘ assert conv_filter_height % 2 == 1, ‘Conv filter height must be odd‘ assert conv_filter_width % 2 == 1, ‘Conv filter width must be odd‘ conv_param = {‘stride‘: 1, ‘pad‘: (conv_filter_height - 1) / 2} pool_param = {‘pool_height‘: 2, ‘pool_width‘: 2, ‘stride‘: 2} # Compute the forward pass a1, cache1 = conv_relu_pool_forward(X, W1, b1, conv_param, pool_param) scores, cache2 = affine_forward(a1, W2, b2) if y is None: return scores # Compute the backward pass data_loss, dscores = softmax_loss(scores, y) # Compute the gradients using a backward pass da1, dW2, db2 = affine_backward(dscores, cache2) dX, dW1, db1 = conv_relu_pool_backward(da1, cache1) # Add regularization dW1 += reg * W1 dW2 += reg * W2 reg_loss = 0.5 * reg * sum(np.sum(W * W) for W in [W1, W2]) loss = data_loss + reg_loss grads = {‘W1‘: dW1, ‘b1‘: db1, ‘W2‘: dW2, ‘b2‘: db2} return loss, grads def init_two_layer_convnet(weight_scale=1e-3, bias_scale=0, input_shape=(3, 32, 32), num_classes=10, num_filters=32, filter_size=5): """ Initialize the weights for a two-layer ConvNet. Inputs: - weight_scale: Scale at which weights are initialized. Default 1e-3. - bias_scale: Scale at which biases are initialized. Default is 0. - input_shape: Tuple giving the input shape to the network; default is (3, 32, 32) for CIFAR-10. - num_classes: The number of classes for this network. Default is 10 (for CIFAR-10) - num_filters: The number of filters to use in the convolutional layer. - filter_size: The width and height for convolutional filters. We assume that all convolutions are "same", so we pick padding to ensure that data has the same height and width after convolution. This means that the filter size must be odd. Returns: A dictionary mapping parameter names to numpy arrays containing: - W1, b1: Weights and biases for the convolutional layer - W2, b2: Weights and biases for the fully-connected layer. """ C, H, W = input_shape assert filter_size % 2 == 1, ‘Filter size must be odd; got %d‘ % filter_size model = {} model[‘W1‘] = weight_scale * np.random.randn(num_filters, C, filter_size, filter_size) model[‘b1‘] = bias_scale * np.random.randn(num_filters) model[‘W2‘] = weight_scale * np.random.randn(num_filters * H * W / 4, num_classes) model[‘b2‘] = bias_scale * np.random.randn(num_classes) return model pass

标签:

原文地址:http://www.cnblogs.com/yyjiang/p/convolutional_neural_network_layer.html