Download Druid

Download URL

http://static.druid.io/artifacts/releases/druid-services-0.6.145-bin.tar.gz

Extract the archive

tar -zxvf druid-services-*-bin.tar.gz

cd druid-services-*

External dependencies

1. A "deep" storage, which acts as a backup store for the data

2. MySQL

Set up MySQL

mysql -u root

GRANT ALL ON druid.* TO 'druid'@'localhost' IDENTIFIED BY 'diurd';

CREATE DATABASE druid;

3. Apache ZooKeeper

curl http://apache.osuosl.org/zookeeper/zookeeper-3.4.5/zookeeper-3.4.5.tar.gz -o zookeeper-3.4.5.tar.gz

tar xzf zookeeper-3.4.5.tar.gz

cd zookeeper-3.4.5

cp conf/zoo_sample.cfg conf/zoo.cfg

./bin/zkServer.sh start

cd ..
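
Before moving on, it can help to confirm that ZooKeeper is actually answering. The sketch below assumes ZooKeeper listens on its default client port 2181 (the value in zoo_sample.cfg) and that nc (netcat) is installed; zk_is_ok is a hypothetical helper name, not part of ZooKeeper. It sends the built-in four-letter command ruok, to which a healthy server replies imok.

```shell
# Hypothetical helper: succeeds if ZooKeeper replies "imok" to "ruok".
# Assumes nc (netcat) is available; host and port default to localhost:2181.
zk_is_ok() {
  host="${1:-localhost}"
  port="${2:-2181}"
  # -w 2 gives up after two seconds; errors are silenced so that a
  # closed port or a missing nc simply yields an empty reply.
  reply="$(printf 'ruok' | nc -w 2 "$host" "$port" 2>/dev/null || true)"
  [ "$reply" = "imok" ]
}

# Usage:
# zk_is_ok && echo "ZooKeeper is up" || echo "ZooKeeper is not responding"
```

If the check fails, the log written by zkServer.sh is usually the first place to look.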

Cluster

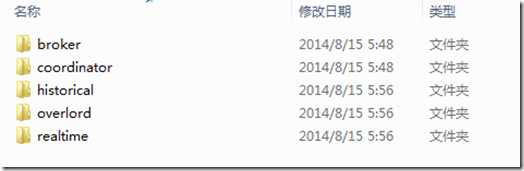

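Start some nodes, then load data. First, make sure the config folder contains a directory for each of the four nodes (coordinator, historical, broker, realtime).

Once MySQL and ZooKeeper are set up, five more steps complete the Druid cluster: starting four nodes and loading data.

1: Start the coordinator node

The coordinator node handles load balancing for Druid by distributing segments across the historical nodes.

Configuration file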

config/coordinator/runtime.properties

druid.host=localhost

druid.service=coordinator

druid.port=8082

druid.zk.service.host=localhost

druid.db.connector.connectURI=jdbc\:mysql\://localhost\:3306/druid

druid.db.connector.user=druid

druid.db.connector.password=diurd

druid.coordinator.startDelay=PT70s

Start command

java -Xmx256m -Duser.timezone=UTC -Dfile.encoding=UTF-8 -classpath lib/*:config/coordinator io.druid.cli.Main server coordinator &

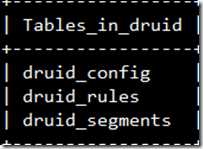

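Once started, the coordinator creates three tables in the druid database, one of which is druid_segments, the table that step 4 inserts into.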
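
To confirm that the coordinator created its tables, the database can be inspected from the shell. This is a minimal sketch, assuming the mysql command-line client is installed and the druid/diurd account created earlier exists; list_druid_tables and has_table are hypothetical helper names. It checks only for druid_segments, the one table name that appears in this walkthrough.

```shell
# Hypothetical helper: print the tables of the druid database, one per line.
# -N drops the column header, -B selects plain tab-separated batch output.
list_druid_tables() {
  mysql -u druid -pdiurd -N -B -e 'SHOW TABLES' druid
}

# Hypothetical helper: succeed if the table name given as $1 appears
# as a whole line on stdin.
has_table() {
  grep -qxF -- "$1"
}

# Usage:
# list_druid_tables | has_table druid_segments && echo "druid_segments exists"
```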

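2: Start the historical node

Historical nodes are the core of the cluster: they load data and make it available for queries.

Configuration file (config/historical/runtime.properties)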

druid.host=localhost

druid.service=historical

druid.port=8081

druid.zk.service.host=localhost

druid.extensions.coordinates=["io.druid.extensions:druid-s3-extensions:0.6.143"]

# Dummy read only AWS account (used to download example data)

druid.s3.secretKey=QyyfVZ7llSiRg6Qcrql1eEUG7buFpAK6T6engr1b

druid.s3.accessKey=AKIAIMKECRUYKDQGR6YQ

druid.server.maxSize=10000000000

# Change these to make Druid faster

druid.processing.buffer.sizeBytes=100000000

druid.processing.numThreads=1

druid.segmentCache.locations=[{"path": "/tmp/druid/indexCache", "maxSize"\: 10000000000}]

Start command

java -Xmx256m -Duser.timezone=UTC -Dfile.encoding=UTF-8 -classpath lib/*:config/historical io.druid.cli.Main server historical &

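3: Start the broker node

The broker routes queries to the historical and realtime nodes and returns the results.

Configuration file (config/broker/runtime.properties)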

druid.host=localhost

druid.service=broker

druid.port=8080

druid.zk.service.host=localhost

Start command

java -Xmx256m -Duser.timezone=UTC -Dfile.encoding=UTF-8 -classpath lib/*:config/broker io.druid.cli.Main server broker &
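
The start commands for the coordinator, historical, and broker nodes differ only in the node name, so they can be generated by one small function. This is a sketch; druid_start_cmd is a hypothetical helper name, and it only prints the command rather than running it. The realtime node needs an extra -Ddruid.realtime.specFile option, which this helper does not cover.

```shell
# Hypothetical helper: print the java command line for a given Druid node type.
# The printed command is meant to be run from the extracted druid-services
# directory so that lib/ and config/ resolve.
druid_start_cmd() {
  node="$1"
  printf '%s\n' "java -Xmx256m -Duser.timezone=UTC -Dfile.encoding=UTF-8 -classpath lib/*:config/${node} io.druid.cli.Main server ${node}"
}

# Usage: print the command for review before running it
# druid_start_cmd broker
```

Printing instead of executing keeps the helper free of side effects, so the command can be checked before it is pasted into a terminal or a startup script.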

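4: Load data

Because this is a test setup, the segment metadata has to be inserted by hand; in a production cluster it is written to the database automatically.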

use druid;

INSERT INTO druid_segments (id, dataSource, created_date, start, end, partitioned, version, used, payload) VALUES ('wikipedia_2013-08-01T00:00:00.000Z_2013-08-02T00:00:00.000Z_2013-08-08T21:22:48.989Z', 'wikipedia', '2013-08-08T21:26:23.799Z', '2013-08-01T00:00:00.000Z', '2013-08-02T00:00:00.000Z', '0', '2013-08-08T21:22:48.989Z', '1', '{\"dataSource\":\"wikipedia\",\"interval\":\"2013-08-01T00:00:00.000Z/2013-08-02T00:00:00.000Z\",\"version\":\"2013-08-08T21:22:48.989Z\",\"loadSpec\":{\"type\":\"s3_zip\",\"bucket\":\"static.druid.io\",\"key\":\"data/segments/wikipedia/20130801T000000.000Z_20130802T000000.000Z/2013-08-08T21_22_48.989Z/0/index.zip\"},\"dimensions\":\"dma_code,continent_code,geo,area_code,robot,country_name,network,city,namespace,anonymous,unpatrolled,page,postal_code,language,newpage,user,region_lookup\",\"metrics\":\"count,delta,variation,added,deleted\",\"shardSpec\":{\"type\":\"none\"},\"binaryVersion\":9,\"size\":24664730,\"identifier\":\"wikipedia_2013-08-01T00:00:00.000Z_2013-08-02T00:00:00.000Z_2013-08-08T21:22:48.989Z\"}');

5: Start the realtime node

Configuration file (config/realtime/runtime.properties)

druid.host=localhost

druid.service=realtime

druid.port=8083

druid.zk.service.host=localhost

druid.extensions.coordinates=["io.druid.extensions:druid-examples:0.6.143","io.druid.extensions:druid-kafka-seven:0.6.143"]

# Change this config to db to hand off to the rest of the Druid cluster

druid.publish.type=noop

# These configs are only required for real hand off

# druid.db.connector.connectURI=jdbc\:mysql\://localhost\:3306/druid

# druid.db.connector.user=druid

# druid.db.connector.password=diurd

druid.processing.buffer.sizeBytes=100000000

druid.processing.numThreads=1

druid.monitoring.monitors=["io.druid.segment.realtime.RealtimeMetricsMonitor"]

Start command

java -Xmx256m -Duser.timezone=UTC -Dfile.encoding=UTF-8 -Ddruid.realtime.specFile=examples/wikipedia/wikipedia_realtime.spec -classpath lib/*:config/realtime io.druid.cli.Main server realtime &

Finally, the data can be queried.
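
As an illustration of the query step, the sketch below posts a timeBoundary query to the broker configured above on port 8080. It assumes curl is installed and that the broker accepts JSON queries at /druid/v2/; time_boundary_query and druid_query are hypothetical helper names.

```shell
# Hypothetical helper: build a timeBoundary query, which asks for the
# earliest and latest timestamps of a data source.
time_boundary_query() {
  printf '{"queryType":"timeBoundary","dataSource":"%s"}' "$1"
}

# Hypothetical helper: POST a JSON query to the broker
# (localhost:8080 in this setup) and print the response.
druid_query() {
  curl -s -X POST 'http://localhost:8080/druid/v2/?pretty' \
    -H 'Content-Type: application/json' \
    -d "$1"
}

# Usage:
# druid_query "$(time_boundary_query wikipedia)"
```

The wikipedia data source is the one inserted in step 4. If the historical node is still downloading the segment, the response may be empty at first.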

Original article: http://www.cnblogs.com/hanchanghong/p/4402700.html