
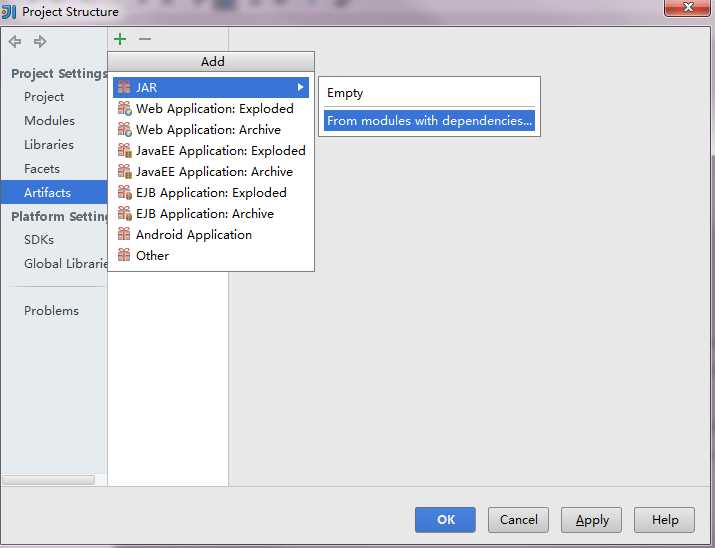

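```scala
import scala.math.random

import org.apache.spark.{SparkConf, SparkContext}

/** Computes an approximation to pi */
object SparkPi {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
      .setAppName("Spark Pi")
      // Standalone master of the remote cluster
      .setMaster("spark://192.168.1.88:7077")
      // IP of the machine running IDEA (the driver); executors connect back to this address
      .set("spark.driver.host", "192.168.1.129")
      // Jar produced by IDEA's artifact build; shipped to the executors so they can load
      // this job's classes (including the closure passed to map). Rebuild it after code changes.
      .setJars(List("D:\\IdeaProjects\\scalalearn\\out\\artifacts\\scalalearn\\scalalearn.jar"))
    val sc = new SparkContext(conf)

    // Number of partitions: first program argument, default 2
    val slices = if (args.length > 0) args(0).toInt else 2
    val n = 100000 * slices
    // Sample n random points in the square [-1, 1) x [-1, 1) and count those inside the unit circle
    val count = sc.parallelize(1 to n, slices).map { _ =>
      val x = random * 2 - 1
      val y = random * 2 - 1
      if (x * x + y * y < 1) 1 else 0
    }.reduce(_ + _)
    // The circle covers pi/4 of the square's area
    println("Pi is roughly " + 4.0 * count / n)
    sc.stop()
  }
}
```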
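Before shipping the job to the cluster, it can help to check the estimator's math on the local machine. The sketch below restates the map/reduce from `SparkPi` with plain Scala collections and no Spark dependency. `LocalPi`, `countInside` and `estimate` are hypothetical names, and it assumes a seeded `scala.util.Random` in place of `scala.math.random` so that runs are reproducible.

```scala
import scala.util.Random

/** Hypothetical local (non-Spark) restatement of the SparkPi estimator. */
object LocalPi {
  /** Counts how many of n random points in the square [-1, 1) x [-1, 1)
    * fall strictly inside the unit circle, mirroring SparkPi's map + reduce. */
  def countInside(n: Int, rng: Random): Int =
    (1 to n).iterator.map { _ =>
      val x = rng.nextDouble() * 2 - 1 // nextDouble() is uniform in [0, 1)
      val y = rng.nextDouble() * 2 - 1
      if (x * x + y * y < 1) 1 else 0
    }.sum

  /** The circle covers pi/4 of the square, so pi is roughly 4 * inside / n.
    * Uses a seeded Random (an assumption, for reproducibility). */
  def estimate(n: Int, seed: Long): Double = {
    val rng = new Random(seed)
    4.0 * countInside(n, rng) / n
  }
}
```

For example, `LocalPi.estimate(200000, 42L)` should land close to `math.Pi`; the exact digits depend on the seed. Once the local number looks right, any discrepancy on the cluster points at configuration rather than at the algorithm.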

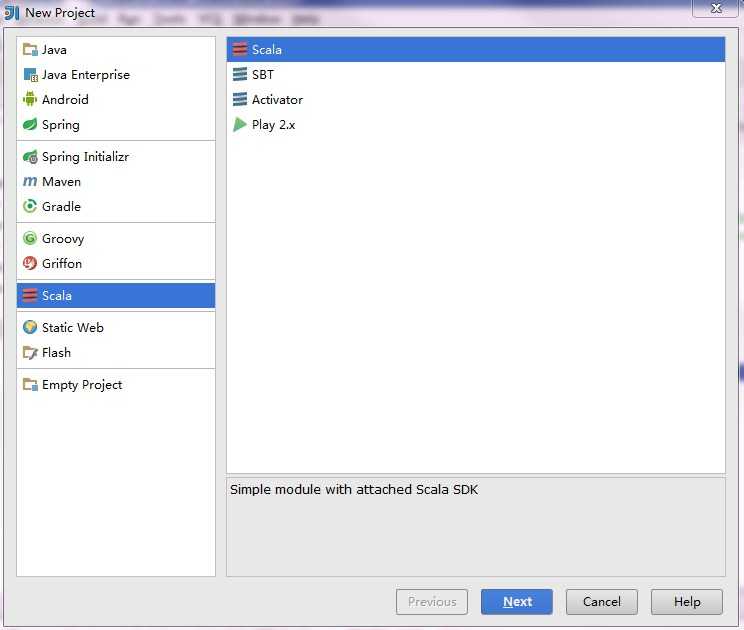

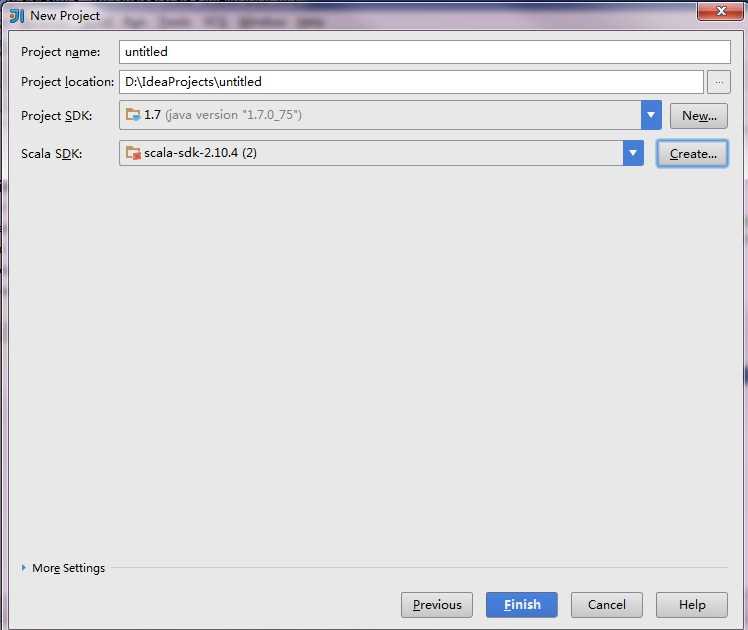

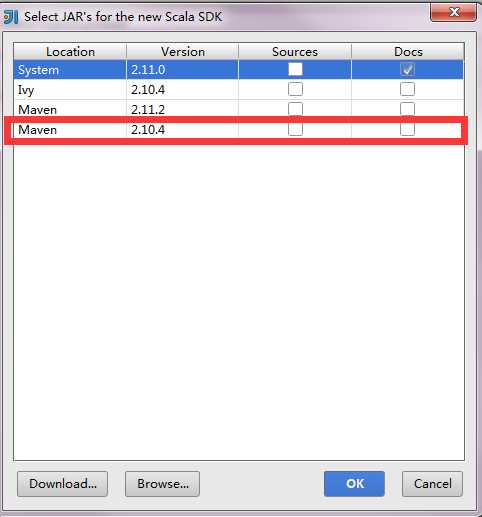
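The `SparkPi` listing above hard-codes the master URL, the driver's IP and a Windows path to the jar, so it has to be edited whenever the cluster or the development machine changes. A minimal sketch of one way around this, assuming settings are passed as program arguments of the form `spark.key=value` (a convention chosen here, not something the original post or Spark prescribes). `RemoteSubmitSettings` and `parse` are hypothetical names, and the block itself does not depend on Spark.

```scala
/** Hypothetical helper (not part of the original post or of Spark's API): collects the
  * settings SparkPi hard-codes and lets "spark.key=value" arguments override them. */
object RemoteSubmitSettings {
  // Defaults copied from the SparkPi listing; replace them with your own cluster and machine.
  val defaults: Map[String, String] = Map(
    "spark.master"      -> "spark://192.168.1.88:7077",
    "spark.driver.host" -> "192.168.1.129",
    "spark.jars"        -> "D:\\IdeaProjects\\scalalearn\\out\\artifacts\\scalalearn\\scalalearn.jar"
  )

  /** Splits args into (settings, remaining positional args). An argument counts as a
    * setting when it starts with "spark." and contains '='; it overrides the default. */
  def parse(args: Seq[String]): (Map[String, String], Seq[String]) = {
    val (pairs, positional) =
      args.partition(a => a.startsWith("spark.") && a.contains("="))
    val overrides = pairs.map { a =>
      val (key, rest) = a.span(_ != '=') // split at the first '=' only
      key -> rest.drop(1)                 // drop the '=' separator itself
    }
    (defaults ++ overrides, positional)
  }
}
```

Inside `main`, one way to wire it in is `val (settings, rest) = RemoteSubmitSettings.parse(args.toSeq)`, then `new SparkConf().setAppName("Spark Pi").setAll(settings)`, reading `slices` from `rest.headOption` instead of `args(0)`. The keys `spark.master`, `spark.driver.host` and `spark.jars` are the ones that `setMaster`, `set` and `setJars` write in the original listing. As an alternative, `new SparkConf()` already loads any `spark.*` Java system properties, so they can also be supplied as VM options in IDEA's run configuration, provided the code does not overwrite them afterwards.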

Using IDEA to develop Spark applications and submit them to a remote cluster


Original article: http://www.cnblogs.com/gaoxing/p/4414362.html