标签:

hbase region split操作的一些细节,具体split步骤很多文档都有说明,本文主要关注regionserver如何选取split point

首先推荐web ui查看hbase region分布的一个开源工具hannibal,建议用daemontool管理hannibal意外退出,自动重启,之前博文写了博文介绍如何使用daemontool管理

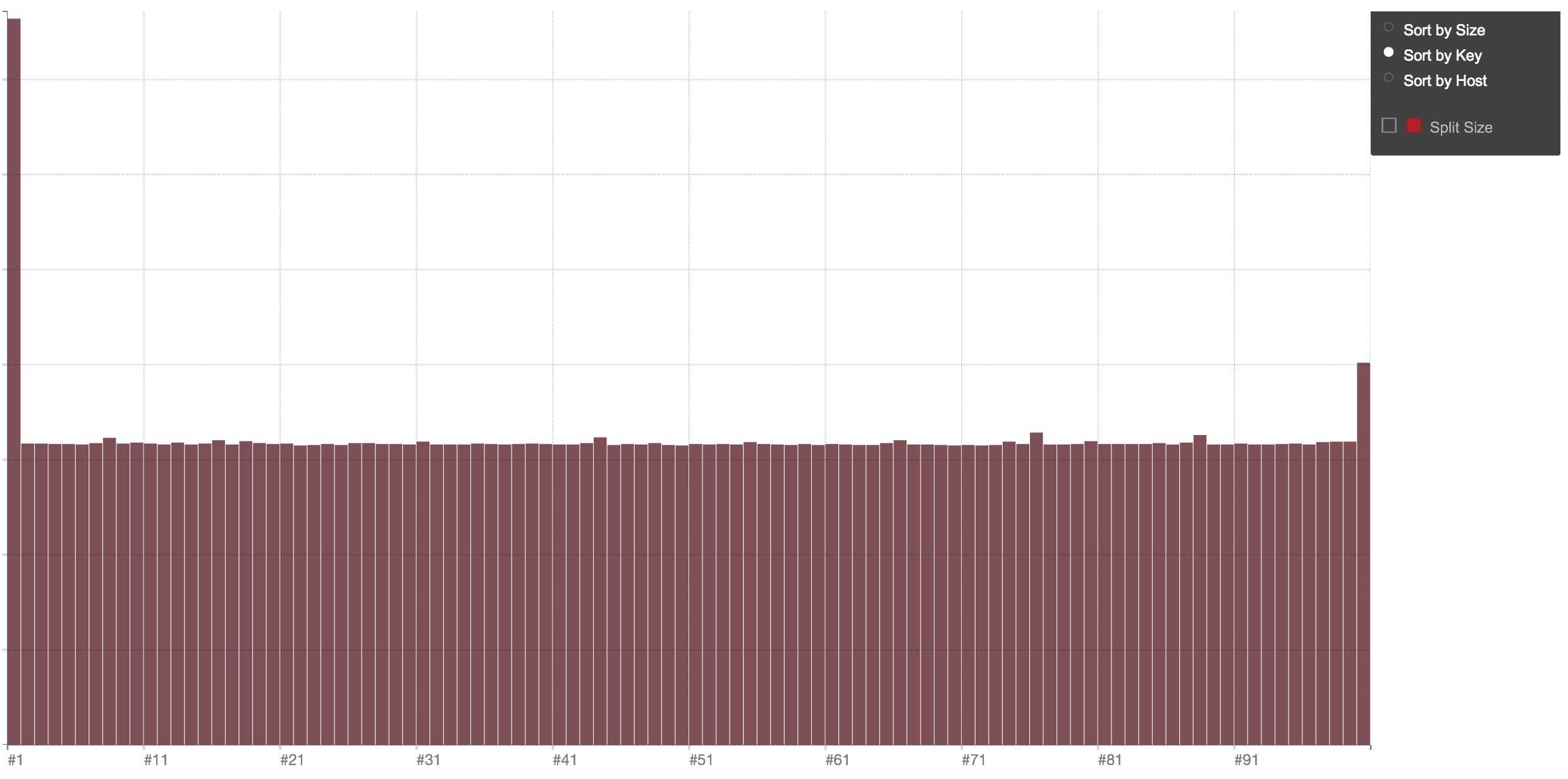

假设有一张hbase的table如下表所示,有一个region的大小比较大,可以对这个region进行手动split操作

HBase的物理存储树状图如下

Table (HBase table) Region (Regions for the table) Store (Store per ColumnFamily for each Region for the table) MemStore (MemStore for each Store for each Region for the table) StoreFile (StoreFiles for each Store for each Region for the table) Block (Blocks within a StoreFile within a Store for each Region for the table)

一种常见的分裂策略是:ConstantSizeRegionSplitPolicy,配置hbase.hregion.max.filesize是指某个store(对应一个column family)的大小

/<hdfs-dir>/<hbasetable>/<xxx(part of region-id)>/<columu-family>

RegionSplitPolicy.java Iterator i$ = stores.values().iterator(); while(i$.hasNext()) { Store s = (Store)i$.next(); byte[] splitPoint = s.getSplitPoint(); long storeSize = s.getSize(); if(splitPoint != null && largestStoreSize < storeSize) { splitPointFromLargestStore = splitPoint; largestStoreSize = storeSize; } }

Store.java public byte[] getSplitPoint() { long e = 0L; StoreFile largestSf = null; Iterator r = this.storefiles.iterator(); StoreFile midkey; while (r.hasNext()) { midkey = (StoreFile) r.next(); org.apache.hadoop.hbase.regionserver.StoreFile.Reader mk; if (midkey.isReference()) { assert false : "getSplitPoint() called on a region that can\‘t split!"; mk = null; return (byte[]) mk; } mk = midkey.getReader(); if (mk == null) { LOG.warn("Storefile " + midkey + " Reader is null"); } else { long fk = mk.length(); if (fk > e) { e = fk; largestSf = midkey; } } } org.apache.hadoop.hbase.regionserver.StoreFile.Reader r1 = largestSf.getReader(); if (r1 == null) { LOG.warn("Storefile " + largestSf + " Reader is null"); midkey = null; return (byte[]) midkey; } byte[] midkey1 = r1.midkey(); //...略 }

所以split实际上并不是完全的等分,因为split point不一定是数据分布的中位点。

参考:

标签:

原文地址:http://www.cnblogs.com/yanghuahui/p/4558910.html