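Thanks to reader Vagrant for the reminder. Until now I had only looked at the final accuracy, which is not really reliable. It is better to look at all of the information. But going through the numbers one by one, I can't keep them in my head. Reading the data and plotting it is the only way to make it intuitive.

This post reads the txt log written by train_quick.sh in the cifar10 example.

The command that writes the output to a txt file looks something like this:

$ sh examples/mnist/train_lenet.sh 2>&1 | tee examples/mnist/filename.txt | less

My txt looks like this: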

I0504 16:10:30.715772 28960 solver.cpp:464] Iteration 900, lr = 0.001

I0504 16:11:05.512084 28960 solver.cpp:266] Iteration 1000, Testing net (#0)

I0504 16:11:15.169495 28960 solver.cpp:315] Test net output #0: accuracy = 0.6329

I0504 16:11:15.169548 28960 solver.cpp:315] Test net output #1: loss = 1.05425 (* 1 = 1.05425 loss)

I0504 16:11:15.310549 28960 solver.cpp:189] Iteration 1000, loss = 0.943602

I0504 16:11:15.310600 28960 solver.cpp:204] Train net output #0: loss = 0.943602 (* 1 = 0.943602 loss)

I0504 16:11:15.310612 28960 solver.cpp:464] Iteration 1000, lr = 0.001

I0504 16:11:52.422785 28960 solver.cpp:189] Iteration 1100, loss = 0.987194

I0504 16:11:52.422874 28960 solver.cpp:204] Train net output #0: loss = 0.987194 (* 1 = 0.987194 loss)

I0504 16:11:52.422888 28960 solver.cpp:464] Iteration 1100, lr = 0.001

I0504 16:12:30.034868 28960 solver.cpp:189] Iteration 1200, loss = 0.992985

I0504 16:12:30.034958 28960 solver.cpp:204] Train net output #0: loss = 0.992985 (* 1 = 0.992985 loss)

I0504 16:12:30.034971 28960 solver.cpp:464] Iteration 1200, lr = 0.001

I0504 16:13:08.997802 28960 solver.cpp:189] Iteration 1300, loss = 0.866693

I0504 16:13:08.997913 28960 solver.cpp:204] Train net output #0: loss = 0.866693 (* 1 = 0.866693 loss)

I0504 16:13:08.997926 28960 solver.cpp:464] Iteration 1300, lr = 0.001

I0504 16:13:49.289508 28960 solver.cpp:189] Iteration 1400, loss = 0.89818

I0504 16:13:49.289584 28960 solver.cpp:204] Train net output #0: loss = 0.89818 (* 1 = 0.89818 loss)

I0504 16:13:49.289597 28960 solver.cpp:464] Iteration 1400, lr = 0.001

I0504 16:14:30.803120 28960 solver.cpp:266] Iteration 1500, Testing net (#0)

I0504 16:14:42.165652 28960 solver.cpp:315] Test net output #0: accuracy = 0.6668

I0504 16:14:42.165700 28960 solver.cpp:315] Test net output #1: loss = 0.974285 (* 1 = 0.974285 loss)

I0504 16:14:42.337384 28960 solver.cpp:189] Iteration 1500, loss = 0.836957

I0504 16:14:42.337427 28960 solver.cpp:204] Train net output #0: loss = 0.836957 (* 1 = 0.836957 loss)

I0504 16:14:42.337445 28960 solver.cpp:464] Iteration 1500, lr = 0.001

I0504 16:15:25.932066 28960 solver.cpp:189] Iteration 1600, loss = 0.874446

I0504 16:15:25.932142 28960 solver.cpp:204] Train net output #0: loss = 0.874446 (* 1 = 0.874446 loss)

I0504 16:15:25.932154 28960 solver.cpp:464] Iteration 1600, lr = 0.001

I0504 16:16:09.884897 28960 solver.cpp:189] Iteration 1700, loss = 0.853356

I0504 16:16:09.885004 28960 solver.cpp:204] Train net output #0: loss = 0.853356 (* 1 = 0.853356 loss)

I0504 16:16:09.885017 28960 solver.cpp:464] Iteration 1700, lr = 0.001

I0504 16:16:54.762683 28960 solver.cpp:189] Iteration 1800, loss = 0.746637

I0504 16:16:54.762750 28960 solver.cpp:204] Train net output #0: loss = 0.746637 (* 1 = 0.746637 loss)

I0504 16:16:54.762763 28960 solver.cpp:464] Iteration 1800, lr = 0.001

I0504 16:17:39.067754 28960 solver.cpp:189] Iteration 1900, loss = 0.834299

I0504 16:17:39.067828 28960 solver.cpp:204] Train net output #0: loss = 0.834299 (* 1 = 0.834299 loss)

I0504 16:17:39.067841 28960 solver.cpp:464] Iteration 1900, lr = 0.001

I0504 16:18:23.519376 28960 solver.cpp:266] Iteration 2000, Testing net (#0)

I0504 16:18:35.585728 28960 solver.cpp:315] Test net output #0: accuracy = 0.6852

I0504 16:18:35.585774 28960 solver.cpp:315] Test net output #1: loss = 0.920548 (* 1 = 0.920548 loss)

I0504 16:18:35.765875 28960 solver.cpp:189] Iteration 2000, loss = 0.743887

I0504 16:18:35.765923 28960 solver.cpp:204] Train net output #0: loss = 0.743887 (* 1 = 0.743887 loss)

I0504 16:18:35.765940 28960 solver.cpp:464] Iteration 2000, lr = 0.001

I0504 16:19:21.532541 28960 solver.cpp:189] Iteration 2100, loss = 0.866103

I0504 16:19:21.532645 28960 solver.cpp:204] Train net output #0: loss = 0.866103 (* 1 = 0.866103 loss)

I0504 16:19:21.532667 28960 solver.cpp:464] Iteration 2100, lr = 0.001

I0504 16:20:04.737540 28960 solver.cpp:189] Iteration 2200, loss = 0.797212

I0504 16:20:04.737614 28960 solver.cpp:204] Train net output #0: loss = 0.797212 (* 1 = 0.797212 loss)

I0504 16:20:04.737627 28960 solver.cpp:464] Iteration 2200, lr = 0.001

I0504 16:20:40.654320 28960 solver.cpp:189] Iteration 2300, loss = 0.659485

I0504 16:20:40.654386 28960 solver.cpp:204] Train net output #0: loss = 0.659485 (* 1 = 0.659485 loss)

I0504 16:20:40.654399 28960 solver.cpp:464] Iteration 2300, lr = 0.001

I0504 16:21:16.076812 28960 solver.cpp:189] Iteration 2400, loss = 0.811878

I0504 16:21:16.076882 28960 solver.cpp:204] Train net output #0: loss = 0.811878 (* 1 = 0.811878 loss)

I0504 16:21:16.076894 28960 solver.cpp:464] Iteration 2400, lr = 0.001

I0504 16:21:50.666992 28960 solver.cpp:266] Iteration 2500, Testing net (#0)

I0504 16:21:59.957159 28960 solver.cpp:315] Test net output #0: accuracy = 0.6938

I0504 16:21:59.957201 28960 solver.cpp:315] Test net output #1: loss = 0.89744 (* 1 = 0.89744 loss)

I0504 16:22:00.098528 28960 solver.cpp:189] Iteration 2500, loss = 0.707217

I0504 16:22:00.098577 28960 solver.cpp:204] Train net output #0: loss = 0.707217 (* 1 = 0.707217 loss)

I0504 16:22:00.098589 28960 solver.cpp:464] Iteration 2500, lr = 0.001

I0504 16:22:35.216800 28960 solver.cpp:189] Iteration 2600, loss = 0.83809

I0504 16:22:35.216871 28960 solver.cpp:204] Train net output #0: loss = 0.83809 (* 1 = 0.83809 loss)

I0504 16:22:35.216883 28960 solver.cpp:464] Iteration 2600, lr = 0.001

I0504 16:23:10.688284 28960 solver.cpp:189] Iteration 2700, loss = 0.771752

I0504 16:23:10.688392 28960 solver.cpp:204] Train net output #0: loss = 0.771752 (* 1 = 0.771752 loss)

I0504 16:23:10.688405 28960 solver.cpp:464] Iteration 2700, lr = 0.001

I0504 16:23:45.953291 28960 solver.cpp:189] Iteration 2800, loss = 0.660784

I0504 16:23:45.953361 28960 solver.cpp:204] Train net output #0: loss = 0.660784 (* 1 = 0.660784 loss)

I0504 16:23:45.953374 28960 solver.cpp:464] Iteration 2800, lr = 0.001

The Matlab code is as follows:

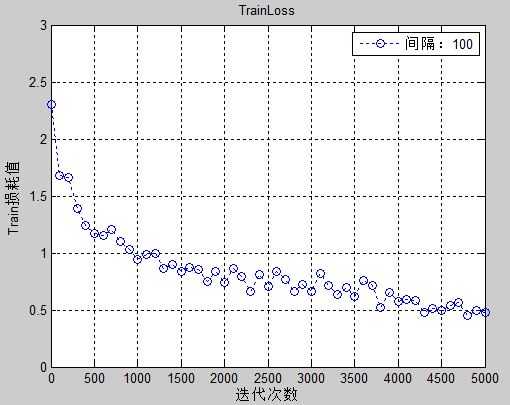

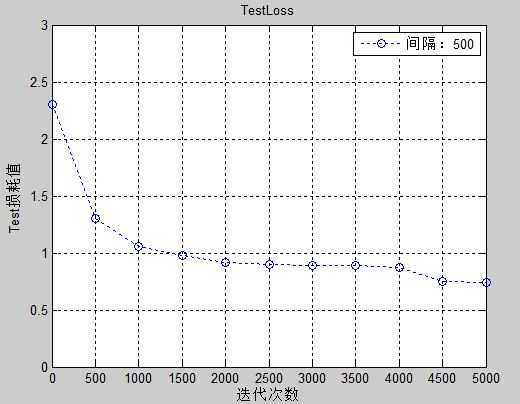

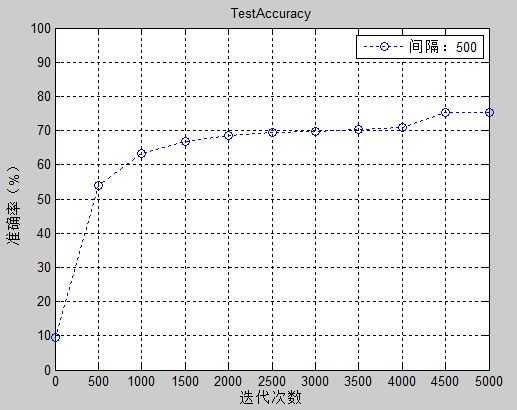

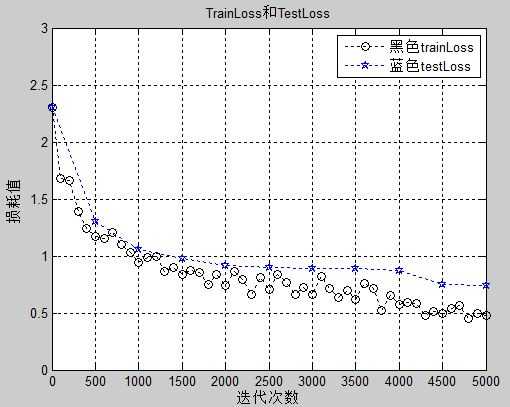

    % This program reads the data from the txt log written by caffe and plots it
    clear; close all; clc;
    %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
    fileName = 'record20150504.txt'; % file name
    logDir = 'C:\Users\Dy\Desktop\'; % directory (renamed from "path", which shadows a built-in)

    stepSizeTestLoss = 500;     % iterations between TestLoss values
    stepSizeTrainLoss = 100;    % iterations between TrainLoss values
    stepSizeTestAccuracy = 500; % iterations between TestAccuracy values

    maxIteration = 5000; % maximum number of iterations

    % The cifar10 example saves a snapshot at iteration 4000, then changes the
    % learning rate and resumes training, so some values are repeated and
    % have to be dropped before plotting.
    % The resumed run repeats the value at iteration 4000:
    % 4000/500 = 8, plus 1 for the value at iteration 0, = 9, so entry 10 is the repeat
    ignoreTestLoss = [10];
    % After the snapshot at 4000 the resumed run starts with a TrainLoss, which repeats the earlier one
    % 4000/100 = 40, plus 1 for the value at iteration 0, = 41, so entry 42 is the repeat
    ignoreTrainLoss = [42];
    % After the snapshot at 4000 the resumed run starts with an Accuracy, which repeats the earlier one
    % 4000/500 = 8, plus 1 for the value at iteration 0, = 9, so entry 10 is the repeat
    ignoreTestAccuracy = [10];
    %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

    fullPath = fullfile(logDir, fileName);

    % The values vary in length, so they are stored in cells rather than arrays
    trainLoss = {};
    testLoss = {};
    testAccuracy = {};
    countTestLoss = 1;     % next free slot for TestLoss
    countTrainLoss = 1;    % next free slot for TrainLoss
    countTestAccuracy = 1; % next free slot for TestAccuracy
    startTestLoss = 0;     % first iteration of the TestLoss data
    startTrainLoss = 0;    % first iteration of the TrainLoss data
    startTestAccuracy = 0; % first iteration of the TestAccuracy data

    fid = fopen(fullPath, 'r'); % open the txt file read-only
    while ~feof(fid) % stop at end of file
        tempLine = fgetl(fid); % read one line
        if ~ischar(tempLine) % fgetl returns -1 when nothing is left
            break;
        end
        % Does this line contain 'loss = '?
        locatLoss = strfind(tempLine, 'loss = ');
        if ~isempty(locatLoss) % the line has a loss
            % The loss values vary in length, and so do the different loss lines,
            % so the priority here is capturing every loss, not running fast.
            % There are three kinds of line: 'Test net output #1:', 'Iteration'
            % and 'Train net output #0:'.
            % 'Iteration' and 'Train net output #0' carry the same value, but the
            % match has to be on 'Iteration': not every 'Iteration XXX, loss = '
            % line is followed by a 'Train net output #0: loss = ' line. The one
            % at iteration 4000, just before snapshotting, is missing. It is
            % recomputed after resuming, but the loss at iteration 5000 would be lost.
            % So only the form below avoids missing data.
            % This search would probably be much easier with regular expressions,
            % but they differ between languages, I can't remember them, and I have
            % not practiced them in Matlab yet. Something to fill in later.
            locatTrainLoss = strfind(tempLine, 'Iteration ');
            if ~isempty(locatTrainLoss) % this is a train loss
                trainTempLoss = tempLine(locatLoss+7:end);
                % Left over from matching on 'Train net output #0: loss = ',
                % which was dropped because it missed data.
                % trainTempLoss = strtok(trainTempLoss);
                trainLoss(countTrainLoss) = {trainTempLoss};
                countTrainLoss = countTrainLoss + 1;
                continue; % found, skip the remaining checks
            end

            locatTestLoss = strfind(tempLine, 'Test net output #1: loss =');
            if ~isempty(locatTestLoss) % this is a test loss
                % Take everything after 'loss = ' and keep the first token,
                % so the slice cannot run past the end of a short line
                testTempLoss = strtok(tempLine(locatLoss+7:end));
                testLoss(countTestLoss) = {testTempLoss};
                countTestLoss = countTestLoss + 1;
                continue; % found, skip the remaining checks
            end
        end

        % Loss lines are the most common in the caffe output, so they are checked
        % first. Now check for accuracy.
        locatAccuracy = strfind(tempLine, 'accuracy =');
        if ~isempty(locatAccuracy)
            % The accuracy has no fixed length, hence "end"
            testTempAccuracy = tempLine(locatAccuracy+11:end);
            testAccuracy(countTestAccuracy) = {testTempAccuracy};
            countTestAccuracy = countTestAccuracy + 1;
        end
    end
    fclose(fid);   % close the text file
    fclose('all'); % close every open file, in case something was left open

    % The counters point one past the last stored entry, and the caffe output
    % contains the repeated entries listed in the ignore variables.
    % Subtracting both gives the true number of data points.
    countTestLoss = countTestLoss - 1 - length(ignoreTestLoss);
    countTrainLoss = countTrainLoss - 1 - length(ignoreTrainLoss);
    countTestAccuracy = countTestAccuracy - 1 - length(ignoreTestAccuracy);

    % Remove the repeated entries from trainLoss
    trainLoss = deleteCell(trainLoss, ignoreTrainLoss);
    stepTrainLoss = startTrainLoss:stepSizeTrainLoss:maxIteration;
    tempTrainLoss = str2double(trainLoss(:)); % cell of strings -> column of doubles
    figure;
    plot(stepTrainLoss, tempTrainLoss, ':bo');
    axis([0 maxIteration 0 3]); % axis([xmin xmax ymin ymax]) sets the axis limits
    xlabel('Iterations'); ylabel('Train loss');
    title('TrainLoss');
    legend('interval: 100');
    grid on; % add (dashed) grid lines
    set(gcf, 'NumberTitle', 'off');
    set(gcf, 'Name', 'TrainLoss');

    % Remove the repeated entries from testLoss
    testLoss = deleteCell(testLoss, ignoreTestLoss);
    stepTestLoss = startTestLoss:stepSizeTestLoss:maxIteration;
    tempTestLoss = str2double(testLoss(:)); % cell of strings -> column of doubles
    figure;
    plot(stepTestLoss, tempTestLoss, ':bo');
    axis([0 maxIteration 0 3]);
    xlabel('Iterations'); ylabel('Test loss');
    title('TestLoss');
    legend('interval: 500');
    grid on;
    set(gcf, 'NumberTitle', 'off');
    set(gcf, 'Name', 'TestLoss');

    % Remove the repeated entries from testAccuracy
    testAccuracy = deleteCell(testAccuracy, ignoreTestAccuracy);
    stepTestAccuracy = startTestAccuracy:stepSizeTestAccuracy:maxIteration;
    tempTestAccuracy = str2double(testAccuracy(:)) * 100; % as a percentage
    figure;
    plot(stepTestAccuracy, tempTestAccuracy, ':bo');
    axis([0 maxIteration 0 100]);
    xlabel('Iterations'); ylabel('Accuracy (%)');
    title('TestAccuracy');
    legend('interval: 500');
    grid on;
    set(gcf, 'NumberTitle', 'off');
    set(gcf, 'Name', 'TestAccuracy');

    % Show trainLoss and testLoss together in one figure
    figure;
    plot(stepTrainLoss, tempTrainLoss, ':ko');
    hold on;
    plot(stepTestLoss, tempTestLoss, ':bp');
    axis([0 maxIteration 0 3]);
    xlabel('Iterations'); ylabel('Loss');
    title('TrainLoss and TestLoss');
    legend('trainLoss (black)', 'testLoss (blue)');
    grid on;
    set(gcf, 'NumberTitle', 'off');
    set(gcf, 'Name', 'TrainLoss and TestLoss');
    hold off;
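The comments in the script mention that regular expressions would probably make the line matching easier. As a rough sketch of what that could look like (not the author's method), the snippet below uses `regexp` with the `'tokens'` and `'once'` options to pull both the iteration number and the loss out of the `Iteration N, loss = X` lines. The sample lines are copied from the log above; the variable names `logLines`, `pattern`, `iters` and `losses` are made up for this example.

```matlab
% Sample lines copied from the log shown earlier in this post
logLines = {
    'I0504 16:11:15.169495 28960 solver.cpp:315] Test net output #0: accuracy = 0.6329'
    'I0504 16:11:15.310549 28960 solver.cpp:189] Iteration 1000, loss = 0.943602'
    'I0504 16:11:15.310600 28960 solver.cpp:204] Train net output #0: loss = 0.943602 (* 1 = 0.943602 loss)'
    'I0504 16:11:52.422785 28960 solver.cpp:189] Iteration 1100, loss = 0.987194'
};

% Token 1 = iteration number, token 2 = the value after "loss = "
pattern = 'Iteration (\d+), loss = ([\d.eE+-]+)';

iters = [];
losses = [];
for k = 1:numel(logLines)
    % With 'tokens' and 'once', regexp returns a cell of the captured
    % strings for the first match, or an empty cell if nothing matches
    tok = regexp(logLines{k}, pattern, 'tokens', 'once');
    if ~isempty(tok)
        iters(end+1)  = str2double(tok{1});
        losses(end+1) = str2double(tok{2});
    end
end

disp([iters; losses]);
```

Keeping the iteration number next to each value means the x axis can come from the log itself instead of from assumed step sizes.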

The function deleteCell:

    function cellOutput = deleteCell(cellInput, delElement)
    % Caffe saves a snapshot when the learning rate is changed.
    % After the snapshot the log contains repeated values, which have to be removed.
    % This function was written to go with the script above. Making a txt file
    % display this complicated is a bit much.
    % cellInput   input cell
    % delElement  array of positions in cellInput to delete, assumed to be
    %             sorted in ascending order with no repeats
    % cellOutput  the cell after deletion

    % The code below is pretty crude. Deleting the elements of one array at the
    % positions listed in another feels like a classic basic problem, but it
    % still took me a while to work out.
    % A search on Baidu did not turn up a classic solution either, so this will do.
    cellTotal = numel(cellInput);
    delTotal = numel(delElement);
    delNum = 1; % index of the next position in delElement to skip

    cellOutput = cell(1, 0);
    for j = 1:cellTotal % j walks through cellInput
        if delNum <= delTotal && j == delElement(delNum)
            % Position j is marked for deletion: skip it and move on to the
            % next entry of delElement (this also handles adjacent positions)
            delNum = delNum + 1;
        else
            cellOutput(end+1) = cellInput(j);
        end
    end
    end
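For what it's worth, the "classic" way to do this in Matlab is probably indexed deletion: assigning `[]` to the chosen positions removes them in one step. Below is a minimal sketch with made-up values; `values`, `ignoreIdx` and `cleaned` are names invented for this example, and the guard against out-of-range positions is an added assumption, not something from the original function.

```matlab
% Made-up values for illustration; entry 4 repeats entry 3
values = {'2.30', '1.90', '1.50', '1.50', '1.20'};
ignoreIdx = [4]; % positions to delete

% Drop positions beyond the end of the cell, since deleting them would error
ignoreIdx = ignoreIdx(ignoreIdx <= numel(values));

cleaned = values;
cleaned(ignoreIdx) = []; % indexed deletion removes the listed positions

disp(cleaned);
```

This does not need the positions to be sorted, and it works the same way on ordinary numeric arrays.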

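The output is shown in the figures (the script produces four: TrainLoss, TestLoss, TestAccuracy, and TrainLoss together with TestLoss).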

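The output differs between Caffe versions, but with this as a reference you should be able to write your own. This code took me a full day. Once you can read the txt line by line, extracting the information is actually fairly simple. I realized that writing code like this does not mean much: I learned a few more Matlab functions, but unless I use Matlab to write actual algorithms it does not improve my programming, even if it helps a little with studying. The better route is to modify the Caffe source directly. My version of Caffe only outputs train loss, test loss and test accuracy. As reader 无可取代 pointed out, not being able to see train accuracy is a shortcoming, but that will have to wait until I change the code. A day spent programming is at least not a day wasted :)

This also shows how important visualization is.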
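Since the output differs between versions, the hard-coded positions of the repeated entries (10, 42, 10) only fit this one log. One possible alternative, assuming the iteration number is stored alongside each value, is to drop repeats by iteration with `unique`. The numbers below are hypothetical and only illustrate the idea.

```matlab
% Hypothetical (iteration, loss) pairs; iteration 4000 appears twice,
% as it would after resuming from a snapshot
iters  = [3800 3900 4000 4000 4100];
losses = [0.61 0.58 0.57 0.57 0.55];

% 'stable' keeps the original order; keepIdx holds the position of the
% first occurrence of each iteration number
[uniqueIters, keepIdx] = unique(iters, 'stable');
uniqueLosses = losses(keepIdx);

disp([uniqueIters; uniqueLosses]);
```

With this approach the ignore lists would no longer need to be worked out by hand for each log.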

Reading the information in the txt output of cifar10 train_quick.sh with Matlab

Original post: http://www.cnblogs.com/yymn/p/4569786.html